1.1 Supervised learning

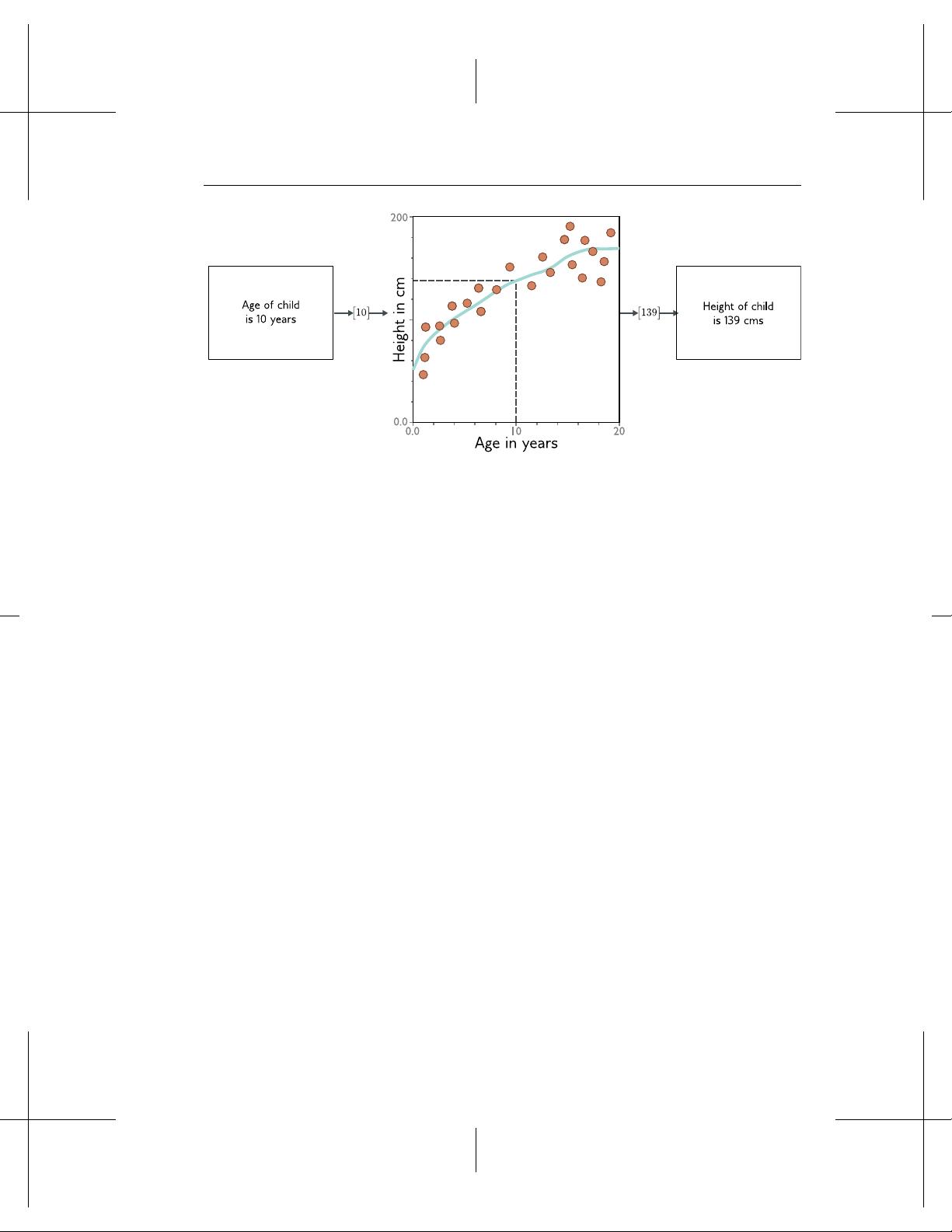

Consider a model to predict the height of a child from their age (figure 1.3). The machine

learning model is a mathematical equation that describes how the average height varies

as a function of age (cyan curve in figure 1.3). When we run the age through this

equation, it returns the height. For example, if the age is 10 years, then we predict that

the height will be 139 cm.

More precisely, the model represents a family of equations mapping the input to

the output (i.e., a family of different cyan curves). The particular equation (curve) is

chosen using training data (examples of input/output pairs). In figure 1.3, these pairs

are represented by the orange points and we can see that the model (cyan line) describes

this data reasonably well. When we talk about training or fitting a model, we mean that we

search through the family of possible equations (possible cyan curves) relating input to

output to find the one that describes the training data most accurately.
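
The idea of searching a family of equations can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the model behind figure 1.3: the family is assumed to be straight lines with a hypothetical intercept `phi0` and slope `phi1`, the training pairs are made-up numbers, and "most accurately" is assumed to mean lowest mean squared error.

```python
# Hypothetical training data: (age in years, height in cm) pairs.
# These numbers are made up for illustration; they are not read from figure 1.3.
ages = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
heights = [86.0, 102.0, 115.0, 128.0, 139.0, 149.0]


def model(age, phi0, phi1):
    """One member of the family: a straight line with intercept phi0 and slope phi1."""
    return phi0 + phi1 * age


def mean_squared_error(phi0, phi1, xs, ys):
    """Measure how poorly the line (phi0, phi1) describes the training pairs."""
    return sum((model(x, phi0, phi1) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(xs, ys):
    """Choose the family member with the lowest squared error (closed-form least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    phi1 = cov_xy / var_x
    phi0 = mean_y - phi1 * mean_x
    return phi0, phi1


# Training: select one particular equation from the family.
phi0, phi1 = fit(ages, heights)

# Prediction: run an age through the chosen equation to get a height.
predicted_height = model(10.0, phi0, phi1)
```

Changing `phi0` or `phi1` moves to a different member of the family. Any other choice of the two values gives a larger error on these pairs, which is what it means for the fitted line to describe the training data most accurately within this assumed family.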

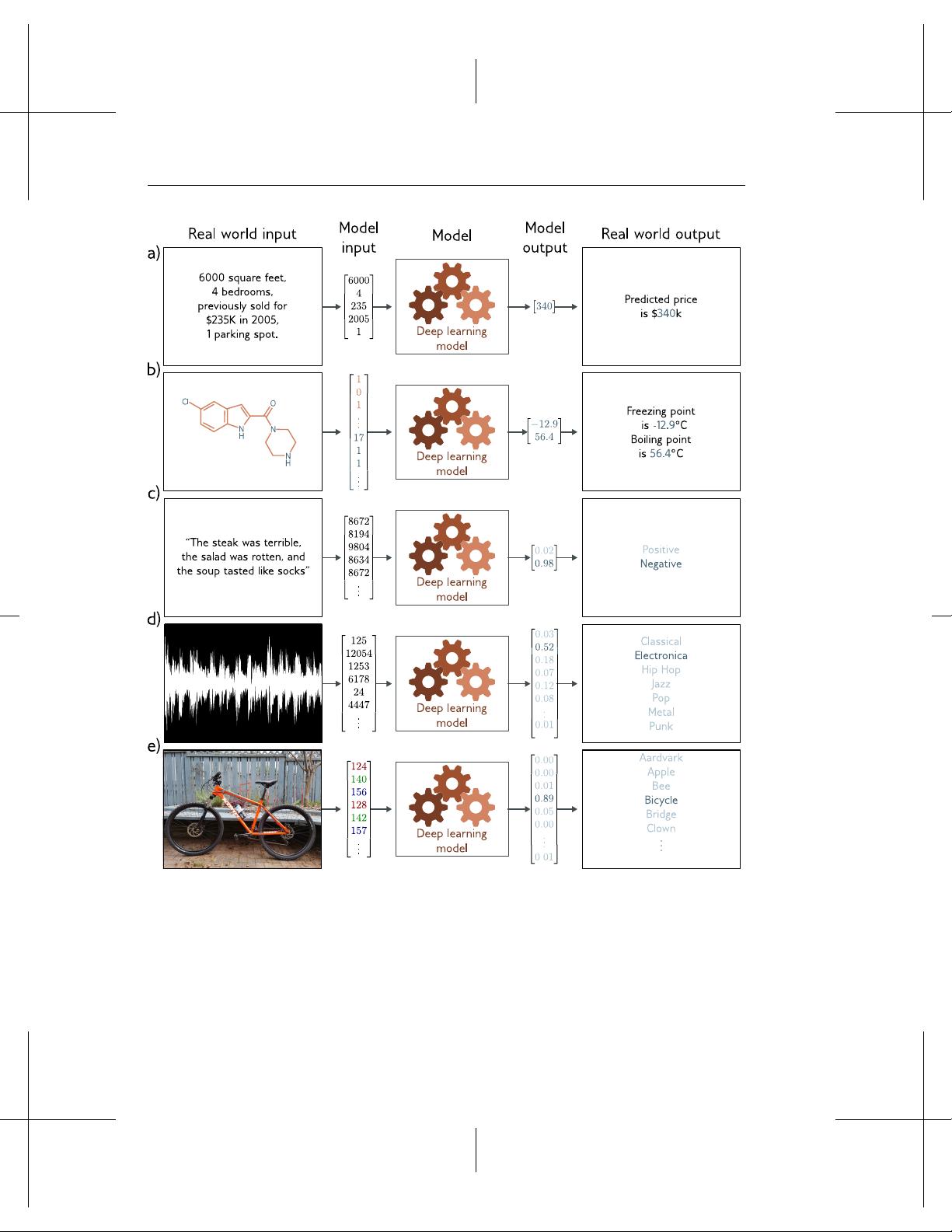

It follows that the models in figure 1.2 require labeled input/output pairs for training.

For example, the music classification model would require a large number of audio clips

where the genre of each had been identified by a human expert. These input/output

pairs take the role of a teacher or supervisor for the training process and this gives rise

to the term supervised learning.

1.1.4 Deep neural networks

This book concerns deep neural networks, which are a particularly useful type of machine

learning model. They are equations that can represent an extremely broad family of

relationships between input and output, and for which it is particularly easy to search

through this family to find the relationship that describes the training data.

Deep neural networks can process inputs that are very large, of variable length,

and contain various kinds of internal structure. They can output single numbers (for

univariate regression), multiple numbers (for multivariate regression), or probabilities

over two or more classes (for binary and multi-class classification, respectively). As we

shall see in the next section, their outputs may also be very large, of variable length,

and contain internal structure. It is probably hard to imagine equations with these

properties; the reader should endeavor to suspend disbelief for now.
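
To make the different output types concrete, the sketch below shows one common way to turn raw numbers into each kind of output. It rests on assumptions not stated in this section: the raw values are arbitrary stand-ins for whatever a network would compute, and the sigmoid and softmax functions are one conventional choice for producing probabilities.

```python
import math


def sigmoid(z):
    """Map one real number to a probability in (0, 1), as used for two classes."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(zs):
    """Map several real numbers to probabilities that sum to one (multi-class)."""
    shift = max(zs)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(z - shift) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw model outputs; the values are arbitrary.
raw_single = 0.3
raw_multiple = [1.2, -0.7, 0.1]

univariate_regression = raw_single        # a single number, used directly
multivariate_regression = raw_multiple    # several numbers, used directly
binary_class_probs = [1.0 - sigmoid(raw_single), sigmoid(raw_single)]
multiclass_probs = softmax(raw_multiple)  # one probability per class
```

In each case the raw numbers come from the same kind of computation. Only the final step differs, depending on whether the task calls for real-valued predictions or for probabilities over classes.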

1.1.5 Structured outputs

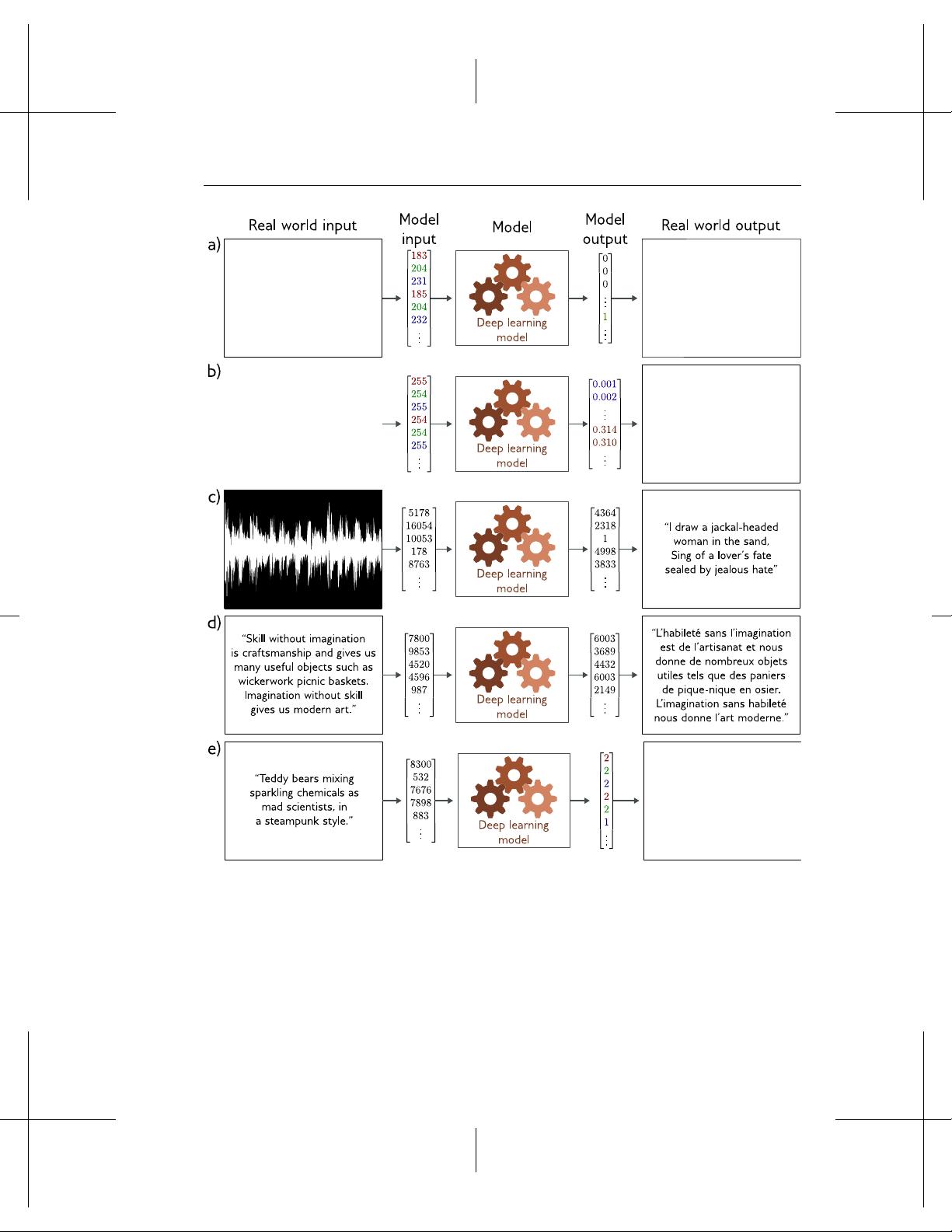

Figure 1.4a depicts a multivariate binary classification model for semantic segmentation.

Here, every pixel of an input image is assigned a binary label that indicates whether it

belongs to a cow or the background. Figure 1.4b shows a multivariate regression model

where the input is an image of a street scene and the output is the depth at each pixel.

In both cases, the output is high-dimensional and structured. However, this structure is

closely tied to the input and this can be exploited; if a pixel is labeled as “cow”, then a

neighbor with a similar RGB value probably has the same label.
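
The shape of such an output can be sketched as follows. This is only an illustration under loud assumptions: the tiny 2x3 "image" is made up, and `toy_cow_probability` is a hypothetical hand-written rule standing in for a trained model. The point is that the output holds one binary label per input pixel, and that pixels with similar RGB values receive the same label.

```python
# Hypothetical 2x3 image: each pixel is an (R, G, B) triple. Values are made up.
image = [
    [(200, 180, 150), (198, 179, 152), (30, 120, 40)],
    [(201, 182, 149), (35, 118, 42), (32, 121, 39)],
]


def toy_cow_probability(pixel):
    """Stand-in for a trained model: pixels with a high red value score as 'cow'."""
    r, g, b = pixel
    return 0.9 if r > 128 else 0.1


def segment(img, threshold=0.5):
    """Return one binary label per pixel (1 = cow, 0 = background)."""
    return [
        [1 if toy_cow_probability(pixel) > threshold else 0 for pixel in row]
        for row in img
    ]


labels = segment(image)
# labels == [[1, 1, 0], [1, 0, 0]]: same height and width as the input image.
```

A depth model such as the one in figure 1.4b would have the same output layout, with a real-valued depth in place of each binary label.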

Figures 1.4c-e depict three models where the output has a complex structure that is

not so closely tied to the input. Figure 1.4c shows a model where the input is an audio
