This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination.

SHEN et al.: MOT BY SUBMODULAR OPTIMIZATION 3

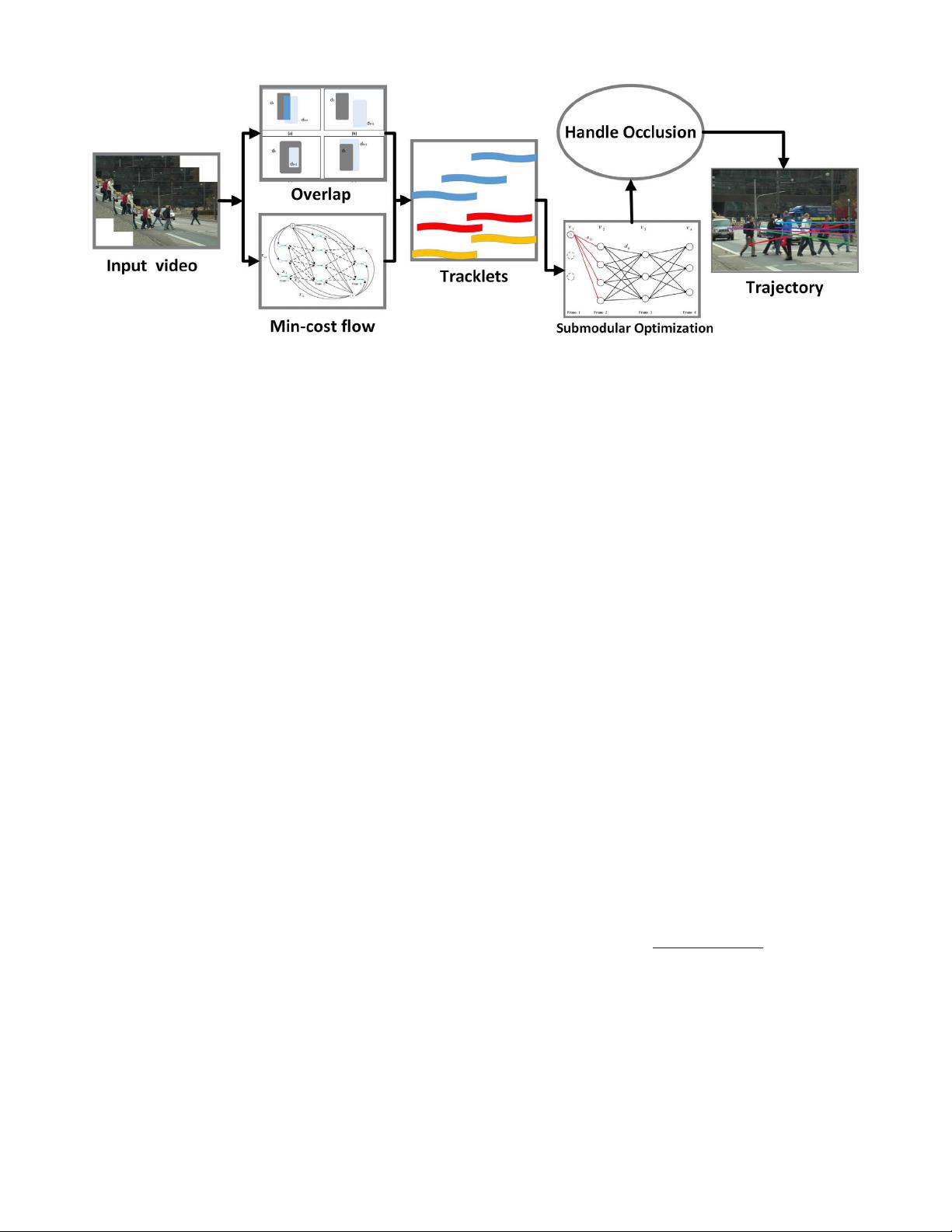

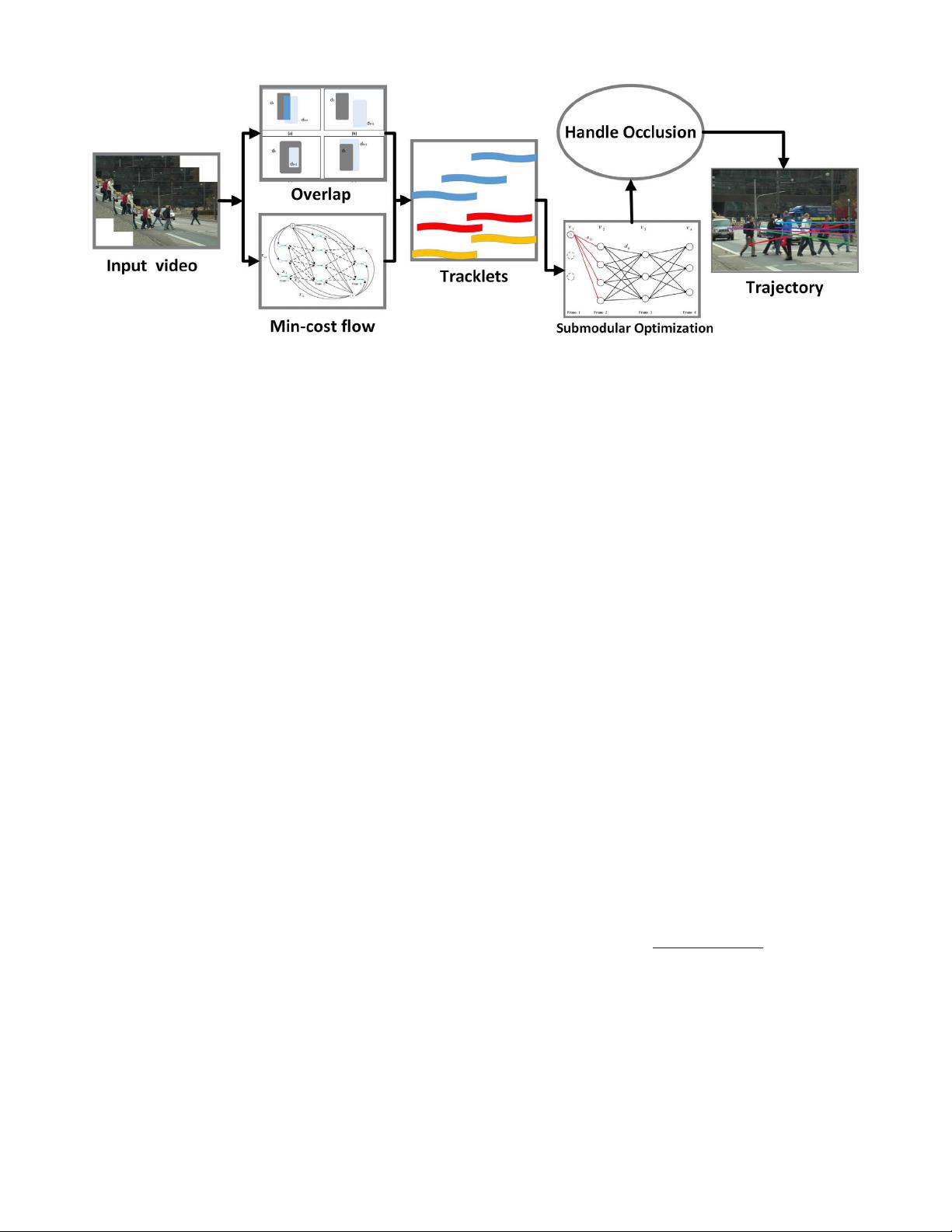

Fig. 2. Flowchart of our MOT algorithm. First, we generate tracklets by overlap criteria and min-cost flow, respectively. Then, we formulate the MOT

problem as the maximization of a submodular optimization problem, where handling occlusion is also incorporated into our framework.

Zamir et al. [14] generated the tracklets of pedestrians using a

global method. The method in [15] only relies on spatial char-

acteristics between detections, and it lacks robustness when

detections are too close with each other in space. Tracklets by

Zamir et al. [14] exploit the appearance features in a batch of

video frames. However, appearance cues are not sufficient to

discriminate multiple objects, especially for tracking objects

with similar appearances. In this paper, we first adopt intu-

itive overlap criteria on detections to obtain reliable tracklets.

Then, a network flow model is built to generate another kind

of tracklets. We incorporate these two kinds of tracklets into

our submodular optimization as a new selection process.

Another group of important related works are the submod-

ular optimization approaches for image and video processing.

Submodularity has been applied to many vision tasks, such

as video summarization [22], detection [24], object recogni-

tion [25], and clustering [26]. Liu et al. [26] presented the

entropy rate of random walk on a graph for compact and

homogeneous clustering. Jiang and Davis [24] solved a facility

location problem for salient region detection by maximizing

a submodular objective function. Submodular maximization

solves many important problems including certain constraint

satisfaction problems and maximum facility location prob-

lems. In our framework, we formulate the MOT problem as a

submodular optimization problem. A submodular function is

designed to pick the correct tracklets from the candidate set

of tracklets, and these tracklets can constitute the final target

trajectory.

III. O

UR TRACKING APPROACH

The flowchart of our MOT algorithm is given in Fig. 2.

The first step detects the pedestrians in each frame. We use the

object detections provided by the MOTChallenge2015 dataset.

Next, we divide the input sequence into a number of video

segments and find the low-level tracklets, where each video

segment contains ten frames. Two strategies are used to create

the low-level tracklets. Then the low-level tracklets in differ-

ent segments are used as the input to generate trajectories.

We propose a data association model that can be solved by

a submodular function to connect the low-level tracklets and

generate the final trajectories. The final step is to optimize our

trajectory result and solve the occlusion problem.

A. Finding Low-Level Tracklets

In this section, we describe how to get the low-level track-

lets. We divide an input video into K segments of ten frames

each. Then we adopt two data association methods to find

tracklets and then incorporate these tracklets into our sub-

modular optimization framework. Tracklets have been used as

reliable inputs in many previous works which can reduce the

computational complexity and noise. In this paper, we incor-

porate appearance and motion similarity between different

tracklets into our cost function.

1) Overlap Criteria Method: An intuitive overlap criterion

is used in this paper to connect bounding boxes between con-

secutive frames according to their overlap ratio just like [15].

Fig. 3 shows the overlap of two detections d

t

and d

t+1

from

consecutive frames t and t + 1, respectively. There are four

situations including partial overlap and complete overlap for

these two detections. Detections d

t

and d

t+1

have an over-

lapped part in Fig. 3(a), and they do not have overlaps in

Fig. 3(b). Fig. 3(c) and (d) shows that one detection contains

another one. In general, we calculate the value of an overlap

region as follows:

V

overlap

=

S

share

S

t

+ S

t+1

− S

share

(1)

where S

share

means the common area of two detections and S

t

and S

t+1

represent the areas of detections d

t

and d

t+1

, respec-

tively. And the value of V

overlap

measures the percentage of

the common area of corresponding detections. As shown in

Fig. 3(b), when two detections d

t

and d

t+1

are apart in spa-

tial domain, V

overlap

equals to 0, which means they are little

likely to be part of the same target. Finally, we connect two

detections as a tracklet if V

overlap

> 0.6.

This method relies on the spatial characteristics between

detections in consecutive frames. If two detections which