3-Sweep: Extracting Editable Objects from a Single Photo

Tao Chen¹,²,³,∗, Zhe Zhu¹, Ariel Shamir³, Shi-Min Hu¹, Daniel Cohen-Or²

¹ TNList, Department of Computer Science and Technology, Tsinghua University

² Tel Aviv University

³ The Interdisciplinary Center

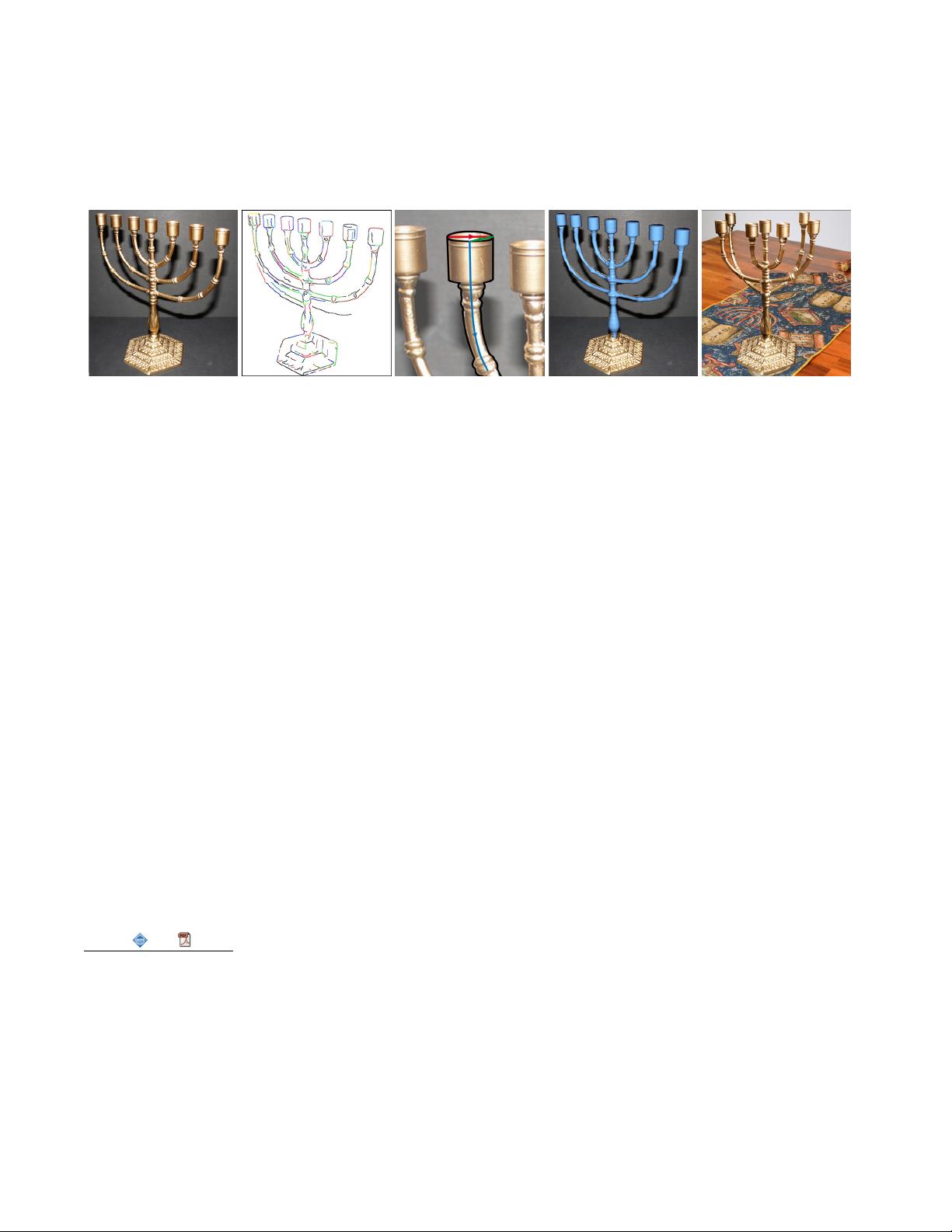

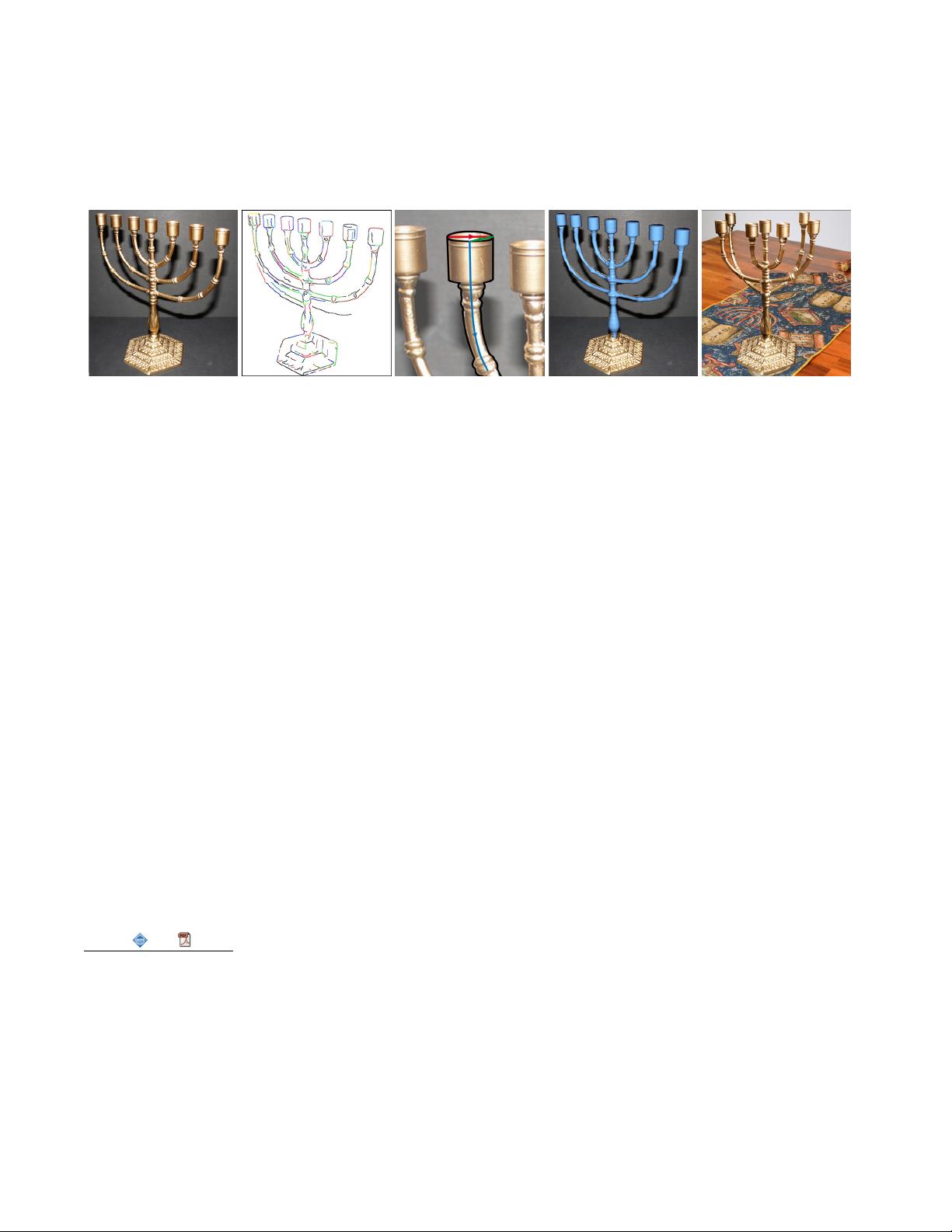

(a) (b) (c) (d) (e)

Figure 1: 3-Sweep Object Extraction. (a) Input image. (b) Extracted edges. (c) 3-Sweep modeling of one component of the object. (d) The full extracted 3D model. (e) Editing the model by rotating each arm in a different direction, and pasting onto a new background. The base of the object is transferred by alpha matting and compositing.

Abstract

We introduce an interactive technique for manipulating simple 3D shapes based on extracting them from a single photograph. Such extraction requires understanding of the components of the shape, their projections, and relations. These simple cognitive tasks for humans are particularly difficult for automatic algorithms. Thus, our approach combines the cognitive abilities of humans with the computational accuracy of the machine to solve this problem. Our technique provides the user with the means to quickly create editable 3D parts: human assistance implicitly segments a complex object into its components, and positions them in space. In our interface, three strokes are used to generate a 3D component that snaps to the shape’s outline in the photograph, where each stroke defines one dimension of the component. The computer reshapes the component to fit the image of the object in the photograph as well as to satisfy various inferred geometric constraints imposed by its global 3D structure. We show that with this intelligent interactive modeling tool, the daunting task of object extraction is made simple. Once the 3D object has been extracted, it can be quickly edited and placed back into photos or 3D scenes, permitting object-driven photo editing tasks which are impossible to perform in image-space. We show several examples and present a user study illustrating the usefulness of our technique.

CR Categories: I.3.5 [Computer Graphics]: Computational Geometry and Object Modeling - Geometric algorithms, languages, and systems

Keywords: Interactive techniques, Photo manipulation, Image processing


∗ This work was performed while Tao Chen was a postdoctoral researcher at TAU and IDC, Israel.

1 Introduction

Extracting three-dimensional objects from a single photo is still a long way from reality given the current state of technology, as it involves numerous complex tasks: the target object must be separated from its background, and its 3D pose, shape and structure should be recognized from its projection. These tasks are difficult, and even ill-posed, since they require some degree of semantic understanding of the object. To alleviate this problem, complex 3D models can be partitioned into simpler parts, but identifying object parts also requires further semantic understanding and is difficult to perform automatically. Moreover, once a 3D shape has been decomposed into parts, the relations between these parts should also be understood and maintained in the final composition.

In this paper we present an interactive technique to extract 3D man-made objects from a single photograph, leveraging the strengths of both humans and computers (see Figure 1). Human perceptual abilities are used to partition, recognize and position shape parts, using a very simple interface based on triplets of strokes, while the computer performs tasks which are computationally intensive or require accuracy. The final object model produced by our method includes its geometry and structure, as well as some of its semantics. This makes the extracted model readily available for intelligent editing that maintains the shape’s semantics.

Our approach is based on the observation that many man-made objects can be decomposed into simpler parts that can be represented by generalized cylinders, cuboids or similar primitives. The key idea of our method is to provide the user with an interactive tool to guide the creation of 3D editable primitives. The tool is based on a rather simple modeling gesture we call 3-sweep. This gesture allows the user to explicitly define the three dimensions of the primitive using three sweeps. The first two sweeps define the first and second dimension of a 2D profile and the third, longer, sweep is used to define the main curved axis of the primitive.
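To make the gesture concrete, the following is a minimal Python sketch of how three strokes could be turned into a swept primitive in image space. It is an illustration under stated assumptions, not the method's actual implementation: the function names `profile_from_strokes` and `sweep_profile`, the point format, and the choice to keep the profile size constant along the axis are all hypothetical.

```python
import math

def profile_from_strokes(p0, p1, p2):
    """Derive a 2D profile from the first two strokes (hypothetical helper).

    The first stroke p0 -> p1 spans the profile's first dimension and the
    second stroke p1 -> p2 spans its second dimension.
    """
    width = math.hypot(p1[0] - p0[0], p1[1] - p0[1])
    depth = math.hypot(p2[0] - p1[0], p2[1] - p1[1])
    center = ((p0[0] + p1[0]) / 2.0, (p0[1] + p1[1]) / 2.0)
    return center, width, depth

def sweep_profile(center, width, depth, axis_points):
    """Place a copy of the profile at every sample of the third stroke.

    The axis samples are translated so that the first one coincides with
    the profile center. Assumption: the profile size stays constant here,
    with no fitting to the image.
    """
    dx = center[0] - axis_points[0][0]
    dy = center[1] - axis_points[0][1]
    return [
        {"center": (x + dx, y + dy), "width": width, "depth": depth}
        for (x, y) in axis_points
    ]

# Example: two short strokes define the profile, a longer one the axis.
center, width, depth = profile_from_strokes((0, 0), (4, 0), (4, 1))
sections = sweep_profile(center, width, depth, [(2, 0), (2, 5), (3, 10)])
```

Each entry of `sections` describes one cross-section along the swept axis; a full system would additionally lift these sections to 3D and adapt their size to the photograph.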

As the user sweeps the primitive, the program dynamically adjusts the progressive profile by sensing the pictorial context in the photograph and automatically snapping to it. Using such 3-sweep operations, the user can model 3D parts consistent with the object in the photograph, while the computer automatically maintains global constraints linking it to other primitives comprising the object.
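As a rough illustration of what snapping a profile to the image could look like, the sketch below adjusts the half-width of one cross-section so that both of its endpoints land on pixels of a binary edge map. This is a simplified stand-in and not the snapping procedure used by the system: the function `snap_half_width`, the edge-map format (a list of rows containing 0 or 1), and the `search` range are assumptions made for the example.

```python
def snap_half_width(edge_map, center, normal, half_width, search=3):
    """Snap a cross-section's half-width to nearby image edges (hypothetical).

    Tries half-widths within +/- `search` pixels of the current value,
    closest first, and returns the first one whose two endpoints
    (center - r * normal and center + r * normal) both fall on edge pixels.
    Returns the unchanged half_width if no candidate fits.
    """
    rows, cols = len(edge_map), len(edge_map[0])

    def is_edge(x, y):
        xi, yi = int(round(x)), int(round(y))
        return 0 <= yi < rows and 0 <= xi < cols and edge_map[yi][xi] == 1

    # Offsets ordered by distance from the current half-width: 0, -1, 1, ...
    for delta in sorted(range(-search, search + 1), key=abs):
        r = half_width + delta
        if r <= 0:
            continue
        left = (center[0] - r * normal[0], center[1] - r * normal[1])
        right = (center[0] + r * normal[0], center[1] + r * normal[1])
        if is_edge(*left) and is_edge(*right):
            return r
    return half_width

# Example: a single image row with edges at x = 2 and x = 8.
row = [0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0]
snapped = snap_half_width([row], center=(5, 0), normal=(1, 0), half_width=2)
```

In this example the initial half-width of 2 misses both edges, and the search settles on 3, where the endpoints coincide with the edge pixels. Maintaining constraints between several primitives is outside the scope of this sketch.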