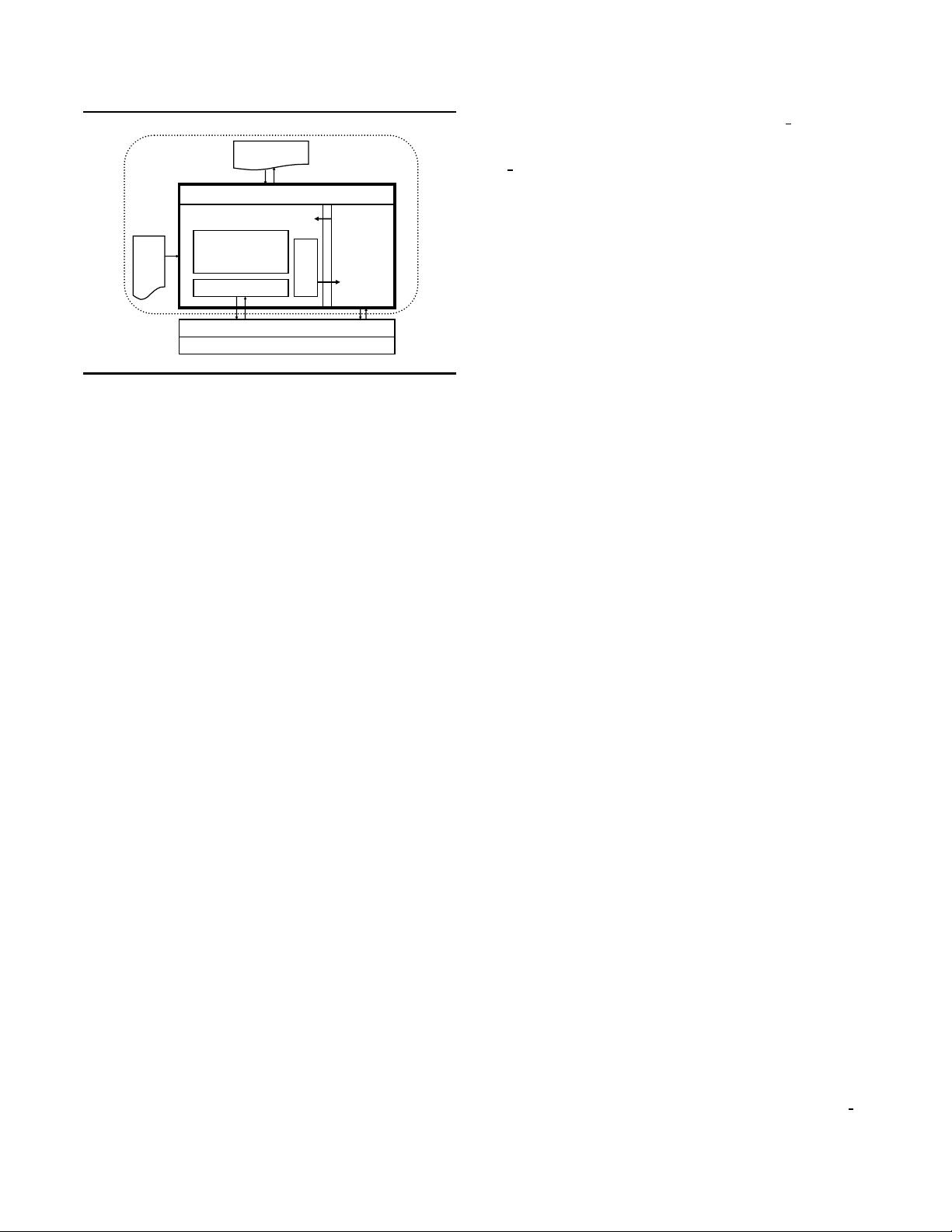

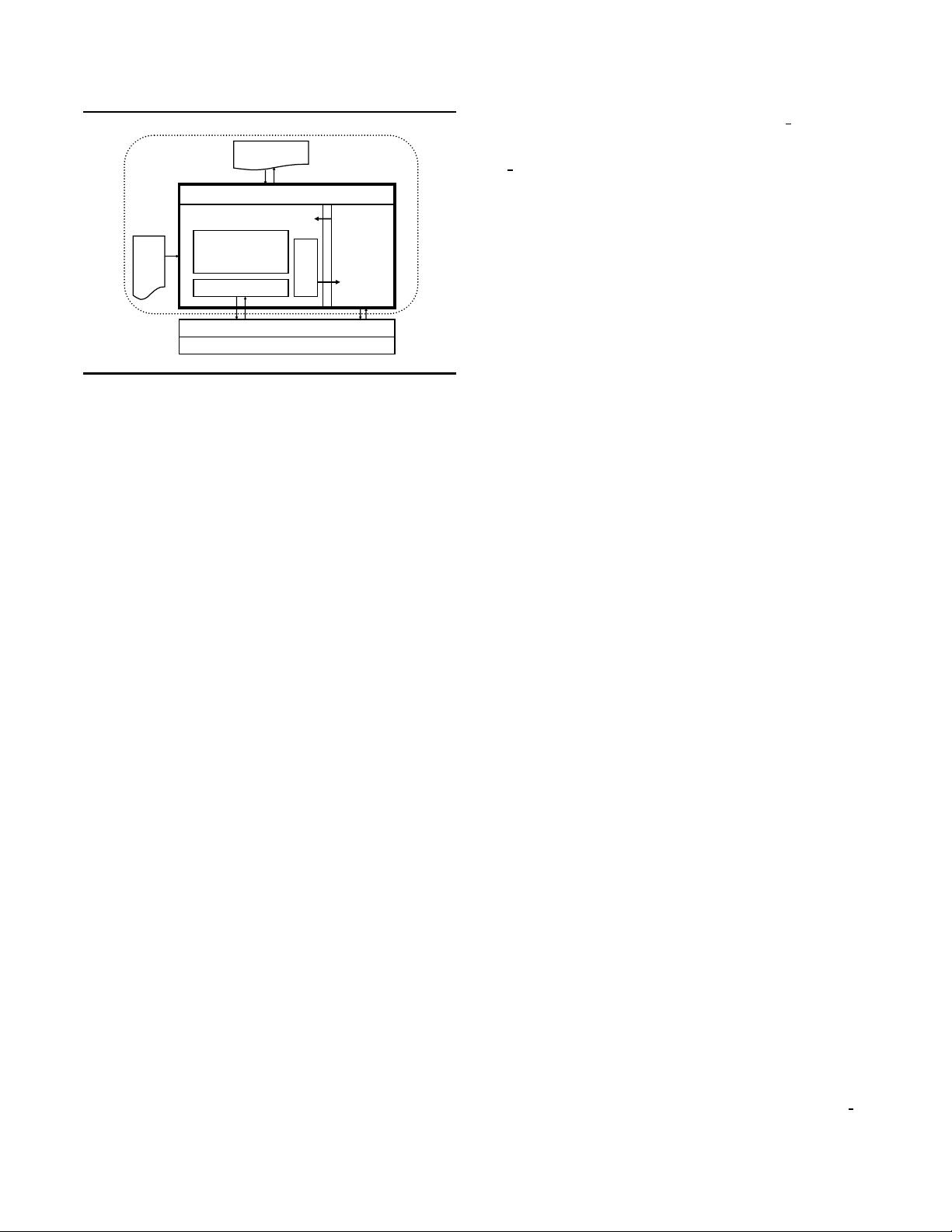

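Figure 2. Pin’s software architecture. [Diagram labels: Application, Pin, and Pintool within one Address Space; Pin contains the Instrumentation APIs, the Code Cache, and the Virtual Machine (VM) with its JIT Compiler, Emulation Unit, and Dispatcher; Operating System and Hardware lie beneath.]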

mentation API invoked by Pintools. The VM consists of a just-in-

time compiler (JIT), an emulator, and a dispatcher. After Pin gains

control of the application, the VM coordinates its components to

execute the application. The JIT compiles and instruments applica-

tion code, which is then launched by the dispatcher. The compiled

code is stored in the code cache. Entering/leaving the VM from/to

the code cache involves saving and restoring the application register

state. The emulator interprets instructions that cannot be executed

directly. It is used for system calls which require special handling

from the VM. Since Pin sits above the operating system, it can only

capture user-level code.
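
The interplay of the JIT, the code cache, and the dispatcher described above can be illustrated with a toy model. This is only a sketch under stated assumptions, not Pin's implementation: the names `make_vm`, `jit`, and `dispatch` are hypothetical, "compiling" a block just wraps a list of Python callables, and the register save/restore and the emulator are omitted.

```python
# Toy model of a VM with a JIT, a code cache, and a dispatcher.
# All names are hypothetical illustrations, not Pin's API.

def make_vm(program):
    """program maps an address to (ops, next_fn).

    ops is a list of callables that mutate the state; next_fn(state)
    returns the next application address, or None to stop.
    """
    code_cache = {}            # application address -> compiled callable
    stats = {"compiles": 0}    # how many times the JIT ran

    def jit(addr):
        ops, next_fn = program[addr]
        stats["compiles"] += 1

        def compiled(state):
            for op in ops:
                op(state)
            return next_fn(state)
        return compiled

    def dispatch(entry, state):
        addr = entry
        while addr is not None:
            if addr not in code_cache:       # compile only on a cache miss
                code_cache[addr] = jit(addr)
            addr = code_cache[addr](state)   # run code from the cache only
        return state

    return dispatch, code_cache, stats


def acc(state):
    state["sum"] += state["n"]

def dec(state):
    state["n"] -= 1

program = {
    0x1000: ([acc, dec], lambda s: 0x1000 if s["n"] > 0 else 0x2000),
    0x2000: ([], lambda s: None),
}

dispatch, code_cache, stats = make_vm(program)
final_state = dispatch(0x1000, {"n": 3, "sum": 0})
```

In this sketch the loop block at `0x1000` executes three times but is compiled once; later iterations are served from the cache, which is the property the code cache exists to provide.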

As Figure 2 shows, there are three binary programs present

when an instrumented program is running: the application, Pin, and

the Pintool. Pin is the engine that jits and instruments the applica-

tion. The Pintool contains the instrumentation and analysis routines

and is linked with a library that allows it to communicate with Pin.

While they share the same address space, they do not share any li-

braries and so there are typically three copies of glibc. By making

all of the libraries private, we avoid unwanted interaction between

Pin, the Pintool, and the application. One example of a problematic

interaction is when the application executes a glibc function that

is not reentrant. If the application starts executing the function and

then tries to execute some code that triggers further compilation, it

will enter the JIT. If the JIT executes the same glibc function, it

will enter the same procedure a second time while the application

is still executing it, causing an error. Since we have separate copies

of glibc for each component, Pin and the application do not share

any data and cannot have a re-entrancy problem. The same prob-

lem can occur when we jit the analysis code in the Pintool that

calls glibc (jitting the analysis routine allows us to greatly reduce

the overhead of simple instrumentation on Itanium).
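
The re-entrancy hazard can be shown with a small model. It is a sketch under assumptions: `ToyLibc` and `format_id` are hypothetical stand-ins for a library routine that keeps its working data in shared static storage, and the `interrupt` callback models control entering the JIT while the application is still inside the routine.

```python
class ToyLibc:
    """Hypothetical stand-in for a library with a non-reentrant routine."""

    def __init__(self):
        self._scratch = None   # models a static buffer shared by all callers

    def format_id(self, value, interrupt=None):
        self._scratch = f"id-{value}"
        if interrupt is not None:
            interrupt()        # models entering the JIT in the middle of the call
        return self._scratch   # wrong if a nested call overwrote the buffer


# One shared copy: the nested call from the "JIT" clobbers the buffer.
shared = ToyLibc()
clobbered = shared.format_id(1, interrupt=lambda: shared.format_id(2))

# Private copies: the nested call only touches the JIT's own state.
app_libc, jit_libc = ToyLibc(), ToyLibc()
isolated = app_libc.format_id(1, interrupt=lambda: jit_libc.format_id(2))
```

With the shared copy the outer call returns the inner call's data; with private copies each component sees only its own state, which is the effect of giving Pin, the Pintool, and the application separate copies of glibc.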

3.2 Injecting Pin

The injector loads Pin into the address space of an application. In-

jection uses the Unix Ptrace API to obtain control of an application

and capture the processor context. It loads the Pin binary into the

application address space and starts it running. After initializing

itself, Pin loads the Pintool into the address space and starts it run-

ning. The Pintool initializes itself and then requests that Pin start

the application. Pin creates the initial context and starts jitting the

application at the entry point (or at the current PC in the case of

attach). Using Ptrace as the mechanism for injection allows us to

attach to an already running process in the same way as a debug-

ger. It is also possible to detach from an instrumented process and

continue executing the original, uninstrumented code.

Other tools like DynamoRIO [6] rely on the LD_PRELOAD environment variable to force the dynamic loader to load a shared library in the address space. Pin’s method has three advantages. First, LD_PRELOAD does not work with statically-linked binaries, which

many of our users require. Second, loading an extra shared library

will shift all of the application shared libraries and some dynami-

cally allocated memory to a higher address when compared to an

uninstrumented execution. We attempt to preserve the original be-

havior as much as possible. Third, the instrumentation tool cannot

gain control of the application until after the shared-library loader

has partially executed, while our method is able to instrument the

very first instruction in the program. This capability actually ex-

posed a bug in the Linux shared-library loader, resulting from a

reference to uninitialized data on the stack.

3.3 The JIT Compiler

3.3.1 Basics

Pin compiles from one ISA directly into the same ISA (e.g., IA32

to IA32, ARM to ARM) without going through an intermediate

format, and the compiled code is stored in a software-based code

cache. Only code residing in the code cache is executed—the origi-

nal code is never executed. An application is compiled one trace at

a time. A trace is a straight-line sequence of instructions which terminates at one of the following conditions: (i) an unconditional control transfer (branch, call, or return), (ii) a pre-defined number of conditional control transfers, or (iii) a pre-defined number of instructions fetched in the trace. In addition to the last exit, a trace may

have multiple side-exits (the conditional control transfers). Each

exit initially branches to a stub, which re-directs the control to the

VM. The VM determines the target address (which is statically un-

known for indirect control transfers), generates a new trace for the

target if it has not been generated before, and resumes the execution

at the target trace.
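
The three termination conditions can be sketched as a small trace builder. The following is an illustration under assumptions, not Pin's code: `Insn` and `build_trace` are hypothetical names, instructions are assumed to sit at consecutive integer addresses, and the limits `max_cond` and `max_insns` are placeholder values because the text only says the numbers are pre-defined.

```python
from dataclasses import dataclass


@dataclass
class Insn:
    addr: int
    kind: str  # "plain", "cond" (conditional branch), or "uncond" (jump, call, return)


def build_trace(code, start, max_cond=3, max_insns=8):
    """Collect a straight-line trace beginning at `start`.

    code maps address -> Insn; fall-through is assumed to be addr + 1.
    Returns (trace, side_exits), where side_exits lists the addresses of
    conditional branches that are not the final exit of the trace.
    """
    trace, cond_seen, addr = [], 0, start
    while addr in code:
        insn = code[addr]
        trace.append(insn)
        if insn.kind == "uncond":        # (i) unconditional control transfer
            break
        if insn.kind == "cond":
            cond_seen += 1
            if cond_seen >= max_cond:    # (ii) limit on conditional transfers
                break
        if len(trace) >= max_insns:      # (iii) limit on instructions fetched
            break
        addr += 1
    side_exits = [i.addr for i in trace[:-1] if i.kind == "cond"]
    return trace, side_exits


kinds = ["plain", "cond", "plain", "uncond", "plain"]
code = {addr: Insn(addr, kind) for addr, kind in enumerate(kinds)}
trace, side_exits = build_trace(code, 0)
```

Here the trace covers addresses 0 through 3 and stops at the unconditional transfer; the conditional branch at address 1 becomes a side exit.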

In the rest of this section, we discuss the following features of

our JIT: trace linking, register re-allocation, and instrumentation

optimization. Our current performance effort focuses on IA32,

EM64T, and Itanium, which have all these features implemented.

While the ARM version of Pin is fully functional, some of the

optimizations are not yet implemented.

3.3.2 Trace Linking

To improve performance, Pin attempts to branch directly from a

trace exit to the target trace, bypassing the stub and VM. We

call this process trace linking. Linking a direct control transfer

is straightforward as it has a unique target. We simply patch the

branch at the end of one trace to jump to the target trace. However,

an indirect control transfer (a jump, call, or return) has multiple

possible targets and therefore needs some sort of target-prediction

mechanism.
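
Linking of direct control transfers can be modeled as follows. This is a minimal sketch with hypothetical names (`Trace`, `LinkingVM`, `enter_vm`); real linking patches a branch instruction in the code cache, whereas this model stores a reference to the target trace in the exit slot. Indirect transfers are not modeled.

```python
class Trace:
    def __init__(self, body, exit_targets):
        self.body = body                          # callable(state) -> exit index, or None to halt
        self.exit_targets = exit_targets          # one application address per exit
        self.links = [None] * len(exit_targets)   # None: exit still goes to its stub


class LinkingVM:
    def __init__(self, blocks):
        self.blocks = blocks      # address -> (body, exit_targets)
        self.cache = {}           # address -> Trace
        self.vm_entries = 0       # how often control returned to the VM

    def enter_vm(self, addr):
        """Stub path: find the target trace, compiling it if needed."""
        self.vm_entries += 1
        if addr not in self.cache:
            body, exit_targets = self.blocks[addr]
            self.cache[addr] = Trace(body, exit_targets)
        return self.cache[addr]

    def run(self, entry, state):
        trace = self.enter_vm(entry)
        while True:
            exit_no = trace.body(state)
            if exit_no is None:
                return state
            if trace.links[exit_no] is None:
                # First use of this exit: go through the VM, then patch the exit.
                trace.links[exit_no] = self.enter_vm(trace.exit_targets[exit_no])
            trace = trace.links[exit_no]          # linked: bypasses stub and VM


def loop_body(state):
    state["n"] -= 1
    return 0 if state["n"] > 0 else 1   # exit 0 loops back, exit 1 leaves


def halt_body(state):
    return None


blocks = {
    0x1000: (loop_body, [0x1000, 0x2000]),
    0x2000: (halt_body, []),
}
vm = LinkingVM(blocks)
result = vm.run(0x1000, {"n": 100})
```

In this model the loop runs 100 times, yet the VM is entered only three times: once at startup and once for the first use of each exit. Every later transfer follows a patched link.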

Figure 3(a) illustrates our indirect linking approach as imple-

mented on the x86 architecture. Pin translates the indirect jump

into a move and a direct jump. The move puts the indirect target

address into register %edx (this register as well as the %ecx and

%esi shown in Figure 3(a) are obtained via register re-allocation,

as we will discuss in Section 3.3.3). The direct jump goes to the

first predicted target address 0x40001000 (which is mapped to

0x70001000 in the code cache for this example). We compare

%edx against 0x40001000 using the lea/jecxz idiom used in Dy-

namoRIO [6], which avoids modifying the conditional flags reg-

ister eflags. If the prediction is correct (i.e. %ecx=0), we will

branch to match1 to execute the remaining code of the predicted

target. If the prediction is wrong, we will try another predicted tar-

get 0x40002000 (mapped to 0x70002000 in the code cache). If the

target is not found on the chain, we will branch to LookupHtab_1,

which searches for the target in a hash table (whose base address is
