Computers and Electrical Engineering 108 (2023) 108695

Attention mechanisms have been studied extensively in natural language processing as essential components of neural networks. In recent years, attention mechanisms have also been applied to various fields of computer vision, where they enhance performance by highlighting regions of interest in images and capturing long-range dependencies. Applications include image segmentation, image generation, and image classification. Previous studies have shown that attention mechanisms can improve object detection performance by helping to locate and recognize objects in images. In addition, a MAD unit is suggested in [29] to identify the activation of neurons in the lower and higher streams via an aggressive search. A new structure, CoupleNet, has also been suggested; it combines local and global information related to objects to improve detection performance. More recently, transformers have been used to improve the accuracy of object detection methods.
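As an illustration of how an attention mechanism relates every position to every other position, the following is a minimal NumPy sketch of scaled dot-product self-attention, the standard formulation used in transformers. It is not the implementation of any method cited above; the function names, the random weight matrices, and the sizes `n`, `d_model`, and `d_k` are assumptions chosen only for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the maximum along the axis for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """tokens: (n, d_model); w_q, w_k, w_v: (d_model, d_k).

    Returns the attended output (n, d_k) and the attention weights (n, n).
    """
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # pairwise similarities, (n, n)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

# Illustrative sizes only (assumed, not taken from the paper).
rng = np.random.default_rng(0)
n, d_model, d_k = 6, 8, 4
tokens = rng.normal(size=(n, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(tokens, w_q, w_k, w_v)
print(out.shape, weights.shape)  # (6, 4) (6, 6)
```

Row i of `weights` indicates how strongly token i attends to every other token, regardless of their distance in the sequence, which is why attention can capture long-range dependencies.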

The success of attention-based NLP models has inspired recent efforts to integrate transformers with CNNs. The Vision Transformer (ViT) is a pure transformer used to classify images. To handle a 2D image, ViT reshapes it into a sequence of flattened patches and maps each patch to a fixed number of dimensions with a trainable linear projection; the transformer uses a constant latent vector size across all its layers. The results obtained when training on large datasets show that transformers extract image features efficiently. The Video Vision Transformer (ViViT) is proposed for video classification. In this architecture, self-attention is computed over a sequence of spatiotemporal tokens extracted from the input video. Several methods are applied to factorize the model along the spatial and temporal dimensions to increase efficiency and scalability. TransUNet has been suggested to integrate the transformer encoder with the U-Net. The transformer encodes tokenized image patches taken from a CNN feature map to extract global context. The decoder then upsamples the encoded features and combines them with the CNN feature maps for precise localization. By combining CNN features and transformers, TransUNet leverages both detailed, high-resolution spatial information and global context. In various medical applications, TransUNet outperforms other competing methods [29].
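The patch-embedding step described for ViT can be sketched as follows. This is a minimal NumPy example under stated assumptions: the image size, patch size `P`, and latent size `D` are illustrative values, `patchify` is a hypothetical helper name, and the projection matrix is random rather than trained. Position embeddings and the class token used by ViT are omitted for brevity.

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W, C) image into N = (H/P) * (W/P) flattened patches.

    Returns an array of shape (N, P * P * C), with patches in row-major
    order over the patch grid.
    """
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    gh, gw = h // patch, w // patch
    x = image.reshape(gh, patch, gw, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)            # (gh, gw, P, P, C)
    return x.reshape(gh * gw, patch * patch * c)

# Illustrative sizes only (assumed, not taken from the paper).
rng = np.random.default_rng(0)
H, W, C, P, D = 32, 32, 3, 8, 16
image = rng.random((H, W, C))

patches = patchify(image, P)                  # (16, 192)

# Stand-in for the trainable linear projection (random here, learned in practice).
w_embed = rng.normal(scale=0.02, size=(P * P * C, D))
tokens = patches @ w_embed                    # (16, 16): one D-dimensional token per patch

print(patches.shape, tokens.shape)  # (16, 192) (16, 16)
```

Every token has the same size `D`, which corresponds to the constant latent vector size that the transformer keeps across its layers.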

Many U-Net-based methods for video anomaly detection take successive stacked frames as input and use motion constraints, such as an optical flow loss, to extract temporal information. This structure is limited, and the temporal information used for prediction and reconstruction is inadequate. ViViT, using different transformer variants, performs better than other models for video classification [29]. With transformers, the model encodes both the spatial and the temporal features of videos, which improves performance. Furthermore, the U-Net is widely used for detecting video anomalies, and TransUNet demonstrates the potential of integrating the U-Net with a transformer. This design inspires us to modify the transformer encoder in the proposed system to make reliable predictions on video. The model encodes spatial data, while the suggested transformer encoder encodes temporal data. The model performs better than the prediction-based baseline model without the transformer module. However, the performance remains comparatively low, which motivates a novel approach. This research intends to address the complexity of the existing approaches by proposing a transformer-based network framework for object detection (TOD-Net).

3. Methodology

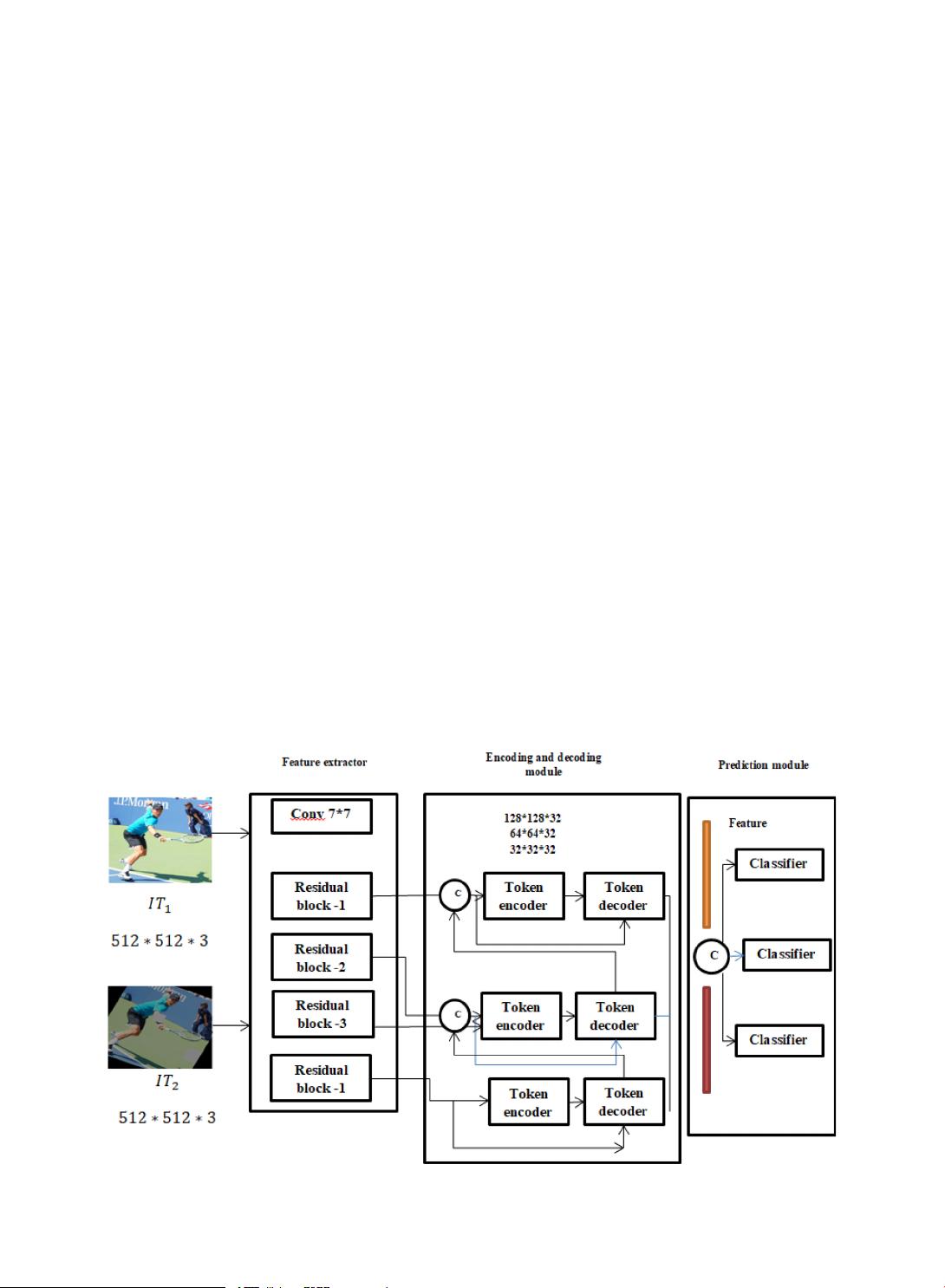

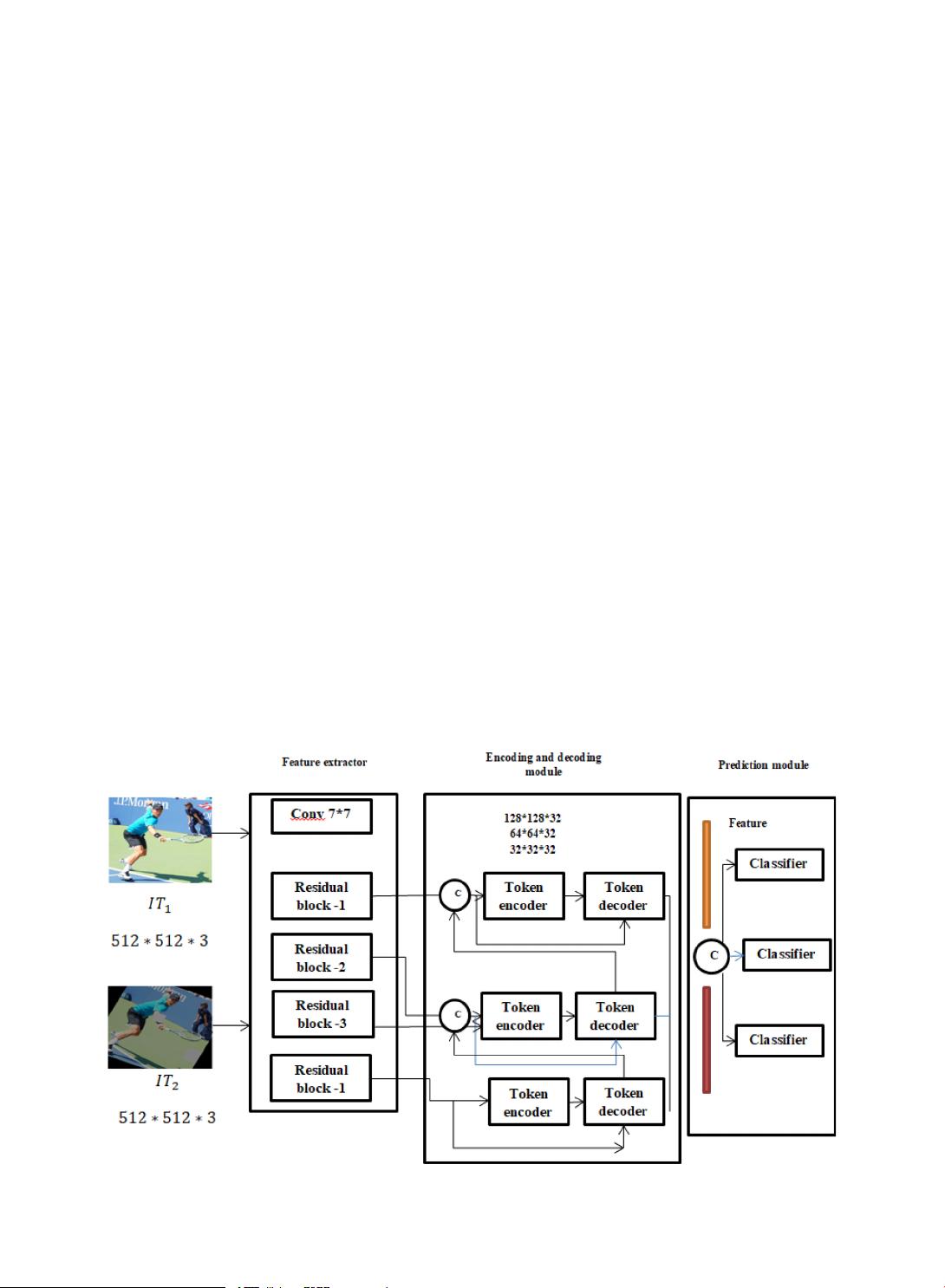

The backbone of the proposed TOD-Net is a feature extractor obtained by modifying the existing ResNet-18, namely by removing its fully connected layer. Hence, the feature extractor has a 7 × 7 convolution layer and four residual blocks

Fig. 1. Overview of TOD-Net.

M. Sirisha and S.V. Sudha