SUBMODULAR VIDEO OBJECT PROPOSAL SELECTION FOR SEMANTIC OBJECT

SEGMENTATION

Tinghuai Wang

Nokia Labs,

Nokia Technologies, Finland

ABSTRACT

Learning a data-driven spatio-temporal semantic representa-

tion of the objects is the key to coherent and consistent la-

belling in video. This paper proposes to achieve semantic

video object segmentation by learning a data-driven represen-

tation which captures the synergy of multiple instances from

continuous frames. To prune the noisy detections, we exploit

the rich information among multiple instances and select the

discriminative and representative subset. This selection pro-

cess is formulated as a facility location problem solved by

maximising a submodular function. Our method retrieves

the longer-term contextual dependencies which underpin a

robust semantic video object segmentation algorithm. We

present extensive experiments on a challenging dataset that

demonstrate the superior performance of our approach com-

pared with the state-of-the-art methods.

Index Terms— Submodular function, semantic video ob-

ject segmentation, deep learning

1. INTRODUCTION

The proliferation of user-uploaded videos which are fre-

quently associated with semantic tags provides a vast resource

for computer vision research. These semantic tags, albeit not

spatially or temporally located in the video, suggest visual

concepts appearing in the video. This social trend has led

to an increasing interest in exploring the idea of segmenting

video objects with weak supervision or labels.

Hartmann et al. [1] first formulated the problem as

learning weakly supervised classifiers for a set of independent

spatio-temporal segments. Tang et al. [2] learned a discriminative

model by leveraging labelled positive videos and a large

collection of negative examples based on a distance matrix. Liu

et al. [3] extended the traditional binary classification problem

to multi-class and proposed a nearest neighbor-based label

transfer algorithm which encourages smoothness between

regions that are spatio-temporally adjacent and similar in appearance.

Zhang et al. [4] utilized a pre-trained object detector

to generate a set of detections and then pruned noisy detec-

tions and regions by preserving spatio-temporal constraints.

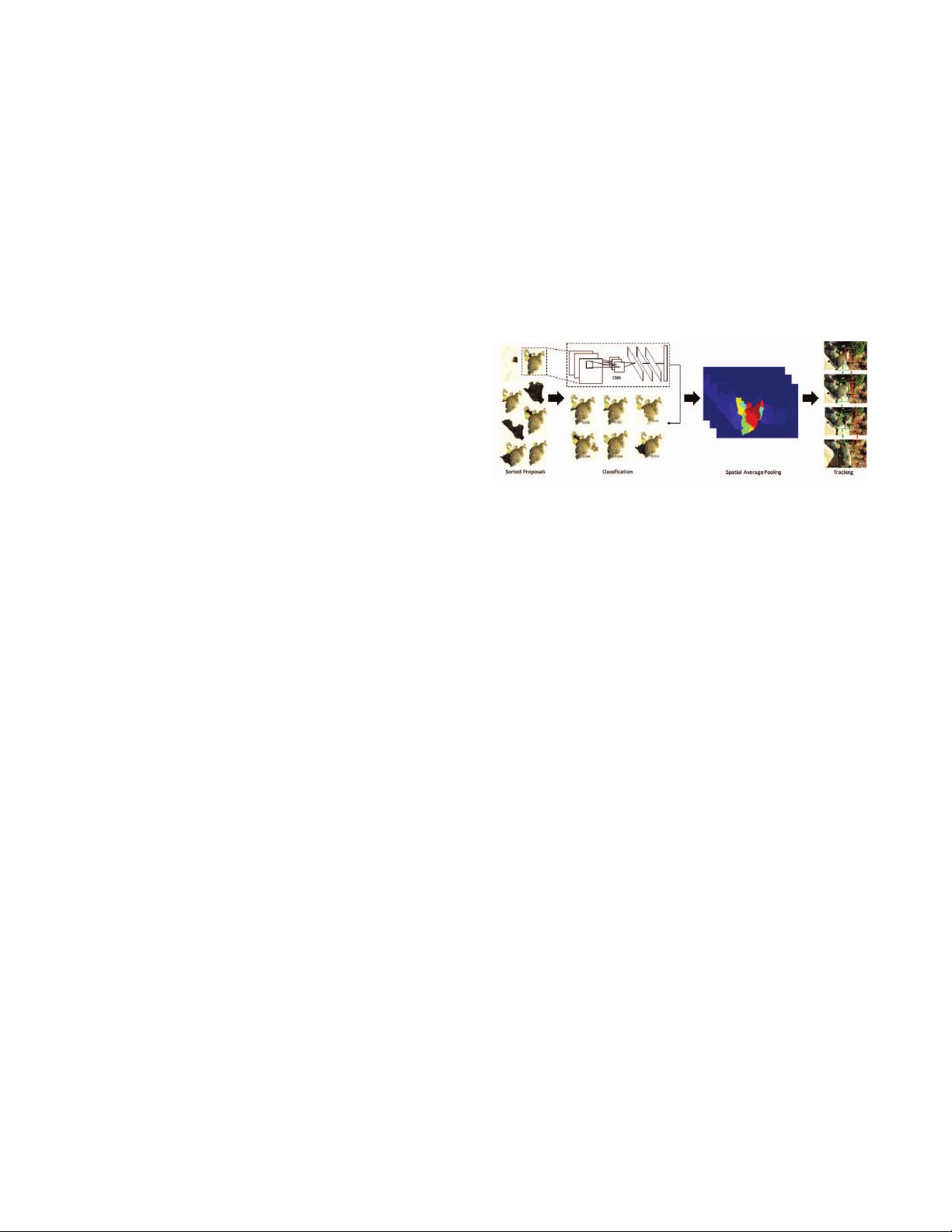

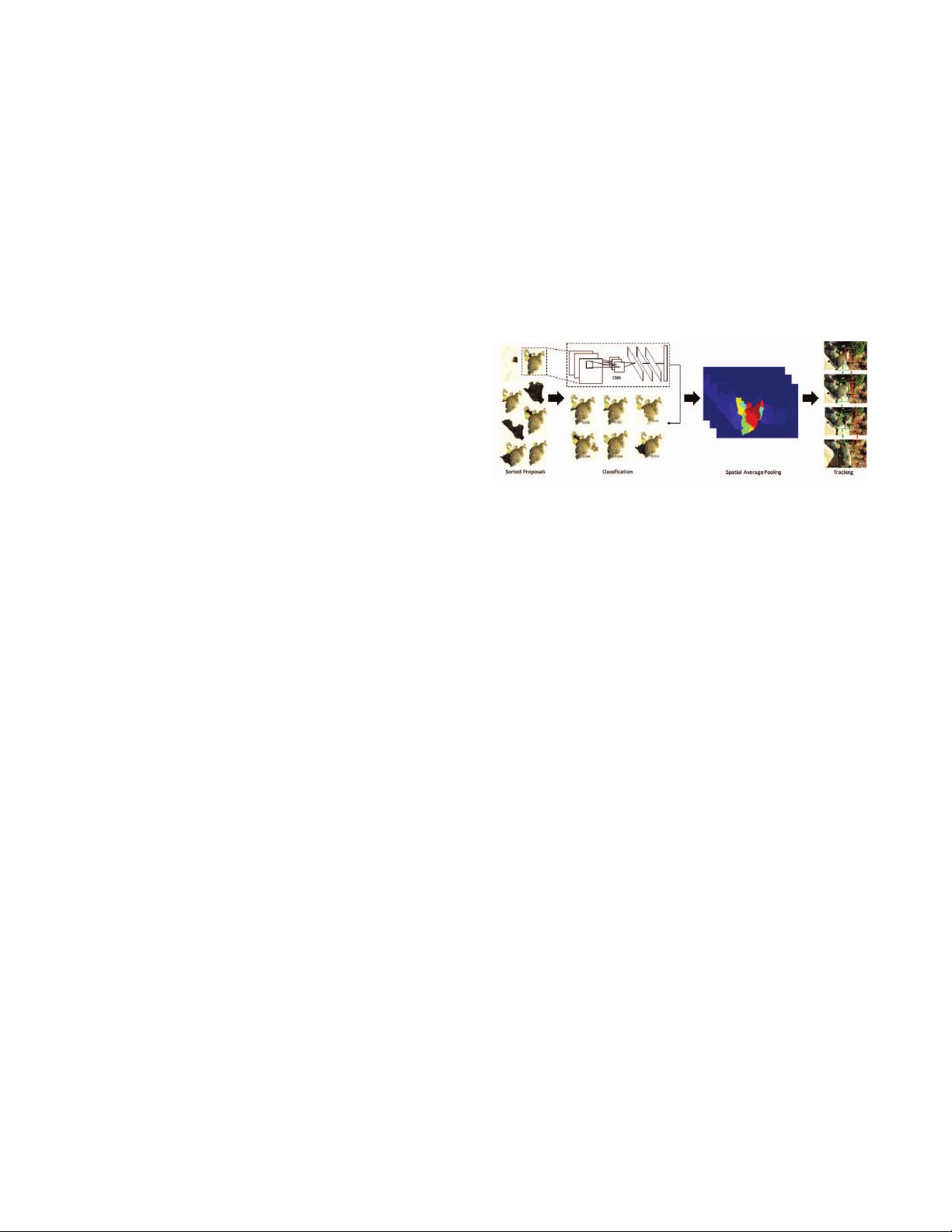

Fig. 1. An illustration of the proposed object discovery strategy.

In contrast to previous works, we propose to learn a class-

specific representation which captures the synergy of multi-

ple instances from continuous frames. To prune the noisy

detections, we exploit the rich information among multiple

instances and select the discriminative and representative sub-

set. In this framework, our algorithm is able to bridge the gap

between image classification and video object segmentation,

leveraging the ample pre-trained image recognition models

rather than strongly-trained object detectors.
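
The abstract describes the subset selection as a facility location problem solved by maximising a submodular function. As a rough illustration only, the sketch below uses the textbook facility location objective, F(S) = sum over i of max over j in S of sim[i][j], together with the classic greedy maximiser. The similarity matrix `sim`, the budget `k`, and both function names are hypothetical; the objective actually used in this paper may contain additional terms (for example, for discriminativeness) that are not modelled here.

```python
from typing import List, Sequence


def facility_location_value(sim: Sequence[Sequence[float]],
                            selected: Sequence[int]) -> float:
    """Textbook facility location value: each item i is 'served' by its
    most similar selected item j. Assumes non-negative similarities."""
    if not selected:
        return 0.0
    return sum(max(row[j] for j in selected) for row in sim)


def greedy_select(sim: Sequence[Sequence[float]], k: int) -> List[int]:
    """Greedily pick up to k items, each time adding the item with the
    largest marginal gain in the facility location value."""
    n = len(sim)
    selected: List[int] = []
    # best_cover[i] = highest similarity of item i to any selected item so far
    best_cover = [0.0] * n
    for _ in range(min(k, n)):
        best_gain, best_j = 0.0, -1
        for j in range(n):
            if j in selected:
                continue
            # Marginal gain of adding j: total improvement in coverage
            gain = sum(max(sim[i][j] - best_cover[i], 0.0) for i in range(n))
            if gain > best_gain:
                best_gain, best_j = gain, j
        if best_j < 0:  # no remaining item improves the objective
            break
        selected.append(best_j)
        best_cover = [max(best_cover[i], sim[i][best_j]) for i in range(n)]
    return selected


# Hypothetical similarities between five proposals forming two groups:
# {0, 1, 2} and {3, 4}.
sim = [
    [1.0, 0.9, 0.8, 0.1, 0.0],
    [0.9, 1.0, 0.9, 0.1, 0.1],
    [0.8, 0.9, 1.0, 0.0, 0.1],
    [0.1, 0.1, 0.0, 1.0, 0.7],
    [0.0, 0.1, 0.1, 0.7, 1.0],
]
picked = greedy_select(sim, k=2)
```

In this toy example the first pick is the proposal most similar to all others (index 1), and the second pick comes from the other group, which is the "representative subset" behaviour the selection aims for. For monotone submodular objectives such as this one, the greedy procedure is known to achieve at least a (1 - 1/e) fraction of the optimal value under a cardinality constraint.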

2. OBJECT DISCOVERY

Semantic object segmentation requires not only localising objects

of interest within a video, but also assigning a class label

to pixels belonging to the objects. One potential challenge

of using a pre-trained image recognition model to detect objects

is that any region containing the object, or even part of

the object, might be “correctly” recognised, which results in

a large search space to accurately localise the object. To nar-

row down the search of targeted objects, we adopt category-

independent bottom-up object proposals [5]. The proposed

object discovery strategy is illustrated in Fig. 1, in which the

key steps are detailed in the following sections.

2.1. Proposal Scoring and Classification

We combine the objectness score associated with each pro-

posal from Endres and Hoiem [5] and motion information as

a context cue to characterise video objects. We follow Papazoglou

and Ferrari [6], whose method roughly produces a binary map
