Manifold Clustering

Richard Souvenir and Robert Pless

Washington University in St. Louis

Department of Computer Science and Engineering

Campus Box 1045, One Brookings Drive, St. Louis, MO 63130

{rms2, pless}@cse.wustl.edu

Abstract

Manifold learning has become a vital tool in data driven

methods for interpretation of video, motion capture, and

handwritten character data when they lie on a low dimen-

sional, non-linear manifold. This work extends manifold

learning to classify and parameterize unlabeled data which

lie on multiple, intersecting manifolds. This approach sig-

nificantly increases the domain to which manifold learn-

ing methods can be applied, allowing parameterization of

example manifolds such as figure eights and intersecting

paths which are quite common in natural data sets. This

approach introduces several technical contributions which

may be of broader interest, including node-weighted multi-

dimensional scaling and a fast algorithm for weighted low-

rank approximation for rank-one weight matrices. We show

examples for intersecting manifolds of mixed topology and

dimension and demonstrations on human motion capture

data.

1. Introduction

Data-driven modeling is a powerful approach for non-

rigid motion analysis. Manifold learning approaches have

been applied to automatically parameterize image data sets

including head pose, facial expressions, bird flight, MR im-

agery, and handwritten characters. Each of these data sets

lies on low-dimensional manifolds that are not linear sub-

spaces of the (high-dimensional) input data space. Manifold

learning approaches seek to explicitly or implicitly define

a low-dimensional embedding of the data points that pre-

serves some properties (such as geodesic distance or local

relationships) of the high-dimensional point set.

When the input data points are drawn from multiple

(low-dimensional) manifolds, many manifold learning ap-

proaches suffer. In the case where the multiple mani-

folds are separated by a gap, techniques such as isomet-

ric feature mapping (Isomap) [15] may discover the dif-

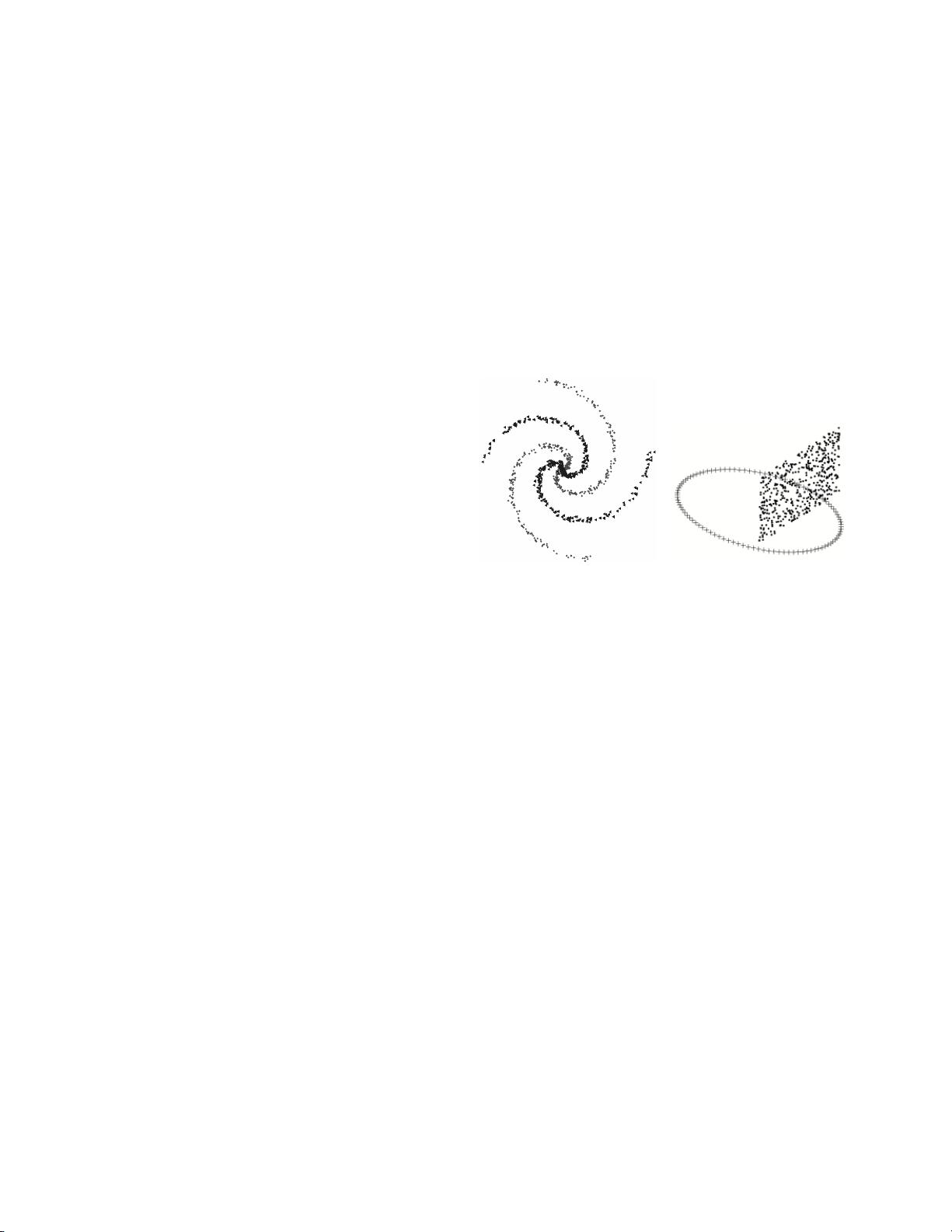

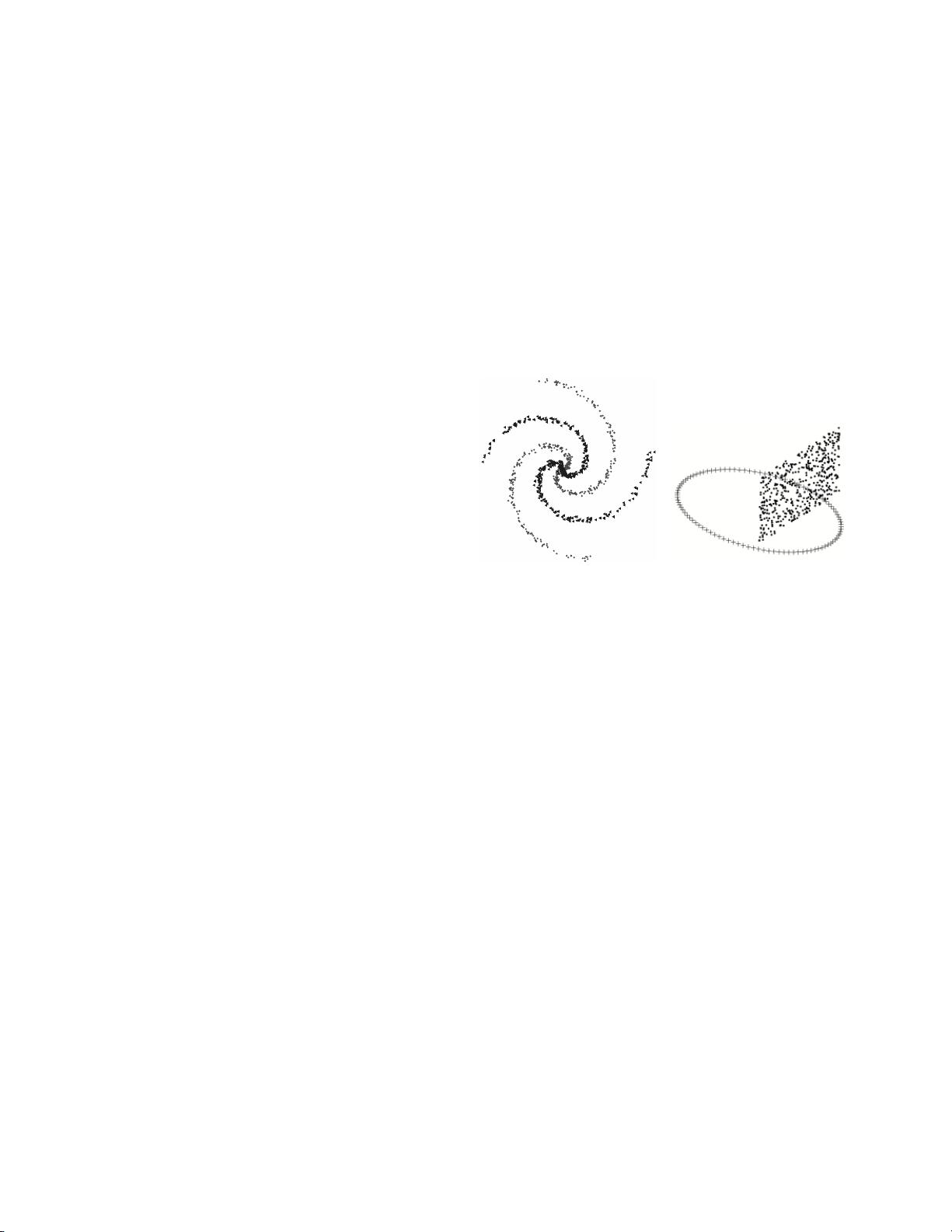

Figure 1. For high-dimensional data points

which lie on intersecting low-dimensional

manifolds, manifold embedding techniques

benefit from first separating points into dis-

tinct classes. These toy problems illustrate

relevant cases. (left) The spirals data set,

which can be embedded in two dimensions

with minimal error, can also be embedded

as two one-dimensional manifolds. (right)

The circle-plane data set includes component

manifolds of different dimension. The seg-

mentations shown here by the different sym-

bols are automatically determined by the ap-

proach developed in this paper.

ferent manifolds as different connected components in the

local neighborhood graph, and spectral clustering tech-

niques may identify and cluster each manifold based on

the optimization of certain objective functions. However,

if there is significant overlap in the manifolds, prior meth-

ods fail in one of two ways: either, in the case of Isomap,

from the non-existence of a low-dimensional embedding

which exactly (or nearly) preserves properties of the high-

dimensional manifold, or, in the case of Locally Linear Em-

bedding (LLE) [13] or Semidefinite Embedding (SDE) [16],

from the fact that additional work is necessary to interpret

R. Souvenir and R. Pless, Manifold Clustering, in Proceedings of the 10th International Conference on Computer Vision (ICCV 2005),

Beijing, China, October 2005, pp. 648 – 653.