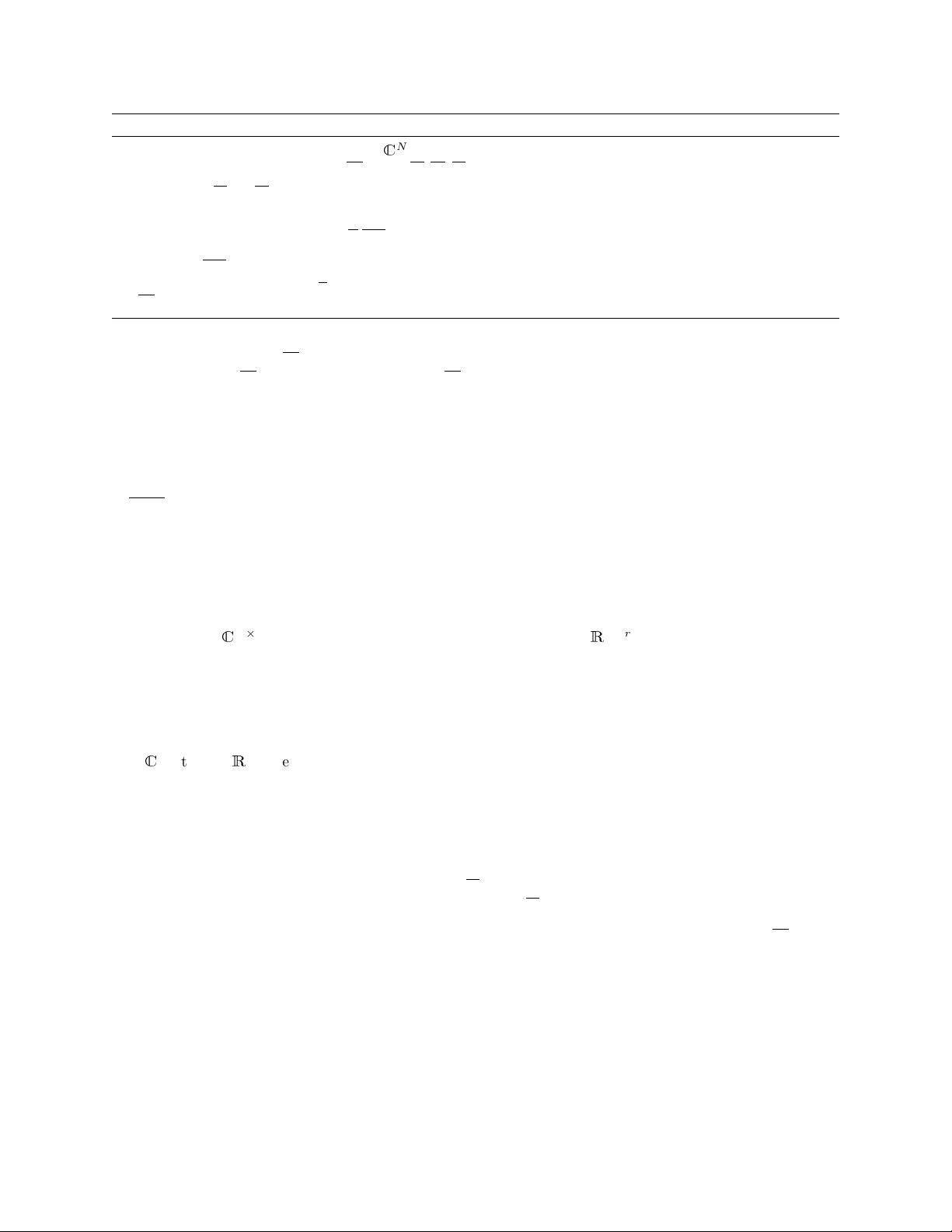

Table 1: Complexity of various sequence models in terms of sequence length ($L$), batch size ($B$), and hidden dimension ($H$); tildes denote log factors. Metrics are parameter count, training computation, training space requirement, training parallelizability, and inference computation (for 1 sample and time-step). For simplicity, the state size $N$ of S4 is tied to $H$. Bold denotes that the model is theoretically best for that metric. Convolutions are efficient for training and recurrence is efficient for inference; SSMs combine the strengths of both.

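| | Convolution³ | Recurrence | Attention | S4 |
|---|---|---|---|---|
| Parameters | $LH$ | $H^2$ | $H^2$ | $H^2$ |
| Training | $\tilde{L}H(B + H)$ | $BLH^2$ | $B(L^2H + LH^2)$ | $BH(\tilde{H} + \tilde{L}) + B\tilde{L}H$ |
| Space | $BLH$ | $BLH$ | $B(L^2 + HL)$ | $BLH$ |
| Parallel | Yes | No | Yes | Yes |
| Inference | $LH^2$ | $H^2$ | $L^2H + H^2L$ | $H^2$ |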
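To make the asymptotic entries of Table 1 concrete, the sketch below plugs one hypothetical setting into the training and inference formulas with all constants dropped. The helper names (`tilde`, `training_cost`, `inference_cost`) are illustrative, and modeling a tilde as a $\log_2$ factor ($\tilde{X} \approx X \log_2 X$) is an assumption; the table only states that tildes hide log factors.

```python
import math

def tilde(x: float) -> float:
    # Assumption: a tilde hides a log factor, modeled here as X * log2(X).
    return x * math.log2(x)

def training_cost(model: str, B: int, L: int, H: int) -> float:
    """Training computation from Table 1, constants dropped."""
    if model == "convolution":
        return tilde(L) * H * (B + H)
    if model == "recurrence":
        return B * L * H**2
    if model == "attention":
        return B * (L**2 * H + L * H**2)
    if model == "s4":
        return B * H * (tilde(H) + tilde(L)) + B * tilde(L) * H
    raise ValueError(f"unknown model: {model}")

def inference_cost(model: str, L: int, H: int) -> float:
    """Inference computation for 1 sample and 1 time-step, from Table 1."""
    table = {
        "convolution": L * H**2,
        "recurrence": H**2,
        "attention": L**2 * H + H**2 * L,
        "s4": H**2,
    }
    return table[model]

if __name__ == "__main__":
    B, L, H = 1, 16384, 256  # hypothetical long-sequence setting
    for m in ("convolution", "recurrence", "attention", "s4"):
        print(f"{m:12s} train ~{training_cost(m, B, L, H):.2e}  "
              f"infer ~{inference_cost(m, L, H):.2e}")
```

In this setting the quadratic $L^2H$ term dominates attention's training cost, while S4 matches recurrence at inference ($H^2$ per step) and stays near-linear in $L$ for training.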

3.4 Architecture Details of the Deep S4 Layer

Concretely, an S4 layer is parameterized as follows. First, initialize an SSM with $A$ set to the HiPPO matrix (2). By Lemma 3.1 and Theorem 1, this SSM is unitarily equivalent to some $(\Lambda - PQ^*, B, C)$ for some diagonal $\Lambda$ and vectors $P, Q, B, C \in \mathbb{C}^{N \times 1}$. These comprise S4's $5N$ trainable parameters.
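A small numerical check of this unitary equivalence can be sketched in NumPy, assuming the HiPPO-LegS form of the matrix (entries $-\sqrt{(2n+1)(2k+1)}$ below the diagonal, $-(n+1)$ on it, $0$ above) and the low-rank choice $P_n = \sqrt{n + 1/2}$ with $Q = P$. With that choice $A + PP^*$ equals $-\tfrac{1}{2}I$ plus a skew-symmetric matrix, so it is normal and can be diagonalized by a unitary $V$. The function names are hypothetical.

```python
import numpy as np

def hippo_legs(N: int) -> np.ndarray:
    """Assumed HiPPO-LegS matrix: -sqrt((2n+1)(2k+1)) if n > k, -(n+1) if n == k, 0 if n < k."""
    n = np.arange(N)
    outer = np.sqrt(2 * n[:, None] + 1) * np.sqrt(2 * n[None, :] + 1)
    return -np.tril(outer, k=-1) - np.diag(n + 1.0)

def nplr_from_hippo(N: int):
    """Return A, unitary V, diagonal Lam, and V^* P so that A = V (diag(Lam) - P_t P_t^*) V^*."""
    A = hippo_legs(N)
    P = np.sqrt(np.arange(N) + 0.5)        # low-rank factor; here Q = P
    S = A + np.outer(P, P)                 # normal: -1/2 I plus a skew-symmetric part
    skew = S + 0.5 * np.eye(N)             # real skew-symmetric matrix
    # -1j * skew is Hermitian, so eigh gives a unitary eigenbasis V.
    w, V = np.linalg.eigh(-1j * skew)      # skew = V diag(1j * w) V^*
    Lam = -0.5 + 1j * w                    # eigenvalues of S
    P_t = V.conj().T @ P                   # P expressed in the eigenbasis
    return A, V, Lam, P_t

if __name__ == "__main__":
    A, V, Lam, P_t = nplr_from_hippo(8)
    A_rec = V @ (np.diag(Lam) - np.outer(P_t, P_t.conj())) @ V.conj().T
    print(np.allclose(A_rec, A), np.allclose(Lam.real, -0.5))
```

Here $\Lambda$ and $V^*P$ are the complex vectors an implementation would train; together with $Q$, $B$, and $C$ they give the $5N$ parameters.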

The overall deep neural network (DNN) architecture of S4 is similar to prior work. As defined above, S4 defines a map $\mathbb{R}^L \to \mathbb{R}^L$, i.e. a 1-D sequence map. Typically, DNNs operate on feature maps of size $H$ instead of 1. S4 handles multiple features by simply defining $H$ independent copies of itself, and then mixing the $H$ features with a position-wise linear layer for a total of $O(H^2) + O(HN)$ parameters per layer. Nonlinear activation functions are also inserted between these layers. Overall, S4 defines a sequence-to-sequence map of shape (batch size, sequence length, hidden dimension), exactly the same as related sequence models such as Transformers, RNNs, and CNNs.
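The block structure described above can be sketched as follows, assuming each of the $H$ copies has already produced a length-$L$ convolution kernel (random decaying kernels stand in for them here). ReLU is chosen only for illustration, and `causal_conv_fft`, `s4_block`, and `params_per_layer` are hypothetical names. The parameter count follows the text: $5N$ per copy plus an $H \times H$ mixing layer (with a bias, an assumption).

```python
import numpy as np

def causal_conv_fft(u: np.ndarray, K: np.ndarray) -> np.ndarray:
    """Per-channel causal convolution y[b,t,h] = sum_{s<=t} K[h,s] * u[b,t-s,h], via FFT."""
    _, L, _ = u.shape
    n = 2 * L                                   # zero-pad so circular conv equals linear conv
    U = np.fft.rfft(u, n=n, axis=1)             # (B, n//2+1, H)
    Kf = np.fft.rfft(K.T, n=n, axis=0)          # (n//2+1, H)
    return np.fft.irfft(U * Kf[None], n=n, axis=1)[:, :L, :]

def s4_block(u: np.ndarray, K: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """H independent 1-D sequence maps, a nonlinearity, then position-wise mixing."""
    y = causal_conv_fft(u, K)                   # (B, L, H): channels do not interact here
    y = np.maximum(y, 0.0)                      # ReLU, chosen for illustration
    return y @ W.T + b                          # (B, L, H): mixes the H features per position

def params_per_layer(H: int, N: int) -> int:
    # 5N SSM parameters per copy, plus an H x H linear layer and its bias.
    return 5 * N * H + H * H + H

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    Bsz, L, H, N = 2, 32, 4, 8
    u = rng.standard_normal((Bsz, L, H))
    # Hypothetical stand-in kernels; in S4 each row would come from one SSM copy.
    K = rng.standard_normal((H, L)) * np.exp(-np.arange(L) / 8.0)
    W = rng.standard_normal((H, H)) / np.sqrt(H)
    y = s4_block(u, K, W, np.zeros(H))
    print(y.shape, params_per_layer(H, N))
```

The input and output share the (batch size, sequence length, hidden dimension) shape, which is what lets such blocks be stacked like Transformer or CNN layers.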

Note that the core S4 module is a linear transformation, but the addition of non-linear transformations through the depth of the network makes the overall deep SSM non-linear. This is analogous to a vanilla CNN, since convolutional layers are also linear. The broadcasting across $H$ hidden features described in this section is also analogous to depthwise-separable convolutions. Thus, the overall deep S4 model is closely related to a depthwise-separable CNN but with global convolution kernels.
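The claim that each channel is a linear map equal to a global convolution can be checked for a single discretized SSM: running it step by step as a recurrence gives the same output as one causal convolution with the kernel $\bar{K} = (C\bar{B}, C\bar{A}\bar{B}, \dots, C\bar{A}^{L-1}\bar{B})$. This is a sketch that assumes the bilinear discretization and a small random system; the function names are hypothetical.

```python
import numpy as np

def discretize(A: np.ndarray, B: np.ndarray, dt: float):
    """Bilinear discretization of x' = Ax + Bu with step size dt."""
    I = np.eye(A.shape[0])
    inv = np.linalg.inv(I - dt / 2 * A)
    return inv @ (I + dt / 2 * A), inv @ (dt * B)

def run_recurrent(Ab, Bb, C, u):
    """Recurrence x_k = Ab x_{k-1} + Bb u_k, y_k = C x_k, starting from x_{-1} = 0."""
    x = np.zeros(Ab.shape[0])
    ys = []
    for uk in u:
        x = Ab @ x + Bb * uk
        ys.append(C @ x)
    return np.array(ys)

def kernel(Ab, Bb, C, L: int) -> np.ndarray:
    """Global kernel (C Bb, C Ab Bb, ..., C Ab^{L-1} Bb), as long as the sequence."""
    K, v = [], Bb.copy()
    for _ in range(L):
        K.append(C @ v)
        v = Ab @ v
    return np.array(K)

def run_convolutional(K, u):
    """Causal convolution y_k = sum_{j<=k} K_j u_{k-j}."""
    return np.convolve(u, K)[: len(u)]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N, L = 4, 20
    A = -np.eye(N) + 0.1 * rng.standard_normal((N, N))   # hypothetical stable-ish system
    B, C = rng.standard_normal(N), rng.standard_normal(N)
    Ab, Bb = discretize(A, B, dt=0.1)
    u = rng.standard_normal(L)
    print(np.allclose(run_recurrent(Ab, Bb, C, u),
                      run_convolutional(kernel(Ab, Bb, C, L), u)))
```

The recurrent form is the cheap one for step-by-step inference, while the convolutional form is the parallelizable one for training; computing $\bar{K}$ efficiently is the part S4 itself addresses.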

Finally, we note that follow-up work found that this version of S4 can sometimes suffer from numerical instabilities when the $A$ matrix has eigenvalues in the right half-plane [14]. That work introduced a slight change to the NPLR parameterization for S4, from $\Lambda - PQ^*$ to $\Lambda - PP^*$, which corrects this potential problem.
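One way to see why the change helps: if every entry of the diagonal $\Lambda$ has negative real part, then for any unit eigenvector $x$ of $\Lambda - PP^*$ with eigenvalue $\mu$, $\operatorname{Re}\mu = \sum_i \operatorname{Re}(\lambda_i)|x_i|^2 - |P^*x|^2 < 0$, so no eigenvalue can reach the right half-plane. With an independent $Q$ there is no such guarantee. The sketch below checks both cases numerically; the specific $\Lambda$, $P$, $Q$ values and helper names are illustrative assumptions.

```python
import numpy as np

def nplr(Lam: np.ndarray, P: np.ndarray, Q: np.ndarray) -> np.ndarray:
    """Dense matrix Lambda - P Q^* built from a diagonal Lambda and vectors P, Q."""
    return np.diag(Lam) - np.outer(P, Q.conj())

def max_real_eig(M: np.ndarray) -> float:
    """Largest real part among the eigenvalues of M."""
    return float(np.max(np.linalg.eigvals(M).real))

if __name__ == "__main__":
    Lam = np.array([-0.5, -0.5], dtype=complex)
    P = np.array([1.0, 0.0], dtype=complex)
    Q = np.array([-5.0, 0.0], dtype=complex)   # hypothetical Q that breaks stability
    print(max_real_eig(nplr(Lam, P, Q)))       # Lambda - P Q^*: eigenvalue in right half-plane
    print(max_real_eig(nplr(Lam, P, P)))       # Lambda - P P^*: stays in left half-plane

    # Random trials: Lambda - P P^* keeps Re(eigenvalues) < 0 whenever Re(Lambda) < 0.
    rng = np.random.default_rng(0)
    N = 16
    worst = max(
        max_real_eig(nplr(-rng.uniform(0.01, 1.0, N) + 1j * rng.standard_normal(N),
                          *(rng.standard_normal(N) + 1j * rng.standard_normal(N)
                            for _ in range(1)),
                          rng.standard_normal(N) + 0j))
        if False else
        max_real_eig(nplr(L_, P_, P_))
        for L_, P_ in (
            (-rng.uniform(0.01, 1.0, N) + 1j * rng.standard_normal(N),
             rng.standard_normal(N) + 1j * rng.standard_normal(N))
            for _ in range(50)
        )
    )
    print(worst)
```

The $PP^*$ form only subtracts a positive semidefinite term, which can push eigenvalues further left but never to the right of $\Lambda$'s.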

Table 1 compares the complexities of the most common deep sequence modeling mechanisms.

4 Experiments

Section 4.1 benchmarks S4 against the LSSL and efficient Transformer models. Section 4.2 validates S4 on LRDs: the LRA benchmark and raw speech classification. Section 4.3 investigates whether S4 can be used as a general sequence model to perform effectively and efficiently in a wide variety of settings including image classification, image and text generation, and time series forecasting.

4.1 S4 Efficiency Benchmarks

We benchmark S4 to show that it can be trained quickly and efficiently, compared both to the LSSL and to efficient Transformer variants designed for long-range sequence modeling. As outlined in Section 3, S4 is theoretically much more efficient than the LSSL, and Table 2 confirms that S4 is orders of magnitude more speed- and memory-efficient for practical layer sizes. In fact, S4's speed and memory use are competitive with the most

³ Refers to global (in the sequence length) and depthwise-separable convolutions, similar to the convolution version of S4.
