3D retinal SD-OCT images. This is due to two main reasons. First, the structure of the retina is different from that of the brain. Geometric moment invariants (GMIs) can distinguish different geometric structures of the brain, but they fail to distinguish the plate-like structure of retinal layers. Second, retinal SD-OCT images have much higher resolution than brain MRI images. In HAMMER, the driving voxels are selected by using a fuzzy clustering method. Since the fuzzy clustering method needs to calculate the distances between voxels, it is time-consuming, especially when the data are large, and it may converge to local minima.

Our work is inspired by HAMMER and extends it to OCT registration. Compared with other retinal OCT image registration methods, we adopt HAMMER's hierarchical attribute matching mechanism to improve the registration accuracy while reducing the computational complexity. Furthermore, our work presents several novel elements compared with HAMMER: 1) We propose to use an intensity-based region feature, a surface-based structure feature and a vessel-like feature instead of the GMI feature to distinguish different structures of the retina. 2) We propose an efficient driving voxel selection method to further reduce the computational complexity. In our method, rather than being selected randomly, the active voxels are selected hierarchically following a strategy for importance coefficient assignment. 3) A preprocessing step is designed to remove the motion distortion in retinal OCT data before registration. To the best of our knowledge, the proposed method is the first longitudinal retinal OCT image registration algorithm that considers both normal data and data with severe pathology.
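The text does not say how the importance coefficients are computed or how many voxels become active at each level. The sketch below only illustrates the general idea of hierarchical (rather than random) active-voxel selection. It assumes a precomputed per-voxel importance map and a hypothetical schedule of growing fractions. Both `hierarchical_active_voxels` and `fractions` are illustrative names, not the authors' method.

```python
import numpy as np

def hierarchical_active_voxels(importance, fractions=(0.01, 0.05, 0.2)):
    """Return one boolean mask per hierarchy level (hypothetical scheme).

    importance: per-voxel importance coefficients (assumed precomputed).
    fractions:  assumed, non-decreasing share of voxels active at each level.
    Because every level takes a prefix of the same ranking, the masks are
    nested: voxels active at a coarse level stay active at finer levels.
    """
    flat = np.asarray(importance).ravel()
    order = np.argsort(flat)[::-1]  # voxel indices, most important first
    masks = []
    for frac in fractions:
        k = max(1, int(round(frac * flat.size)))  # at least one active voxel
        mask = np.zeros(flat.size, dtype=bool)
        mask[order[:k]] = True
        masks.append(mask.reshape(np.shape(importance)))
    return masks
```

Nesting the masks is a design choice. Correspondences found for the few most distinctive voxels at early levels keep constraining the deformation when less distinctive voxels join later.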

III. METHODS

A. Overview of the Approach

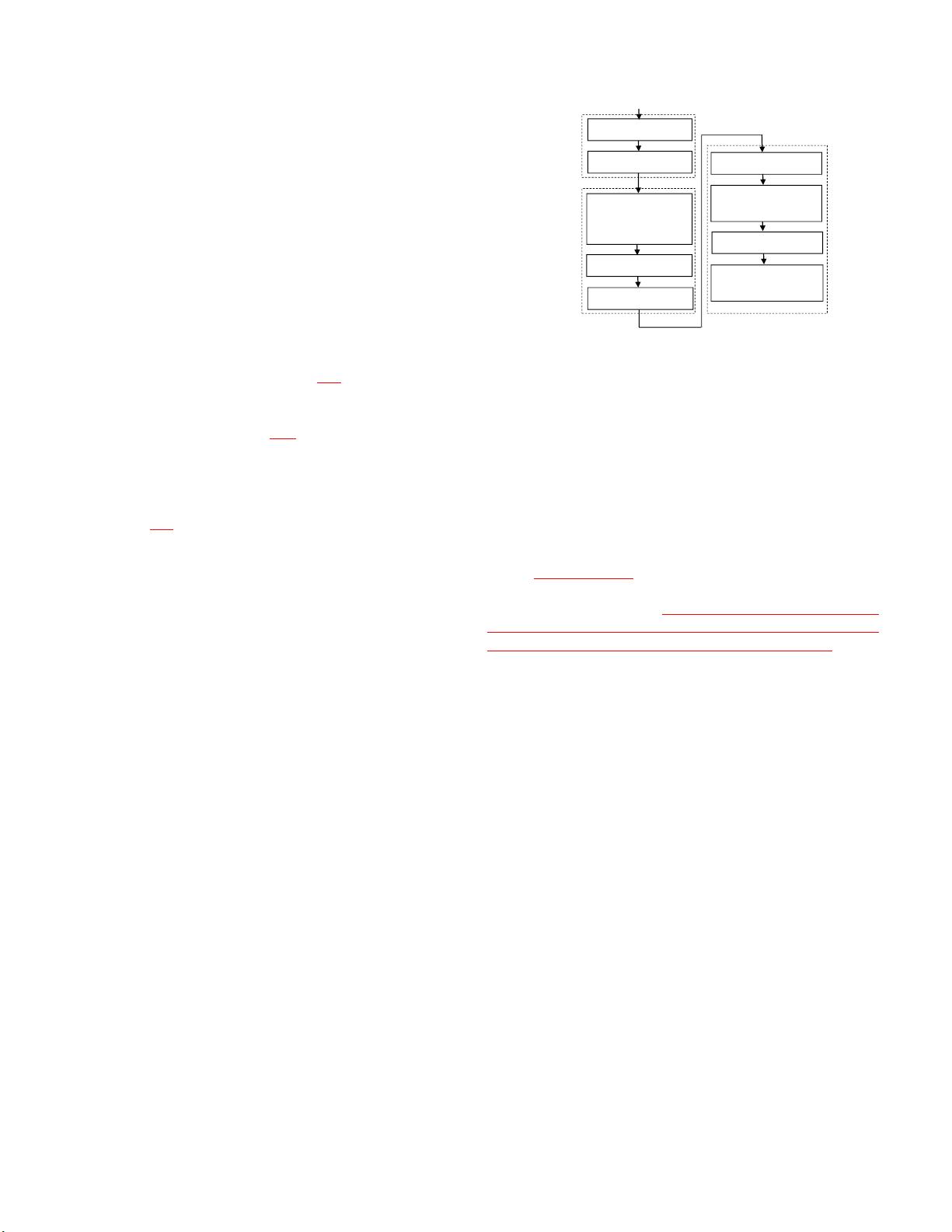

The deformable transformation is a free-form mapping at each voxel. It can be obtained by finding a transformation of each voxel such that the energy function is minimized.
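To make "a free-form mapping at each voxel" concrete, the sketch below stores one displacement vector u(x) per voxel and resamples a volume at x + u(x). The array layout and the nearest-neighbour sampling are simplifying assumptions; practical registration code usually interpolates. The function name `warp_volume` is hypothetical.

```python
import numpy as np

def warp_volume(volume, displacement):
    """Backward-warp `volume` by sampling it at x + u(x) for every voxel x.

    volume:       array of shape (Z, Y, X)
    displacement: array of shape (3, Z, Y, X), u(x) in voxel units (assumed)
    Nearest-neighbour sampling with border clamping keeps the sketch short.
    """
    grid = np.indices(volume.shape)  # identity coordinates, shape (3, Z, Y, X)
    coords = np.rint(grid + displacement).astype(int)
    for axis, size in enumerate(volume.shape):
        np.clip(coords[axis], 0, size - 1, out=coords[axis])  # stay inside the volume
    return volume[coords[0], coords[1], coords[2]]
```

With three displacement components per voxel, a hypothetical 512 × 128 × 1024 volume already has 3 × 67,108,864 = 201,326,592 unknowns.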

Considering the high resolution of OCT data, the energy function would be a very high-dimensional function, which makes it extremely difficult to find the globally optimal solution. The main difficulties are the computational complexity and the local minima problem. To speed up the registration process and reduce the risk of local minima, a novel design-detection-deformation mechanism is proposed. The proposed method consists of four steps: preprocessing, feature design, correspondence detection and hierarchical deformation. The overall flowchart is shown in Fig. 2. In the preprocessing step, the OCT data are first segmented by detecting 7 surfaces using a graph search-based method, and then the B-scans are flattened to correct eye movement. In the design step, several features are designed for each voxel in the image. In the detection and deformation steps, active voxels are hierarchically selected and point-to-point correspondences between the subject and the template images are established. The image is then hierarchically deformed in a multi-resolution fashion according to the detected correspondences. The details of each step are discussed in the following subsections.
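The section does not state how the multi-resolution levels are built. One common choice is assumed in the sketch below: halve the resolution along every axis by averaging 2×2×2 blocks, then process the levels from coarsest to finest. `downsample2` and `build_pyramid` are hypothetical helper names.

```python
import numpy as np

def downsample2(volume):
    """Halve each dimension by averaging non-overlapping 2x2x2 blocks."""
    z, y, x = (s // 2 * 2 for s in volume.shape)  # crop odd trailing slices
    v = np.asarray(volume, dtype=float)[:z, :y, :x]
    return v.reshape(z // 2, 2, y // 2, 2, x // 2, 2).mean(axis=(1, 3, 5))

def build_pyramid(volume, levels=3):
    """Return [coarsest, ..., finest] so deformation can proceed coarse-to-fine."""
    pyramid = [np.asarray(volume, dtype=float)]
    for _ in range(levels - 1):
        pyramid.append(downsample2(pyramid[-1]))
    return pyramid[::-1]
```

Solving first on the small coarse volumes captures large displacements cheaply. The result then initializes the next finer level, which is one standard way to limit both run time and local minima.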

Fig. 2. Flowchart of the proposed algorithm (input: template and subject images; output: 3D registration result).

B. Preprocessing

1) Multi-resolution graph search: The 3D graph-based optimal surface segmentation method can detect multiple interacting surfaces simultaneously [21]. The basic idea is to transform the optimal surface detection problem into computing a minimum cut in an arc-weighted directed graph. This method and its variations have been successfully applied to retinal layer segmentation of macular optical coherence tomography images [22-31]. The surface segmentation methods designed for normal retinas can also be used to segment retinas affected by glaucoma, multiple sclerosis or other diseases that do not dramatically change the layer structure in their early stages. However, it is difficult to use the same methods to segment retinas with additional layer structures such as sub-RPE fluid and intra-retinal cysts. Because our algorithm considers both normal data and data with severe pathology such as CNV, the surface segmentation is based on our previously proposed constrained graph search method [32]. In preprocessing, seven retinal surfaces are segmented in all B-scans of the template image and the subject image, as shown in Fig. 3. These seven surfaces partition an OCT dataset into six layers: (1) retinal nerve fiber layer (RNFL), (2) ganglion cell layer and inner plexiform layer (GCL+IPL), (3) inner nuclear layer (INL), (4) outer plexiform layer (OPL), (5) outer nuclear layer and inner/outer segment layer (ONL+IS/OSL), (6) retinal pigment epithelium (RPE).
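The minimum-cut construction of [21] and the constrained graph search of [32] are too involved for a short example. As a simplified stand-in, consider a single surface in one B-scan under a hard smoothness constraint |z(x+1) - z(x)| ≤ Δ. That surface can be found exactly by dynamic programming over the columns. The cost image and the value of Δ (`max_step`) are assumptions. This is not the authors' multi-surface method.

```python
import numpy as np

def detect_surface(cost, max_step=2):
    """Find z(x) minimising sum_x cost[z(x), x] with |z(x+1) - z(x)| <= max_step.

    cost: array (Z, X); low cost marks likely surface voxels (e.g. a
    negated vertical intensity gradient). Returns one depth per column.
    """
    n_z, n_x = cost.shape
    acc = cost[:, 0].astype(float)          # best path cost ending at each row
    back = np.zeros((n_z, n_x), dtype=int)  # best predecessor row per (z, x)
    for x in range(1, n_x):
        new_acc = np.empty(n_z)
        for z in range(n_z):
            lo, hi = max(0, z - max_step), min(n_z, z + max_step + 1)
            prev = lo + int(np.argmin(acc[lo:hi]))  # feasible rows only
            back[z, x] = prev
            new_acc[z] = acc[prev] + cost[z, x]
        acc = new_acc
    surface = np.empty(n_x, dtype=int)
    surface[-1] = int(np.argmin(acc))
    for x in range(n_x - 1, 0, -1):  # trace the optimal path backwards
        surface[x - 1] = back[surface[x], x]
    return surface
```

The smoothness constraint plays the role of the inter-column arcs in the graph formulation. Detecting several interacting surfaces jointly in 3D is what requires the min-cut approach.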

2) B-scan flattening: Eye movement artifacts occurring during 3D OCT scanning are a problem for retinal OCT imaging and make image registration difficult. Because acquiring a volume takes a few seconds, eye movement caused by heartbeat and respiration during the scan results in motion artifacts. In motion-distorted data, the positions of layers vary greatly between consecutive B-scans, which makes interpolation and regularization difficult. The position shifts of B-scans can be viewed in the y-z slices, as shown in Fig. 4 (a), where each column corresponds to a B-scan.
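The text is cut off before describing its own flattening procedure. The sketch below therefore shows one common scheme, under stated assumptions. Each A-scan is shifted axially so that a chosen reference surface (for example, one of the seven segmented surfaces) lies at a constant depth. The axis order (Z, Y, X), with Y indexing B-scans, and the name `flatten_volume` are hypothetical.

```python
import numpy as np

def flatten_volume(volume, ref_surface, target_depth=None):
    """Shift every A-scan along z so the reference surface becomes a flat plane.

    volume:      array (Z, Y, X); Z = depth, Y = B-scan index, X = A-scan index
    ref_surface: int array (Y, X), depth of the reference surface per A-scan
    Voxels shifted out of range are dropped; vacated voxels are set to zero.
    """
    n_z = volume.shape[0]
    if target_depth is None:
        target_depth = int(np.round(np.median(ref_surface)))
    flat = np.zeros_like(volume)
    for y in range(volume.shape[1]):
        for x in range(volume.shape[2]):
            shift = target_depth - int(ref_surface[y, x])
            shift = max(-n_z, min(n_z, shift))  # keep slice lengths consistent
            src = volume[:, y, x]
            if shift >= 0:
                flat[shift:, y, x] = src[:n_z - shift]
            else:
                flat[:n_z + shift, y, x] = src[-shift:]
    return flat
```

After this step the reference surface is a flat plane in every y-z slice, so the B-scan-to-B-scan shifts visible in Fig. 4 (a) are removed for that surface.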

Flattening the 3D OCT volumes is often used to correct eye