2nd USENIX Conference on File and Storage Technologies

USENIX Association

117

the workload or the request stream is drawn from a

LRU Stack Depth Distribution (SDD), then LRU is the

optimal policy [16]. LRU has several advantages, for

example, it is simple to implement and responds well

to changes in the underlying SDD model. However,

while the SDD model captures “recency”, it does not

capture “frequency”. To quote from [16, p. 282]: “The

significance of this is, in the long run, that each page

is equally likely to be referenced and that therefore

the model is useful for treating the clustering effect of

locality but not the nonuniform page referencing.”

C. Frequency

The Independent Reference Model (IRM) provides a

workload characterization that captures the notion of

frequency. Specifically, IRM assumes that each page

reference is drawn in an independent fashion from

a fixed distribution over the set of all pages in the

auxiliary memory. Under the IRM model, policy LFU

that replaces the least frequently used page is known

to be optimal [16], [17]. The LFU policy has sev-

eral drawbacks: it requires logarithmic implementation

complexity in cache size, pays almost no attention to

recent history, and does not adapt well to changing

access patterns since it accumulates stale pages with

high frequency counts that may no longer be useful.

A relatively recent algorithm LRU-2 [18], [19] ap-

proximates LFU while eliminating its lack of adaptivity

to the evolving distribution of page reference frequen-

cies. This was a significant practical step forward. The

basic idea is to remember, for each page, the last

two times when it was requested, and to replace the

page with the least recent penultimate reference. Under

the IRM assumption, it is known that LRU-2 has the

largest expected hit ratio of any on-line algorithm that

knows at most two most recent references to each page

[19]. The algorithm has been shown to work well on

several traces [18], [20]. Nonetheless, LRU-2 still has

two practical limitations [20]: (i) it needs to maintain

a priority queue, and, hence, it requires logarithmic

implementation complexity and (ii) it contains at one

crucial tunable parameter, namely, Correlated Informa-

tion Period (CIP), that roughly captures the amount of

time a page that has only been seen once recently should

be kept in the cache.

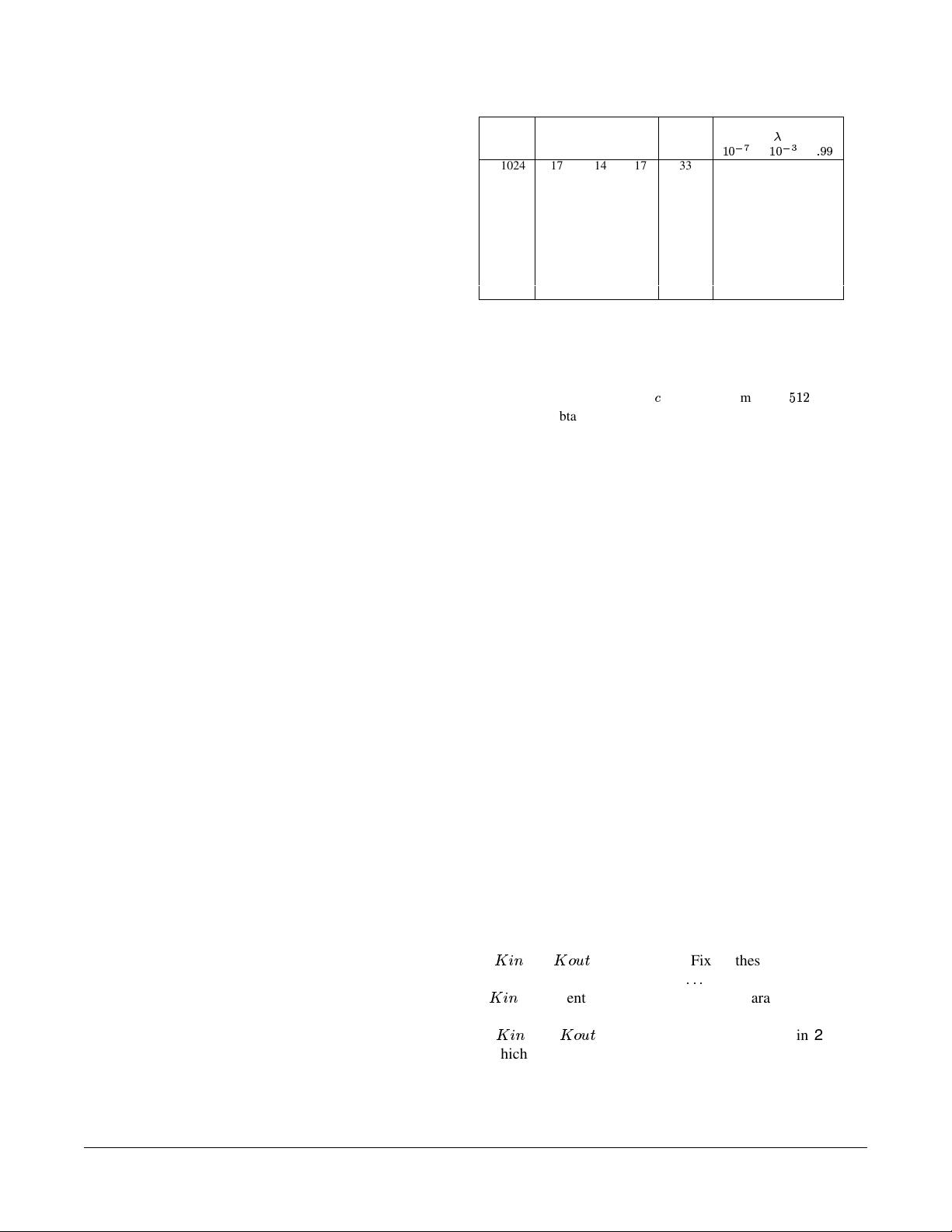

In practice, logarithmic implementation complexity

is a severe overhead, see, Table I. This limitation was

mitigated in 2Q [20] which reduces the implementation

complexity to constant per request. The algorithm 2Q

uses a simple LRU list instead of the priority queue

used in LRU-2; otherwise, it is similar to LRU-2. Policy

ARC has a computational overhead similar to 2Q and

both are better than LRU-2, see, Table I.

Table II shows that the choice of the parameter CIP

c LRU ARC 2Q LRU-2 LRFU

4

5 6 8 9 5 6 8 : ; < <

1024 17 14 17 33 554 408 28

2048 12 14 17 27 599 451 28

4096 12 15 17 27 649 494 29

8192 12 16 18 28 694 537 29

16384 13 16 19 30 734 418 30

32768 14 17 18 31 716 420 31

65536 14 16 18 32 648 424 34

131072 14 15 16 32 533 432 39

262144 13 13 14 30 427 435 42

524288 12 13 13 27 263 443 45

TABLE I. A comparison of computational overhead of various

cache algorithms on a trace P9 that was collected from a

workstation running Windows NT by using Vtrace which

captures disk requests. For more details of the trace, see

Section V-A. The cache size

=

represents number of

>

byte

pages. To obtain the numbers reported above, we assumed

that a miss costs nothing more than a hit. This focuses the

attention entirely on the “book-keeping” overhead of the cache

algorithms. All timing numbers are in seconds, and were

obtained by using the “clock()” subroutine in “time.h” of

the GNU C compiler. It can be seen that the computational

overhead of ARC and 2Q is essentially the same as that of

LRU. It can also be seen that LRU-2 has roughly double

the overhead of LRU, and that LRFU can have very large

overhead when compared to LRU. The same general results

hold for all the traces that we examined.

crucially affects performance of LRU-2. It can be seen

that no single fixed a priori choice works uniformly well

across across various cache sizes, and, hence, judicious

selection of this parameter is crucial to achieving good

performance. Furthermore, we have found that no single

a priori choice works uniformly well across across

various workloads and cache sizes that we examined.

For example, a very small value for the CIP parameters

work well for stable workloads drawn according to the

IRM, while a larger value works well for workloads

drawn according to the SDD. Indeed, it has been

previously noted [20] that “it was difficult to model the

tunables of the algorithm exactly.” This underscores the

need for on-line, on-the-fly adaptation.

Unfortunately, the second limitation of LRU-2 per-

sists even in 2Q. The authors introduce two parameters

(

? @ B

and

? D E F

) and note that “Fixing these parameters

is potentially a tuning question

! ! !

” [20]. The parameter

? @ B

is essentially the same as the parameter CIP

in LRU-2. Once again, it has been noted [21] that

“

? @ B

and

? D E F

are predetermined parameters in 2Q,

which need to be carefully tuned, and are sensitive to

types of workloads.” Due to space limitation, we have

shown Table II only for LRU-2, however, we have

observed similar dependence of 2Q on the workload