
• 2023 IEEE International Solid-State Circuits Conference

ISSCC 2023 / SESSION 5 / IMAGE SENSORS / 5.2

5.2 1.22μm 35.6Mpixel RGB Hybrid Event-Based Vision Sensor with 4.88μm-Pitch Event Pixels and up to 10K Event Frame Rate by Adaptive Control on Event Sparsity

Kazutoshi Kodama¹, Yusuke Sato¹, Yuhi Yorikado¹, Raphael Berner², Kyoji Mizoguchi¹, Takahiro Miyazaki¹, Masahiro Tsukamoto¹, Yoshihisa Matoba¹, Hirotaka Shinozaki¹, Atsumi Niwa¹, Tetsuji Yamaguchi¹, Christian Brandli², Hayato Wakabayashi¹, Yusuke Oike¹

¹Sony Semiconductor Solutions, Atsugi, Japan

²Sony Advanced Visual Sensing, Schlieren, Switzerland

Recently, image sensors for mobile devices have shrunk in pixel size and increased in

resolution, while there is a strong demand for low-light photography and high-frame-

rate video recording with low power. Because smaller pixels result in reduced sensitivity,

the exposure time must be increased. However, when capturing fast-moving objects with

longer exposure times, blurry images are unavoidable. To solve this problem, bracketing

and burst imaging have recently been reported [1]. These techniques combine multiple

RGB frames into a single frame. To capture high-speed objects with the same exposure

time, the number of frames and output frame rate are increased. As a result, the power

consumption increases significantly. Conversely, event-based vision sensors (EVSs)

have been proposed as a more efficient means to capture motion information than the

existing frame-based RGB sensors [2,3]. The possibility of effective image enhancement

such as deblurring and video frame interpolation using a deep neural network (DNN) with

RGB and event data has been reported [4,5]. To satisfy the required image quality, these

image-enhancement techniques need RGB characteristics equivalent to advanced mobile

RGB sensors and focal alignment on a sensor between RGB and event pixels. To address

these issues, this paper proposes a hybrid-type sensor embedding high-frame-rate event

pixels with advanced mobile 1.22μm RGB pixels in an existing mobile image sensor

architecture. To allow image enhancement of the hybrid data in the mobile application

processor, efficient packaging of the data in frames and accurate synchronization

between RGB and EVS data are required. Therefore, the EVS part of the sensor uses a

scan readout access and global detection. The scan readout type EVS has the problem

that the frame rate decreases as the number of pixels increases, owing to the limited

access speed of event rows and limited bandwidth of the sensor output interface.

Therefore, a variable frame rate, which changes the frame rate according to the number

of detected events, as well as an event drop filter and event compression to reduce and

control the amount of detected data are adopted.

Figure 5.2.1 shows the stacked sensor architecture. The sensor comprises an upper pixel

chip and a lower chip, which are connected by Cu–Cu connections. RGB and event pixels

are arranged on the upper chip, where each pixel employs a different customized design

to satisfy mobile imaging quality. The RGB pixels adopt quad Bayer coding and are

controlled by reset (RST), transfer gate (TRG), and select (SEL) signals from row drivers.

The readout signal is connected to the column ADC on the lower chip through a vertical

signal line (VSL) and converted to 10b digital data. Furthermore, the output signal is

connected to an image signal processor. The event pixels adopt white color filters. Four

photodiodes of 1.22μm pitch are connected and used as one event pixel. The analog

front-end circuits (AFE) for event pixels use a 4.88μm pitch and are placed on the lower

chip under the pixel array. The AFE comprises a sample-and-hold (S&H) circuit for

holding the output after logarithmic conversion, a comparator with three threshold values,

V_on, V_reset, and V_off, for contrast change detection, and a logic circuit for holding the

detection results of positive and negative events. The AFE detects both positive and

negative contrast changes in one frame and outputs 2b data. The detected event signals

are read out per row and passed through an event drop filter (EDF) and event compression

in the event signal processor. RGB and event signals are synchronized at the sensor

output interface (I/F) block and output at the same time. Because the frame rate of events

is higher than that of RGB, multiple event frames are output while one RGB frame is

output.
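The AFE behaviour described above can be sketched in a few lines of Python. This is only a behavioural model under stated assumptions, not the circuit itself: the function name `detect_event`, the log-domain threshold values, and the packing of the 2b output (bit 1 for a positive event, bit 0 for a negative event) are all hypothetical. The rule that the held reference is refreshed only when an event fires follows the timing description of Fig. 5.2.2.

```python
import math

def detect_event(intensity, held_log, on_threshold, off_threshold):
    """Model one event pixel for one event frame.

    Returns (code, new_held_log). The 2b code packing is an assumption:
    bit 1 = positive event, bit 0 = negative event.
    """
    log_now = math.log(intensity)      # logarithmic conversion
    delta = log_now - held_log         # change relative to the S&H reference
    positive = delta >= on_threshold   # comparison against the ON threshold
    negative = delta <= -off_threshold # comparison against the OFF threshold
    code = (int(positive) << 1) | int(negative)
    # The S&H reference is refreshed only when an event was detected.
    new_held_log = log_now if code else held_log
    return code, new_held_log

# Hypothetical thresholds of 0.2 in the log domain.
reference = math.log(100.0)
print(detect_event(100.0, reference, 0.2, 0.2)[0])  # no change
print(detect_event(150.0, reference, 0.2, 0.2)[0])  # brighter
print(detect_event(70.0, reference, 0.2, 0.2)[0])   # darker
```

With these illustrative values the three calls yield the codes 0, 2, and 1, that is, no event, a positive event, and a negative event.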

Figure 5.2.2 shows the timing chart. RGB data adopts rolling shutter readout and is

synchronized with line sync to access each row. A single image of RGB data is

synchronized with the frame sync period. Event data is globally detected and read out in

a scan access manner. The threshold of the comparator in the event pixel AFE is set to V_on

and V_off for positive and negative event detection, respectively. After the detection phase,

the comparator threshold is set to V_reset, and the S&H circuit holds the current pixel output

voltage in the case that the event pixel detects events. The detected event signals are

stored in two latches in the logic unit, and data is sequentially transferred for each row,

and one event frame is synchronized with the period of frame sync. To achieve a high

frame rate, only the rows where at least one event is detected are read, and the frame

sync is variable and can be shorter when the data transfer time is shortened because of

skipped rows. Frame sync of event output is synchronized with line sync of RGB, and

the total length of multiple event frames is adjusted to be identical to the length of one

RGB frame, allowing it to be easily received and handled by the mobile application

processor. When the events are sparse, the event frame rate is higher and the number

of event frames in a single RGB frame increases.
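To make the relation between event sparsity and frame length concrete, the following minimal sketch estimates the length of one event frame when only rows containing events are read. It assumes a simple timing model (a fixed detection phase plus a fixed read time per active row, rounded up to whole RGB line periods because the event frame sync is aligned to the RGB line sync). The function names and all timing constants are hypothetical and are not taken from the sensor.

```python
def rows_with_events(frame):
    """Indices of rows holding at least one non-zero 2b event code."""
    return [r for r, row in enumerate(frame) if any(row)]

def event_frame_lines(frame, detect_ns, row_read_ns, rgb_line_ns):
    """Event frame length, rounded up to whole RGB line periods."""
    active_rows = len(rows_with_events(frame))
    duration_ns = detect_ns + active_rows * row_read_ns
    return max(1, -(-duration_ns // rgb_line_ns))  # integer ceiling

# Hypothetical timing values, chosen only for illustration.
DETECT_NS, ROW_READ_NS, RGB_LINE_NS = 20_000, 500, 10_000

# A sparse frame (2 of 4 rows active) and a dense frame (all 1080 rows active).
sparse = [[0, 0, 0, 0], [0, 2, 0, 0], [0, 0, 0, 0], [1, 0, 0, 0]]
dense = [[1, 2, 1, 2] for _ in range(1080)]

print(event_frame_lines(sparse, DETECT_NS, ROW_READ_NS, RGB_LINE_NS))
print(event_frame_lines(dense, DETECT_NS, ROW_READ_NS, RGB_LINE_NS))
```

Under these assumed numbers the sparse frame occupies 3 RGB line periods and the dense frame 56, so many more sparse event frames fit into one RGB frame.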

Figure 5.2.3 (a) shows the data flow and timing chart of an event drop filter. The

bandwidth of the output I/F of the sensor is limited by the amount of output data per

row, and frame-based processing would need a large memory and, correspondingly, the

latency would be increased. Therefore, an event drop filter per row is adopted. Event

input is stored in a temporal memory. An accumulator counts the number of detected

events for each processing patch, and the drop ratio control block calculates the drop

ratio using three bits. After determining the drop ratio, the stored event data is read, and

events are dropped in the event drop filter block according to the result of the drop ratio

control. To efficiently utilize the limited bandwidth of the I/F, the number of events per

processing patch is kept constant. Figure 5.2.3 (b) shows the data flow and timing chart

of event compression. The compression ratio is data dependent, and depending on the

adopted compression scheme, the amount of data after compression can become larger

than that without compression. Therefore, the event compression block implements

several compression schemes and automatically selects the best scheme (including the

option not to compress) as per the compression ratio. The compression selector

calculates the amount of data after compression and selects the appropriate scheme. It

does not use any compression if the compression ratio in all the compression schemes

exceeds 100%. The compression is processed per row to relax the bandwidth, and the

filtered output is stored in temporal memory. Because the data size after compression is

different for each row, the interface synchronization signal is variably controlled by output

control in accordance with the data size to realize a high frame rate.
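The two row-based processing steps can be illustrated with a short Python sketch. Several points are assumptions rather than details from the paper: the 3b drop level is interpreted here as keeping (8 - level)/8 of the events, events are dropped by position within the patch, and the two compression schemes (`address_list` and `run_length`) with their field widths are hypothetical stand-ins, since the actual schemes are not named. What the sketch does follow is the described control flow: count events per patch, pick a drop ratio that meets a budget, then compute the compressed size for each scheme and fall back to no compression when nothing is smaller.

```python
def choose_drop_level(count, budget):
    """Smallest 3b level (0..7) whose kept fraction (8 - level)/8 fits the budget."""
    for level in range(8):
        if count * (8 - level) <= budget * 8:
            return level
    return 7

def drop_events(patch, budget):
    """Zero out events in one patch so that about `budget` events remain."""
    positions = [i for i, code in enumerate(patch) if code]
    level = choose_drop_level(len(positions), budget)
    keep = 8 - level
    out = list(patch)
    for n, i in enumerate(positions):
        if n % 8 >= keep:  # assumed drop pattern: positional decimation
            out[i] = 0
    return out, level

def raw_bits(row):
    """Uncompressed size: one 2b code per event pixel."""
    return 2 * len(row)

def address_list_bits(row, addr_bits):
    """Hypothetical scheme: an event count, then (column address, 2b code) per event."""
    events = sum(1 for code in row if code)
    return addr_bits + events * (addr_bits + 2)

def run_length_bits(row, run_bits):
    """Hypothetical scheme: (2b code, run length) per run of identical codes."""
    max_run = 1 << run_bits
    runs, prev, length = 0, None, 0
    for code in row:
        if code == prev and length < max_run:
            length += 1
        else:
            runs += 1
            prev, length = code, 1
    return runs * (2 + run_bits)

def select_scheme(row, addr_bits=11, run_bits=8):
    """Pick the smallest encoding; 'raw' is listed first so it wins ties."""
    sizes = {
        "raw": raw_bits(row),
        "address_list": address_list_bits(row, addr_bits),
        "run_length": run_length_bits(row, run_bits),
    }
    return min(sizes, key=sizes.get), sizes

sparse_row = [0] * 1920
sparse_row[5] = 2
dense_row = [1, 2] * 960
print(select_scheme(sparse_row)[0])
print(select_scheme(dense_row)[0])
```

In this toy setting a row with a single event is best served by the address list, while a row in which every pixel fires is sent uncompressed, which mirrors the behaviour of the selector when every scheme exceeds a 100% compression ratio.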

The sensor is implemented in a 90nm 1P 7M back-illuminated CMOS image sensor

(BI CIS) process and a 22nm 1P 10M standard CMOS process. The chip size is 11.4mm

(H) × 10.5mm (V). Figure 5.2.4(a) shows a hybrid output image with a fixed 1100fps

event output. Figure 5.2.4(b) shows the measurement result of event frame rate with

and without event signal processing. By skipping rows without events, the event output

can achieve a variable frame rate of up to 10kfps. EDF and compression improve the

frame rate relative to operation without them. Figure 5.2.4(c) shows captured events

with a 4000 fps output using the variable frame rate and this signal processing. The RGB

image and EVS events of a moving object are captured at the same time. Figure 5.2.5(a)

shows the experimental result of a deblurred output image using the sensor. The blurry

image is recovered by a deblur NN using RGB data with a fixed 1100 fps event output.

Figure 5.2.5(b) shows the EDF output. Despite EDF dropping 50% of the events, the

object can be identified. Figure 5.2.6 depicts the comparison table. The power

consumption of the hybrid output is 525mW. A maximum event rate of 4,562Meps is

achieved thanks to the variable frame rate. EDF and compression are adopted for event

signal processing. A 1.22µm RGB pixel is achieved, which is the smallest reported pixel

for a hybrid sensor. The achieved RGB performance with a random noise of 1.57e- and

dynamic range of 67.8 dB is best among the hybrid sensors in the table thanks to

advanced mobile RGB pixel and architecture. The RGB pixel resolution is 35.6Mpixel,

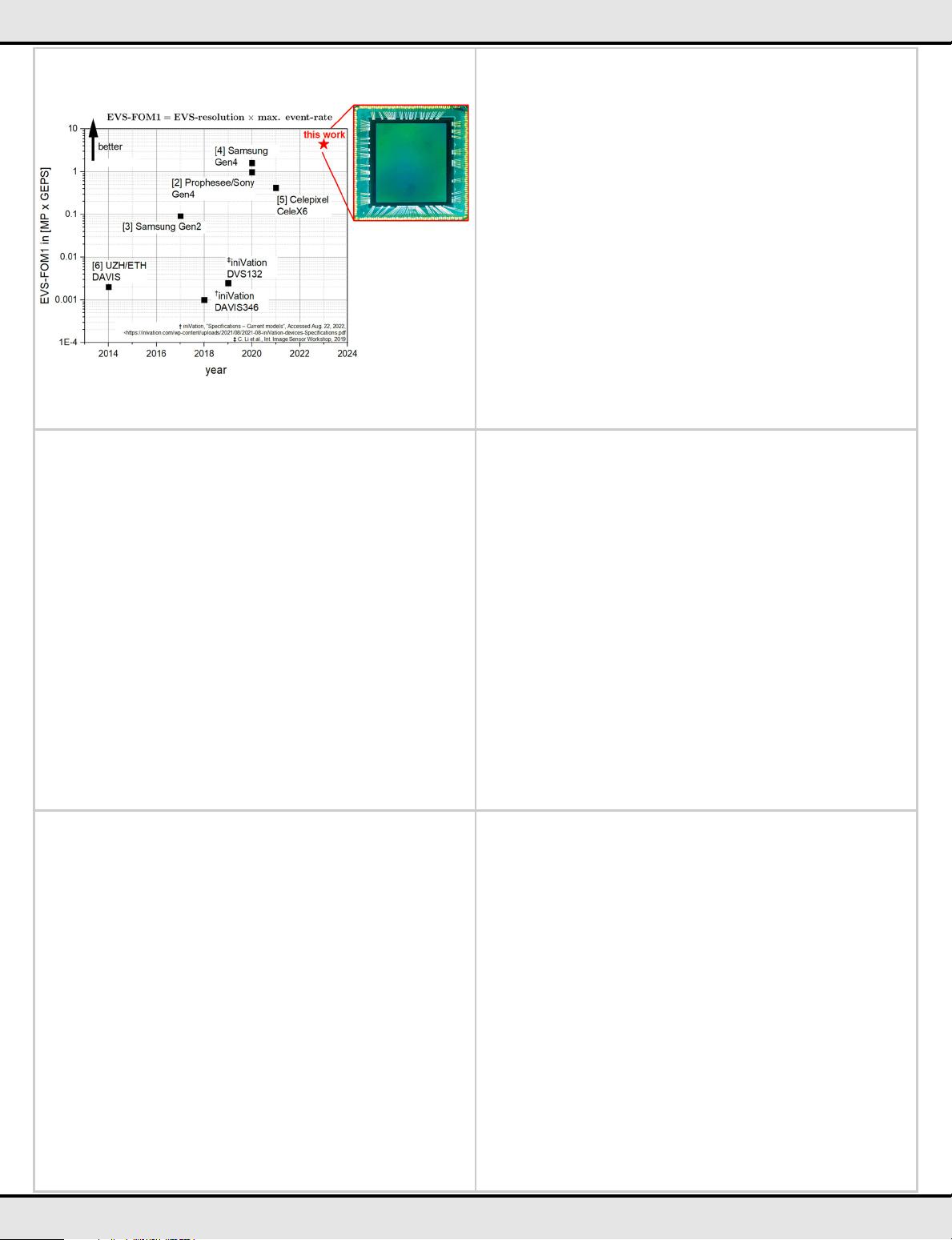

while the event pixel resolution is full HD (2.08 Mpixel). Figure 5.2.7 shows the

photograph of a chip.

References:

[1] O. Liba et al., “Handheld Mobile Photography in Very Low Light,” ACM Trans. on Graphics, vol. 38, pp. 16, 2019.

[2] T. Finateu et al., “A 1280×720 Back-Illuminated Stacked Temporal Contrast Event-based Vision Sensor with 4.86µm Pixels, 1.066GEPS Readout, Programmable Event Rate Controller and Compressive Data Formatting Pipeline,” ISSCC, pp. 112-113, Feb. 2020.

[3] Y. Suh et al., “A 1280×960 Dynamic Vision Sensor with a 4.95µm Pixel Pitch and Motion Artifact Minimization,” ISCAS, Oct. 2020.

[4] Z. Jiang, “Learning Event-Based Motion Deblurring,” CVPR, June 2020.

[5] S. Tulyakov et al., “Time Lens++: Event-based Frame Interpolation with Parametric Non-linear Flow and Multi-scale Fusion,” CVPR, June 2022.

[6] S. Chen, “Development of Event-based Sensor and Applications,” CVPR, June 2021.

[7] C. Brandli et al., “A 240×180 130dB 3µs Latency Global Shutter Spatiotemporal Vision Sensor,” JSSC, pp. 2333-2341, Oct. 2014.

978-1-6654-9016-0/23/$31.00 ©2023 IEEE