new manifold. For example, a classifier with rectangle decision

boundaries is not suitable if the new manifold is a hypersphere.

Second, the kernel classifiers are deprecated, because there is no

reason to use a more complex classifier than necessary, besides

it makes no sense to increase the data dimension after just

reducing it.

2.3. Out-of-sample extension

To extend the built classifier to the out-of-sample examples, M

3

first maps the test data X

T

onto the same manifold as where Z

S

locates, and then classifies the transformed test data Z

T

with the

classifier built in the classifier construction stage.

Fig. 1 illustrates the diagram of the manifold mapping of out-of-

sample examples. Different from the training data mapped from

the original manifold M to the target manifold H via the supervised

manifold mapping function f, the test data fails to be mapped in the

same way, because it does not have the supervised information that

f requires. To extend the supervised manifold mapping of f to the

out-of-sample test data, we construct an intermediate manifold N

between M and H as their bridge to establish an unsupervised

connection between them. The prerequisite is that there exist a

manifold mapping

z

from M to N , a manifold mapping

Z

from H to

N and its inverse mapping

Z

1

. By forcing the image of M on N to

be consistent with the image of H on N , the unsupervised

relationship between M and H can be formulated with the

mapping of

z

and

Z

1

.

The following Consistent Mapping Principle provides the deter-

mination of the best map point of arbitrary test data from manifold

M to manifold H based on the idea described above.

Definition 2 (Consistent Mapping Principle). Suppose xA X

T

is an

arbitrary test data point on manifold M, the best map point of x on

manifold H, denoted as z

, should be

z

¼ arg min

z

J

z

X

S

ðxÞ

Z

Z

S

ðzÞJ

2

ð5Þ

where

z

X

S

ðxÞ :

R

N

-

R

m

ð6Þ

is the relationship between X

S

and x A M, and

Z

Z

S

ðzÞ :

R

d

-

R

m

ð7Þ

is the relationship between Z

S

and an arbitrary data point zA H.

By defining the intermediate manifold N as the local coordinate

space of the test data, Consistent Mapping Principle minimizes the

difference between local coordinate of the test data on H and its

local coordinate on M. Therefore, the best map point of xA X

T

onto

H is the one that can best preserve the relationship of x with the

training data on M.

Although in theory the functions

z

X

S

and

Z

Z

S

should reflect the

manifold structure of M and H respectively, the fact is in most

cases little is known about the structure of M, while relatively

more information can be obtained about the structure of H from the

supervised manifold mapping function f. As a result,

z

X

S

can choose

some metric which is universally applicable to different data sets,

while

Z

Z

S

must be selected under the restriction of the known

structure of H.

3. Spectral method

Spectral method is proposed on the foundation of spectral graph

theory [27]. Its basic idea is to transform the graph segmentation

problem to the eigenvalue problem of Laplacian matrix. The

advantage of spectral method lies in its outstanding graph partition

ability without estimating any explicit model of the data

distribution.

Consider an undirected weighted graph G ¼ðV, E, WÞ with V the

vertex set, E the edge set, and W the similarity set. The problem of

graph segmentation refers to dividing G into p unconnected

subgraphs fG

1

, ..., G

p

g, so that their inner-cluster similarity can

be maximized while their outer-cluster similarity is minimized.

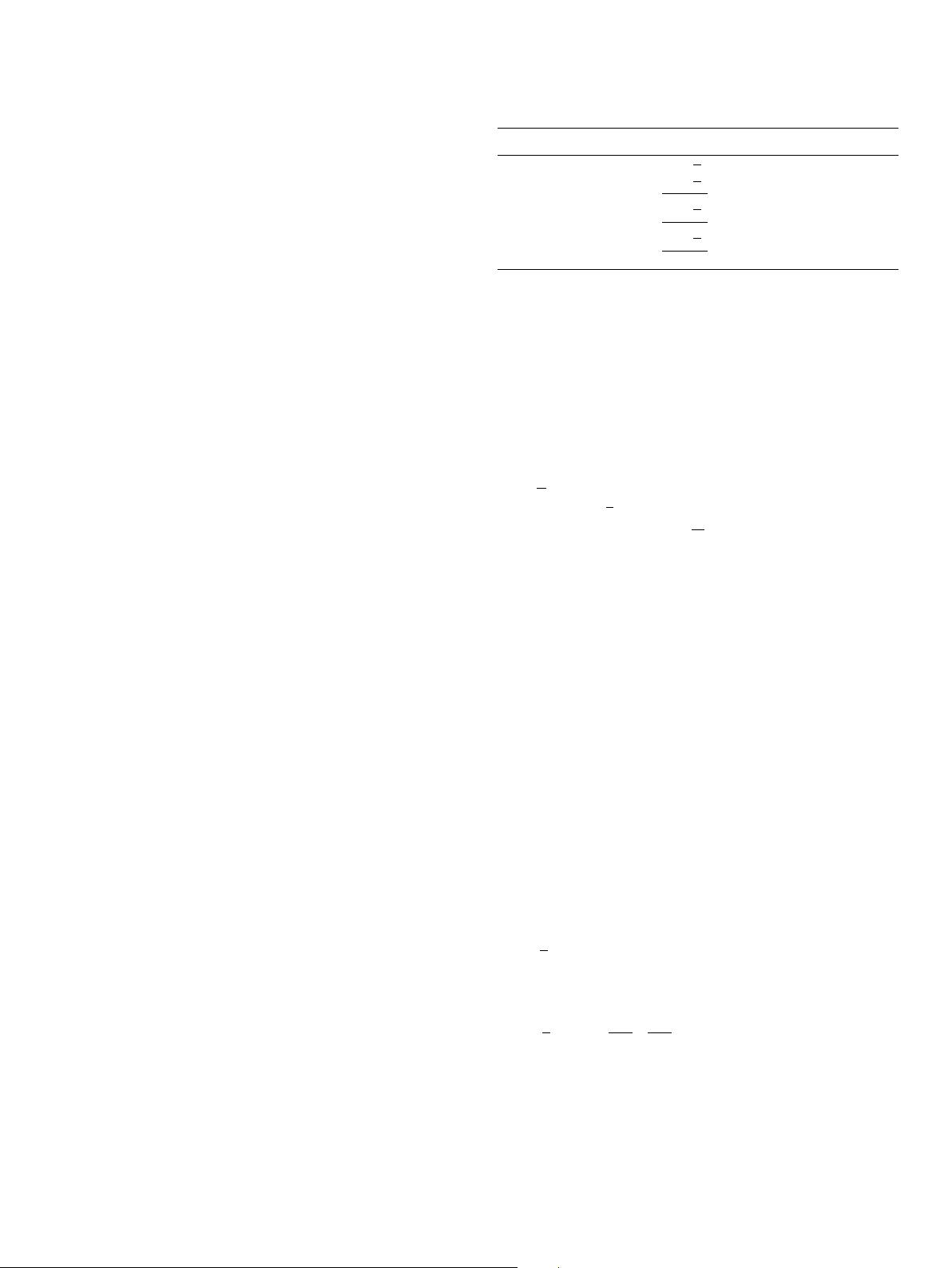

Four well-known graph partition criteria have been proposed,

including Min Cut [28], Ratio Cut [29] , Normalized Cut [30] and

Min-Max Cut [31]. Their objectives and solutions are summarized

in Table 1, in which V

i

represents the vertex set of G

i

,

cutðV

i

, V

i

Þ¼

X

i A V

i

, j A V

i

w

ij

ð8Þ

is the similarity between V

i

and V

i

¼ V=V

i

, jV

i

j is the number of the

vertices in V

i

.

Definition 3 ( Laplacian matrix). The Laplacian matrix of graph G is

L ¼

def

DW ð9Þ

where W ¼ðw

ij

Þ

nn

,

D ¼ diagðW1

n

Þ

¼

d

1

d

2

&

d

n

2

6

6

6

6

4

3

7

7

7

7

5

ð10Þ

with

d

i

¼

X

n

j ¼ 1

w

ij

ð11Þ

Definition 4 (Normalized Laplacian matrix). The normalized

Laplacian matrix of graph G is

L ¼

def

D

1=2

LD

1=2

¼ ID

1=2

WD

1=2

ð12Þ

Property 5. The Laplacian matrix L satisfies that 8 f A

R

n

f

T

Lf ¼

1

2

X

n

i, j ¼ 1

w

ij

ðf

i

f

j

Þ

2

ð13Þ

Property 6. The normalized Laplacian matrix L satisfies that 8 f A

R

n

f

T

Lf ¼

1

2

X

n

i, j ¼ 1

w

ij

f

i

ffiffiffiffi

d

i

p

f

j

ffiffiffiffi

d

j

q

0

B

@

1

C

A

2

ð14Þ

The eigenvector solutions of the multiway-cut criteria are

usually collected in an indicating matrix F for post-processing,

such as k-means clustering.

F ¼½v

1

v

2

v

p

ð15Þ

By far, there are three different graph construction methods that

are most regularly used in the spectral method.

Table 1

Objectives and solutions of four multiway-cut graph partition criteria.

Criteria Minimization objective Relaxed solution

Min Cut

P

p

i ¼ 1

cutðV

i

, V

i

Þ

min

v

1

,..., v

p

Lv ¼

l

v

Ratio Cut

P

p

i ¼ 1

cutðV

i

, V

i

Þ

jV

i

j

min

v

1

,..., v

p

Lv ¼

l

v

Normalized Cut

P

p

i ¼ 1

cutðV

i

, V

i

Þ

cutðV

i

, VÞ

min

v

1

,..., v

p

Lv ¼

l

Dv

Min–Max Cut

P

p

i ¼ 1

cutðV

i

, V

i

Þ

cutðV

i

, V

i

Þ

min

v

1

,..., v

p

Lv ¼

l

Wv

P. He et al. / Neurocomputing 74 (2011) 1450–1466 1453