972 IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 36, NO. 5, MAY 2014

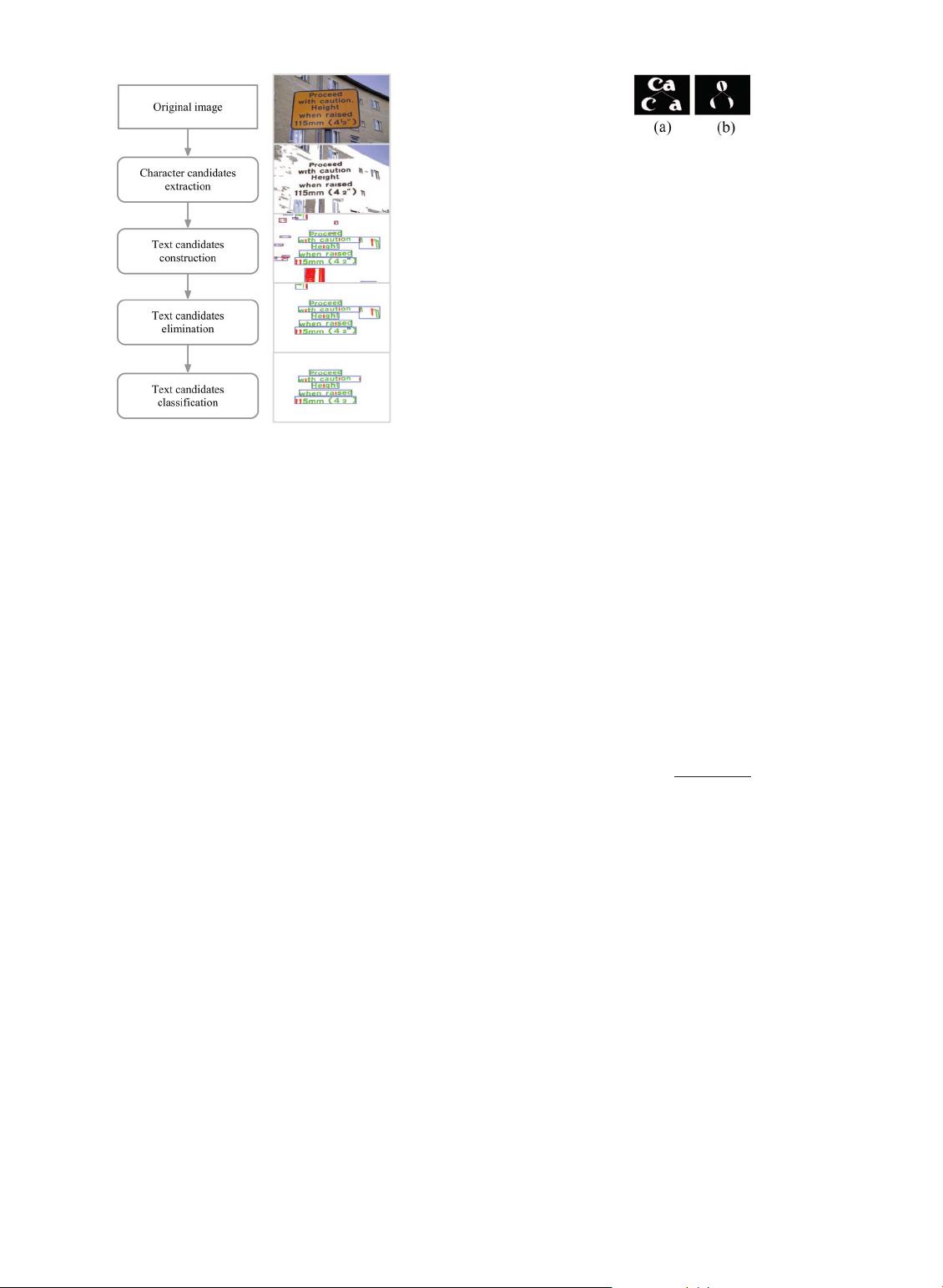

Fig. 1. Flowchart of the proposed system and results after each step of

the sample. Text candidates are labeled by blue bounding rectangles;

character candidates identified as characters are colored green, and

others red.

2) Text candidates construction. Distance weights and clus-

tering threshold are learned simultaneously using the pro-

posed metric learning algorithm; character candidates are

clustered into text candidates by the single-link clustering

algorithm using the learned parameters. More details are

presented in Section 3.2.

3) Text candidates elimination. The posterior probabilities

of text candidates corresponding to non-texts are estimated

using the character classifier and text candidates with

high non-text probabilities are removed. More details are

presented in Section 3.3.

4) Text candidates classification. Text candidates corre-

sponding to true texts are identified by the text classifier.

An AdaBoost classifier is trained to decide whether an text

candidate corresponding to the true text or not [28].

3.1 Character Candidates Extraction

3.1.1 Pruning Algorithm Overview

Repeating components is the major pitfall when the MSER

algorithm is applied as a character segmentation algorithm.

Considering the MSERs tree presented in Fig. 3(a), this fig-

ure shows that fourteen MSERs are detected for the word

“PACT” but only four of them are really interested to us.

The hierarchical structure of MSERs is quite useful for

designing a pruning algorithm. As characters cannot “con-

tain” or be “contained” by other characters in real world,

it is safe to remove children once the parent is known

to be a character, and vice versa. If the MSERs tree is

pruned by applying this kind of parent-children elimination

operation recursively, we are still “safe” and all charac-

ters are preserved after the elimination. As an example,

Fig. 3(e) shows that a set of disconnected nodes contain-

ing all the desired characters can be extracted by applying

this algorithm to the MSERs tree in Fig. 3. However, it

can be computationally expensive to identify characters,

which usually entails the computations of complex features.

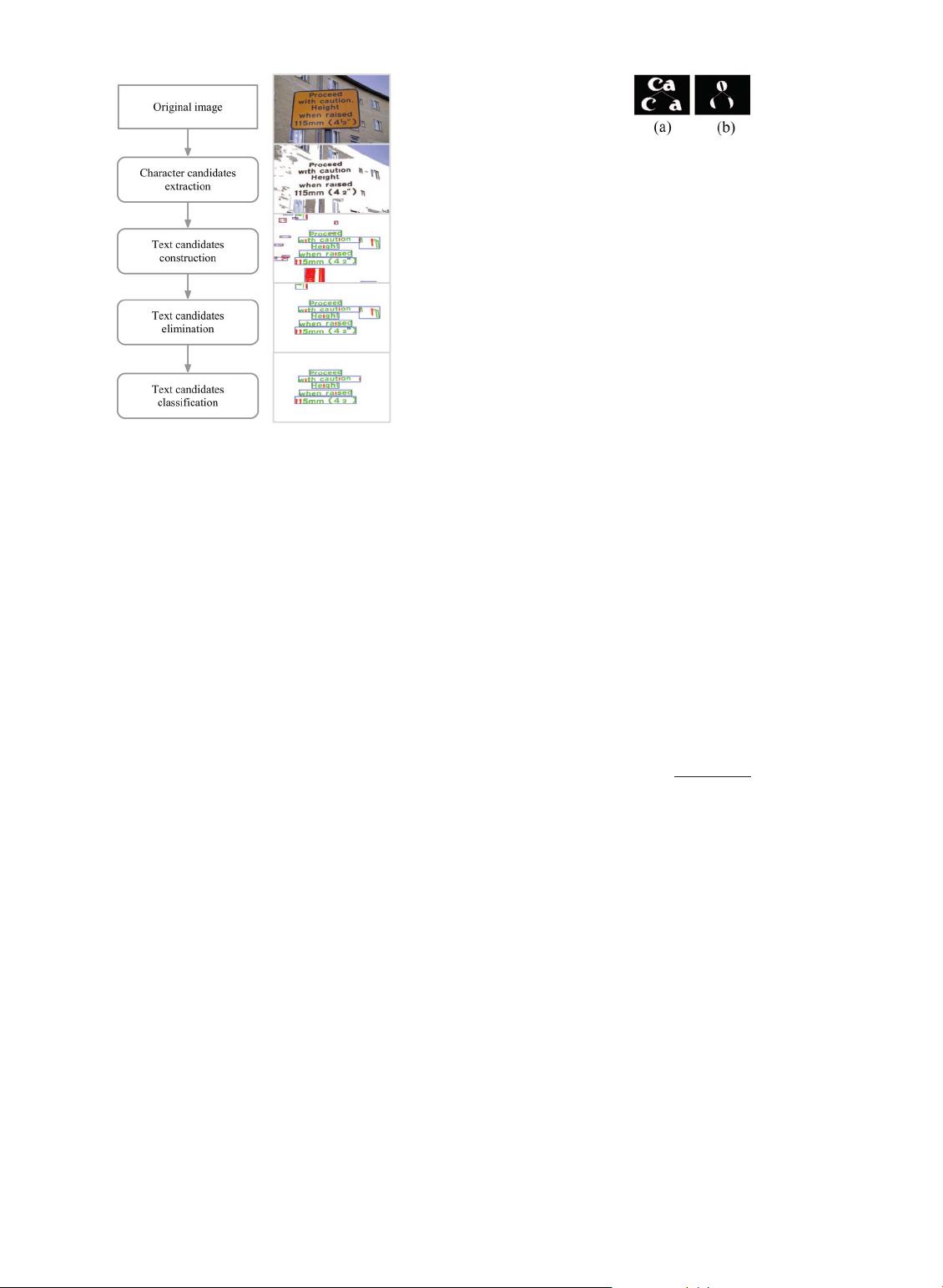

Fig. 2. Character correspondence in MSERs trees. (a) A MSERs tree

whose children corresponds to characters. (b) A MSERs tree whose

parent corresponds to character.

Fortunately, rather than identifying the character, we can

simply choose the one that is more likely to be characters

in a parent-children relationship. [21] claimed that such

pairwise relationships may not be sufficient to eliminate

non-character MSERs, and pruning should exploit some

complicated higher-order properties of text. Alternatively,

our empirical study indicates that this probability can be

fast estimated using our regularized variation scheme with

reasonable accuracy. As there are different situations (one-

child and multiple-children) in MSERs trees, we design

two algorithms based on the parent-children elimination

operation, namely the linear reduction and tree accumulation

algorithm. The linear reduction algorithm is used to remove

line segments in the MSERs tree at first and the accumu-

lation algorithm is then used to further remove repeated

characters.

3.1.2 Variation and Its Regularization

According to Matas et al.[16], an “extremal region” is a con-

nected component of an image whose pixels have either

higher or lower intensity than its outer boundary pixels.

Extremal regions of the whole image are extracted as a

rooted tree. An extremal region is in fact a set of pixels.

Its variation is defined as follows. Let R

l

be an extremal

region, B(R

l

) = (R

l

, R

l+1

,...,R

l+

) ( is a parameter) be

the branch of the tree rooted at R

l

, the variation (instability)

of R

l

is defined as

V(R

l

) =

|R

l+

− R

l

|

|R

l

|

, (1)

where |R| denotes the number of pixels in R. An extremal

region R

l

is a maximally stable extremal region if its varia-

tion is lower and more stable than its parent R

l−1

and child

R

l+1

[16]. Informally, a maximally stable extremal region is

an extremal region whose size remains virtually unchanged

over a range of intensity levels [20].

As MSERs with lower variations have sharper borders

and are more likely to be characters, one possible strat-

egy for the parent-children elimination operation is to select

parent or children based on who have the lowest variation.

However, this strategy alone will not work because MSERs

corresponding to characters may not necessarily have low-

est variations. Consider a very common situation depicted

in Fig. 2. The children of the MSERs tree in Fig. 2(a) cor-

respond to characters while the parent of the MSRE tree

in Fig. 2(b) corresponds to a character. The “minimizing

variation” strategy cannot deal with this situation because

either the parent or children may have the lowest varia-

tions. However, our experiment shows that this difficulty

can be easily overcome by variation regularization. The

basic idea is to penalize variations of MSERs with too large

or too small aspect ratios. Note that we are not requir-

ing characters to have the lowest variations globally, and