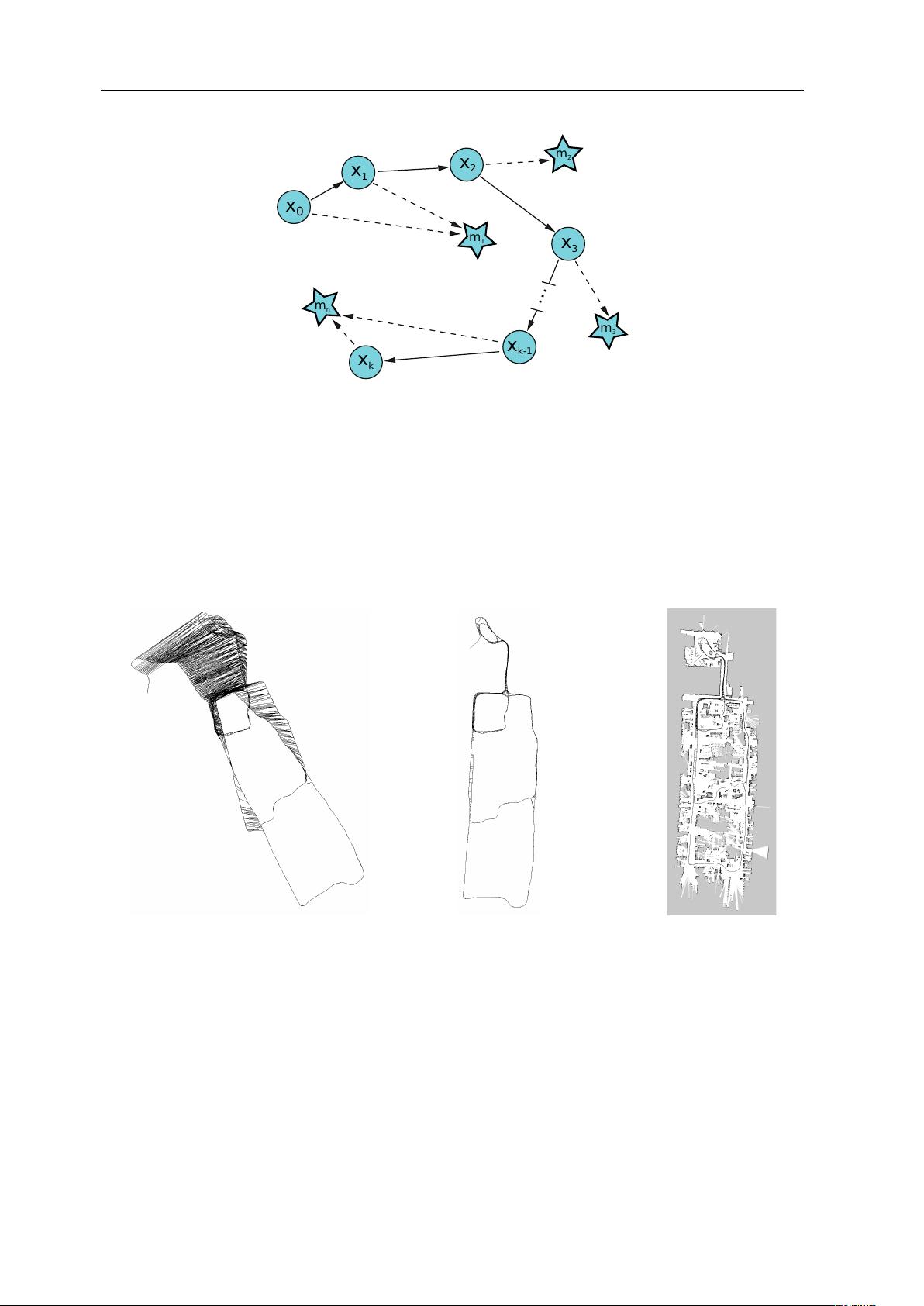

Figure 5. Example inverse depth map reconstructions obtained from DTAM using a single low sample cost volume with $S = 32$. (a) Regularised solution obtained without the sub-sample refinement is shown as a 3D mesh model with Phong shading (inverse depth map solution shown in inset). (b) Regularised solution with sub-sample refinement using the same cost volume also shown as a 3D mesh model. (c) The video frame as used in PTAM, with the point model projections of features found in the current frame and used in tracking. (d,e) Novel wide baseline texture mapped views of the reconstructed scene used for tracking in DTAM.

The refinement step is embedded in the iterative optimisation scheme by replacing the located $a^{n+1}_{u}$ with the sub-sample accurate version. It is not possible to perform this refinement post-optimisation, as at that point the quadratic coupling energy is large (due to a very small $\theta$), and so the fitted parabola is a spike situated at the minimum. As demonstrated in Figure 5, embedding the refinement step inside each iteration results in vastly increased reconstruction quality, and enables detailed reconstructions even for low sample rates, e.g. $S \le 64$.
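The effect of a small $\theta$ on the fitted parabola can be illustrated with a minimal sketch. It assumes a per-pixel energy of the form $\frac{1}{2\theta}(d - a)^2 + \lambda C(a)$ sampled at integer levels; the function names, the toy data cost `cost`, and the plain three-point parabola fit are illustrative assumptions, not necessarily the exact refinement used in DTAM.

```python
import numpy as np

def aux_energy(cost, levels, d, theta, lam):
    """Assumed per-pixel energy: quadratic coupling to d plus weighted data cost."""
    return (d - levels) ** 2 / (2.0 * theta) + lam * cost

def refine_subsample(energy):
    """Return the discrete argmin refined by a three-point parabola fit."""
    i = int(np.argmin(energy))
    if i == 0 or i == len(energy) - 1:
        return float(i)  # no neighbour on one side, keep the discrete minimum
    e_m, e_0, e_p = energy[i - 1], energy[i], energy[i + 1]
    curvature = e_m - 2.0 * e_0 + e_p
    if curvature <= 0.0:
        return float(i)  # not a strict minimum, skip refinement
    return i + 0.5 * (e_m - e_p) / curvature

levels = np.arange(32, dtype=float)   # S = 32 sample levels (indices used as values)
cost = (levels - 10.3) ** 2           # toy data cost with its minimum at 10.3
d = 12.0                              # current regularised estimate

# Refinement inside the iterations (moderate theta) versus after convergence (tiny theta).
during = refine_subsample(aux_energy(cost, levels, d, theta=0.2, lam=1.0))
after = refine_subsample(aux_energy(cost, levels, d, theta=1e-4, lam=1.0))
print(during, after)
```

With $\theta = 0.2$ the refined minimum lies between `d` and the data-cost minimum (about 11.51 in this toy setup), so the data term still contributes sub-sample information. With $\theta = 10^{-4}$ the coupling term dominates and the refined minimum is pinned to `d` (about 12.00), which is the spike described above.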

2.2.6 Setting Parameter Values and Post Processing

Gradient ascent/descent time-steps $\sigma_q$, $\sigma_d$ are set optimally for the update scheme provided as detailed in [3]. Various values of $\beta$ can be used to drive $\theta$ towards 0 as iterations increase while ensuring $\theta^{n+1} < \theta^{n}(1 - \beta n)$. Larger values result in lower quality reconstructions, while smaller values of $\beta$ with increased iterations result in higher quality. In our experiments we have set $\beta = 0.001$ while $\theta^{n} \ge 0.001$, else $\beta = 0.0001$, resulting in a faster initial convergence. We use $\theta^{0} = 0.2$ and $\theta^{\text{end}} = 1.0 \times 10^{-4}$. $\lambda$ should reflect the data term quality and is set dynamically to $1/(1 + 0.5\bar{d})$, where $\bar{d}$ is the minimum scene depth predicted by the current scene model. For the first key-frame we set $\lambda = 1$. This dynamically altered data term weighting sensibly increases regularisation power for more distant scene reconstructions that, assuming similar camera motions for both closer and further scenes, will have a poorer quality data term.
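The parameter settings above can be written down as a short sketch. It treats the stated relation as the update $\theta^{n+1} = \theta^{n}(1 - \beta n)$ and starts the iteration counter at $n = 1$; both choices, along with the function names `theta_schedule` and `data_weight`, are assumptions made for illustration.

```python
def theta_schedule(theta0=0.2, theta_end=1.0e-4, max_iters=10_000):
    """List theta^0, theta^1, ... until theta no longer exceeds theta_end."""
    thetas = [theta0]
    n = 1  # assumed: iteration counter starts at 1
    while thetas[-1] > theta_end and n <= max_iters:
        theta = thetas[-1]
        # Larger beta early on for faster initial convergence, smaller beta later.
        beta = 0.001 if theta >= 0.001 else 0.0001
        thetas.append(theta * (1.0 - beta * n))
        n += 1
    return thetas

def data_weight(min_depth=None):
    """lambda = 1 / (1 + 0.5 * d_bar); lambda = 1 for the first key-frame."""
    if min_depth is None:  # no scene model yet
        return 1.0
    return 1.0 / (1.0 + 0.5 * min_depth)

thetas = theta_schedule()
print(len(thetas) - 1, "iterations, final theta =", thetas[-1])
print(data_weight(), data_weight(2.0))
```

Because the decay factor depends on $n$, the schedule shrinks $\theta$ slowly at first and faster in later iterations. `data_weight` falls as the minimum scene depth grows, which shifts the balance towards the regulariser for distant scenes.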

Finally, we note that optimisation iterations can be interleaved with updating the cost volume average, enabling the surface (though in a non fully converged state) to be made available for use in tracking after only a single $\rho$ computation. For use in tracking, we compute a triangle mesh from the inverse depth map, culling oblique edges as described in [9].
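As a rough illustration of the meshing step, the sketch below back-projects an inverse depth map through a pinhole camera, triangulates the pixel grid, and drops triangles that are nearly edge-on to the viewing ray. The culling criterion of [9] is not reproduced here: the angle test, the threshold `max_angle_deg`, the intrinsics matrix `K`, and the function name are all assumptions.

```python
import numpy as np

def mesh_from_inverse_depth(inv_depth, K, max_angle_deg=80.0):
    """Triangulate an inverse depth map; cull triangles oblique to the view ray."""
    h, w = inv_depth.shape
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = 1.0 / inv_depth
    # Back-project every pixel to a 3D vertex in the camera frame.
    verts = np.stack([(u - cx) / fx * z, (v - cy) / fy * z, z], axis=-1).reshape(-1, 3)

    # Two triangles per pixel quad.
    idx = np.arange(h * w).reshape(h, w)
    a, b, c, d = idx[:-1, :-1], idx[:-1, 1:], idx[1:, :-1], idx[1:, 1:]
    tris = np.concatenate([
        np.stack([a, c, b], axis=-1).reshape(-1, 3),
        np.stack([b, c, d], axis=-1).reshape(-1, 3),
    ])

    # Angle between each triangle normal and the ray to its centroid.
    p0, p1, p2 = verts[tris[:, 0]], verts[tris[:, 1]], verts[tris[:, 2]]
    normals = np.cross(p1 - p0, p2 - p0)
    centroids = (p0 + p1 + p2) / 3.0
    cos_angle = np.abs(np.einsum("ij,ij->i", normals, centroids)) / (
        np.linalg.norm(normals, axis=1) * np.linalg.norm(centroids, axis=1) + 1e-12
    )
    keep = cos_angle >= np.cos(np.radians(max_angle_deg))
    return verts, tris[keep]

K = np.array([[100.0, 0.0, 2.0], [0.0, 100.0, 2.0], [0.0, 0.0, 1.0]])
flat = np.full((5, 5), 0.5)          # fronto-parallel plane at depth 2
step = flat.copy()
step[:, 2:] = 0.05                   # right part jumps to depth 20
_, tris_flat = mesh_from_inverse_depth(flat, K)
_, tris_step = mesh_from_inverse_depth(step, K)
print(len(tris_flat), len(tris_step))
```

On the flat plane every triangle survives, while the triangles that bridge the depth discontinuity in `step` are removed, leaving a gap instead of a stretched surface between foreground and background.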

2.3. Dense Tracking

Given a dense model consisting of one or more keyframes, we can synthesise realistic novel views over wide baselines by projecting the entire model into a virtual camera. Since such a model is maintained live, we benefit from a fully predictive surface representation, handling occluded regions and back faces naturally. We estimate the pose of a live camera by finding the parameters of motion which generate a synthetic view which best matches the live video image.

We refine the live camera pose in two stages: first with a constrained inter-frame rotation estimation, and second with an accurate 6DOF full pose refinement against the model. Both are formulated as iterative Lucas-Kanade style non-linear least-squares problems, iteratively minimising an every-pixel photometric cost function. To converge to the global minimum, we must initialise the system within the convex basin of the true solution. We use a coarse-to-fine strategy over a power-of-two image pyramid for efficiency and to increase our range of convergence.
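The structure of such a coarse-to-fine photometric alignment can be sketched on a deliberately simplified problem: estimating a single 1D translation between two signals by Gauss-Newton iterations over a power-of-two pyramid. This is a toy stand-in for the rotation and 6DOF estimation described here, and all function names and the test signal are assumptions.

```python
import numpy as np

def downsample(signal):
    """Halve the resolution by averaging neighbouring pairs."""
    n = len(signal) // 2 * 2
    return 0.5 * (signal[0:n:2] + signal[1:n:2])

def pyramid(signal, levels):
    """Return [finest, ..., coarsest] power-of-two pyramid."""
    out = [np.asarray(signal, dtype=float)]
    for _ in range(levels - 1):
        out.append(downsample(out[-1]))
    return out

def gauss_newton_shift(ref, live, shift=0.0, iters=10):
    """Minimise sum_x (live(x + shift) - ref(x))^2 over the scalar shift."""
    x = np.arange(len(ref), dtype=float)
    for _ in range(iters):
        warped = np.interp(x + shift, x, live)  # warp live signal by current shift
        r = warped - ref                        # per-sample photometric residual
        J = np.gradient(warped)                 # d(warped)/d(shift)
        JtJ = J @ J
        if JtJ < 1e-12:
            break
        shift -= (J @ r) / JtJ                  # Gauss-Newton update
    return shift

def coarse_to_fine_shift(ref, live, levels=3, iters=10):
    """Estimate the shift at the coarsest level first, then refine at finer levels."""
    shift = 0.0
    for ref_l, live_l in zip(reversed(pyramid(ref, levels)),
                             reversed(pyramid(live, levels))):
        # A shift of s samples at one level is 2s samples at the next finer level.
        shift = gauss_newton_shift(ref_l, live_l, 2.0 * shift, iters)
    return shift

x = np.arange(100, dtype=float)
ref = np.exp(-(x - 50.0) ** 2 / 128.0)
live = np.exp(-(x - 56.5) ** 2 / 128.0)   # same bump, moved by 6.5 samples
estimate = coarse_to_fine_shift(ref, live)
print(estimate)
```

Each pyramid level halves the apparent displacement in samples and reduces the number of residuals, so the coarse levels are cheap and tolerate larger motions, while the finest level supplies the final accuracy.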

2.3.1 Pose Estimation

We first follow the alignment method of [8] between consecutive frames to obtain rotational odometry at lower levels within the pyramid, offering resilience to motion blur since consecutive images are similarly blurred. This optimisation is more stable than 6DOF estimation when the number of pixels considered is low, helping to converge for large pixel motions, even when the true motion is not strictly rotational (Figure 6). A similar step is performed before feature matching in PTAM’s tracker, computing first the inter-frame 2D image transform and fitting a 3D rotation [7].

The rotation estimate helps inform our current best estimate of the live camera pose, $\hat{T}_{wl}$. We project the dense model into a virtual camera $v$ at location $T_{wv} = \hat{T}_{wl}$, with colour