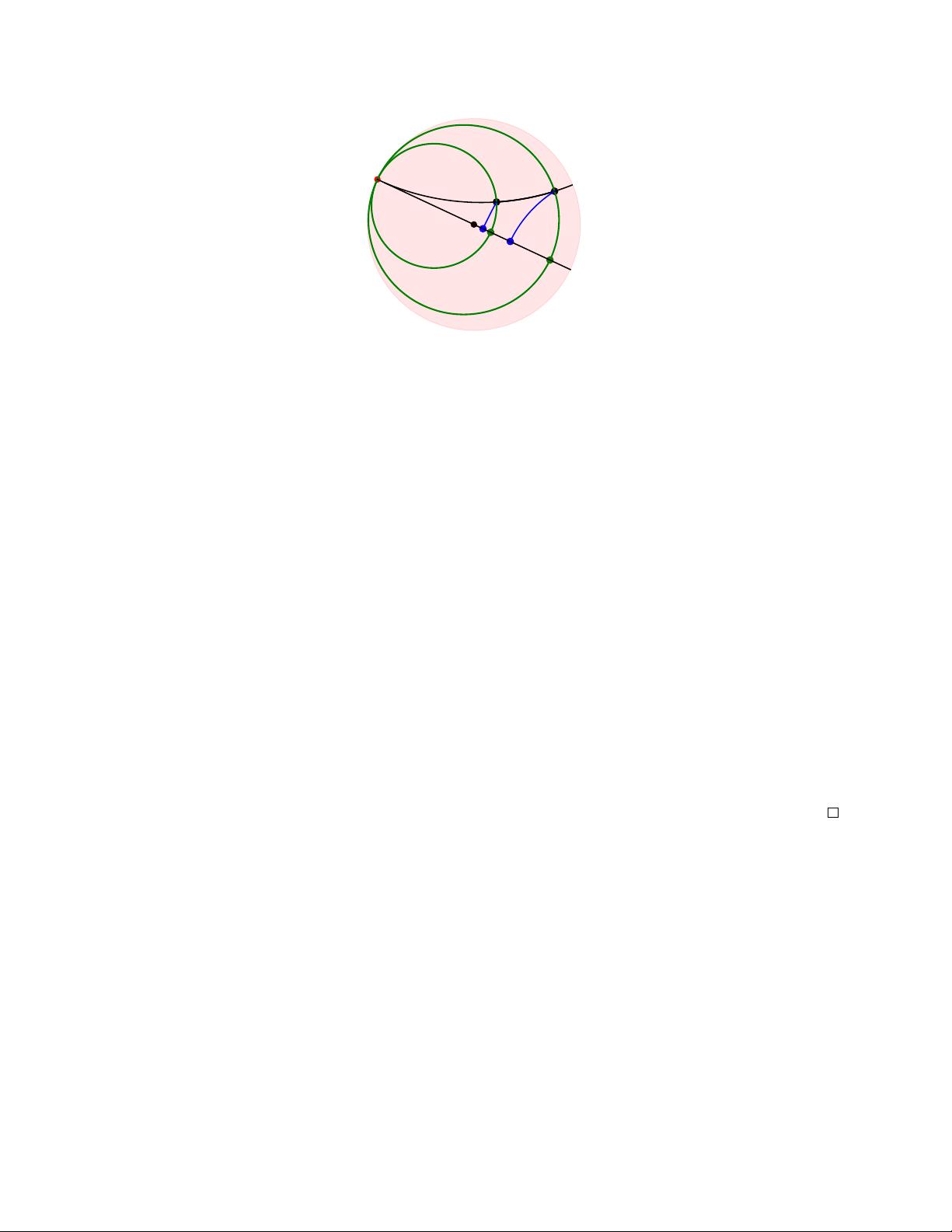

It turns out that this intersection generally consists of two points instead of one. When that happens, one of them will be

strictly closer to the base point b, and we define π

H

b,p

1

,...,p

K

(x) to be that point.

As with Proposition 3.4,

π

H

b,p

1

,...,p

K

(·)

preserves distances along

K

-dimensional manifolds (Corollary A.10). In contrast,

geodesic projections in hyperbolic spaces never preserve distances (except between points already in the target):

Proposition 3.5.

Let

M ⊂ H

d

be a geodesic submanifold. Then every geodesic segment of distance at least

r

from

M

gets at least cosh(r) times shorter under the geodesic projection π

G

M

(·) to M:

length(π

G

M

(I)) ≤

1

cosh(r)

length(I).

In particular, the shrink factor grows exponentially as the segment I moves away from M.

The proof is in Appendix B.

Computation

Interestingly, horosphere projections can be computed without actually computing the horospheres.

The key idea is that if we let

P = GH(p

1

, . . . , p

K

)

be the geodesic hull of the horospheres’ centers, then the intersection

S(p

1

, x) ∩ · · · ∩ S(p

K

, x)

is simply the orbit of

x

under the rotations around

P

. (This is true for the same reason that

spheres whose centers lie on the same axis must intersect along a circle around that axis). Thus,

π

H

b,p

1

,...,p

K

(·)

can be

viewed as the map that rotates x around until it hits M. It follows that it can be computed by:

1. Find the geodesic projection c = π

G

P

(x) of x onto P .

2. Find the geodesic α on M that is orthogonal to P at c.

3. Among the two points on α whose distance to c equals d

H

(x, c), returns the one closer to b.

The detailed computations and proof that this recovers horospherical projections are provided in Appendix A.

3.3 Intrinsic Variance Objective

In Euclidean PCA, directions are chosen to maximally preserve information from the original data. In particular, PCA

chooses directions that maximize the Euclidean variance of projected data. To generalize this to hyperbolic geometry,

we define an analog of variance that is intrinsic, i.e. dependent only on the distances between data points. As we will

see in Section 4, having an intrinsic objective helps make our algorithm location (or base point) independent.

The usual notion of Euclidean variance is the squared sum of distances to the mean of the projected datapoints.

Generalizing this is challenging because non-Euclidean spaces do not have a canonical choice of mean. Previous works

have generalized variance either by using the unexplained variance or Fr

´

echet variance. The former is the squared sum

of residual distances to the projections, and thus avoids computing a mean. However, it is not intrinsic. The latter is

intrinsic [10] but involves finding the Fr

´

echet mean, which is not necessarily a canonical notion of mean and can only

be computed by gradient descent.

Our approach uses the observation that in Euclidean space:

σ

2

(S) =

1

n

X

x∈S

kx − µ(S)k

2

=

1

n

2

X

x,y∈S

kx − yk

2

.

Thus, we propose the following generalization of variance:

σ

2

H

(S) =

1

n

2

X

x,y∈S

d

H

(x, y)

2

. (4)

This function agrees with the usual variance in Euclidean space, while being a function of distances only. Thus it is

well defined in non-Euclidean space, is easily computed, and, as we will show next, has the desired invariance due to

isometry properties of horospherical projections.

7