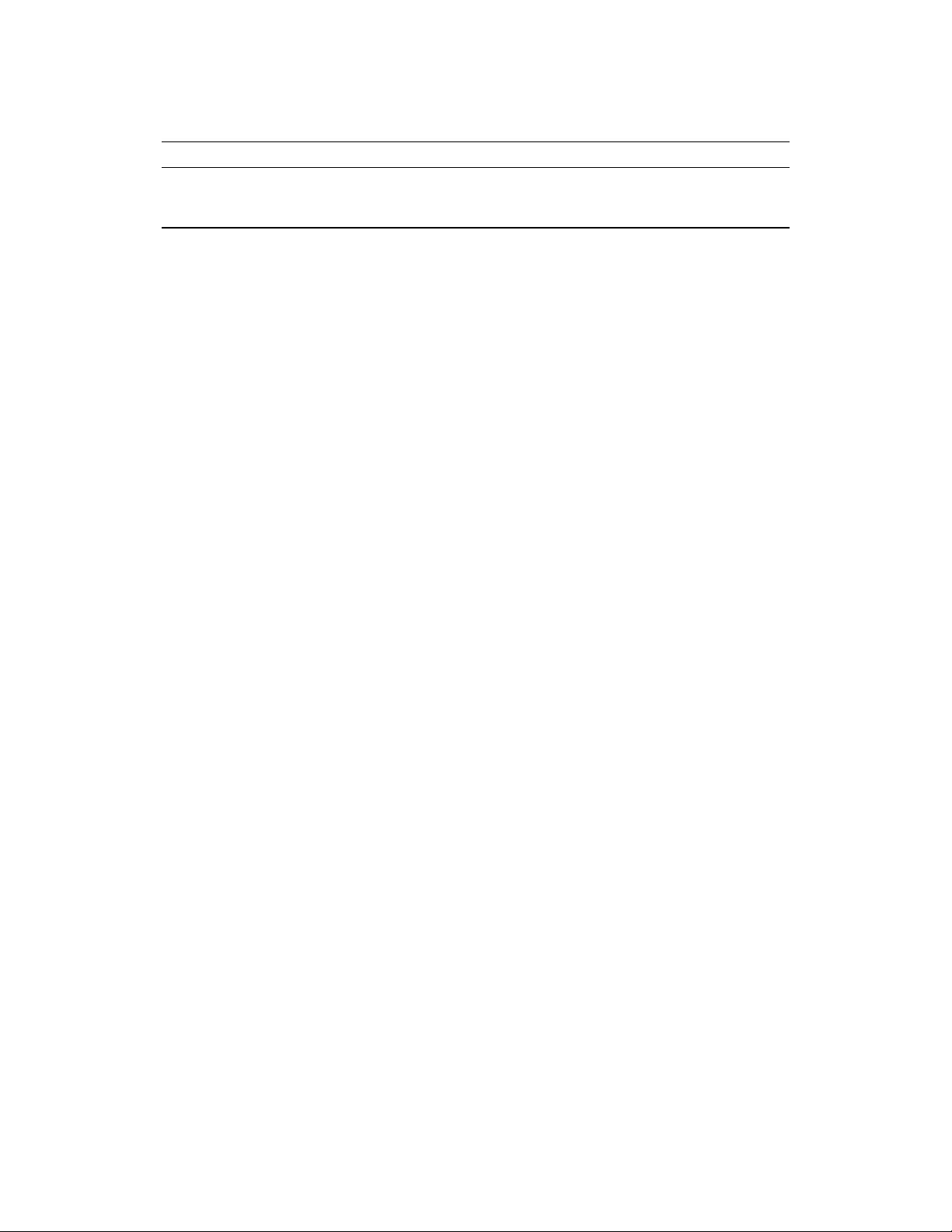

Raffel, Shazeer, Roberts, Lee, Narang, Matena, Zhou, Li and Liu

| | GLUE | CNNDM | SQuAD | SGLUE | EnDe | EnFr | EnRo |
|---|---|---|---|---|---|---|---|
| ⋆ Baseline average | 83.28 | 19.24 | 80.88 | 71.36 | 26.98 | 39.82 | 27.65 |
| Baseline standard deviation | 0.235 | 0.065 | 0.343 | 0.416 | 0.112 | 0.090 | 0.108 |
| No pre-training | 66.22 | 17.60 | 50.31 | 53.04 | 25.86 | 39.77 | 24.04 |

Table 1: Average and standard deviation of scores achieved by our baseline model and training procedure. For comparison, we also report performance when training on each task from scratch (i.e. without any pre-training) for the same number of steps used to fine-tune the baseline model. All scores in this table (and every table in our paper except Table 14) are reported on the validation sets of each data set.

number of experiments we run. As a cheaper alternative, we train our baseline model 10 times from scratch (i.e. with different random initializations and data set shuffling) and assume that the variance over these runs of the base model also applies to each experimental variant. We don’t expect most of the changes we make to have a dramatic effect on the inter-run variance, so this should provide a reasonable indication of the significance of different changes. Separately, we also measure the performance of training our model for $2^{18}$ steps (the same number we use for fine-tuning) on all downstream tasks without pre-training. This gives us an idea of how much pre-training benefits our model in the baseline setting.
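The variance estimate described above amounts to pooling repeated runs of the baseline per benchmark. The following is a minimal sketch, assuming each run produces one scalar score per benchmark and that the sample (n - 1) standard deviation is used; the paper does not state which estimator it uses, and `summarize_runs` and `summarize_benchmarks` are hypothetical helpers, not part of any released code.

```python
import statistics
from typing import Dict, Mapping, Sequence, Tuple


def summarize_runs(scores: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) of one benchmark's per-run scores.

    Each score is assumed to come from an independent training run of the
    baseline that differs only in random initialization and data set shuffling.
    """
    if len(scores) < 2:
        raise ValueError("at least two runs are needed to estimate a standard deviation")
    # statistics.stdev divides by n - 1 (sample estimator); pstdev would divide by n.
    return statistics.mean(scores), statistics.stdev(scores)


def summarize_benchmarks(
    runs: Mapping[str, Sequence[float]],
) -> Dict[str, Tuple[float, float]]:
    """Apply summarize_runs to every benchmark, e.g. {"GLUE": [score_1, ..., score_10]}."""
    return {name: summarize_runs(scores) for name, scores in runs.items()}
```

Given ten scores per benchmark, this produces an average and a standard deviation per column; under the stated assumption, that single standard deviation is then reused for every experimental variant instead of being re-estimated for each one.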

When reporting results in the main text, we report only a subset of the scores across all the benchmarks to conserve space and ease interpretation. For GLUE and SuperGLUE, we report the average score across all subtasks (as stipulated by the official benchmarks) under the headings “GLUE” and “SGLUE”. For all translation tasks, we report the BLEU score (Papineni et al., 2002) as provided by SacreBLEU v1.3.0 (Post, 2018) with “exp” smoothing and “intl” tokenization. We refer to scores for WMT English to German, English to French, and English to Romanian as EnDe, EnFr, and EnRo, respectively. For CNN/Daily Mail, we find the performance of models on the ROUGE-1-F, ROUGE-2-F, and ROUGE-L-F metrics (Lin, 2004) to be highly correlated, so we report the ROUGE-2-F score alone under the heading “CNNDM”. Similarly, for SQuAD we find the “exact match” and “F1” scores to be highly correlated, so we report the “exact match” score alone. We provide every score achieved on every task for all experiments in Table 16, Appendix E.
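To make the two single-number summaries concrete, here is a simplified sketch of SQuAD exact match and ROUGE-2 F-measure. It assumes the answer normalization used by the official SQuAD v1.1 evaluation script (lowercasing, removing punctuation and the articles "a", "an", "the", collapsing whitespace) and plain whitespace tokenization for ROUGE; it omits stemming and other details of reference ROUGE implementations, so its numbers should not be expected to match published CNNDM scores exactly. All function names are hypothetical.

```python
import re
import string
from collections import Counter
from typing import List, Sequence

_PUNCT = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, ground_truths: Sequence[str]) -> float:
    """1.0 if the normalized prediction equals any normalized reference answer."""
    pred = normalize_answer(prediction)
    return float(any(pred == normalize_answer(gt) for gt in ground_truths))


def corpus_exact_match(
    predictions: Sequence[str], references: Sequence[Sequence[str]]
) -> float:
    """Percentage of examples whose prediction exactly matches one of its references."""
    if not predictions:
        raise ValueError("no predictions to score")
    scores = [exact_match(p, refs) for p, refs in zip(predictions, references)]
    return 100.0 * sum(scores) / len(scores)


def bigrams(tokens: List[str]) -> Counter:
    """Multiset of adjacent token pairs."""
    return Counter(zip(tokens, tokens[1:]))


def rouge2_f(candidate: str, reference: str) -> float:
    """ROUGE-2 F1: harmonic mean of clipped bigram precision and recall."""
    cand = bigrams(candidate.lower().split())
    ref = bigrams(reference.lower().split())
    overlap = sum((cand & ref).values())  # '&' keeps the minimum count per bigram
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)
```

Because exact match is a 0/1 score per example, `corpus_exact_match` is simply the percentage of matched examples, which puts it on the same scale as the SQuAD column.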

Our results tables are all formatted so that each row corresponds to a particular experimental configuration with columns giving the scores for each benchmark. We will include the mean performance of the baseline configuration in most tables. Wherever a baseline configuration appears, we will mark it with a ⋆ (as in the first row of Table 1). We will also boldface any score that is within two standard deviations of the maximum (best) in a given experiment.
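The boldface rule can be written as a short function. This sketch assumes that higher is better for every metric (true of all benchmarks reported here) and, following the assumption that the baseline's inter-run variance applies to every variant, uses the baseline standard deviation of each benchmark as σ. `bold_mask` is a hypothetical helper; the scores are the baseline-average, no-pre-training, and standard-deviation rows of Table 1.

```python
from typing import Dict, List


def bold_mask(scores: List[float], std: float) -> List[bool]:
    """Flag each score that lies within two standard deviations of the best (maximum) score."""
    best = max(scores)
    return [best - s <= 2 * std for s in scores]


# Columns of Table 1 as [baseline average, no pre-training].
table1: Dict[str, List[float]] = {
    "GLUE": [83.28, 66.22],
    "CNNDM": [19.24, 17.60],
    "SQuAD": [80.88, 50.31],
    "SGLUE": [71.36, 53.04],
    "EnDe": [26.98, 25.86],
    "EnFr": [39.82, 39.77],
    "EnRo": [27.65, 24.04],
}
# "Baseline standard deviation" row of Table 1, reused as sigma for every row.
baseline_std: Dict[str, float] = {
    "GLUE": 0.235, "CNNDM": 0.065, "SQuAD": 0.343, "SGLUE": 0.416,
    "EnDe": 0.112, "EnFr": 0.090, "EnRo": 0.108,
}

for benchmark, column in table1.items():
    print(benchmark, bold_mask(column, baseline_std[benchmark]))
```

Only in the EnFr column does the no-pre-training row also fall inside the band (39.82 - 39.77 = 0.05, below 2 × 0.090 = 0.18); in every other column the gap is many standard deviations wide. This is consistent with WMT English to French being the one benchmark where pre-training does not give a clear gain.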

Our baseline results are shown in Table 1. Overall, our results are comparable to existing models of similar size. For example, BERT$_{\mathrm{BASE}}$ achieved an exact match score of 80.8 on SQuAD and an accuracy of 84.4 on MNLI-matched, whereas we achieve 80.88 and 84.24, respectively (see Table 16). Note that we cannot directly compare our baseline to BERT$_{\mathrm{BASE}}$ because ours is an encoder-decoder model and was pre-trained for roughly $1/4$ as many steps. Unsurprisingly, we find that pre-training provides significant gains across almost all benchmarks. The only exception is WMT English to French, which is a large
