1384 IEEE TRANSACTIONS ON AUDIO, SPEECH, AND LANGUAGE PROCESSING, VOL. 19, NO. 5, JULY 2011

model is usually called the sinusoids plus noise model, or deterministic plus stochastic decomposition. In this model, the sinusoidal part corresponds to the “deterministic” part of the signal due to the structured nature of this model. The remaining signal is the sinusoidal noise component, also referred to here as the residual or sinusoidal error signal. It is the “stochastic” part of the audio signal: it is very difficult to model accurately, yet it is essential for high-quality audio synthesis.

Accurately modeling the stochastic component has been examined both for the single-channel case, e.g., [2], [20], [21], and for the multi-channel audio case [3]. In practice, after the sinusoidal parameters are estimated, the noise component is computed by subtracting the sinusoidal component from the original signal. Note that in this paper we are only interested in encoding the sinusoidal part.
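As a minimal sketch of this subtraction step, the Python code below resynthesizes a frame from a list of sinusoidal parameters and subtracts it from the original frame. The parameter layout (frequency in Hz, amplitude, phase) and the helper names `synthesize_sinusoids` and `noise_component` are hypothetical and chosen only for illustration; the framing, windowing, and parameterization used by the actual coder may differ.

```python
import numpy as np


def synthesize_sinusoids(params, num_samples, fs):
    """Sum of a * cos(2*pi*f*t/fs + phi) over (f, a, phi) tuples in params."""
    t = np.arange(num_samples)
    out = np.zeros(num_samples)
    for freq_hz, amp, phase in params:
        out += amp * np.cos(2.0 * np.pi * freq_hz * t / fs + phase)
    return out


def noise_component(frame, params, fs):
    """Residual (sinusoidal error) signal: original frame minus the sinusoids."""
    frame = np.asarray(frame, dtype=float)
    return frame - synthesize_sinusoids(params, len(frame), fs)


if __name__ == "__main__":
    fs = 16000.0
    n = 512
    rng = np.random.default_rng(0)
    params = [(500.0, 1.0, 0.2), (1250.0, 0.3, -1.1)]
    noise = 0.01 * rng.standard_normal(n)
    frame = synthesize_sinusoids(params, n, fs) + noise
    residual = noise_component(frame, params, fs)
    print(np.allclose(residual, noise))  # True
```

When the sinusoidal parameters describe the frame exactly, the residual is just the added noise; with real audio the residual also contains any modeling error of the sinusoidal part.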

A. Single-Channel Sinusoidal Selection

To perform single-channel sinusoidal analysis, we employed

state-of-the-art psychoacoustic analysis based on [22]. In the

th

iteration, the algorithm picks a perceptually optimal sinusoidal

component frequency, amplitude, and phase. This choice mini-

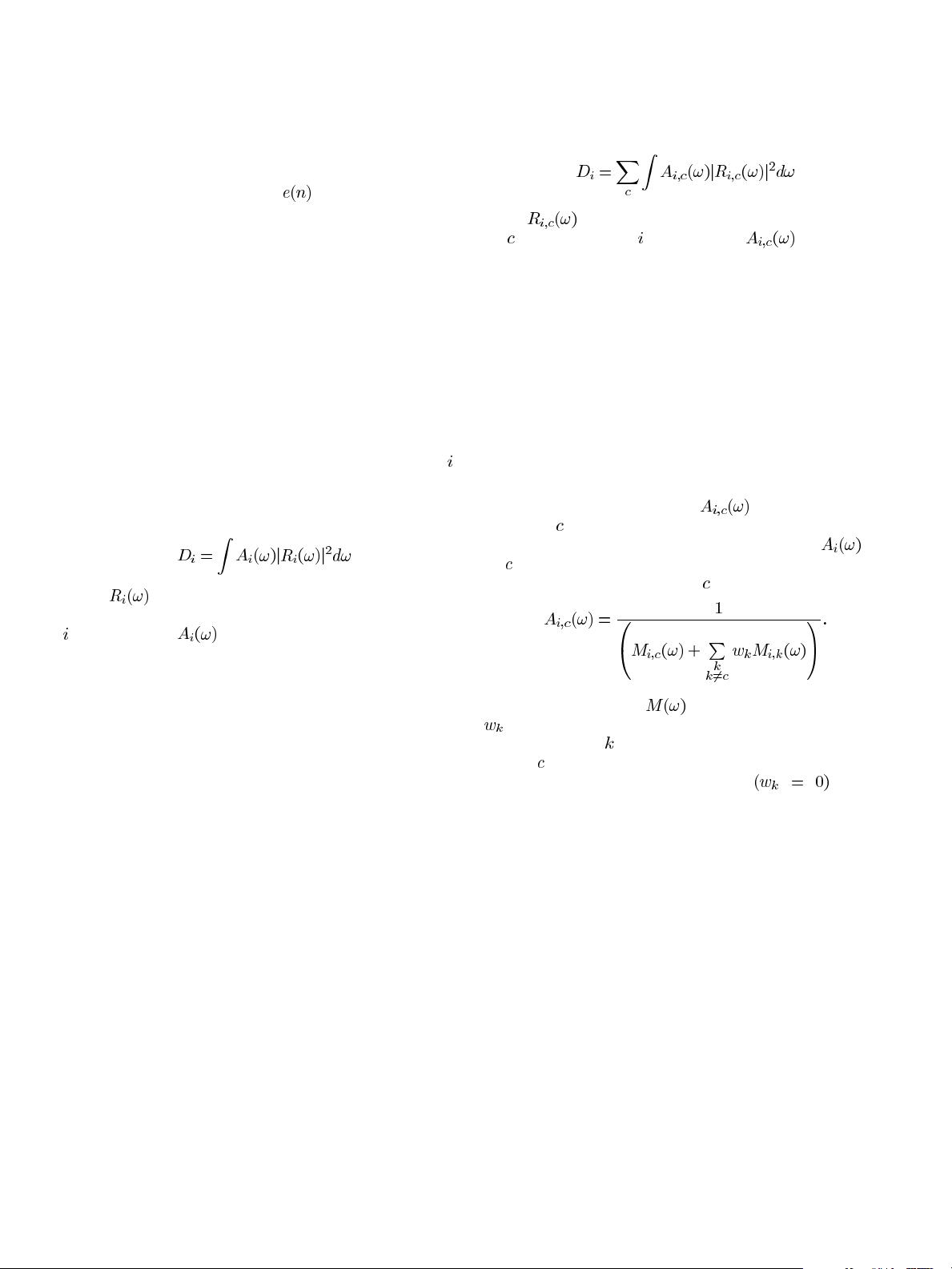

mizes the perceptual distortion measure

(2)

where

is the Fourier transform of the residual signal

(original frame minus the currently selected sinusoids) after the

th iteration, and is a frequency weighting function set

as the inverse of the current masking threshold energy.
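To make the selection rule concrete, the following Python sketch performs a greedy, perceptually weighted selection restricted to DFT bins. It is a simplified illustration under stated assumptions, not the method of [22]: it uses a rectangular window and bin-centred sinusoids, so the optimal amplitude and phase follow directly from the DFT coefficient, and it takes the weights $A[k]$ as a fixed, symmetric input standing in for the inverse masking threshold, which the actual algorithm recomputes in every iteration. The function name `weighted_bin_pursuit` is hypothetical.

```python
import numpy as np


def weighted_bin_pursuit(frame, weights, num_sinusoids):
    """Greedy, bin-restricted selection of sinusoids (illustrative sketch).

    frame:   real-valued frame of length N (rectangular window assumed).
    weights: length-N array of nonnegative weights A[k], assumed symmetric
             (A[k] == A[N - k]); a stand-in for the inverse masking threshold.
    Returns a list of (bin, amplitude, phase) tuples and the time-domain residual.
    """
    frame = np.asarray(frame, dtype=float)
    weights = np.asarray(weights, dtype=float)
    n = len(frame)
    residual_spec = np.fft.fft(frame)
    candidates = np.arange(1, (n + 1) // 2)  # positive bins, excluding DC and Nyquist
    params = []
    for _ in range(num_sinusoids):
        # Weighted residual energy per candidate bin. With symmetric weights,
        # removing a bin-centred sinusoid at bin k lowers sum_k A[k] |R[k]|^2
        # by twice this score, so the largest score is the greedy optimum.
        scores = weights[candidates] * np.abs(residual_spec[candidates]) ** 2
        k = int(candidates[np.argmax(scores)])
        coef = residual_spec[k]
        # For a*cos(2*pi*k*t/N + phi), the DFT at bin k equals (N*a/2)*exp(1j*phi).
        params.append((k, 2.0 * abs(coef) / n, float(np.angle(coef))))
        # The sinusoid occupies exactly bins k and N - k, so subtracting it
        # zeroes those two residual coefficients.
        residual_spec[k] = 0.0
        residual_spec[n - k] = 0.0
    residual = np.real(np.fft.ifft(residual_spec))
    return params, residual
```

Lowering the weight of a bin (i.e., raising the masking threshold there) makes the greedy step prefer other components, which is how the psychoacoustic weighting steers the selection toward perceptually relevant sinusoids.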

One issue with CS encoding is that the sinusoid frequencies cannot be refined further in the encoder, because frequencies that do not correspond to exact frequency bins would result in a loss of sparsity in the frequency domain. This is an important problem, because it implies that we must restrict the sinusoidal frequency estimation to the selection of frequency bins (e.g., following a peak-picking procedure), without the possibility of further refining the estimated frequencies in the encoder. This can be alleviated by zero-padding the signal frame, in other words, by improving the frequency resolution during parameter estimation through a reduced bin spacing. We have found, though, that for CS-based encoding this can be done only to a limited degree, as zero-padding increases the number of measurements that must be encoded (and consequently the bitrate), as explained in Section IV. Fortunately, this problem can be partly addressed by employing the “frequency mapping” procedure, also described in Section IV. Furthermore, since the sparsity restriction need not hold after the signal is decoded, frequencies can be re-estimated in the decoder, for example, by interpolating among frames.
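Both effects described above can be illustrated numerically. The Python sketch below is our own illustration, not part of the described coder: it first counts the significant DFT coefficients of a sinusoid lying exactly on a bin and of one lying between bins, showing the loss of sparsity, and then shows how zero-padding refines a peak-picked frequency estimate by shrinking the bin spacing. The helper names, the tolerance `rel_tol`, and the test frequencies are arbitrary assumptions.

```python
import numpy as np


def significant_bins(x, rel_tol=1e-6):
    """Number of DFT coefficients with magnitude above rel_tol * max."""
    mag = np.abs(np.fft.fft(x))
    return int(np.sum(mag > rel_tol * mag.max()))


def peak_pick_frequency(x, fs, pad_factor=1):
    """Frequency (Hz) of the largest DFT magnitude after zero-padding.

    The frame of length N is zero-padded to pad_factor * N, so the bin
    spacing shrinks from fs / N to fs / (pad_factor * N).
    """
    n_fft = pad_factor * len(x)
    mag = np.abs(np.fft.rfft(x, n_fft))
    return int(np.argmax(mag)) * fs / n_fft


if __name__ == "__main__":
    n, fs = 64, 64.0  # 1 Hz bin spacing without zero-padding
    t = np.arange(n)
    on_bin = np.cos(2 * np.pi * 8.0 * t / fs)
    off_bin = np.cos(2 * np.pi * 8.3 * t / fs)
    print(significant_bins(on_bin))   # 2: only bins 8 and N - 8
    print(significant_bins(off_bin))  # energy leaks into many more bins
    for pad in (1, 4):
        print(pad, peak_pick_frequency(off_bin, fs, pad))
```

The estimate for the off-bin sinusoid moves closer to its true frequency as the padding factor grows, while the transform, and hence the vector handled by the encoder, becomes proportionally larger.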

B. Multi-Channel Sinusoidal Selection

To perform multi-channel sinusoidal analysis, we have

extended the sinusoidal modeling method presented in

[23]—which employs a matching pursuit algorithm to de-

termine the model parameters of each frame—to include the

psychoacoustic analysis of [22]. For the multichannel case,

in each iteration, the algorithm picks a sinusoidal compo-

nent frequency that is optimal for all channels, as well as

channel-specific amplitudes and phases. This choice minimizes

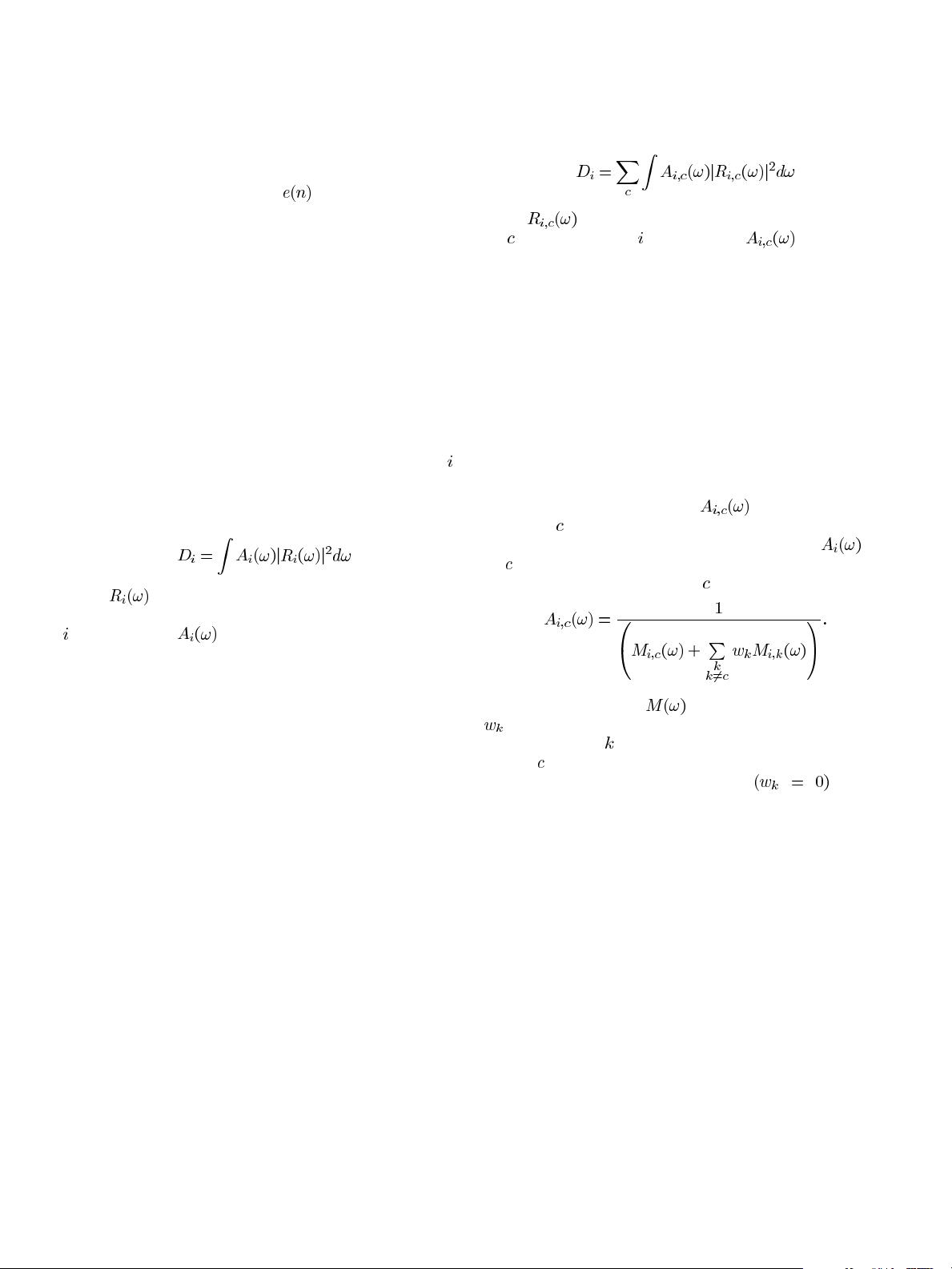

the perceptual distortion measure

(3)

where

is the Fourier transform of the residual signal of

the

th channel after the th iteration, and is a frequency

weighting function set as the inverse of the current masking

threshold energy. The contributions of each channel are simply

summed to obtain the final measure.
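A minimal Python sketch of this multi-channel selection rule follows. It makes simplifying assumptions that the method of [23] does not: a rectangular window, sinusoids restricted to DFT bins, and fixed, symmetric per-channel weights standing in for the inverse masking thresholds, which the actual algorithm recomputes in every iteration. What it does show is the structure of the rule: one frequency shared by all channels, chosen by summing the weighted residual energies over channels, with a separate amplitude and phase per channel. The function name `multichannel_bin_pursuit` is hypothetical.

```python
import numpy as np


def multichannel_bin_pursuit(frames, weights, num_sinusoids):
    """Greedy selection of bins shared by all channels (illustrative sketch).

    frames:  (C, N) array of real-valued channel frames (rectangular window).
    weights: (C, N) array of per-channel weights A_c[k], each assumed
             symmetric in k; stand-ins for inverse masking thresholds.
    Returns a list of (bin, amplitudes, phases), with one amplitude and phase
    per channel, and the (C, N) time-domain residual.
    """
    frames = np.asarray(frames, dtype=float)
    weights = np.asarray(weights, dtype=float)
    n = frames.shape[1]
    spec = np.fft.fft(frames, axis=1)
    candidates = np.arange(1, (n + 1) // 2)  # positive bins, excluding DC and Nyquist
    picks = []
    for _ in range(num_sinusoids):
        # Per-channel weighted residual energies, summed over channels.
        scores = np.sum(weights[:, candidates] * np.abs(spec[:, candidates]) ** 2, axis=0)
        k = int(candidates[np.argmax(scores)])
        # One shared frequency, but channel-specific amplitude and phase.
        amps = 2.0 * np.abs(spec[:, k]) / n
        phases = np.angle(spec[:, k])
        picks.append((k, amps, phases))
        # Remove the selected bin-centred sinusoid from every channel.
        spec[:, k] = 0.0
        spec[:, n - k] = 0.0
    residual = np.real(np.fft.ifft(spec, axis=1))
    return picks, residual
```

Because the score is a sum over channels, a component that is moderately strong in several channels can be selected ahead of one that is strong in a single channel only.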

An important question is which masking model is suitable for multi-channel audio, where the different channels have different binaural attributes in the reproduction. In transform coding, a common problem is caused by the binaural masking level difference (BMLD): quantization noise that is masked in monaural reproduction is sometimes detectable because of binaural release, and using separate masking analyses for different channels is not suitable for loudspeaker rendering. However, this effect is less well established in parametric coding.

We performed preliminary experiments using three approaches: 1) separate masking analysis, i.e., an individual weighting function for each channel, based on the masker of that channel alone [see (3)]; 2) the masker of the sum of all channel signals, used to obtain a common weighting function for all channels; and 3) power summation of the other signals’ attenuated maskers to the masker of channel $c$ according to

$$\tilde{M}_c(\omega) = M_c(\omega) + \sum_{k \neq c} \gamma\, M_k(\omega) \tag{4}$$

In the above equation, $M$ indicates the masker energy, $\gamma$ the estimated attenuation (panning) factor, which was varied heuristically, and $k$ iterates through all channel signals excluding $c$. In this paper, we chose the first method, i.e., separate masking analysis for each channel, because we did not find notable differences in BMLD noise unmasking, and the sound quality seemed marginally better with headphone reproduction. For loudspeaker reproduction, the second or third method may be more suitable.
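The third method can be sketched in Python as follows, assuming that the power summation adds the attenuated masker energies of all other channels to the masker energy of channel $c$, i.e., $\tilde{M}_c = M_c + \gamma \sum_{k \neq c} M_k$, and that the resulting thresholds are inverted to give the per-channel weighting functions. The masker energies are taken as given, since computing them requires the masking model of [22]; the function names and the `floor` guard against division by zero are our own assumptions.

```python
import numpy as np


def combined_maskers(maskers, gamma):
    """Power-sum each channel's masker with the attenuated maskers of the others.

    maskers: (C, K) array of nonnegative masker energies per channel and bin.
    gamma:   attenuation (panning) factor applied to the other channels.
    Returns a (C, K) array: M_c + gamma * sum_{k != c} M_k.
    """
    maskers = np.asarray(maskers, dtype=float)
    others = maskers.sum(axis=0, keepdims=True) - maskers  # sum over k != c
    return maskers + gamma * others


def weights_from_maskers(maskers, floor=1e-12):
    """Frequency weights as the inverse of the masking threshold energy."""
    return 1.0 / np.maximum(np.asarray(maskers, dtype=float), floor)
```

Under this assumed form, setting `gamma = 0` reduces the combination to the first method, i.e., separate masking analysis for each channel, which makes it easy to compare the two settings within one implementation.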

The use of this psychoacoustic multi-channel sinusoidal

model resulted in sparser modeled signals, increasing the

effectiveness of our compressed sensing encoding.

III. COMPRESSED SENSING

Compressed sensing [15], [16], also known as compressive sensing or compressive sampling, is an emerging field that has developed in response to the increasing amount of data that must be sensed, processed, and stored. Most of these data are compressed immediately after being sampled at the Nyquist rate. The idea behind compressed sensing is to go directly from the full-rate analog signal to the compact representation. CS theory is therefore based on the assumption that the signal of interest is sparse in some basis, i.e., that it can be accurately and efficiently represented in that basis. Measuring directly in the sparse basis is not possible unless that basis is known in advance, which is generally not the case. Thus compressed sensing uses random measurements in a basis that is