In-Datacenter Performance Analysis of a Tensor Processing Unit ISCA ’17, June 24-28, 2017, Toronto, ON, Canada

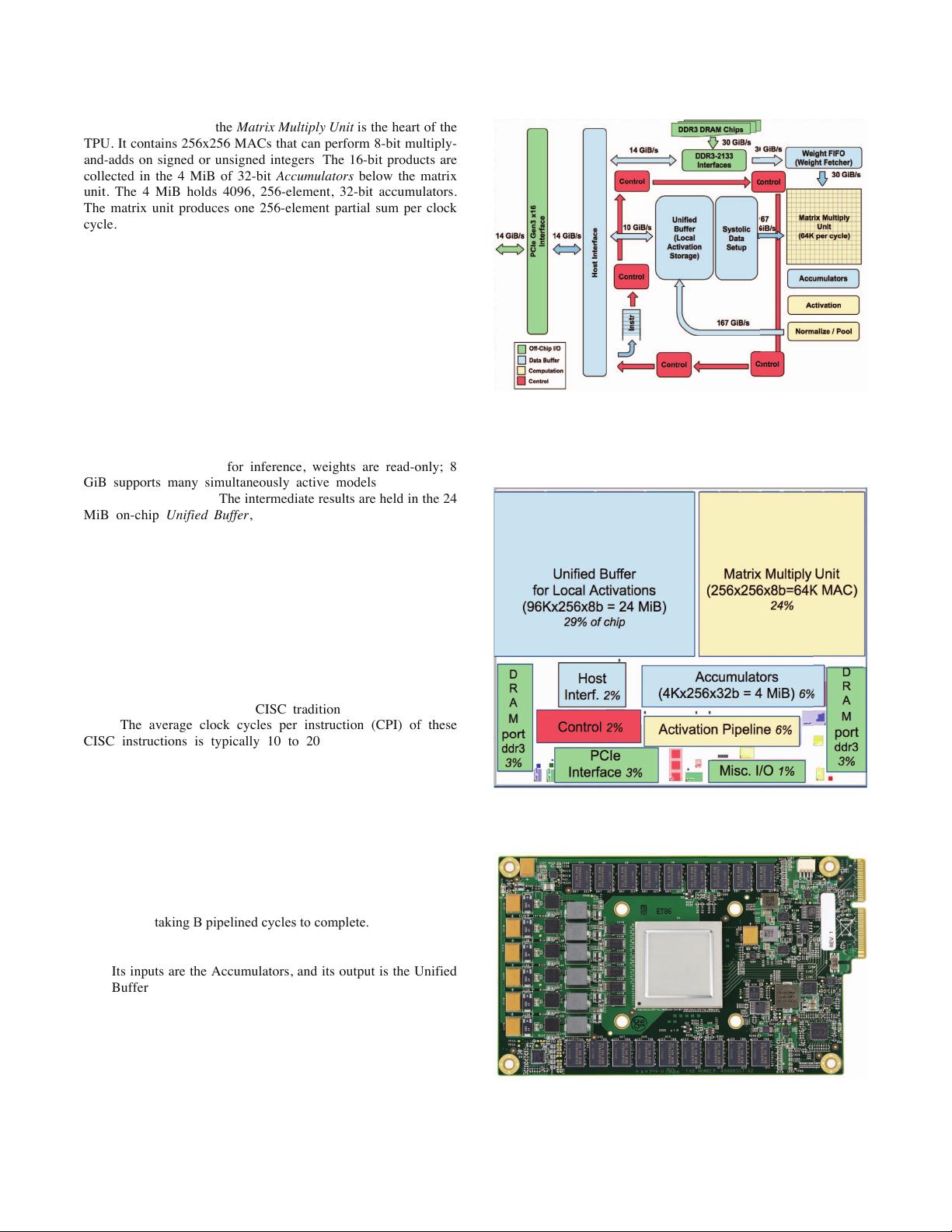

the upper-right corner, the Matrix Multiply Unit is the heart of the TPU. It contains 256x256 MACs that can perform 8-bit multiply-and-adds on signed or unsigned integers. The 16-bit products are collected in the 4 MiB of 32-bit Accumulators below the matrix unit. The 4 MiB holds 4096, 256-element, 32-bit accumulators. The matrix unit produces one 256-element partial sum per clock cycle. We picked 4096 by first noting that the operations per byte needed to reach peak performance (roofline knee in Section 4) is ~1350, so we rounded that up to 2048 and then duplicated it so that the compiler could use double buffering while running at peak performance.
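The Accumulator sizing argument is plain arithmetic, and the short Python sketch below reproduces it from the numbers in the text. Reading "rounded that up to 2048" as rounding to the next power of two is our assumption, and the constant and helper names are illustrative only.

```python
# Back-of-the-envelope check of the Accumulator sizing described above.
# Constants come from the text; next_power_of_two() is a hypothetical helper
# encoding our reading of "rounded that up to 2048".

MATRIX_DIM = 256        # the matrix unit is 256x256 MACs
ACC_BYTES = 4           # each accumulator element is 32 bits
ROOFLINE_KNEE = 1350    # ~operations per byte needed to reach peak (approximate)


def next_power_of_two(n: int) -> int:
    """Smallest power of two >= n, for n >= 1."""
    return 1 << (n - 1).bit_length()


single_buffer = next_power_of_two(ROOFLINE_KNEE)    # 1350 -> 2048
num_accumulators = 2 * single_buffer                # doubled for double buffering
total_bytes = num_accumulators * MATRIX_DIM * ACC_BYTES

print(single_buffer, num_accumulators, total_bytes // 2**20, "MiB")
```

The last line should print `2048 4096 4 MiB`, matching the 4 MiB of 4096 accumulators of 256 32-bit elements each.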

When using a mix of 8-bit weights and 16-bit activations (or vice versa), the Matrix Unit computes at half speed, and it computes at quarter speed when both are 16 bits. It reads and writes 256 values per clock cycle and can perform either a matrix multiply or a convolution. The matrix unit holds one 64 KiB tile of weights plus one for double buffering (to hide the 256 cycles it takes to shift a tile in). This unit is designed for dense matrices. Sparse architectural support was omitted for time-to-deployment reasons. The weights for the matrix unit are staged through an on-chip Weight FIFO that reads from an off-chip 8 GiB DRAM called Weight Memory (for inference, weights are read-only; 8 GiB supports many simultaneously active models). The Weight FIFO is four tiles deep. The intermediate results are held in the 24 MiB on-chip Unified Buffer, which can serve as inputs to the Matrix Unit. A programmable DMA controller transfers data to or from CPU host memory and the Unified Buffer.
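As a rough illustration of the rates and capacities just described, the following Python sketch encodes the stated speed ratios (full speed for 8x8, half for mixed 8/16-bit, quarter for 16x16) and the weight-tile arithmetic. The helper `macs_per_cycle` and the choice of "MACs completed per clock" as the throughput measure are our own framing, not a TPU interface. Sizing a tile at one byte per weight assumes 8-bit weights, which is consistent with the 64 KiB figure (256 x 256 bytes).

```python
# Rough model of Matrix Unit throughput by operand width and of weight staging.
# Speed ratios and capacities come from the text; all names are hypothetical.

MATRIX_DIM = 256
MACS = MATRIX_DIM * MATRIX_DIM          # 65,536 multiply-accumulate units
TILE_BYTES = MATRIX_DIM * MATRIX_DIM    # one tile of 8-bit weights: 64 KiB
TILES_IN_MATRIX_UNIT = 2                # active tile + one for double buffering
FIFO_DEPTH_TILES = 4                    # the Weight FIFO is four tiles deep


def macs_per_cycle(weight_bits: int, activation_bits: int) -> int:
    """MACs completed per clock for a given mix of operand widths.

    8x8 runs at full speed; one 16-bit operand halves the rate;
    two 16-bit operands quarter it.
    """
    if weight_bits not in (8, 16) or activation_bits not in (8, 16):
        raise ValueError("the text describes only 8- and 16-bit operands")
    wide = (weight_bits == 16) + (activation_bits == 16)  # 0, 1, or 2
    return MACS >> wide  # divide by 1, 2, or 4


for w_bits, a_bits in [(8, 8), (8, 16), (16, 8), (16, 16)]:
    print(f"{w_bits}-bit weights x {a_bits}-bit activations: "
          f"{macs_per_cycle(w_bits, a_bits)} MACs/cycle")

print("tile:", TILE_BYTES // 1024, "KiB")
print("weights held in Matrix Unit:", TILES_IN_MATRIX_UNIT * TILE_BYTES // 1024, "KiB")
print("Weight FIFO capacity:", FIFO_DEPTH_TILES * TILE_BYTES // 1024, "KiB")
```

The second resident tile is what allows the next set of weights to shift in (256 cycles, per the text) while the current tile is in use.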

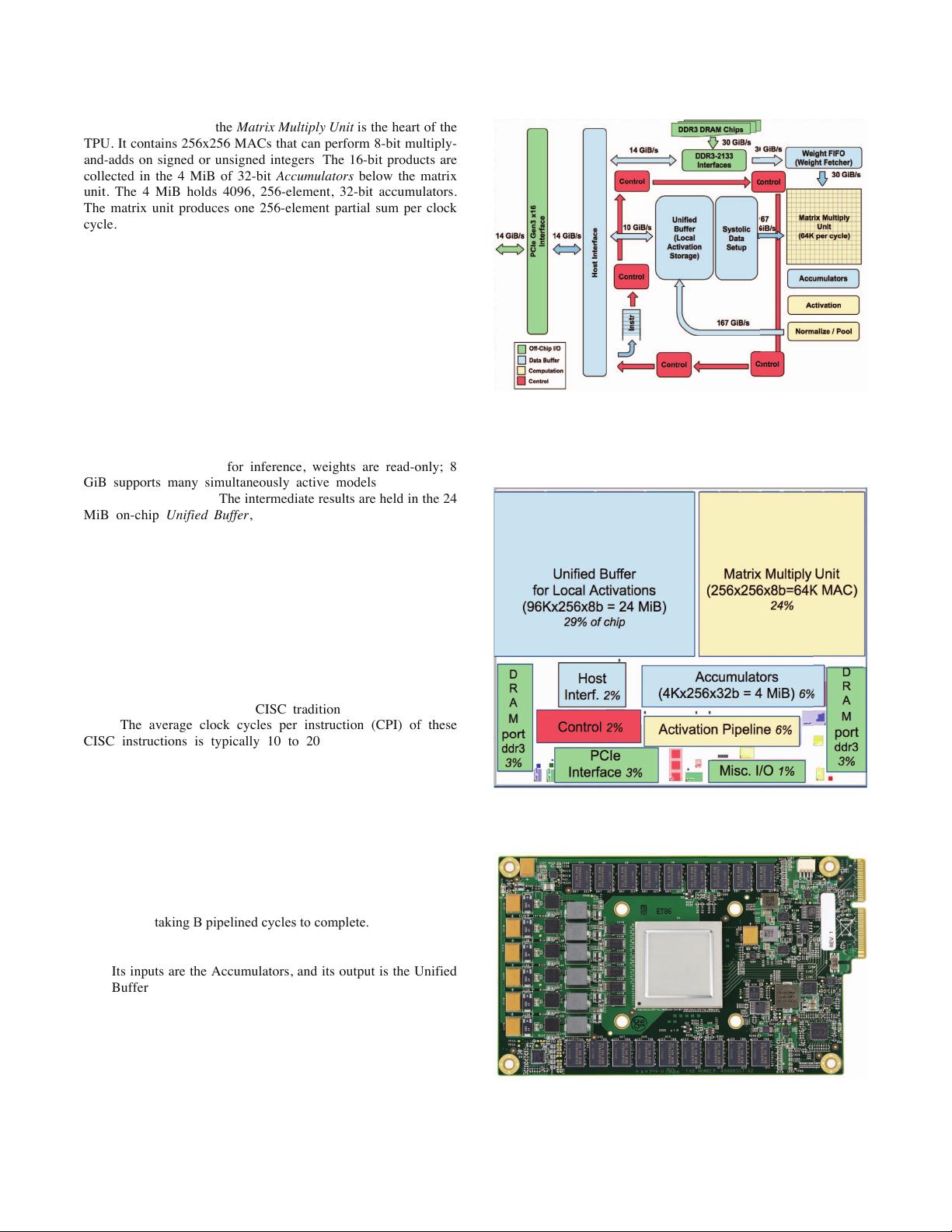

Figure 2 shows the floor plan of the TPU die. The 24 MiB Unified Buffer is almost a third of the die and the Matrix Multiply Unit is a quarter, so the datapath is nearly two-thirds of the die. The 24 MiB size was picked in part to match the pitch of the Matrix Unit on the die and, given the short development schedule, in part to simplify the compiler (see Section 7). Control is just 2%.

Figure 3 shows the TPU on its printed circuit card, which inserts into existing servers like a SATA disk.

Because instructions are sent over the relatively slow PCIe bus, TPU instructions follow the CISC tradition, including a repeat field. The average clock cycles per instruction (CPI) of these CISC instructions is typically 10 to 20. The TPU has about a dozen instructions overall, but these five are the key ones:

1. Read_Host_Memory reads data from the CPU host memory into the Unified Buffer (UB).

2. Read_Weights reads weights from Weight Memory into the Weight FIFO as input to the Matrix Unit.

3. MatrixMultiply/Convolve causes the Matrix Unit to perform a matrix multiply or a convolution from the Unified Buffer into the Accumulators. A matrix operation takes a variable-sized B*256 input, multiplies it by a 256x256 constant weight input, and produces a B*256 output, taking B pipelined cycles to complete.

4. Activate performs the nonlinear function of the artificial neuron, with options for ReLU, Sigmoid, and so on. Its inputs are the Accumulators, and its output is the Unified Buffer. It can also perform the pooling operations needed for convolutions using the dedicated hardware on the die, as it is connected to nonlinear function logic.

5. Write_Host_Memory writes data from the Unified Buffer into the CPU host memory.
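To make the dataflow among these five instructions concrete, here is a functional Python sketch of one fully connected layer passing through them: host memory to Unified Buffer, weights through the Weight FIFO, a multiply into the Accumulators, an activation back into the Unified Buffer, and a write back to the host. Only the instruction names and their sources and destinations come from the text. The class, method signatures, and dict-based buffers are hypothetical, the repeat field and pooling are not modeled, and the tile size is shrunk from 256 to 2 for readability.

```python
import math
from collections import deque


class ToyTPU:
    """Functional (not cycle-accurate) model of the five key TPU instructions.

    Hypothetical simplifications: buffers are dicts keyed by name rather than
    addressed memories, there is no repeat field, and the tile size n defaults
    to 256 but can be shrunk so the example stays small.
    """

    ACTIVATIONS = {
        "relu": lambda v: max(v, 0),
        "sigmoid": lambda v: 1.0 / (1.0 + math.exp(-v)),
    }

    def __init__(self, n: int = 256):
        self.n = n
        self.host = {}              # CPU host memory
        self.weight_memory = {}     # off-chip Weight Memory (read-only for inference)
        self.weight_fifo = deque()  # on-chip Weight FIFO of n x n tiles
        self.ub = {}                # Unified Buffer
        self.acc = {}               # Accumulators

    def read_host_memory(self, src, dst):
        """Read_Host_Memory: host memory -> Unified Buffer."""
        self.ub[dst] = [row[:] for row in self.host[src]]

    def read_weights(self, name):
        """Read_Weights: Weight Memory -> Weight FIFO."""
        tile = self.weight_memory[name]
        if len(tile) != self.n or any(len(r) != self.n for r in tile):
            raise ValueError("weight tile must be n x n")
        self.weight_fifo.append(tile)

    def matrix_multiply(self, src, dst):
        """MatrixMultiply: (B x n) UB input times (n x n) tile -> Accumulators.

        Simplification: each call consumes the next tile from the FIFO.
        Returns B, the nominal number of pipelined cycles.
        """
        w = self.weight_fifo.popleft()
        x = self.ub[src]
        self.acc[dst] = [
            [sum(row[k] * w[k][j] for k in range(self.n)) for j in range(self.n)]
            for row in x
        ]
        return len(x)

    def activate(self, src, dst, fn="relu"):
        """Activate: apply a nonlinearity to Accumulators -> Unified Buffer."""
        f = self.ACTIVATIONS[fn]
        self.ub[dst] = [[f(v) for v in row] for row in self.acc[src]]

    def write_host_memory(self, src, dst):
        """Write_Host_Memory: Unified Buffer -> host memory."""
        self.host[dst] = [row[:] for row in self.ub[src]]


# One layer, y = ReLU(x @ W), with a tiny 2 x 2 tile and batch B = 2.
tpu = ToyTPU(n=2)
tpu.host["x"] = [[1, -2], [3, 4]]
tpu.weight_memory["w0"] = [[1, 0], [0, -1]]
tpu.read_host_memory("x", "ub_in")
tpu.read_weights("w0")
cycles = tpu.matrix_multiply("ub_in", "acc0")
tpu.activate("acc0", "ub_out", fn="relu")
tpu.write_host_memory("ub_out", "y")
print(tpu.host["y"], cycles)  # [[1, 2], [3, 0]] 2
```

Returning B from `matrix_multiply` mirrors the statement that a B*256 operation takes B pipelined cycles; the model does not otherwise track timing.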

The other instructions are alternate host memory read/write, set configuration, two versions of synchronization, interrupt host,

Figure 1. TPU Block Diagram. The main computation is the yellow Matrix Multiply unit. Its inputs are the blue Weight FIFO and the blue Unified Buffer and its output is the blue Accumulators. The yellow Activation Unit performs the nonlinear functions on the Accumulators, which go to the Unified Buffer.

Figure 2. Floorplan of TPU die. The shading follows Figure 1. The light (blue) datapath is 67%, the medium (green) I/O is 10%, and the dark (red) control is just 2% of the die. Control is much larger (and much harder to design) in a CPU or GPU.

Figure 3. TPU Printed Circuit Board. It can be inserted into the slot for a SATA disk in a server.
