SHEN AND LI: MINIMUM TOTAL ERROR ENTROPY METHOD FOR PARAMETER ESTIMATION 4081

where $\Delta\mathbf{x}_i$ is the error (perturbation) at the input, which perturbs the measured input so that the data fit the linear model, and $\mathbf{c}=[c_0,c_1,\ldots,c_n]^T$ is a weighting vector with positive entries. In [9], the authors show that the above optimization problem can be solved efficiently by the singular value decomposition (SVD) and, based on the SVD results, prove that it is equivalent to the following minimization problem:

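$$\min_{\mathbf{w}}\ \sum_{i=1}^{N}\frac{\left(y_i-\mathbf{w}^T\mathbf{x}_i\right)^2}{c_0^{-1}+\mathbf{w}^T\mathbf{C}^{-1}\mathbf{w}} \tag{6}$$

where $\mathbf{C}=\operatorname{diag}(c_1,\ldots,c_n)$ is a nonsingular weighting matrix.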
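The SVD route mentioned above can be sketched for the equal-weight case ($c_0=1$ and all input weights equal to one) in a few lines of NumPy. This is a minimal illustration under our own assumptions, not the procedure of [9]: the data are stacked as rows $[\mathbf{x}_i^T,\ y_i]$, the hyperplane is assumed to pass through the origin (no intercept), and the helper name `tls_svd` is hypothetical. The estimate is read off the right singular vector belonging to the smallest singular value, which is the unit normal of the hyperplane that best fits the data in the perpendicular-distance sense.

```python
import numpy as np

def tls_svd(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Equal-weight TLS estimate of w for y ≈ X @ w (hypothetical helper).

    X: (N, n) matrix of measured inputs; y: (N,) vector of measured outputs.
    Assumes a hyperplane through the origin (no intercept term).
    """
    # Each row of Z is one (n+1)-dimensional data point [x_i^T, y_i].
    Z = np.column_stack([X, y])
    # Singular values are sorted in descending order, so the last row of Vt is
    # the right singular vector for the smallest singular value.
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    v = Vt[-1]
    if np.isclose(v[-1], 0.0):
        raise ValueError("TLS solution does not exist (normal has no output component)")
    # The hyperplane normal is proportional to (w, -1); rescale its last entry to -1.
    return -v[:-1] / v[-1]

# Usage: noise-free points lying exactly on y = 2*x1 - 0.5*x2 are fitted exactly.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))
y = X @ np.array([2.0, -0.5])
w_hat = tls_svd(X, y)
```

Dividing by the last component makes the result independent of the arbitrary sign that the SVD assigns to singular vectors.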

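By defining

$$\bar{e}_i=\frac{y_i-\mathbf{w}^T\mathbf{x}_i}{\sqrt{c_0^{-1}+\mathbf{w}^T\mathbf{C}^{-1}\mathbf{w}}}, \tag{7}$$

the minimization problem (6) can be expressed as

$$\min_{\mathbf{w}}\ \sum_{i=1}^{N}\bar{e}_i^{\,2}. \tag{8}$$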

In this way, the cost function of LS in (2) and that of TLS in (8) are written in the same form. Because $\bar{e}_i$ in (7) accounts for both the output error and the input error, and because it arises in total least squares, we call it the “total error”. Comparing (8) with (2), we see that LS minimizes the sum of squared output errors, whereas TLS minimizes the sum of squared total errors.
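To make the comparison between (2) and (8) concrete, the sketch below evaluates both costs for a fixed $\mathbf{w}$. It assumes that the output error is $e_i=y_i-\mathbf{w}^T\mathbf{x}_i$ and that the total error rescales it as $\bar{e}_i=e_i/\sqrt{c_0^{-1}+\mathbf{w}^T\mathbf{C}^{-1}\mathbf{w}}$ with $\mathbf{C}=\operatorname{diag}(c_1,\ldots,c_n)$; the helper names `output_errors` and `total_errors` are hypothetical and the data are toy values.

```python
import numpy as np

def output_errors(w, X, y):
    """Output errors e_i = y_i - w^T x_i, as used by the LS cost."""
    return y - X @ w

def total_errors(w, X, y, c0=1.0, c=None):
    """Total errors: output errors divided by sqrt(1/c0 + w^T C^{-1} w).

    c holds the input weights c_1..c_n (defaults to all ones, i.e. C = I).
    The formula is the assumed form stated in the lead-in.
    """
    c = np.ones_like(w) if c is None else np.asarray(c, dtype=float)
    scale = np.sqrt(1.0 / c0 + np.sum(w**2 / c))  # w^T C^{-1} w for diagonal C
    return output_errors(w, X, y) / scale

# Toy data with a single input dimension (n = 1).
X = np.array([[1.0], [2.0], [3.0]])
y = np.array([1.5, 2.5, 5.0])
w = np.array([1.5])
J_ls = np.sum(output_errors(w, X, y) ** 2)   # sum of squared output errors
J_tls = np.sum(total_errors(w, X, y) ** 2)   # sum of squared total errors
```

Here $J_{\mathrm{LS}}=0.5$ and $J_{\mathrm{TLS}}=0.5/3.25$. Because the rescaling factor depends on $\mathbf{w}$, the two costs are not proportional across candidate parameter vectors, which is why their minimizers generally differ.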

In the TLS method, the design of the weighting coefficients $\mathbf{c}$ is highly application-specific. The latter coefficients $c_1,\ldots,c_n$ weight the individual dimensions of the input error $\Delta\mathbf{x}_i$, and their values depend on the specific application. In the remainder of this paper, for simplicity, we set $c_1=\cdots=c_n=1$ (thus $\mathbf{C}=\mathbf{I}$), giving all dimensions of the input error the same weight. The algorithm derived in the following subsection can easily be extended to the case of unequal $c_1,\ldots,c_n$ by using a general weighting matrix $\mathbf{C}$. The first weighting coefficient $c_0$ weights the output error. In practice, if the input noise intensity $\sigma_{\mathrm{in}}^2$ and the output noise intensity $\sigma_{\mathrm{out}}^2$ are known a priori, the coefficient $c_0$ can be set to $\sigma_{\mathrm{in}}^2/\sigma_{\mathrm{out}}^2$ [3], [29] to avoid the adverse effect of unequal input and output noise intensities. If the exact noise intensities are unknown and the input noise intensity is assumed to be comparable to the output noise intensity, we can roughly set $c_0=1$; intuitively, this is a natural choice in that case, and more detailed reasons for this setting are given in Section III.
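The role of $c_0$ can be checked on simulated errors-in-variables data. The sketch assumes $\mathbf{C}=\mathbf{I}$ and the weighted cost $\sum_i (y_i-\mathbf{w}^T\mathbf{x}_i)^2/(c_0^{-1}+\|\mathbf{w}\|^2)$. Under that assumption, scaling the outputs by $\sqrt{c_0}$ turns the weighted problem into an equal-weight TLS problem in $\mathbf{v}=\sqrt{c_0}\,\mathbf{w}$, which an SVD then solves. The noise levels, sample size, and helper names (`tls_svd`, `weighted_tls`) are illustrative choices, not values or functions from this paper.

```python
import numpy as np

def tls_svd(X, y):
    """Equal-weight TLS for y ≈ X @ w via the smallest right singular vector."""
    _, _, Vt = np.linalg.svd(np.column_stack([X, y]), full_matrices=False)
    v = Vt[-1]
    return -v[:-1] / v[-1]

def weighted_tls(X, y, c0):
    """TLS with output weight c0 and C = I (hypothetical helper).

    (sqrt(c0)*y - v^T x)^2 / (1 + ||v||^2) equals the weighted cost for
    v = sqrt(c0) * w, so we solve for v and scale back.
    """
    s = np.sqrt(c0)
    return tls_svd(X, s * y) / s

# Simulated errors-in-variables data (illustrative values, not from the paper).
rng = np.random.default_rng(1)
N, w0, sigma_in, sigma_out = 200_000, 2.0, 0.5, 1.0
x_true = rng.normal(size=(N, 1))
X = x_true + sigma_in * rng.normal(size=(N, 1))         # noisy inputs
y = w0 * x_true[:, 0] + sigma_out * rng.normal(size=N)  # noisy outputs

w_ls = np.linalg.lstsq(X, y, rcond=None)[0]              # ignores input noise
w_tls1 = tls_svd(X, y)                                   # c0 = 1
w_wtls = weighted_tls(X, y, sigma_in**2 / sigma_out**2)  # c0 = 0.25
```

With these settings, LS should be attenuated toward roughly $2/1.25=1.6$, unweighted TLS ($c_0=1$) should overshoot to roughly 2.3, and the weighted estimate with $c_0=\sigma_{\mathrm{in}}^2/\sigma_{\mathrm{out}}^2=0.25$ should land close to the true value 2.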

The difference in the meaning of cost function (8) from

that of cost function (2) can be interpreted explicitly from the

perspective of geometry. Essentially, the estimation problem is

tantamount to finding a

-dimensional hyperplane ,defined

by

to fit the -dimensional data points under specific op-

timization goals [9], [29]. The optimization goals are different

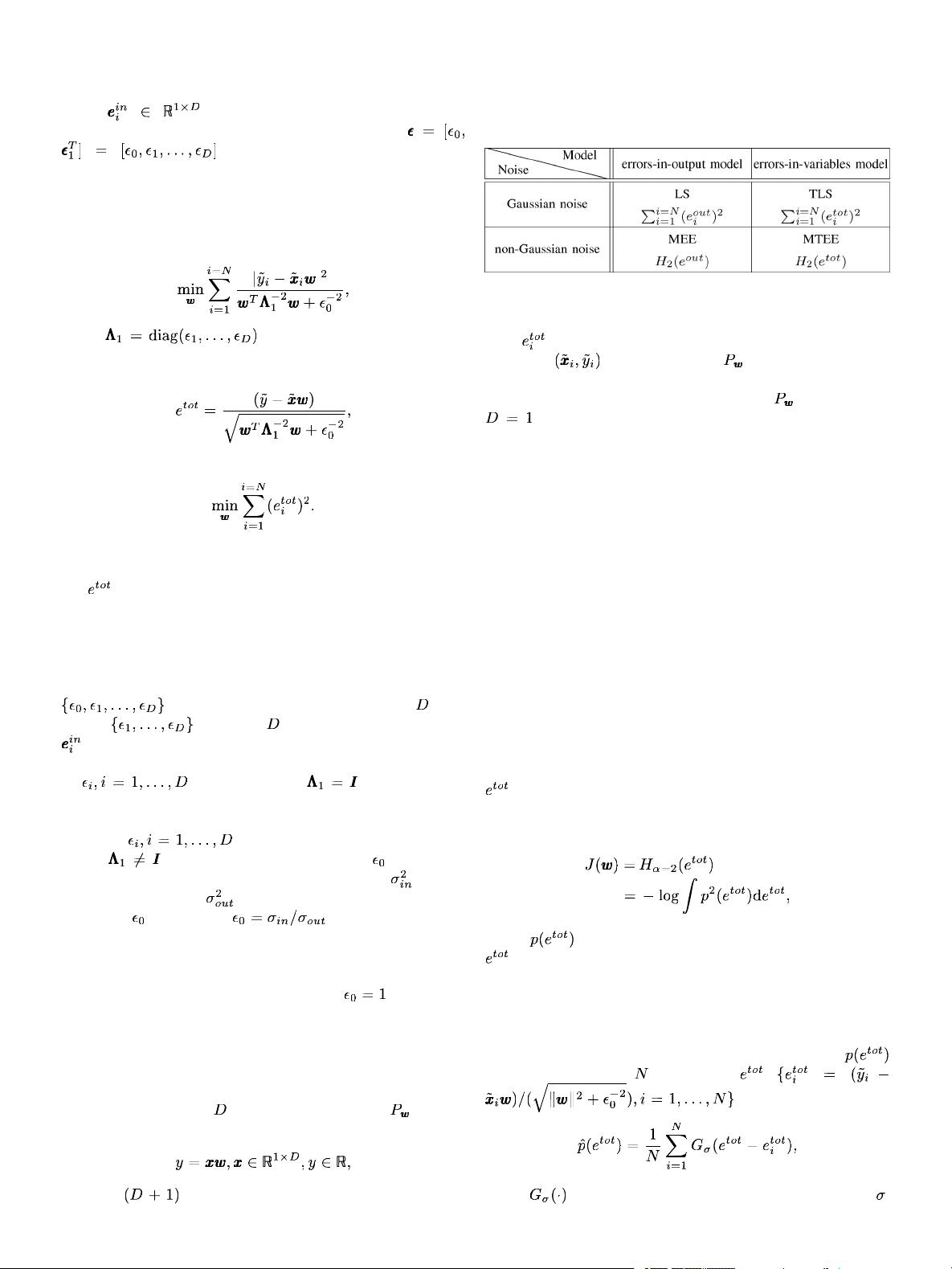

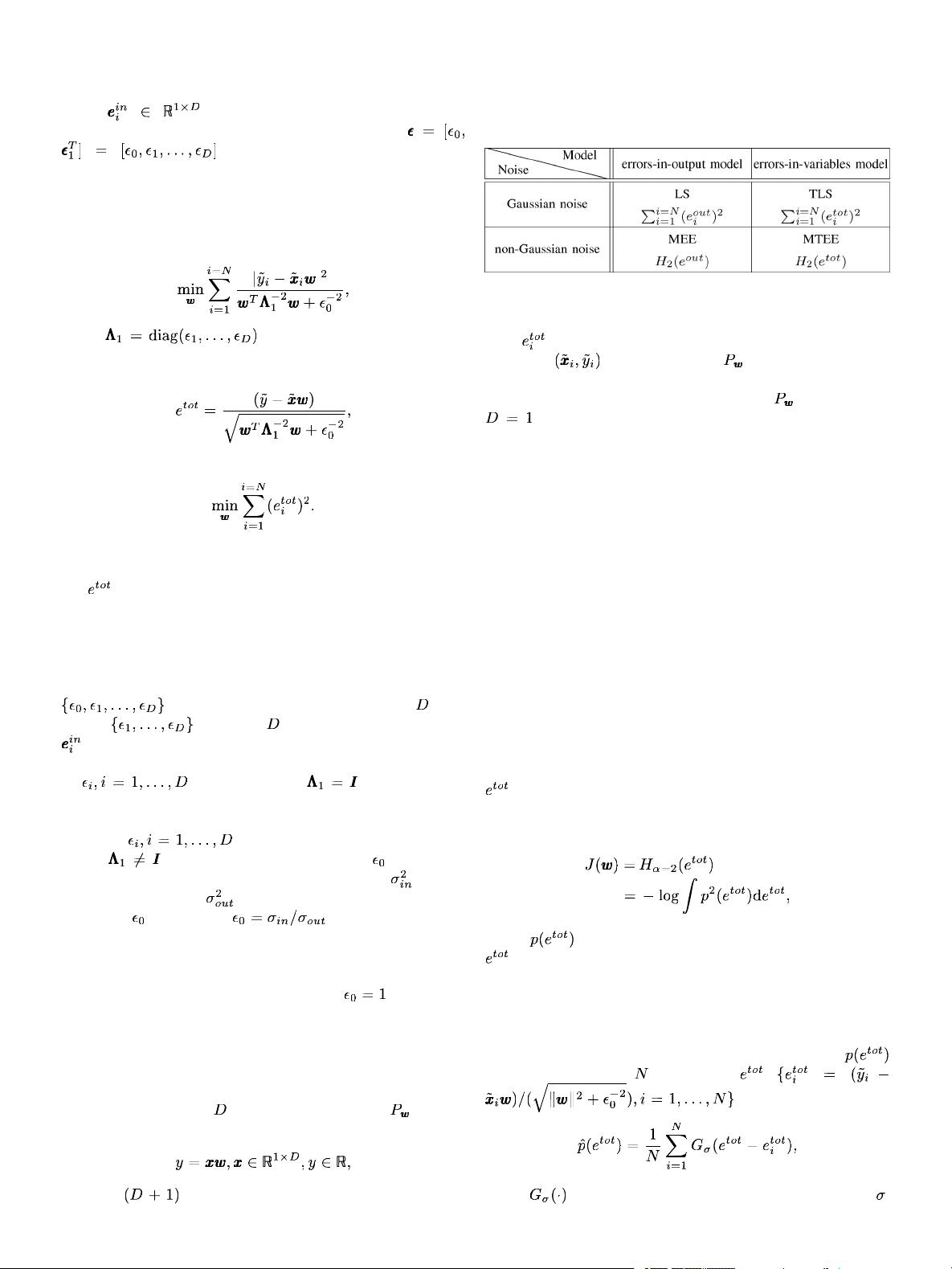

TABLE I

AC

OMPARISON OF THE OBJECTIVE FUNCTIONS OF THE FOUR METHODS.

for different estimation methods. In the TLS method, the total

error

is actually the (signed) perpendicular distance from

data point

to the hyperplane . In contrast, in the LS

method, the output error can be viewed as the vertical distances

from the data points to a fitting straight line

,inthecaseof

[9], [11], [29]. So, in other words, the two estimation

methods employ two different distance measures in the design

of cost functions.
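The two distance measures can be compared for a single point when $n=1$. The sketch assumes $c_0=1$ and $\mathbf{C}=\mathbf{I}$, so that the total error should coincide with the signed perpendicular distance to the hyperplane $y=\mathbf{w}^T\mathbf{x}$, computed here through the hyperplane's normal vector $(\mathbf{w},-1)$. The point, the line, and the helper name `perpendicular_distance` are illustrative.

```python
import numpy as np

def perpendicular_distance(point, w):
    """Signed distance from (x, y) to the hyperplane y = w^T x (hypothetical helper)."""
    normal = np.append(w, -1.0)  # normal vector (w, -1) of the hyperplane
    # normal @ point equals w^T x - y; negate so the sign matches y - w^T x,
    # then divide by the normal's length to obtain a Euclidean distance.
    return -(normal @ point) / np.linalg.norm(normal)

x, y, w = 1.0, 3.0, np.array([1.0])   # point (1, 3) and the line y = x
vertical = y - w @ np.array([x])      # output error, as used by LS
perpendicular = perpendicular_distance(np.array([x, y]), w)
```

For the point $(1,3)$ and the line $y=x$, the vertical (LS) distance is 2, whereas the perpendicular (TLS) distance is $\sqrt{2}$, which equals $(y-wx)/\sqrt{1+w^2}$.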

We note that, like the LS method, the TLS method relies only on second-order statistics, in this case of the total error. For the reasons discussed above, it is therefore no longer efficient when the total error is non-Gaussian. In view of the comparison between the LS and MEE methods, we expect that in an EIV system where both (A.1) and (A.2) are invalid, methods combining the total error with an information-theoretic measure may outperform the traditional TLS method. In this work, we therefore develop information-theoretic estimation algorithms that account for measurement noise in the input signal.

Algorithm Formulation

Given the above discussion, under the errors-in-variables model, we propose to estimate the system parameters by minimizing the quadratic Rényi entropy of the total error $\bar{e}$, and we call the proposed algorithm the minimum total error entropy (MTEE) method. Specifically, the cost function, to be minimized over $\mathbf{w}$, is given by

$$H_2(\bar{e})=-\log\int_{-\infty}^{\infty}p_{\bar{e}}^2(\xi)\,d\xi \tag{9}$$

where $p_{\bar{e}}$ is the probability density function (pdf) of $\bar{e}$. Table I makes the contribution of the MTEE method explicit by comparing its cost function with those of the other three methods. To some extent, MTEE can be viewed as a complement to the existing LS, TLS, and MEE methods.
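As an illustration of the entropy in (9), the following sketch evaluates the quadratic Rényi entropy numerically for two zero-mean densities with the same unit variance, a Gaussian and a Laplacian. The grid and the two densities are our own illustrative choices. A cost built only on second-order statistics cannot distinguish these two error distributions, whereas $H_2$ can.

```python
import numpy as np

# Uniform grid wide enough that both densities are negligible at the ends.
grid = np.linspace(-20.0, 20.0, 400_001)
dxi = grid[1] - grid[0]

def quadratic_renyi_entropy(p_values, step):
    """H_2 = -log ∫ p(ξ)^2 dξ, approximated by a Riemann sum on a uniform grid."""
    return -np.log(np.sum(p_values**2) * step)

gauss = np.exp(-grid**2 / 2) / np.sqrt(2 * np.pi)   # N(0, 1), variance 1
b = 1 / np.sqrt(2)
laplace = np.exp(-np.abs(grid) / b) / (2 * b)        # Laplace(0, b), variance 2b^2 = 1

H_gauss = quadratic_renyi_entropy(gauss, dxi)      # closed form log(2*sqrt(pi)) ≈ 1.266
H_laplace = quadratic_renyi_entropy(laplace, dxi)  # closed form log(4*b) ≈ 1.040
```

The closed forms follow from $\int p^2 = 1/(2\sigma\sqrt{\pi})$ for a Gaussian with standard deviation $\sigma$ and $\int p^2 = 1/(4b)$ for a Laplacian with scale $b$.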

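Using the Parzen window method, the pdf $p_{\bar{e}}$ can be estimated from the samples $\bar{e}_i$, $i=1,\ldots,N$:

$$\hat{p}_{\bar{e}}(\xi)=\frac{1}{N}\sum_{i=1}^{N}\kappa_{\sigma}(\xi-\bar{e}_i) \tag{10}$$

where $\kappa_{\sigma}(\cdot)$ is a Gaussian kernel function with kernel size $\sigma$.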
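The Parzen estimate (10) can be sketched as follows. The sketch also checks a standard property of Gaussian kernels: because the convolution of two Gaussian kernels of size $\sigma$ is a Gaussian kernel of size $\sqrt{2}\sigma$, the integral $\int\hat{p}_{\bar{e}}^2(\xi)\,d\xi$ reduces to $\frac{1}{N^2}\sum_i\sum_j\kappa_{\sqrt{2}\sigma}(\bar{e}_i-\bar{e}_j)$, so no numerical integration is needed. The error samples, kernel size, and helper names are hypothetical.

```python
import numpy as np

def gaussian_kernel(x, sigma):
    """Gaussian kernel κ_σ(x) with kernel size (standard deviation) σ."""
    return np.exp(-x**2 / (2 * sigma**2)) / (np.sqrt(2 * np.pi) * sigma)

def parzen_pdf(xi, errors, sigma):
    """Parzen estimate p̂(ξ) = (1/N) Σ_i κ_σ(ξ - ē_i) at every point of xi."""
    return gaussian_kernel(xi[:, None] - errors[None, :], sigma).mean(axis=1)

def renyi2_pairwise(errors, sigma):
    """Plug-in H_2 using ∫ p̂² = (1/N²) Σ_i Σ_j κ_{√2σ}(ē_i - ē_j)."""
    diffs = errors[:, None] - errors[None, :]
    return -np.log(gaussian_kernel(diffs, np.sqrt(2) * sigma).mean())

errors = np.array([-1.2, -0.3, 0.0, 0.4, 2.1])  # illustrative total-error samples
sigma = 0.5
grid = np.linspace(-10.0, 10.0, 20_001)
step = grid[1] - grid[0]
p_hat = parzen_pdf(grid, errors, sigma)

H_numeric = -np.log(np.sum(p_hat**2) * step)  # integrate p̂² on the grid
H_pairwise = renyi2_pairwise(errors, sigma)   # closed-form double sum
```

Both routes give the same value; the pairwise form costs $O(N^2)$ kernel evaluations but avoids choosing an integration grid.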

Substituting (10) into (9), we can obtain a convenient evaluation