1822 IEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERING, VOL. 26, NO. 8, AUGUST 2014


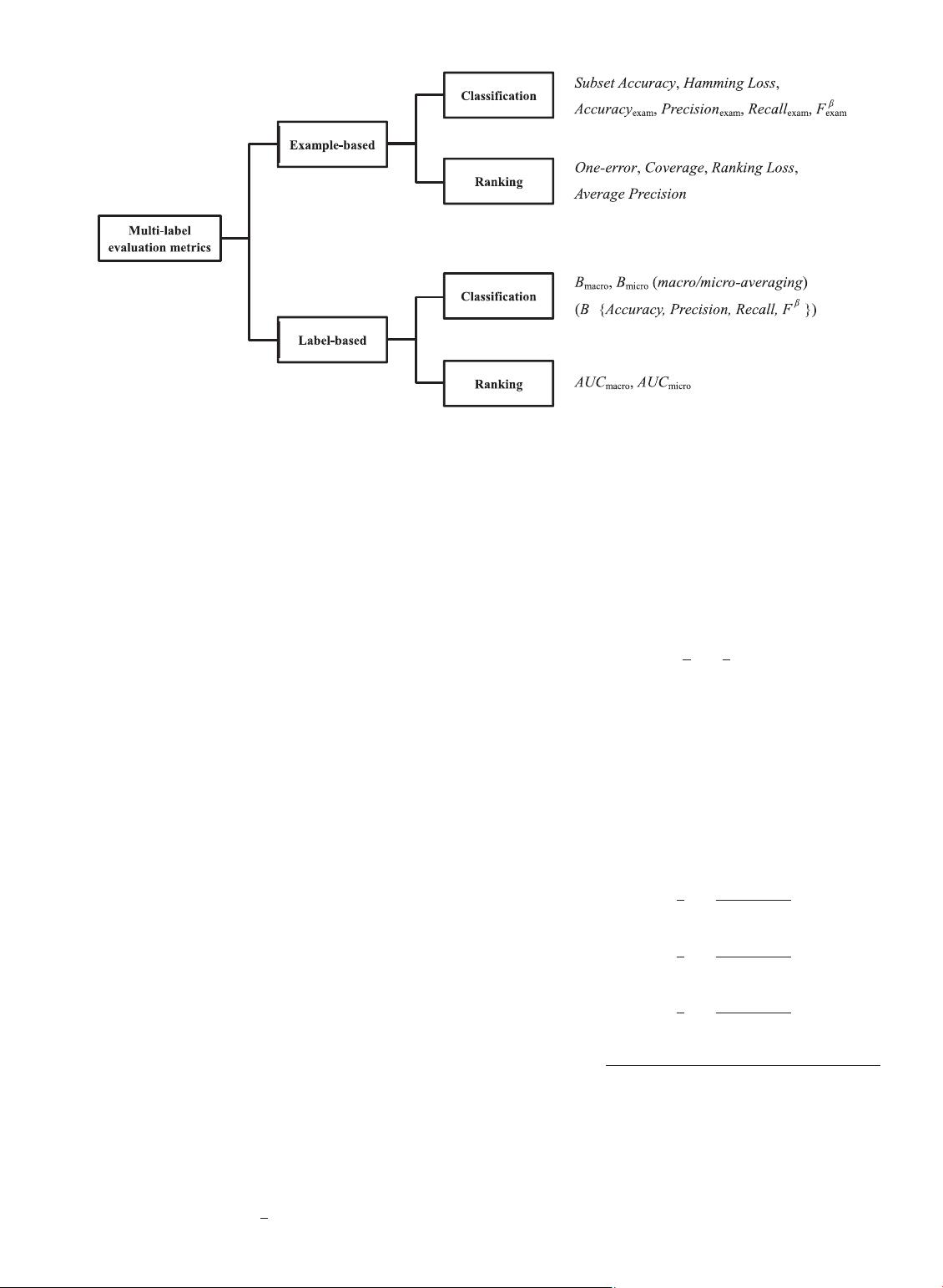

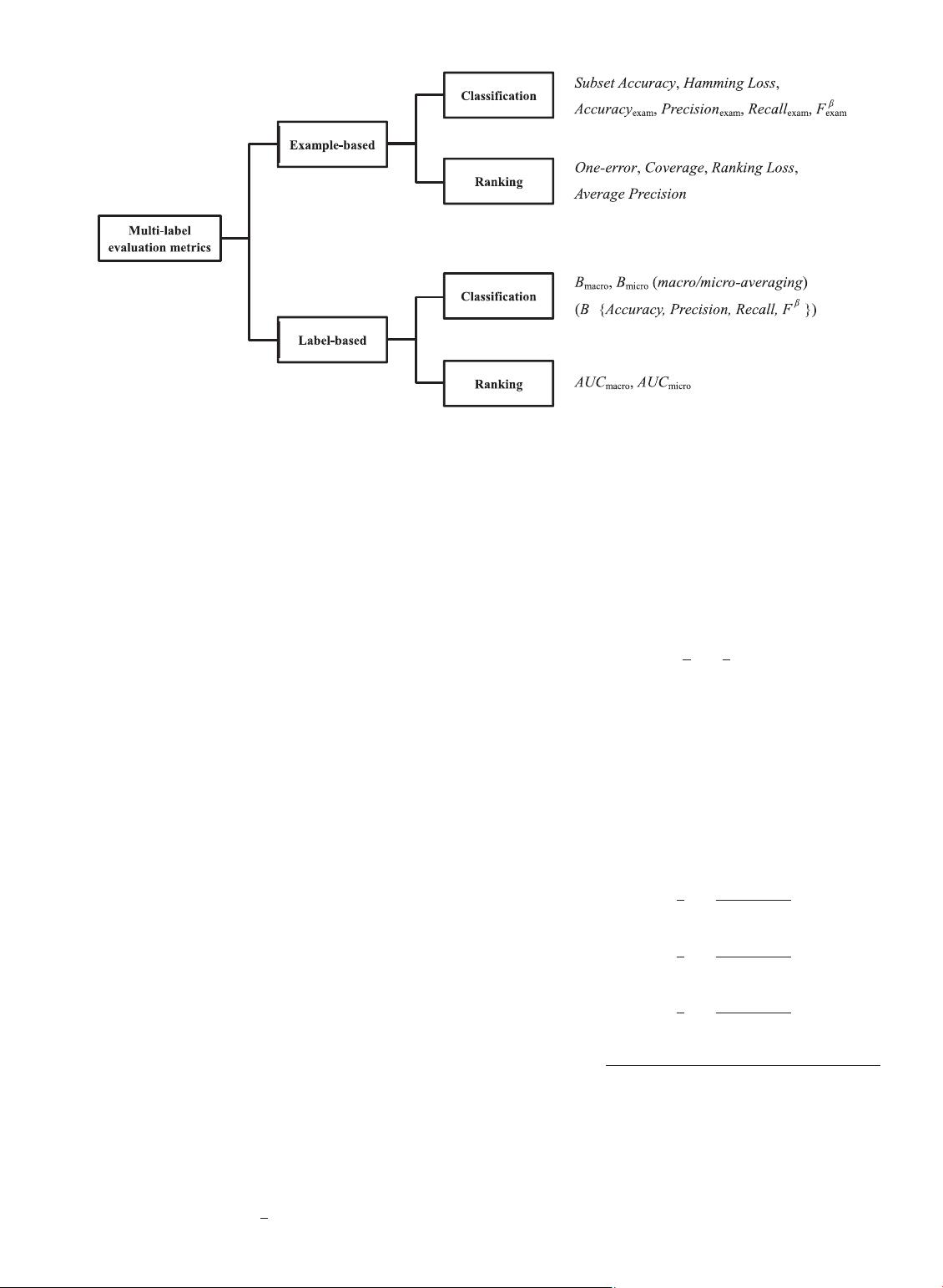

Fig. 1. Summary of major multi-label evaluation metrics.

2.2 Evaluation Metrics

2.2.1 Brief Taxonomy

In traditional supervised learning, the generalization performance of a learning system is evaluated with conventional metrics such as accuracy, F-measure and area under the ROC curve (AUC). However, performance evaluation in multi-label learning is considerably more complicated than in the traditional single-label setting, as each example can be associated with multiple labels simultaneously. Therefore, a number of evaluation metrics specific to multi-label learning have been proposed, which can generally be categorized into two groups, i.e. example-based metrics [33], [34], [75] and label-based metrics [94].

Following the notations in Table 1, let $\mathcal{S} = \{(\boldsymbol{x}_i, Y_i) \mid 1 \le i \le p\}$ be the test set and $h(\cdot)$ be the learned multi-label classifier. Example-based metrics evaluate the learning system's performance on each test example separately and then return the mean value across the test set. In contrast, label-based metrics evaluate the learning system's performance on each class label separately and then return the macro- or micro-averaged value across all class labels.
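To make the two averaging directions concrete, the following is a minimal Python sketch, assuming the ground truth and the outputs of $h(\cdot)$ are encoded as $p \times q$ 0/1 matrices (a representation chosen only for illustration) and using precision as the underlying score. The function names are hypothetical. The label-based variants follow the usual convention: macro-averaging averages the $q$ per-label scores, while micro-averaging pools the per-label counts before computing a single score.

```python
from typing import List

Matrix = List[List[int]]  # p rows (test examples) x q columns (class labels), entries 0/1


def safe_div(num: float, den: float) -> float:
    # Assumption: 0/0 is treated as 0.0; conventions differ between implementations.
    return num / den if den else 0.0


def example_based_precision(Y: Matrix, H: Matrix) -> float:
    """Score each test example (row) separately, then average over the p examples."""
    scores = []
    for y_row, h_row in zip(Y, H):
        tp = sum(y & h for y, h in zip(y_row, h_row))  # labels both relevant and predicted
        scores.append(safe_div(tp, sum(h_row)))
    return sum(scores) / len(scores)


def label_counts(Y: Matrix, H: Matrix, j: int):
    """True positives and false positives for class label j (column j)."""
    tp = sum(1 for y_row, h_row in zip(Y, H) if y_row[j] == 1 and h_row[j] == 1)
    fp = sum(1 for y_row, h_row in zip(Y, H) if y_row[j] == 0 and h_row[j] == 1)
    return tp, fp


def macro_precision(Y: Matrix, H: Matrix) -> float:
    """Score each class label separately, then average the q per-label scores."""
    q = len(Y[0])
    per_label = []
    for j in range(q):
        tp, fp = label_counts(Y, H, j)
        per_label.append(safe_div(tp, tp + fp))
    return sum(per_label) / q


def micro_precision(Y: Matrix, H: Matrix) -> float:
    """Pool the counts over all class labels first, then compute precision once."""
    q = len(Y[0])
    counts = [label_counts(Y, H, j) for j in range(q)]
    tp = sum(c[0] for c in counts)
    fp = sum(c[1] for c in counts)
    return safe_div(tp, tp + fp)


Y = [[1, 0, 1], [0, 1, 0], [1, 1, 0]]  # ground-truth label matrix
H = [[1, 1, 0], [0, 1, 0], [1, 0, 1]]  # predictions of h(.)
print(round(example_based_precision(Y, H), 4))  # 0.6667
print(round(macro_precision(Y, H), 4))          # 0.5
print(round(micro_precision(Y, H), 4))          # 0.6
```

On the same predictions the three averages differ (0.6667, 0.5 and 0.6), which is why the averaging direction should always be reported alongside a multi-label score.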

Note that with respect to $h(\cdot)$, the learning system's generalization performance is measured from the classification perspective. However, for both example-based and label-based metrics, the generalization performance can also be measured from the ranking perspective with respect to the real-valued function $f(\cdot, \cdot)$, which most multi-label learning systems return as a common practice. Fig. 1 summarizes the major multi-label evaluation metrics introduced next.
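The difference between the two perspectives can be illustrated with a small Python sketch for a single example $\boldsymbol{x}$. It assumes one common construction in which the classifier is obtained by thresholding the real-valued outputs, $h(\boldsymbol{x}) = \{y \mid f(\boldsymbol{x}, y) > t\}$; the constant threshold `t = 0.5`, the label names and the scores are hypothetical values chosen for illustration.

```python
from typing import Dict, List, Set


def rank_labels(scores: Dict[str, float]) -> List[str]:
    """Ranking perspective: order the labels by decreasing real-valued output f(x, y)."""
    return sorted(scores, key=lambda label: scores[label], reverse=True)


def threshold_labels(scores: Dict[str, float], t: float = 0.5) -> Set[str]:
    """Classification perspective: h(x) = {y | f(x, y) > t}.

    Assumption: a constant threshold t is used for illustration only.
    """
    return {label for label, s in scores.items() if s > t}


# Hypothetical outputs f(x, y) of some multi-label learner for a single example x
f_x = {"sports": 0.9, "politics": 0.2, "economy": 0.6}
print(rank_labels(f_x))               # ['sports', 'economy', 'politics']
print(sorted(threshold_labels(f_x)))  # ['economy', 'sports']
```

Ranking metrics consume the full ordering returned by `rank_labels`, whereas classification metrics only see the label set produced after thresholding, so the same $f(\cdot, \cdot)$ can be evaluated in both ways.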

2.2.2 Example-based Metrics

Following the notations in Table 1, six example-based classification metrics can be defined based on the multi-label classifier $h(\cdot)$ [33], [34], [75]:

• Subset Accuracy:

$$\mathrm{subsetacc}(h) = \frac{1}{p}\sum_{i=1}^{p} [\![\, h(\boldsymbol{x}_i) = Y_i \,]\!].$$

The subset accuracy evaluates the fraction of correctly classified examples, i.e. those whose predicted label set is identical to the ground-truth label set. Intuitively, subset accuracy can be regarded as a multi-label counterpart of the traditional accuracy metric, and it tends to be overly strict, especially when the size of the label space (i.e. $q$) is large.

• Hamming Loss:

$$\mathrm{hloss}(h) = \frac{1}{p}\sum_{i=1}^{p} \frac{1}{q}\,\lvert h(\boldsymbol{x}_i)\,\Delta\,Y_i \rvert.$$

Here, $\Delta$ stands for the symmetric difference between two sets. The Hamming loss evaluates the fraction of misclassified instance-label pairs, i.e. a relevant label is missed or an irrelevant label is predicted. Note that when each example in $\mathcal{S}$ is associated with only one label, $\mathrm{hloss}(h)$ will be $2/q$ times the traditional misclassification rate.

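• $\mathrm{Accuracy}_{\mathrm{exam}}$, $\mathrm{Precision}_{\mathrm{exam}}$, $\mathrm{Recall}_{\mathrm{exam}}$, $F^{\beta}_{\mathrm{exam}}$:

$$\mathrm{Accuracy}_{\mathrm{exam}}(h) = \frac{1}{p}\sum_{i=1}^{p} \frac{\lvert Y_i \cap h(\boldsymbol{x}_i) \rvert}{\lvert Y_i \cup h(\boldsymbol{x}_i) \rvert};$$

$$\mathrm{Precision}_{\mathrm{exam}}(h) = \frac{1}{p}\sum_{i=1}^{p} \frac{\lvert Y_i \cap h(\boldsymbol{x}_i) \rvert}{\lvert h(\boldsymbol{x}_i) \rvert};$$

$$\mathrm{Recall}_{\mathrm{exam}}(h) = \frac{1}{p}\sum_{i=1}^{p} \frac{\lvert Y_i \cap h(\boldsymbol{x}_i) \rvert}{\lvert Y_i \rvert};$$

$$F^{\beta}_{\mathrm{exam}}(h) = \frac{(1+\beta^2)\cdot \mathrm{Precision}_{\mathrm{exam}}(h) \cdot \mathrm{Recall}_{\mathrm{exam}}(h)}{\beta^2 \cdot \mathrm{Precision}_{\mathrm{exam}}(h) + \mathrm{Recall}_{\mathrm{exam}}(h)}.$$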

Furthermore, $F^{\beta}_{\mathrm{exam}}$ is an integrated version of $\mathrm{Precision}_{\mathrm{exam}}(h)$ and $\mathrm{Recall}_{\mathrm{exam}}(h)$ with balancing factor $\beta > 0$. The most common choice is $\beta = 1$, which leads to the harmonic mean of precision and recall.
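The six example-based classification metrics can be sketched directly with Python sets, assuming each ground-truth set $Y_i$ and each prediction $h(\boldsymbol{x}_i)$ is given as a set of label names. The function names, the toy data and the choice to score an undefined ratio $0/0$ as 0.0 are assumptions made for this illustration, not part of the definitions above.

```python
from typing import List, Set

LabelSet = Set[str]


def _ratio(num: float, den: float) -> float:
    # Assumption: 0/0 (e.g. empty prediction or empty ground truth) is scored as 0.0 here
    return num / den if den else 0.0


def subset_accuracy(Y: List[LabelSet], H: List[LabelSet]) -> float:
    # [[h(x_i) = Y_i]]: 1 only if the predicted set equals the ground-truth set exactly
    return sum(1 for y, h in zip(Y, H) if y == h) / len(Y)


def hamming_loss(Y: List[LabelSet], H: List[LabelSet], q: int) -> float:
    # |h(x_i) Δ Y_i| / q, where ^ is Python's set symmetric difference
    return sum(len(h ^ y) / q for y, h in zip(Y, H)) / len(Y)


def accuracy_exam(Y: List[LabelSet], H: List[LabelSet]) -> float:
    return sum(_ratio(len(y & h), len(y | h)) for y, h in zip(Y, H)) / len(Y)


def precision_exam(Y: List[LabelSet], H: List[LabelSet]) -> float:
    return sum(_ratio(len(y & h), len(h)) for y, h in zip(Y, H)) / len(Y)


def recall_exam(Y: List[LabelSet], H: List[LabelSet]) -> float:
    return sum(_ratio(len(y & h), len(y)) for y, h in zip(Y, H)) / len(Y)


def f_beta_exam(Y: List[LabelSet], H: List[LabelSet], beta: float = 1.0) -> float:
    # Combines the *averaged* precision and recall, following the F^beta_exam formula
    p, r = precision_exam(Y, H), recall_exam(Y, H)
    b2 = beta ** 2
    return _ratio((1 + b2) * p * r, b2 * p + r)


Y = [{"a", "b"}, {"c"}, {"a", "d"}]            # ground-truth label sets Y_i
H = [{"a", "b"}, {"b", "c"}, {"a", "b", "d"}]  # predicted label sets h(x_i)
q = 4                                          # label space {a, b, c, d}
print(round(subset_accuracy(Y, H), 4))  # 0.3333
print(round(hamming_loss(Y, H, q), 4))  # 0.1667
print(round(accuracy_exam(Y, H), 4))    # 0.7222
print(round(precision_exam(Y, H), 4))   # 0.7222
print(round(recall_exam(Y, H), 4))      # 1.0
print(round(f_beta_exam(Y, H), 4))      # 0.8387
```

Only the first example is predicted exactly, so subset accuracy is 1/3, while the other metrics give partial credit to the two near misses. The sketch also shows where the $2/q$ factor for single-label data comes from: if both the ground truth and the prediction contain exactly one label, every mistake contributes $\lvert h(\boldsymbol{x}_i)\,\Delta\,Y_i \rvert = 2$.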

When the intermediate real-valued function $f(\cdot, \cdot)$ is available, four example-based ranking metrics can be defined as well [75]: