
Figure 2: Faster R-CNN is a single, unified network for object detection. The RPN module serves as the ‘attention’ of this unified network. (Diagram labels: image, conv layers, feature maps, Region Proposal Network, proposals, RoI pooling, classifier.)

into a convolutional layer for detecting multiple class-specific objects. The MultiBox methods [26], [27] generate region proposals from a network whose last fully-connected layer simultaneously predicts multiple class-agnostic boxes, generalizing the “single-box” fashion of OverFeat. These class-agnostic boxes are used as proposals for R-CNN [5]. The MultiBox proposal network is applied on a single image crop or multiple large image crops (e.g., 224×224), in contrast to our fully convolutional scheme. MultiBox does not share features between the proposal and detection networks. We discuss OverFeat and MultiBox in more depth later in context with our method. Concurrent with our work, the DeepMask method [28] is developed for learning segmentation proposals.

Shared computation of convolutions [9], [1], [29], [7], [2] has been attracting increasing attention for efficient, yet accurate, visual recognition. The OverFeat paper [9] computes convolutional features from an image pyramid for classification, localization, and detection. Adaptively-sized pooling (SPP) [1] on shared convolutional feature maps is developed for efficient region-based object detection [1], [30] and semantic segmentation [29]. Fast R-CNN [2] enables end-to-end detector training on shared convolutional features and shows compelling accuracy and speed.

3 FASTER R-CNN

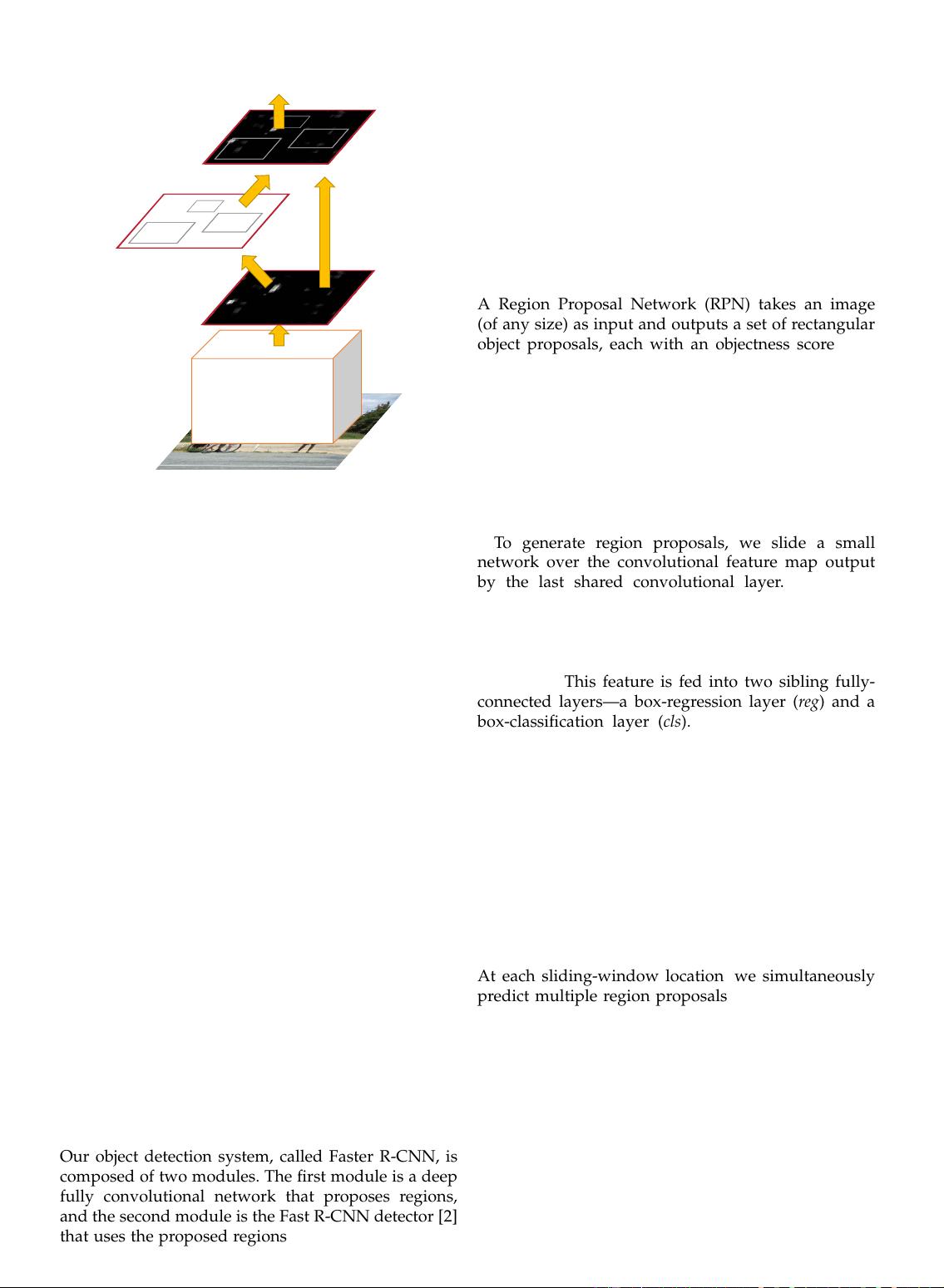

Our object detection system, called Faster R-CNN, is composed of two modules. The first module is a deep fully convolutional network that proposes regions, and the second module is the Fast R-CNN detector [2] that uses the proposed regions. The entire system is a single, unified network for object detection (Figure 2). Using the recently popular terminology of neural networks with ‘attention’ [31] mechanisms, the RPN module tells the Fast R-CNN module where to look. In Section 3.1 we introduce the designs and properties of the network for region proposal. In Section 3.2 we develop algorithms for training both modules with features shared.
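The data flow between the two modules can be sketched as follows. This is a minimal illustration rather than the actual implementation: the names `faster_rcnn_forward`, `backbone`, `rpn`, and `detector`, the toy stand-ins, and the `(x1, y1, x2, y2)` box format are all assumptions introduced here. The only point it makes is that the convolutional features are computed once and consumed by both modules.

```python
from typing import Any, Callable, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): an assumed box format


def faster_rcnn_forward(
    image: Any,
    backbone: Callable[[Any], Any],
    rpn: Callable[[Any], List[Box]],
    detector: Callable[[Any, List[Box]], Any],
) -> Any:
    """Data flow only; the three callables are hypothetical stand-ins."""
    features = backbone(image)             # shared conv feature maps, computed once
    proposals = rpn(features)              # module 1: the RPN proposes regions
    return detector(features, proposals)   # module 2: Fast R-CNN uses the proposals


# Toy stand-ins that only record what they receive (not real networks).
def toy_backbone(image):
    return {"source": image}


def toy_rpn(features):
    return [(0.0, 0.0, 10.0, 10.0)]


def toy_detector(features, proposals):
    return {"features": features, "num_proposals": len(proposals)}


result = faster_rcnn_forward("img", toy_backbone, toy_rpn, toy_detector)
```

In this sketch the detector receives the same `features` object that the RPN saw, which mirrors the "single, unified network" description above.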

3.1 Region Proposal Networks

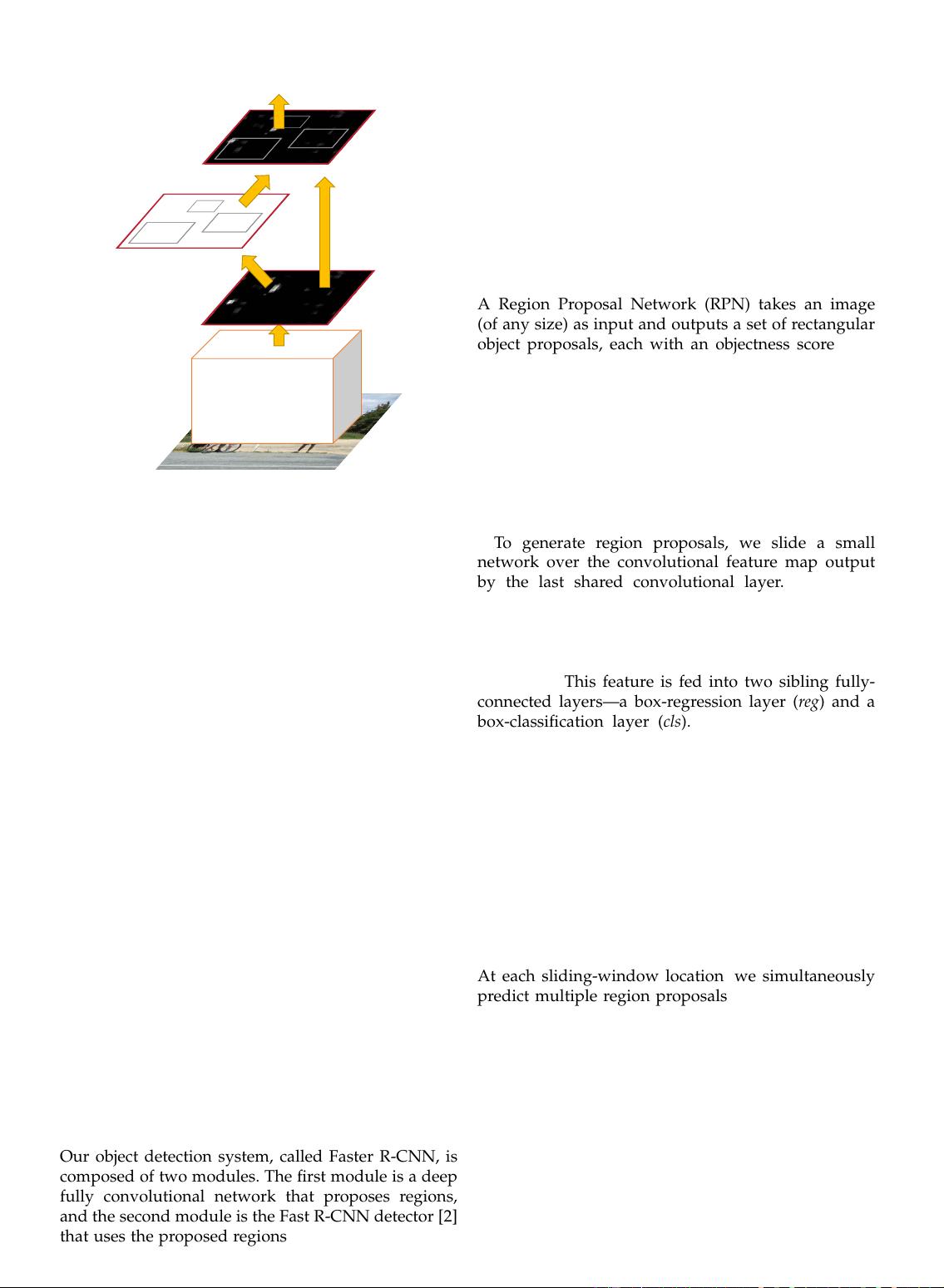

A Region Proposal Network (RPN) takes an image (of any size) as input and outputs a set of rectangular object proposals, each with an objectness score.³ We model this process with a fully convolutional network [7], which we describe in this section. Because our ultimate goal is to share computation with a Fast R-CNN object detection network [2], we assume that both nets share a common set of convolutional layers. In our experiments, we investigate the Zeiler and Fergus model [32] (ZF), which has 5 shareable convolutional layers, and the Simonyan and Zisserman model [3] (VGG-16), which has 13 shareable convolutional layers.

To generate region proposals, we slide a small network over the convolutional feature map output by the last shared convolutional layer. This small network takes as input an n × n spatial window of the input convolutional feature map. Each sliding window is mapped to a lower-dimensional feature (256-d for ZF and 512-d for VGG, with ReLU [33] following). This feature is fed into two sibling fully-connected layers: a box-regression layer (reg) and a box-classification layer (cls). We use n = 3 in this paper, noting that the effective receptive field on the input image is large (171 and 228 pixels for ZF and VGG, respectively). This mini-network is illustrated at a single position in Figure 3 (left). Note that because the mini-network operates in a sliding-window fashion, the fully-connected layers are shared across all spatial locations. This architecture is naturally implemented with an n × n convolutional layer followed by two sibling 1 × 1 convolutional layers (for reg and cls, respectively).
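As a rough illustration of the structure just described, the sketch below implements an n × n convolution followed by ReLU and two sibling 1 × 1 convolutions in plain Python on nested lists. Several things here are assumptions and not taken from the text: the helper names (`conv2d`, `random_conv`, `rpn_head`), the zero padding of n // 2 that keeps the output the same spatial size as the feature map, the random placeholder weights, and the toy sizes (4 input channels, d = 8, k = 3). The paper's settings are 256-d or 512-d features, and k is the number of proposals predicted per location.

```python
import random


def conv2d(x, weight, bias, pad):
    """Stride-1 convolution with zero padding on nested lists.

    x: [C_in][H][W], weight: [C_out][C_in][n][n], bias: [C_out].
    """
    c_in, height, width = len(x), len(x[0]), len(x[0][0])
    n = len(weight[0][0])
    out_h, out_w = height + 2 * pad - n + 1, width + 2 * pad - n + 1
    out = []
    for o in range(len(weight)):
        plane = []
        for i in range(out_h):
            row = []
            for j in range(out_w):
                total = bias[o]
                for c in range(c_in):
                    for di in range(n):
                        for dj in range(n):
                            y, xx = i + di - pad, j + dj - pad
                            if 0 <= y < height and 0 <= xx < width:
                                total += weight[o][c][di][dj] * x[c][y][xx]
                row.append(total)
            plane.append(row)
        out.append(plane)
    return out


def random_conv(c_out, c_in, n, rng):
    """Placeholder weights (small Gaussian values) and zero biases."""
    weight = [[[[rng.gauss(0.0, 0.01) for _ in range(n)] for _ in range(n)]
               for _ in range(c_in)] for _ in range(c_out)]
    return weight, [0.0] * c_out


def rpn_head(feature_map, d, k, rng, n=3):
    """n x n conv -> ReLU -> sibling 1 x 1 convs for reg (4k) and cls (2k)."""
    c_in = len(feature_map)
    w_mid, b_mid = random_conv(d, c_in, n, rng)
    w_reg, b_reg = random_conv(4 * k, d, 1, rng)
    w_cls, b_cls = random_conv(2 * k, d, 1, rng)
    hidden = conv2d(feature_map, w_mid, b_mid, pad=n // 2)
    hidden = [[[max(0.0, v) for v in row] for row in plane] for plane in hidden]
    reg = conv2d(hidden, w_reg, b_reg, pad=0)  # 4k box coordinates per location
    cls = conv2d(hidden, w_cls, b_cls, pad=0)  # 2k object / not-object scores
    return reg, cls


rng = random.Random(0)
# Toy feature map with C=4 channels, H=5, W=6.
feature_map = [[[rng.random() for _ in range(6)] for _ in range(5)] for _ in range(4)]
reg, cls = rpn_head(feature_map, d=8, k=3, rng=rng)
```

Because the same weights are applied at every spatial position, the two 1 × 1 convolutions play the role of the fully-connected reg and cls layers shared across all sliding-window locations.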

3.1.1 Anchors

At each sliding-window location, we simultaneously predict multiple region proposals, where the maximum number of possible proposals for each location is denoted as k. So the reg layer has 4k outputs encoding the coordinates of k boxes, and the cls layer outputs 2k scores that estimate the probability of object or not object for each proposal.⁴ The k proposals are parameterized relative to k reference boxes, which we call

3. “Region” is a generic term and in this paper we only consider rectangular regions, as is common for many methods (e.g., [27], [4], [6]). “Objectness” measures membership to a set of object classes vs. background.

4. For simplicity we implement the cls layer as a two-class softmax layer. Alternatively, one may use logistic regression to produce k scores.
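The two options in footnote 4 are closely related: a two-class softmax over scores (a, b) gives the same object probability as a logistic function applied to the single score b − a. The sketch below checks this numerically. The score values, the (not-object, object) ordering of each pair, and the helper names `softmax2` and `sigmoid` are assumptions made for illustration.

```python
import math


def softmax2(not_obj_score, obj_score):
    """Two-class softmax; returns (p_not_object, p_object)."""
    m = max(not_obj_score, obj_score)  # subtract the max for numerical stability
    e0 = math.exp(not_obj_score - m)
    e1 = math.exp(obj_score - m)
    return e0 / (e0 + e1), e1 / (e0 + e1)


def sigmoid(z):
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical raw cls outputs for k = 3 proposals at one location,
# stored as (not-object score, object score) pairs: 2k numbers in total.
pairs = [(0.2, 1.5), (2.0, -1.0), (0.0, 0.0)]

p_softmax = [softmax2(a, b)[1] for a, b in pairs]   # uses 2k scores
p_logistic = [sigmoid(b - a) for a, b in pairs]     # needs only k scores
```

Under these assumptions the two lists agree up to floating-point error, which is why the logistic variant needs only k outputs per location.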