4 Big Data Mining and Analytics, March 2018, 1(1): 1-18

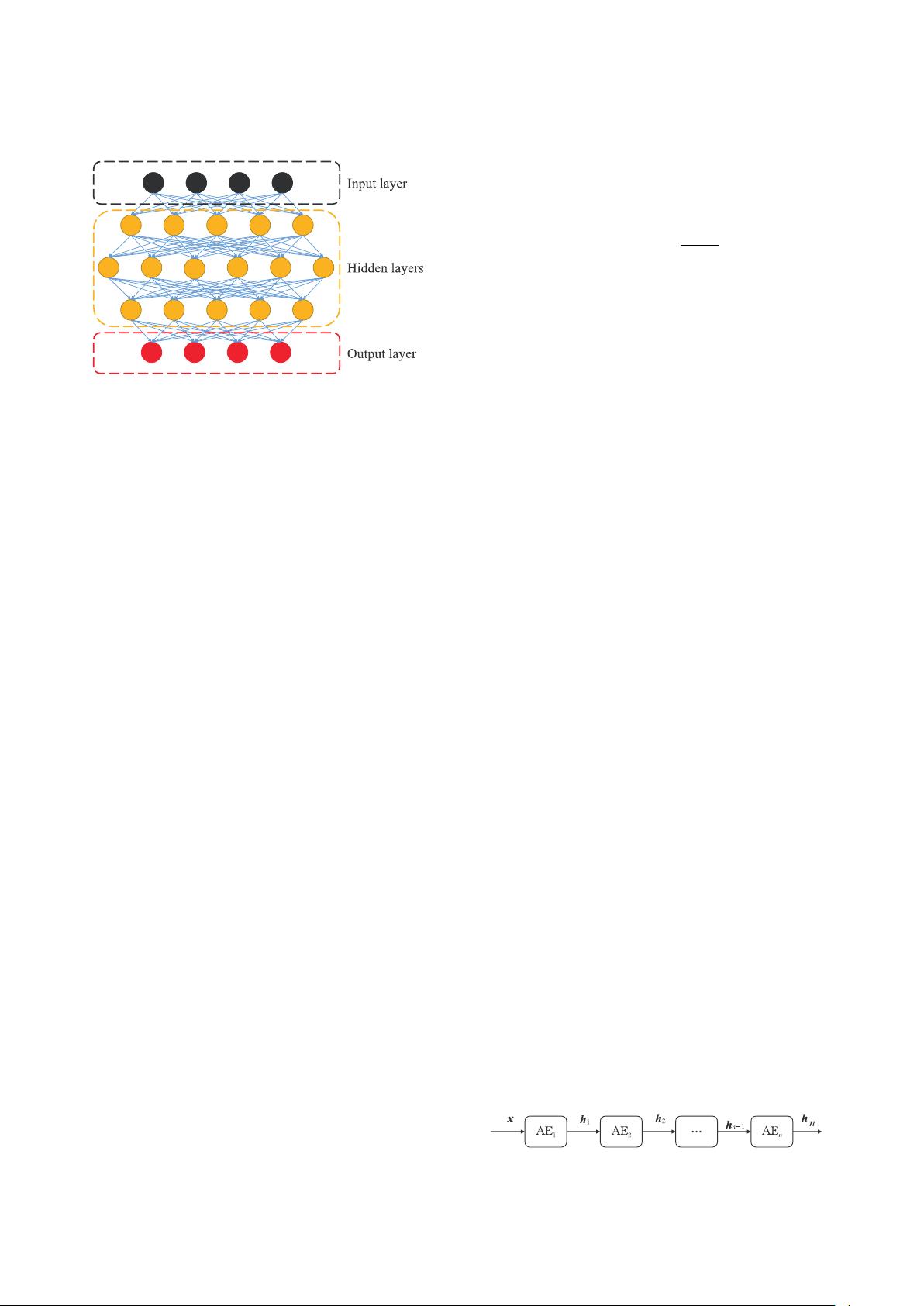

Fig. 1 An example of a deep feedforward network with an input layer, three hidden layers, and an output layer.

Therefore, the deep feedforward network is one of the most primitive deep learning architectures.

2.3 Stacked autoencoders

An autoencoder [33–35] is a simple deep feedforward network that includes an input layer, a hidden layer, and an output layer. Functionally, an autoencoder can be divided into two parts: the encoder and the decoder. The encoder (denoted as $f(x)$) generates a reduced feature representation from an initial input $x$ through a hidden layer $h$, and the decoder (denoted as $g(f(x))$) reconstructs the initial input from the output of the encoder. The network is trained by minimizing the loss function:

$$L(x, g(f(x))) \tag{1}$$

Through these two processes, high-dimensional data can be converted to low-dimensional data. Therefore, the autoencoder is useful as a dimensionality-reduction step in classification and similar tasks.
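The encoder, decoder, and loss in Eq. (1) can be sketched in a few lines of NumPy. This is only an illustrative sketch under assumptions that are not in the text: a sigmoid encoder, a linear decoder, mean squared error as $L$, and the hypothetical names `init_autoencoder`, `W_enc`, and `W_dec`.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_autoencoder(n_in, n_hidden):
    """Small random weights for an n_in -> n_hidden -> n_in autoencoder."""
    return {
        "W_enc": rng.normal(0.0, 0.1, (n_hidden, n_in)),
        "b_enc": np.zeros(n_hidden),
        "W_dec": rng.normal(0.0, 0.1, (n_in, n_hidden)),
        "b_dec": np.zeros(n_in),
    }

def f(params, x):
    """Encoder: map the input x to the hidden code h (sigmoid is an assumption)."""
    return sigmoid(params["W_enc"] @ x + params["b_enc"])

def g(params, h):
    """Decoder: map the hidden code h back to the input space."""
    return params["W_dec"] @ h + params["b_dec"]

def loss(x, x_hat):
    """Reconstruction loss L(x, g(f(x))); mean squared error is an assumption."""
    return float(np.mean((x - x_hat) ** 2))

params = init_autoencoder(n_in=8, n_hidden=3)
x = rng.normal(size=8)
h = f(params, x)        # reduced representation, 3 values instead of 8
x_hat = g(params, h)    # reconstruction of the 8-dimensional input
print(h.shape, x_hat.shape, loss(x, x_hat))
```

Because the hidden layer is smaller than the input, `h` is the low-dimensional representation that a downstream classifier would consume.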

The autoencoder has three common variants: the sparse autoencoder [36, 37], the denoising autoencoder [38–40], and the contractive autoencoder [41, 42], as follows:

Sparse autoencoder: Unlike the basic autoencoder, the sparse autoencoder adds a sparsity constraint $\Omega(h)$ to the hidden layer $h$. Thus, its reconstruction error can be evaluated by

$$L(x, g(f(x))) + \Omega(h) \tag{2}$$

Denoising autoencoder: Unlike sparse autoencoders, which add a sparsity constraint to the hidden layer, denoising autoencoders aim to minimize the loss function:

$$L(x, g(f(\tilde{x}))) \tag{3}$$

where $\tilde{x}$ is a copy of $x$ corrupted by some noise.

Contractive autoencoder: Similar to sparse autoencoders, contractive autoencoders add an explicit regularizer $\Omega(h)$ to the hidden layer $h$ and minimize it. The explicit regularizer is

$$\Omega(h) = \lambda \left\| \frac{\partial f(x)}{\partial x} \right\|_F^2 \tag{4}$$

where the norm term is the squared Frobenius norm [43] of the Jacobian matrix of partial derivatives of the encoder function $f(x)$, and $\lambda$ is a free parameter.
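The three objectives in Eqs. (2) to (4) can be compared side by side in a short NumPy sketch. Several choices here are assumptions for illustration only and are not stated in the text: a sigmoid encoder with a linear decoder, squared error as $L$, an L1 penalty as the sparsity constraint $\Omega(h)$, additive Gaussian noise as the corruption, and the contractive penalty added to the reconstruction loss.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical toy model: sigmoid encoder f and linear decoder g.
n_in, n_hidden = 6, 3
W_enc = rng.normal(0.0, 0.5, (n_hidden, n_in))
b_enc = np.zeros(n_hidden)
W_dec = rng.normal(0.0, 0.5, (n_in, n_hidden))
b_dec = np.zeros(n_in)

def f(x):
    return sigmoid(W_enc @ x + b_enc)

def g(h):
    return W_dec @ h + b_dec

def L(x, x_hat):
    """Reconstruction loss (squared error is an assumption)."""
    return float(np.sum((x - x_hat) ** 2))

def sparse_objective(x, weight=0.1):
    """Eq. (2): L(x, g(f(x))) + Omega(h), with Omega(h) = weight * ||h||_1 (assumed)."""
    h = f(x)
    return L(x, g(h)) + weight * float(np.sum(np.abs(h)))

def denoising_objective(x, noise_std=0.1):
    """Eq. (3): encode a corrupted copy of x, but compare against the clean x."""
    x_tilde = x + rng.normal(0.0, noise_std, size=x.shape)
    return L(x, g(f(x_tilde)))

def contractive_penalty(x, lam=0.1):
    """Eq. (4): lam * ||df(x)/dx||_F^2 for the sigmoid encoder above."""
    h = f(x)
    # For h = sigmoid(W x + b), the Jacobian is diag(h * (1 - h)) @ W.
    jacobian = (h * (1.0 - h))[:, None] * W_enc
    return lam * float(np.sum(jacobian ** 2))

def contractive_objective(x, lam=0.1):
    """Reconstruction loss plus the contractive penalty (assumed combination)."""
    return L(x, g(f(x))) + contractive_penalty(x, lam)

x = rng.normal(size=n_in)
print(sparse_objective(x), denoising_objective(x), contractive_objective(x))
```

The closed-form Jacobian holds only for this particular sigmoid encoder; a different encoder would need its own derivative or automatic differentiation.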

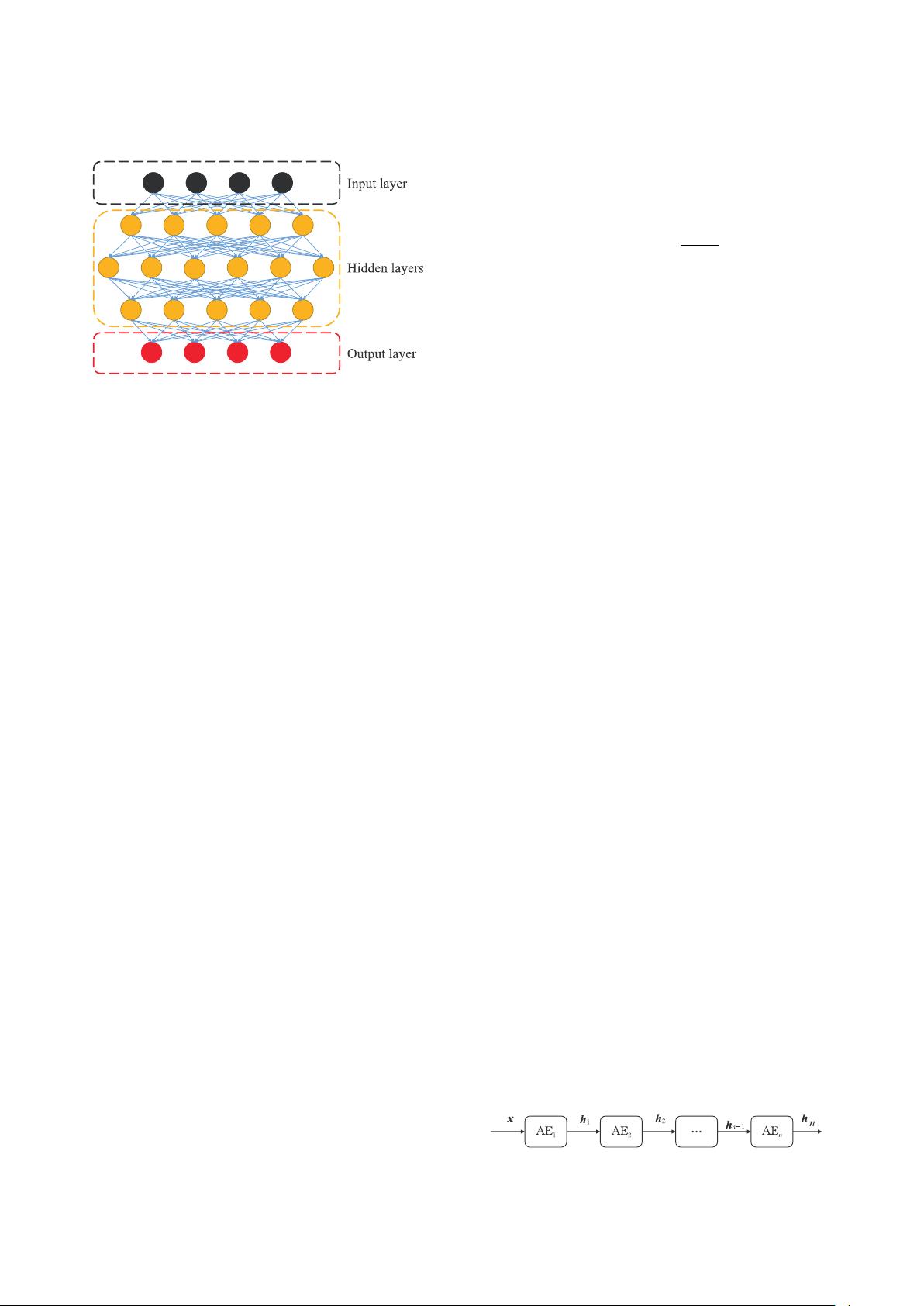

A stacked autoencoder [31, 44] is a neural network with multiple autoencoder layers, as shown in Fig. 2. In a stacked autoencoder, the input of each layer comes from the output of the previous layer. A single autoencoder usually consists of only three layers and therefore does not have a deep learning architecture, whereas a stacked autoencoder obtains a deep architecture by stacking several autoencoders. It is worth mentioning that the stacked autoencoder can only be trained layer by layer. For example, to train a network with an $n \to m \to k$ architecture using a stacked autoencoder, we must first train the network $n \to m \to n$ to get the transformation $n \to m$, then train the network $m \to k \to m$ to get the transformation $m \to k$, and finally stack the two transformations to form the stacked autoencoder (i.e., $n \to m \to k$). This process is also called layer-wise unsupervised pre-training [17].
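The layer-by-layer procedure for the $n \to m \to k$ example can be sketched as follows. This is a minimal illustration, not the method of any cited work: the tanh encoder, linear decoder, mean squared error, plain gradient descent, and the helper names `train_autoencoder`, `encode`, and `stacked_encode` are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

def train_autoencoder(X, n_hidden, epochs=200, lr=0.05):
    """Train an n_in -> n_hidden -> n_in autoencoder on the rows of X with
    gradient descent on squared error, then return only the encoder half."""
    n_samples, n_in = X.shape
    W_enc = rng.normal(0.0, 0.1, (n_in, n_hidden))
    b_enc = np.zeros(n_hidden)
    W_dec = rng.normal(0.0, 0.1, (n_hidden, n_in))
    b_dec = np.zeros(n_in)
    for _ in range(epochs):
        H = np.tanh(X @ W_enc + b_enc)           # encoder output
        X_hat = H @ W_dec + b_dec                # decoder reconstruction
        err = (X_hat - X) / n_samples            # d(loss)/d(X_hat), loss = 0.5 * mean sq. error
        grad_W_dec = H.T @ err
        grad_b_dec = err.sum(axis=0)
        dH = (err @ W_dec.T) * (1.0 - H ** 2)    # backpropagate through tanh
        grad_W_enc = X.T @ dH
        grad_b_enc = dH.sum(axis=0)
        W_enc -= lr * grad_W_enc
        b_enc -= lr * grad_b_enc
        W_dec -= lr * grad_W_dec
        b_dec -= lr * grad_b_dec
    return W_enc, b_enc                          # the decoder is discarded

def encode(X, encoder):
    W, b = encoder
    return np.tanh(X @ W + b)

def stacked_encode(X, encoders):
    """Apply the pre-trained encoders in order: n -> m -> k."""
    for encoder in encoders:
        X = encode(X, encoder)
    return X

n, m, k = 10, 6, 3
X = rng.normal(size=(256, n))            # synthetic data, for illustration only

enc1 = train_autoencoder(X, m)           # train n -> m -> n, keep n -> m
H1 = encode(X, enc1)                     # output of layer 1 feeds layer 2
enc2 = train_autoencoder(H1, k)          # train m -> k -> m, keep m -> k

Z = stacked_encode(X, [enc1, enc2])      # stacked n -> m -> k transformation
print(Z.shape)                           # (256, 3)
```

Each autoencoder sees only the codes produced by the layer before it, which is what makes the pre-training layer-wise and unsupervised.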

2.4 Deep belief networks

The Boltzmann machine [45–48] is derived from statistical physics and is a modeling method based on energy functions that can describe high-order interactions between variables. Although the Boltzmann machine is relatively complex, it has a relatively complete physical interpretation and a rigorous basis in mathematical statistics. The Boltzmann machine is a symmetrically coupled, stochastic, feedback neural network of binary units, which includes a visible layer and multiple hidden layers. The nodes of the Boltzmann machine can be divided into visible units and hidden units. In a Boltzmann machine, the visible and hidden units are used to represent the random neural network learning model, and the weights between two units in the model are used to represent the correlation between the

Fig. 2 An example of a stacked autoencoder with n autoencoders (i.e., AE$_1$, AE$_2$, ..., AE$_n$).