A Simple but Hard-to-Beat Baseline for Session-based Recommendations

Fajie Yuan∗†

University of Glasgow

Glasgow, UK

f.yuan.1@research.gla.ac.uk

Alexandros Karatzoglou

Telefonica Research

Barcelona, Spain

alexandros.karatzoglou@gmail.com

Ioannis Arapakis

Telefonica Research

Barcelona, Spain

arapakis.ioannis@gmail.com

Joemon M Jose

University of Glasgow

Glasgow, UK

joemon.jose@glasgow.ac.uk

Xiangnan He

National University of Singapore

Singapore

xiangnanhe@gmail.com

ABSTRACT

Convolutional Neural Network (CNN) models have recently been introduced in the domain of top-N session-based recommendations. An ordered collection of past items the user has interacted with in a session (or sequence) is embedded into a 2-dimensional latent matrix and treated as an image. Convolution and pooling operations are then applied to the mapped item embeddings. In this paper, we first examine the typical session-based CNN recommender and show that both the generative model and the network architecture are suboptimal when modeling long-range dependencies in the item sequence. To address these issues, we introduce a simple but very effective generative model that is capable of learning high-level representations from both short- and long-range item dependencies. The network architecture of the proposed model is formed of a stack of holed convolutional layers, which can efficiently increase the receptive fields without relying on the pooling operation. Another contribution is the effective use of the residual block structure in recommender systems, which can ease the optimization of much deeper networks. The proposed generative model attains state-of-the-art accuracy with less training time in the session-based recommendation task. Accordingly, it can be used as a powerful recommendation baseline to beat in the future, especially when there are long sequences of user feedback.

1 INTRODUCTION

Leveraging sequences of user-item interactions (e.g., clicks or purchases) to improve real-world recommender systems has become increasingly popular in recent years. These sequences are automatically generated when users interact with online systems in sessions (e.g., a shopping session or a music listening session). For example, users on Last.fm¹ or Soundcloud² typically enjoy a series of songs during a certain time period without any interruptions, i.e., a listening session. The songs played in one session usually have strong correlations [7], e.g., sharing the same album, artist, or genre. Accordingly, a good recommender system is supposed to generate recommendations by taking advantage of these sequential patterns in the session.

∗ Work performed while at Telefonica Research, Spain.

† Preprint. Work in progress.

¹ https://www.last.fm

² https://www.soundcloud.com

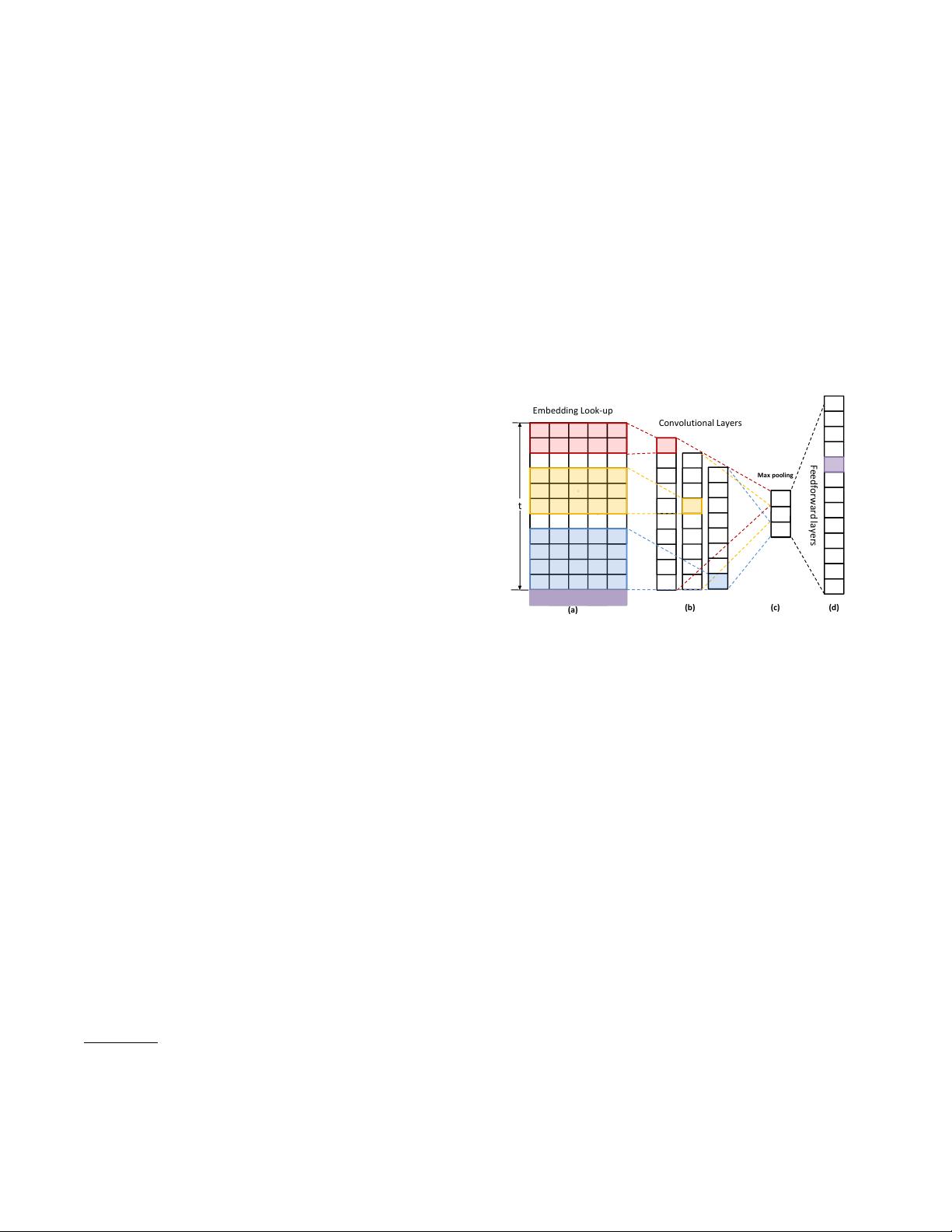

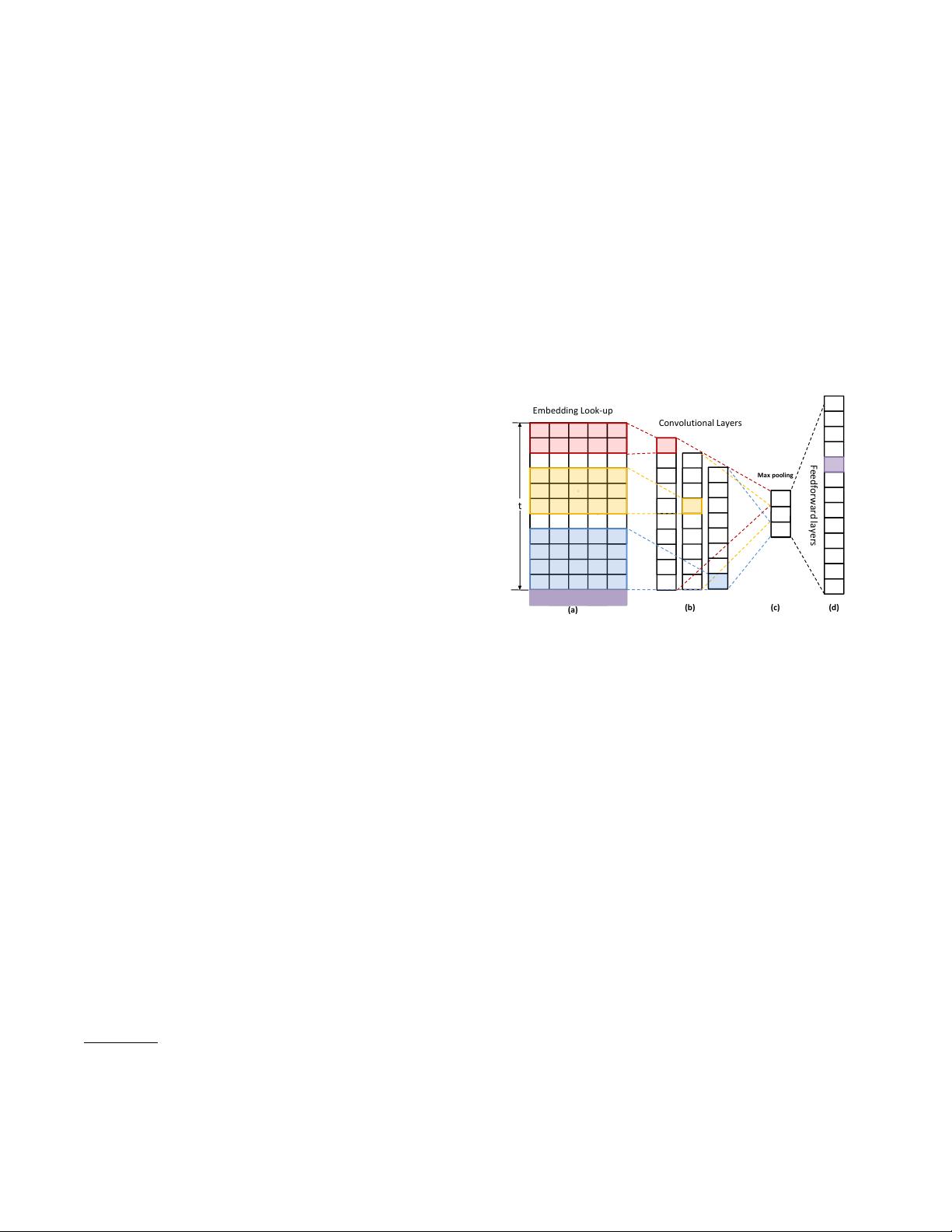

[Figure 1 panels: (a) Embedding Look-up, (b) Convolutional Layers, (c) Max pooling, (d) Feedforward layers; axis label: t]

Figure 1: The basic structure of Caser [34]. The red, yellow and blue regions denote 2 × k, 3 × k and 4 × k convolution filters respectively, where k = 5. The purple row stands for the true next item.

A class of models often employed for these sequences of item interactions is Recurrent Neural Networks (RNNs). RNNs typically generate a softmax output in which high probabilities represent the most relevant recommendations. While effective, these RNN-based models, such as [3, 17, 28], depend on a hidden state of the entire past and therefore cannot fully utilize parallel computation within a sequence [9]. Thus their speed is limited in both training and evaluation.
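To make the sequential bottleneck concrete, the following is a minimal sketch of a plain tanh RNN that scores the next item with a softmax. It is an illustration under assumptions, not the architecture used in [3, 17, 28]: the function names, the toy sizes and the random weights are all hypothetical, and pure Python is used only to keep the example dependency-free.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def rnn_next_item_probs(session, emb, w_in, w_rec, w_out):
    """Run a plain tanh RNN over a session and score every item.

    The loop is inherently sequential: each step needs the hidden
    state produced by the previous step.
    """
    hidden = [0.0] * len(w_rec)
    for item in session:
        from_input = matvec(w_in, emb[item])
        from_past = matvec(w_rec, hidden)
        hidden = [math.tanh(a + b) for a, b in zip(from_input, from_past)]
    return softmax(matvec(w_out, hidden))


def random_matrix(rows, cols, rng):
    """Hypothetical helper: small random weights for the toy example."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
n_items, k, h = 6, 4, 3  # assumed toy sizes: items, embedding dim, hidden dim
emb = random_matrix(n_items, k, rng)
w_in = random_matrix(h, k, rng)
w_rec = random_matrix(h, h, rng)
w_out = random_matrix(n_items, h, rng)

probs = rnn_next_item_probs([2, 0, 5], emb, w_in, w_rec, w_out)
# Top-N recommendation: the N items with the highest softmax probability.
top_n = sorted(range(n_items), key=lambda i: probs[i], reverse=True)[:3]
```

Because every iteration of the loop consumes the hidden state from the previous one, the time steps of a single session cannot be computed in parallel, which is the limitation the paragraph above describes.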

By contrast, training CNNs does not depend on the computations of the previous time step and therefore allows parallelization over every element in a sequence. Inspired by the successful use of CNNs in image tasks, a newly proposed sequential recommender, referred to as Caser [34], abandoned RNN structures, proposing instead a convolutional sequence embedding model, and demonstrated that this CNN-based recommender is able to achieve comparable or superior performance to the popular RNN model in the top-N sequential recommendation task. The basic idea of the convolution processing is to treat the t × k embedding matrix as the “image” of the previous t interactions in a k-dimensional latent space and to regard the sequential patterns as local features of the “image”. A max pooling operation that preserves only the maximum value of the convolutional layer is performed to increase the receptive field, as well as to deal with the varying length of input sequences. Fig. 1 depicts the key architecture of Caser.
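The convolution and max pooling steps described above can be sketched in a few lines. This is a simplified illustration rather than the Caser implementation: it covers only filters that span the full embedding width (heights 2, 3 and 4 with k = 5, as in Fig. 1), the ReLU activation is an assumption, and the function names and random values are hypothetical.

```python
import random


def horizontal_conv(image, filt):
    """Slide an h x k filter down a t x k embedding matrix (requires h <= t).

    Returns t - h + 1 ReLU activations, one per window of h
    consecutive items.
    """
    t, h = len(image), len(filt)
    out = []
    for start in range(t - h + 1):
        s = 0.0
        for i in range(h):
            s += sum(x * w for x, w in zip(image[start + i], filt[i]))
        out.append(max(0.0, s))  # ReLU (an assumed activation)
    return out


def max_over_time(activations):
    """Keep only the largest activation, whatever the sequence length."""
    return max(activations)


rng = random.Random(0)
t, k = 6, 5  # assumed toy session length; k = 5 as in Fig. 1
image = [[rng.uniform(-1, 1) for _ in range(k)] for _ in range(t)]
filters = [
    [[rng.uniform(-1, 1) for _ in range(k)] for _ in range(h)]
    for h in (2, 3, 4)
]

conv_lengths = [len(horizontal_conv(image, f)) for f in filters]
features = [max_over_time(horizontal_conv(image, f)) for f in filters]
```

Each filter yields a different number of activations depending on t and its height, but max pooling reduces every one of them to a single value, so the feature vector has one entry per filter regardless of session length. The price is that the position of the strongest activation, and every weaker one, is discarded.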

arXiv:1808.05163v3 [cs.IR] 30 Aug 2018