Written in closed form, the coefficients of B are equal to:

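$$
b_{i,j} =
\begin{cases}
1 & \text{for } i = 1,\ j = 1 \\
\cos\theta_{i,j} & \text{for } i \geq 2,\ j = 1 \\
\cos\theta_{i,j} \cdot \prod_{k=1}^{j-1} \sin\theta_{i,k} & \text{for } 2 \leq j \leq i - 1 \\
\prod_{k=1}^{j-1} \sin\theta_{i,k} & \text{for } i = j,\ 2 \leq i, j \leq n \\
0 & \text{for } i + 1 \leq j \leq n
\end{cases}
\tag{2}
$$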

The spherical parameterization of $B$ makes it possible to describe a correlation matrix using only $\frac{n(n-1)}{2}$ parameters, namely the angles $\theta_{i,j}$ for $2 \leq i \leq n$, $1 \leq j < i$.
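The closed form above can be checked numerically. The following is a minimal sketch, not the authors' implementation: the helper names `spherical_factor` and `gram` are hypothetical, indices are 0-based, and the angles are assumed to lie in $[0, \pi]$. It builds $B$ from a table of angles and forms $C = BB^T$, which should have a unit diagonal because each row of $B$ has unit norm.

```python
import math
import random


def spherical_factor(theta):
    """Build the lower-triangular matrix B from angles theta[i][j], j < i (0-based)."""
    n = len(theta)
    B = [[0.0] * n for _ in range(n)]
    B[0][0] = 1.0
    for i in range(1, n):
        sin_prod = 1.0  # running product of sin(theta[i][k]) for k < j
        for j in range(i):
            B[i][j] = math.cos(theta[i][j]) * sin_prod
            sin_prod *= math.sin(theta[i][j])
        B[i][i] = sin_prod  # diagonal entry: product of all sines of the row
    return B


def gram(B):
    """Return B B^T as a list of lists."""
    n = len(B)
    return [[sum(B[i][k] * B[j][k] for k in range(n)) for j in range(n)]
            for i in range(n)]


random.seed(0)
n = 4
# Assumption: angles drawn uniformly in [0, pi]; only entries with j < i are used.
theta = [[random.uniform(0.0, math.pi) for _ in range(n)] for _ in range(n)]
B = spherical_factor(theta)
C = gram(B)  # symmetric, unit diagonal, entries in [-1, 1]
```

Only the $\frac{n(n-1)}{2}$ angles below the diagonal influence the result, which matches the parameter count stated above.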

Numpacharoen and Atsawarungruangkit [36] introduced an

algorithm to generate random correlation matrices based on the

spherical parameterization, building on prior work [35], [37].

The key insight is that the correlation coefficients $c_{i,j}$ can be expressed as sums of products of cosines and sines of the angles $\theta_{i,j}$, by expanding $c_{i,j} = (BB^T)_{i,j}$. As a result, each $c_{i,j}$ lies within boundaries determined by the angles $\theta_{p,q}$ for $1 \leq p \leq i$ and $1 \leq q < j$. This insight can be used to generate a valid correlation matrix by sampling the correlation coefficients one by one, uniformly within the boundaries derived from the previously sampled values.
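To make the boundary idea concrete, consider the smallest non-trivial case $n = 3$. Expanding $BB^T$ gives $c_{2,1} = \cos\theta_{2,1}$, $c_{3,1} = \cos\theta_{3,1}$ and $c_{3,2} = \cos\theta_{2,1}\cos\theta_{3,1} + \sin\theta_{2,1}\sin\theta_{3,1}\cos\theta_{3,2}$. Assuming angles in $[0, \pi]$ (so that the sines are non-negative), $c_{3,2}$ is confined to $c_{2,1}c_{3,1} \pm \sqrt{(1 - c_{2,1}^2)(1 - c_{3,1}^2)}$. The sketch below illustrates only this special case; the function names are hypothetical and the general procedure is the one given in [36].

```python
import math
import random


def sample_3x3_correlation(rng):
    """Sample a 3x3 correlation matrix coefficient by coefficient."""
    # First column: unconstrained, uniform in [-1, 1].
    c21 = rng.uniform(-1.0, 1.0)
    c31 = rng.uniform(-1.0, 1.0)
    # Boundaries of c32 induced by the two values already sampled.
    half_width = math.sqrt((1.0 - c21 ** 2) * (1.0 - c31 ** 2))
    low = c21 * c31 - half_width
    high = c21 * c31 + half_width
    c32 = rng.uniform(low, high)
    C = [[1.0, c21, c31],
         [c21, 1.0, c32],
         [c31, c32, 1.0]]
    return C, (low, high)


def det3(M):
    """Determinant of a 3x3 matrix (non-negative for a valid correlation matrix)."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


C3, bounds = sample_3x3_correlation(random.Random(0))
```

Any value of $c_{3,2}$ inside the interval yields a non-negative determinant, so the sampled matrix is positive semi-definite by construction.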

Algorithm 1 provides a high-level description of the procedure to generate a random correlation matrix using the boundaries of its coefficients [36]. The correlation coefficients are sampled in order, from top to bottom and from left to right. The first column of the matrix is initialized uniformly at random within its boundaries, namely the interval $[-1, 1]$, i.e., $c_{i,1} \sim U([-1, 1])$ for $i = 2, \ldots, n$ (lines 4-5). When sampling the correlation $c_{i,j}$ with $i > 1$, $j \geq 2$, the previously sampled correlation coefficients restrict the values of the angles $\theta_{p,q}$, for $1 \leq p \leq i$ and $1 \leq q < j$, from which the boundaries for $c_{i,j}$ are derived. For complete details about this procedure, we refer the reader to Algorithm 4 in the Appendix. To ensure that all correlation coefficients in the matrix follow approximately the same distribution (i.e., that their CDFs are almost identical), the algorithm shuffles the variables at the end (lines 9-12).
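The final shuffling step amounts to applying the same permutation to the rows and to the columns, which relabels the variables without changing the set of pairwise correlations. A minimal sketch, with a hypothetical helper `shuffle_variables` and a hand-picked example matrix:

```python
import random


def shuffle_variables(C, rng):
    """Apply one random permutation sigma to both rows and columns of C."""
    n = len(C)
    sigma = list(range(n))
    rng.shuffle(sigma)
    shuffled = [[C[sigma[i]][sigma[j]] for j in range(n)] for i in range(n)]
    return shuffled, sigma


# Example matrix chosen by hand for illustration only.
C_example = [[1.0, 0.2, -0.5],
             [0.2, 1.0, 0.3],
             [-0.5, 0.3, 1.0]]
C_shuffled, sigma = shuffle_variables(C_example, random.Random(0))
```

Because rows and columns are permuted together, the result stays symmetric with a unit diagonal and remains a valid correlation matrix.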

B. Copulas

We describe the Gaussian copula generative model, which can be used to generate datasets of $n$ variables with a variety of dependencies between the variables.

Marginal distribution. Consider a random variable $X$ taking values in $\mathbb{R}$. We denote by marginal distribution its cumulative distribution function (CDF): $F : \mathbb{R} \to [0, 1]$, $F(x) = P(X \leq x)$. One example is the marginal of a standard normal distribution, which we denote by $\Phi$:

$$
\Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} e^{-\frac{u^2}{2}} \, du
$$
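For numerical work, $\Phi$ can be evaluated through the error function using the identity $\Phi(x) = \frac{1}{2}\left(1 + \operatorname{erf}(x / \sqrt{2})\right)$. The short sketch below uses only the Python standard library; the function name `standard_normal_cdf` is chosen for illustration.

```python
import math
from statistics import NormalDist


def standard_normal_cdf(x):
    """Phi(x) computed from the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


# Cross-check against the standard library's own normal distribution.
reference = NormalDist(mu=0.0, sigma=1.0)
max_gap = max(abs(standard_normal_cdf(x) - reference.cdf(x))
              for x in (-3.0, -1.0, 0.0, 0.5, 1.96, 4.0))
```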

Gaussian multivariate distribution. We denote by

N(0, Σ) the Gaussian multivariate distribution with mean 0

and covariance matrix Σ. Its CDF is equal to:

$$
\Phi_\Sigma(x_1, \ldots, x_n) = \int_{-\infty}^{x_1} \cdots \int_{-\infty}^{x_n} \frac{e^{-\frac{1}{2} x^T \Sigma^{-1} x}}{\sqrt{(2\pi)^n \det(\Sigma)}} \, dx
$$

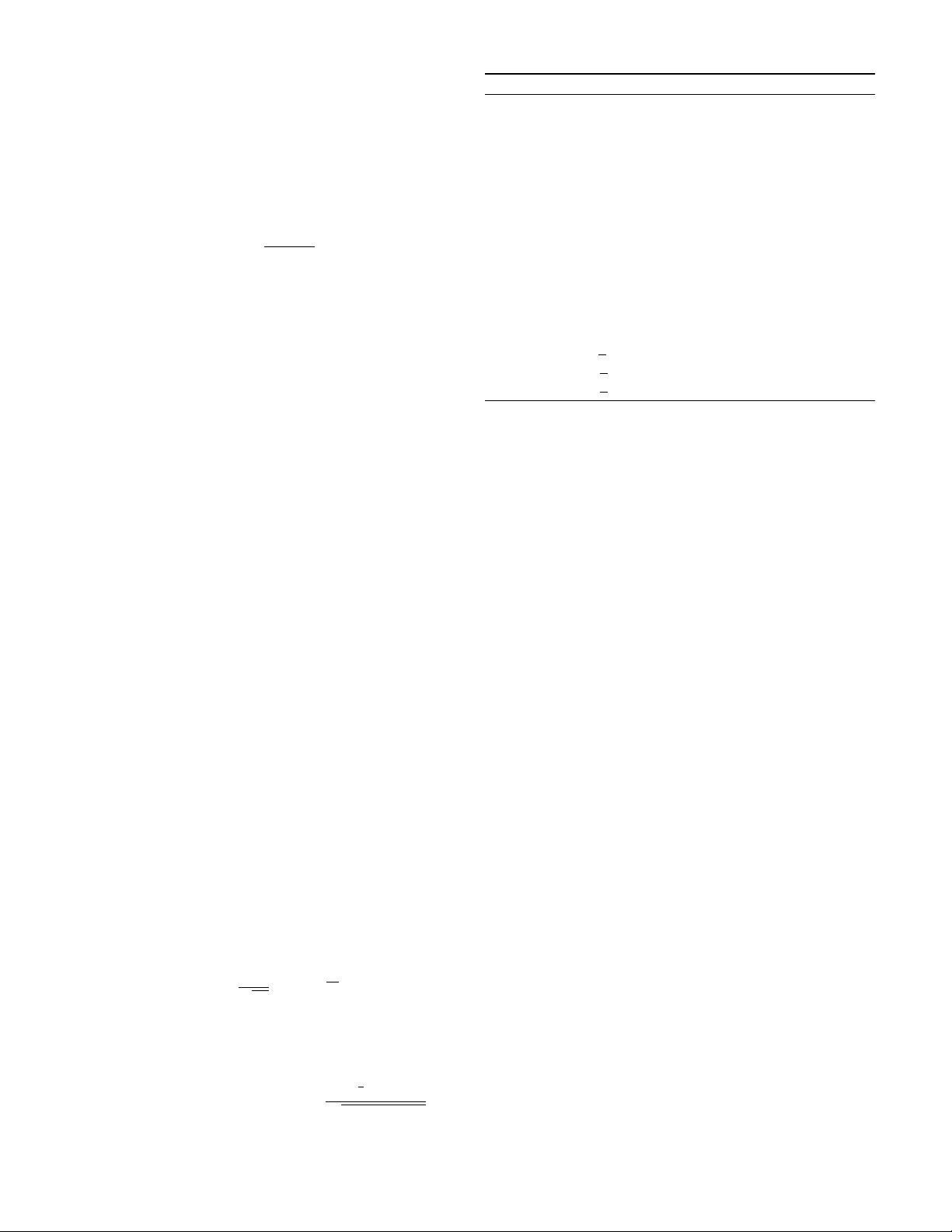

Algorithm 1 GENERATERANDOMCORRELATIONMATRIX

1: Inputs:

n: The number of variables.

K: A threshold for computational stability.

2: Output:

$C \in \mathbb{R}^{n \times n}$: A valid triangular correlation matrix.

3: // Randomly initialize $C$'s first column.

4: for $i \in \{2, \ldots, n\}$ do

5: $c_{\sigma(i),1} \leftarrow U([-1, 1])$ // $\cos\theta_{\sigma(i),1}$

6: end for

7: constraints $\leftarrow (c_{\sigma(2),1}, \ldots, c_{\sigma(n),1})$

8: C ← FILLCORRELATIONMATRIX(n, K, constraints)

9: // Shuffle the variables.

10: σ ← random permutation([1, . . . , n])

11: C ← reorder rows(σ)

12: C ← reorder columns(σ)

Copulas. Copulas denote the set of multivariate cumulative distribution functions $F_C : [0, 1]^n \to [0, 1]$ over continuous random vectors $(X_1, \ldots, X_n)$ such that the marginal of each variable satisfies $F_i(x) = x$, i.e., each variable is uniformly distributed in the interval $[0, 1]$. Sklar's theorem [38], [39] states the fundamental result that for any random variables $X_1, \ldots, X_n$ with continuous marginals $F_1, \ldots, F_n$, their joint probability distribution can be described in terms of the marginals and a copula $F_C$ modeling the dependencies between the variables.

To see why, let us consider a continuous random vector $(X_1, \ldots, X_n)$ with CDF $F$ and marginals $F_i$. Using the fact that the random variable $U_i = F_i(X_i)$ is uniformly distributed in the interval $[0, 1]$, it follows that the CDF of $(U_1, \ldots, U_n)$ is equal to $P(U_1 \leq u_1, \ldots, U_n \leq u_n) = P(X_1 \leq F_1^{-1}(u_1), \ldots, X_n \leq F_n^{-1}(u_n))$. As a result, the copula of $(X_1, \ldots, X_n)$ can be written as follows:

$$
F_C(u_1, \ldots, u_n) = F(F_1^{-1}(u_1), \ldots, F_n^{-1}(u_n))
$$
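The derivation relies on the probability integral transform: applying a continuous CDF to its own random variable yields a uniform variable, and applying the inverse CDF to a uniform variable recovers the original distribution. The sketch below illustrates this empirically for a unit-rate exponential distribution, which is an example chosen here for illustration and is not taken from the paper.

```python
import math
import random


def exp_cdf(x):
    """CDF of the exponential distribution with rate 1."""
    return 1.0 - math.exp(-x)


def exp_inv_cdf(u):
    """Inverse CDF (quantile function) of the same distribution."""
    return -math.log(1.0 - u)


rng = random.Random(0)
xs = [rng.expovariate(1.0) for _ in range(20000)]
us = [exp_cdf(x) for x in xs]  # should look uniform on [0, 1]

mean_u = sum(us) / len(us)                                 # close to 0.5
frac_below_quarter = sum(u <= 0.25 for u in us) / len(us)  # close to 0.25
```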

This result can be used to generate samples from F when

both the copula and the marginals of the variables are known.

A variety of copula-based generative models, including Gaussian copulas, which we use in this paper, assume that the copula belongs to a given restricted family.

Gaussian copulas. Given an n-dimensional correlation

matrix C, its Gaussian copula is defined as:

$$
F_C(u_1, \ldots, u_n) = \Phi_C(\Phi^{-1}(u_1), \ldots, \Phi^{-1}(u_n)) \tag{3}
$$

$$
\forall u = (u_1, \ldots, u_n) \in [0, 1]^n \tag{4}
$$
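Sampling from a distribution whose dependencies follow a Gaussian copula is commonly done in three steps: draw $Z \sim N(0, C)$, map each coordinate to a uniform variable $U_i = \Phi(Z_i)$, then apply the inverse CDF of the desired marginal, $Y_i = F_i^{-1}(U_i)$. The sketch below follows this standard construction under stated assumptions; it is not necessarily identical to the paper's own procedure. The helper names, the example matrix, the exponential marginal and the clamping constant `eps` are all illustrative choices, and $C$ is assumed to be positive definite so that its Cholesky factor exists.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # cdf is Phi, inv_cdf is Phi^{-1}


def cholesky(C):
    """Lower-triangular L with L L^T = C (C assumed positive definite)."""
    n = len(C)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(C[i][i] - s)
            else:
                L[i][j] = (C[i][j] - s) / L[j][j]
    return L


def sample_gaussian_copula(L, inverse_cdfs, rng, eps=1e-12):
    """Draw one sample with the given marginals and Gaussian-copula dependence."""
    n = len(L)
    g = [rng.gauss(0.0, 1.0) for _ in range(n)]  # independent N(0, 1)
    z = [sum(L[i][k] * g[k] for k in range(i + 1)) for i in range(n)]  # N(0, C)
    # Clamp away from 0 and 1 so that the inverse CDFs stay finite.
    u = [min(max(STD_NORMAL.cdf(zi), eps), 1.0 - eps) for zi in z]
    return [inv(ui) for inv, ui in zip(inverse_cdfs, u)]


C = [[1.0, 0.8], [0.8, 1.0]]  # illustrative correlation matrix
L = cholesky(C)
# First marginal: standard normal; second marginal: exponential with rate 1.
inverse_cdfs = [STD_NORMAL.inv_cdf, lambda u: -math.log(1.0 - u)]
rng = random.Random(0)
samples = [sample_gaussian_copula(L, inverse_cdfs, rng) for _ in range(2000)]
```

With standard normal marginals for every coordinate, the sample correlation approaches the entries of $C$; with other marginals, such as the exponential one above, it generally differs.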

Alg. 2 describes a procedure to generate a sample $Y = (Y_1, \ldots, Y_n)$ from a distribution satisfying the following two properties: (1) the marginals of the distribution are $F_1, \ldots, F_n$ and (2) its dependencies are given by the Gaussian copula $F_C$. Note that for arbitrary marginals, the correlations between the variables $Y_1, \ldots, Y_n$ are not necessarily equal to the correlations $C$. However, when the marginals are standard normals $F_i = \Phi$, $i = 1, \ldots, n$, the correlations are the same. Some