Fully Convolutional Networks for Semantic Segmentation

Jonathan Long∗, Evan Shelhamer∗, Trevor Darrell

UC Berkeley

{jonlong,shelhamer,trevor}@cs.berkeley.edu

Abstract

Convolutional networks are powerful visual models that yield hierarchies of features. We show that convolutional networks by themselves, trained end-to-end, pixels-to-pixels, exceed the state-of-the-art in semantic segmentation. Our key insight is to build “fully convolutional” networks that take input of arbitrary size and produce correspondingly-sized output with efficient inference and learning. We define and detail the space of fully convolutional networks, explain their application to spatially dense prediction tasks, and draw connections to prior models. We adapt contemporary classification networks (AlexNet [22], the VGG net [34], and GoogLeNet [35]) into fully convolutional networks and transfer their learned representations by fine-tuning [5] to the segmentation task. We then define a skip architecture that combines semantic information from a deep, coarse layer with appearance information from a shallow, fine layer to produce accurate and detailed segmentations. Our fully convolutional network achieves state-of-the-art segmentation of PASCAL VOC (20% relative improvement to 62.2% mean IU on 2012), NYUDv2, and SIFT Flow, while inference takes less than one fifth of a second for a typical image.
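The mean IU figure quoted above is a region intersection-over-union score averaged over classes. As a minimal sketch, assuming the usual definition (per-class true positives divided by the union of ground-truth and predicted pixels, then averaged), it could be computed from a confusion matrix as below. The function names `confusion_matrix` and `mean_iu` are illustrative, and skipping classes that never occur is an assumption of this sketch rather than something stated in the text.

```python
import numpy as np

def confusion_matrix(pred, target, num_classes):
    """Return counts n[i, j]: pixels of true class i predicted as class j."""
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    # Encode each (target, pred) pair as a single index, then histogram.
    idx = target * num_classes + pred
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def mean_iu(conf):
    """Mean intersection over union across classes.

    Assumption of this sketch: classes absent from both the ground truth
    and the prediction (empty union) are left out of the average.
    """
    tp = np.diag(conf).astype(float)
    # union_i = (pixels labeled i) + (pixels predicted i) - (correct pixels of i)
    union = conf.sum(axis=1) + conf.sum(axis=0) - tp
    valid = union > 0
    return float(np.mean(tp[valid] / union[valid]))

# Toy 2x2 label maps with two classes.
target = np.array([[0, 0], [1, 1]])
pred = np.array([[0, 1], [1, 1]])
conf = confusion_matrix(pred, target, num_classes=2)
score = mean_iu(conf)  # class 0: 1/2, class 1: 2/3
```

In the toy example one of the two class-0 pixels is mislabeled, so class 0 scores 1/2, class 1 scores 2/3, and the mean is 7/12.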

1. Introduction

Convolutional networks are driving advances in recognition. Convnets are not only improving for whole-image classification [22, 34, 35], but also making progress on local tasks with structured output. These include advances in bounding box object detection [32, 12, 19], part and keypoint prediction [42, 26], and local correspondence [26, 10].

The natural next step in the progression from coarse to fine inference is to make a prediction at every pixel. Prior approaches have used convnets for semantic segmentation [30, 3, 9, 31, 17, 15, 11], in which each pixel is labeled with the class of its enclosing object or region, but with shortcomings that this work addresses.

∗ Authors contributed equally

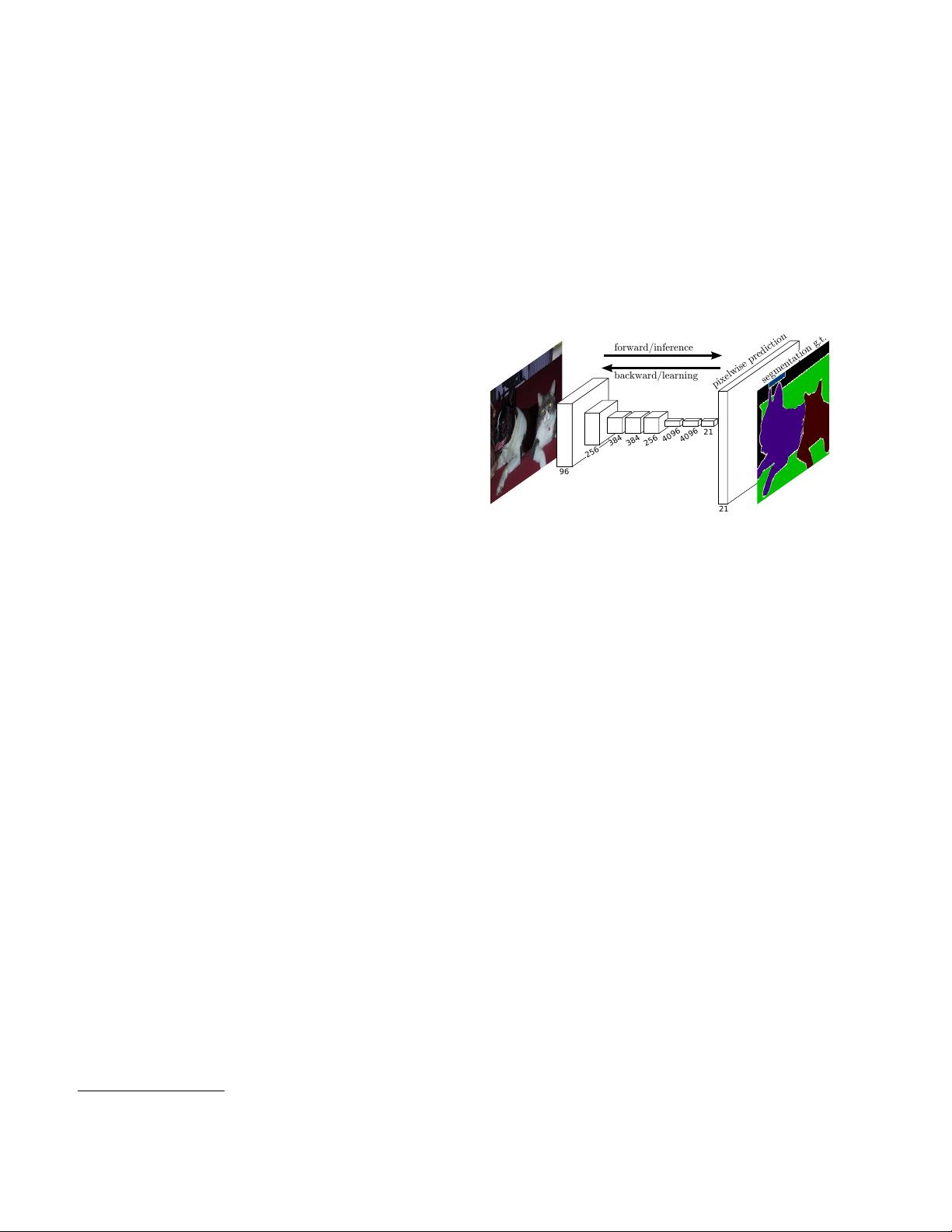

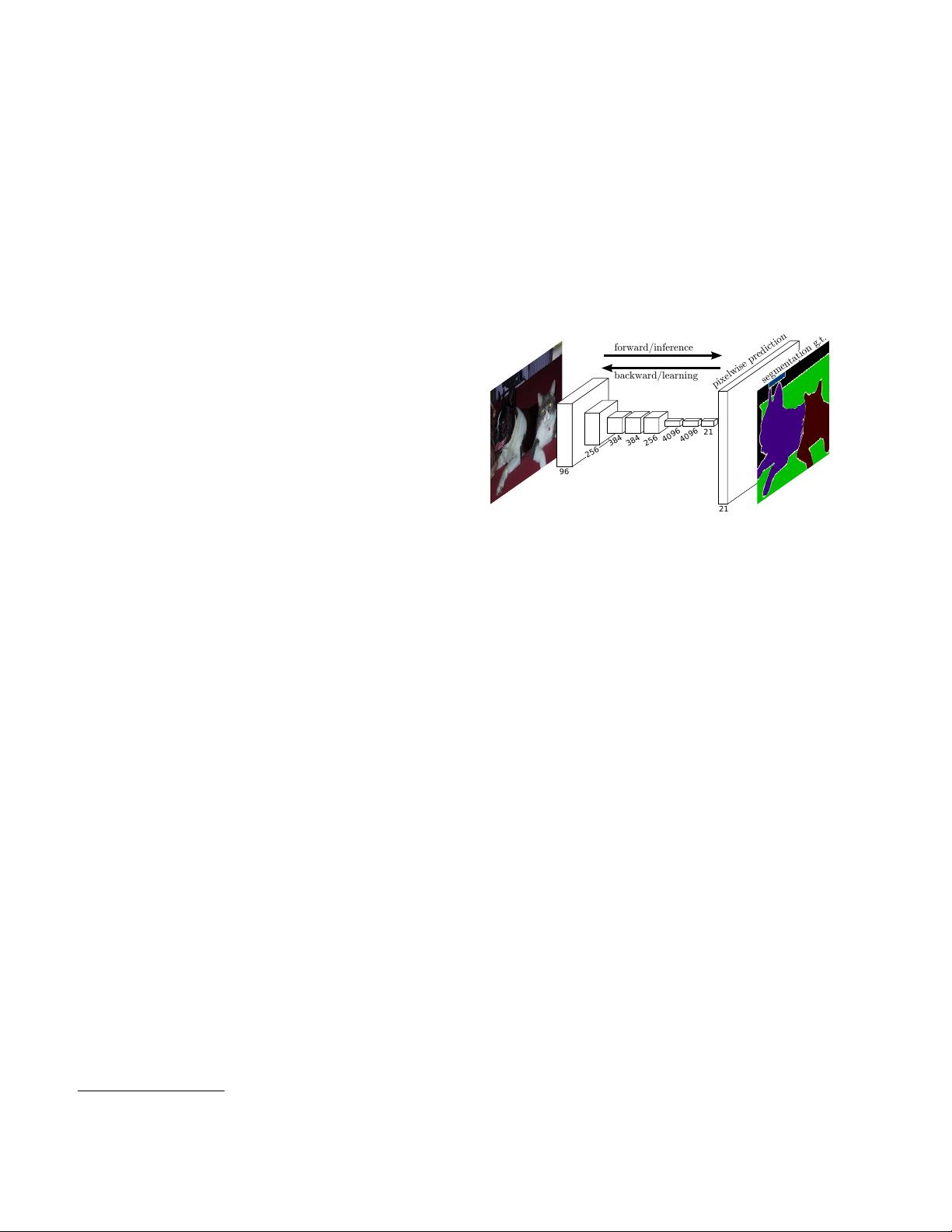

[Figure 1 labels: forward/inference, backward/learning, pixelwise prediction, segmentation g.t.]

Figure 1. Fully convolutional networks can efficiently learn to make dense predictions for per-pixel tasks like semantic segmentation.

We show that a fully convolutional network (FCN) trained end-to-end, pixels-to-pixels on semantic segmentation exceeds the state-of-the-art without further machinery. To our knowledge, this is the first work to train FCNs end-to-end (1) for pixelwise prediction and (2) from supervised pre-training. Fully convolutional versions of existing networks predict dense outputs from arbitrary-sized inputs. Both learning and inference are performed whole-image-at-a-time by dense feedforward computation and backpropagation. In-network upsampling layers enable pixelwise prediction and learning in nets with subsampled pooling.
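To illustrate how a fully convolutional version of an existing network can predict dense outputs from arbitrary-sized inputs, the following NumPy sketch treats a fully connected layer as a convolution whose kernel covers its entire fixed-size input. The layer sizes and the helper names `fc_layer` and `conv_layer` are hypothetical and chosen only for illustration; this is not the authors' implementation, and a real network would use an optimized convolution routine rather than explicit loops.

```python
import numpy as np

def fc_layer(x, weights, bias):
    """Fully connected layer on a fixed-size input x of shape (C, k, k)."""
    return weights.reshape(weights.shape[0], -1) @ x.ravel() + bias

def conv_layer(x, weights, bias):
    """Stride-1 'valid' cross-correlation.

    x has shape (C, H, W); weights has shape (D, C, k, k).
    The same weights as fc_layer are slid over every k x k window.
    """
    d, _, k, _ = weights.shape
    out_h = x.shape[1] - k + 1
    out_w = x.shape[2] - k + 1
    flat = weights.reshape(d, -1)
    out = np.empty((d, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[:, i:i + k, j:j + k]
            out[:, i, j] = flat @ patch.ravel() + bias
    return out

rng = np.random.default_rng(0)
C, k, D = 3, 4, 5                          # hypothetical sizes
weights = rng.standard_normal((D, C, k, k))
bias = rng.standard_normal(D)

small = rng.standard_normal((C, k, k))     # the size the FC layer expects
large = rng.standard_normal((C, 7, 9))     # an arbitrary larger input

fc_out = fc_layer(small, weights, bias)          # shape (5,)
conv_small = conv_layer(small, weights, bias)    # shape (5, 1, 1)
conv_large = conv_layer(large, weights, bias)    # shape (5, 4, 6)
```

On the fixed-size input the convolution reproduces the fully connected output exactly, and on the larger input it yields a spatial map in which each location equals the fully connected layer applied to the corresponding window.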

This method is efficient, both asymptotically and absolutely, and precludes the need for the complications in other works. Patchwise training is common [30, 3, 9, 31, 11], but lacks the efficiency of fully convolutional training. Our approach does not make use of pre- and post-processing complications, including superpixels [9, 17], proposals [17, 15], or post-hoc refinement by random fields or local classifiers [9, 17]. Our model transfers recent success in classification [22, 34, 35] to dense prediction by reinterpreting classification nets as fully convolutional and fine-tuning from their learned representations. In contrast, previous works have applied small convnets without supervised pre-training [9, 31, 30].

Semantic segmentation faces an inherent tension between semantics and location: global information resolves what while local information resolves where. Deep feature hierarchies encode location and semantics in a nonlinear
