[Figure 3 heatmap panels, top and bottom rows: ImageNet-A, ImageNet-C, ImageNet-V2, ObjectNet, ImageNet-Vid, YouTube-BB, ImageNet-Vid-W, YouTube-BB-W. x-axis: dataset size (1M, 5M, 13M); y-axis: training steps (112K, 457K, 1120K).]
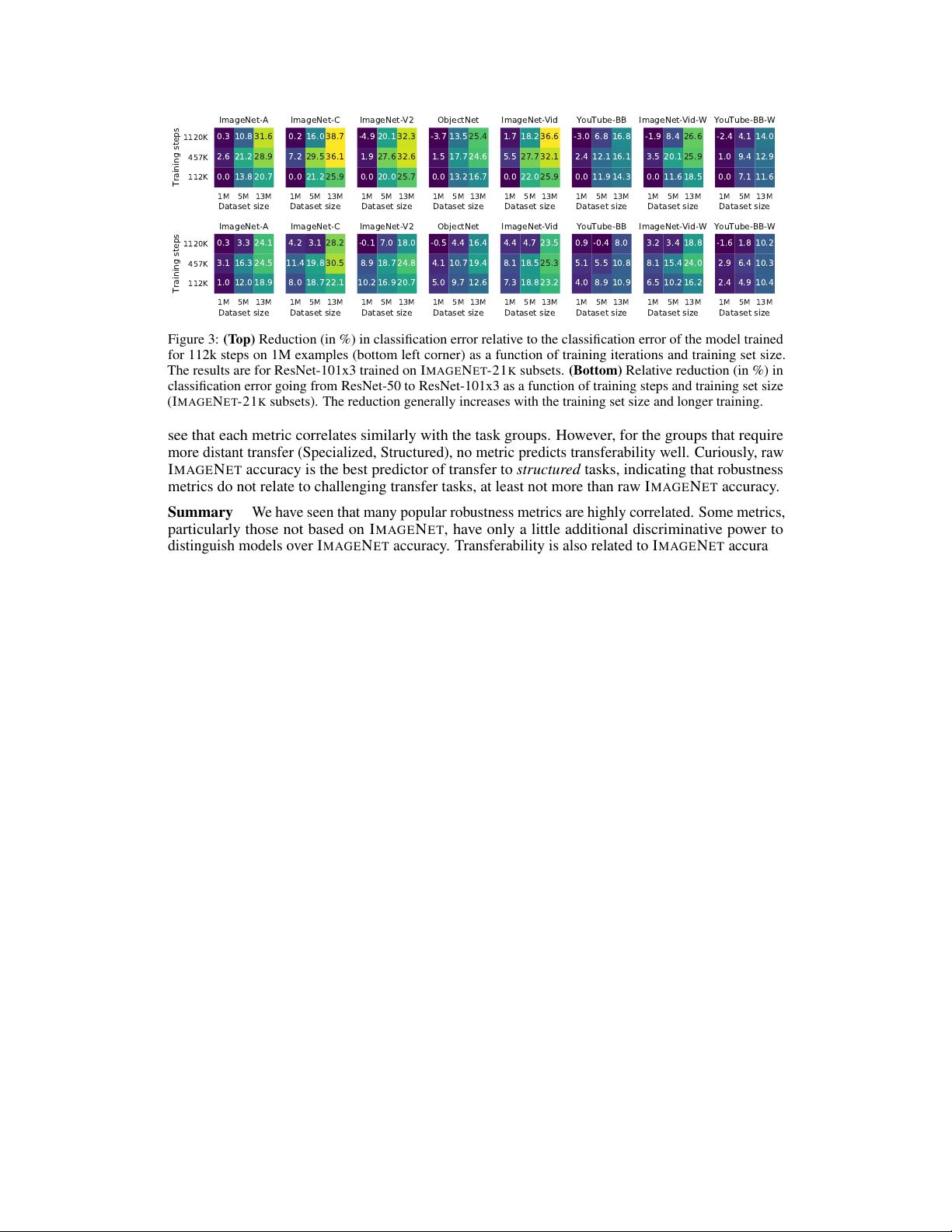

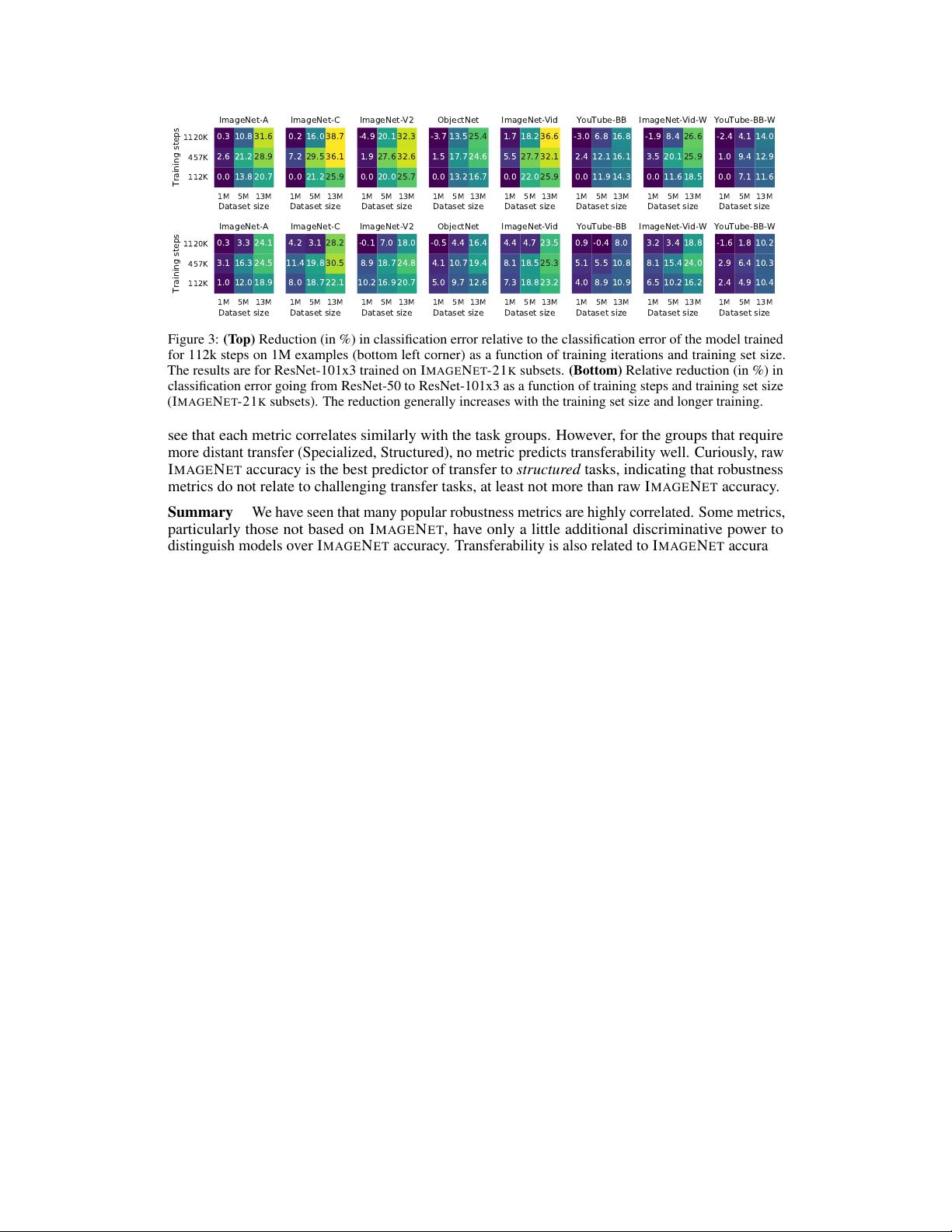

Figure 3: (Top) Reduction (in %) in classification error relative to the classification error of the model trained for 112K steps on 1M examples (bottom left corner) as a function of training iterations and training set size. The results are for ResNet-101x3 trained on IMAGENET-21K subsets. (Bottom) Relative reduction (in %) in classification error going from ResNet-50 to ResNet-101x3 as a function of training steps and training set size (IMAGENET-21K subsets). The reduction generally increases with the training set size and longer training.

see that each metric correlates similarly with the task groups. However, for the groups that require more distant transfer (Specialized, Structured), no metric predicts transferability well. Curiously, raw IMAGENET accuracy is the best predictor of transfer to structured tasks, indicating that robustness metrics do not relate to challenging transfer tasks, at least not more than raw IMAGENET accuracy.

Summary

We have seen that many popular robustness metrics are highly correlated. Some metrics, particularly those not based on IMAGENET, add only a little discriminative power beyond IMAGENET accuracy for distinguishing models. Transferability is also related to IMAGENET accuracy, and hence to robustness. We observe that, while the two are correlated, transfer highlights failures that are somewhat independent of robustness. Further, no particular robustness metric appears to correlate better with any particular group of transfer tasks than IMAGENET accuracy does. Since all of these metrics seem closely linked, we next investigate, on the newer robustness benchmarks, strategies known to be effective for IMAGENET and transfer learning.

4 The effectiveness of scale for OOD generalization

Increasing the scale of pre-training data, model architecture, and training steps has recently led to diminishing improvements in IMAGENET accuracy. By contrast, it has recently been established that scaling along these axes can lead to substantial improvements in transfer learning performance [34, 58]. In the context of robustness, this type of scaling has been explored less. While some results suggest that scale improves robustness [25, 50, 67, 61], no principled study decoupling the different scale axes has been performed. Given the strong correlation between transfer performance and robustness, this motivates a systematic investigation of the effects of pre-training data size, model architecture size, training steps, and input resolution.

4.1 Effect of model size, training set size, and training schedule

We consider the standard IMAGENET training setup [23] as a baseline, and scale up the training accordingly. To study the impact of dataset size, we consider the IMAGENET-21K [11] and JFT [55] datasets, as pre-training on either of them has shown strong transfer learning performance [34]. We scale from the IMAGENET training set size (1.28M images) to the IMAGENET-21K training set size (13M images, about 10 times larger than IMAGENET). To explore the effect of model size, we use a ResNet-50 as well as the deeper and 3× wider ResNet-101x3 model. We further investigate the impact of the training schedule, as larger datasets are known to benefit from longer training for transfer learning [34]. To disentangle the impact of dataset size and training schedule, we train the models for every pair of dataset size and schedule.
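The full-factorial design described above can be sketched as a simple enumeration. This is only an illustrative sketch: the dataset sizes and step counts are taken from the axis ticks of Figure 3 (IMAGENET-21K subsets), while the names `experiment_grid`, `DATASET_SIZES`, `TRAINING_STEPS`, and `MODELS` are hypothetical and not from the paper. The JFT experiments are not covered here.

```python
from itertools import product

# Values read off the Figure 3 axes (IMAGENET-21K subsets); the variable
# names are hypothetical and chosen only for this illustration.
DATASET_SIZES = ["1M", "5M", "13M"]
TRAINING_STEPS = ["112K", "457K", "1120K"]
MODELS = ["ResNet-50", "ResNet-101x3"]


def experiment_grid():
    """Return one configuration per (model, dataset size, schedule) triple."""
    return [
        {"model": model, "dataset_size": size, "steps": steps}
        for model, size, steps in product(MODELS, DATASET_SIZES, TRAINING_STEPS)
    ]


if __name__ == "__main__":
    for config in experiment_grid():
        print(config)
```

Crossing every dataset size with every schedule is what allows the two effects to be separated: holding one axis fixed and varying the other isolates its contribution.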

We fine-tune the trained models to IMAGENET using the BiT HyperRule [34], and assess their OOD generalization performance in the next section. Throughout, we report the reduction in classification error relative to the model that was trained on the smallest number of examples for the fewest iterations, and hence achieves the lowest accuracy. Other details are presented in Appendix B.
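As a minimal sketch of the reported quantity, the reduction in classification error relative to a baseline can be computed as below. This assumes the reduction is expressed as a percentage of the baseline error, which is our reading of the Figure 3 caption; the function name `relative_error_reduction` and the example accuracies are hypothetical.

```python
def relative_error_reduction(acc_baseline: float, acc_model: float) -> float:
    """Percent reduction in classification error relative to a baseline.

    Both accuracies are fractions in [0, 1]. A negative result means the
    model makes more errors than the baseline.
    Assumption: reduction = 100 * (err_baseline - err_model) / err_baseline.
    """
    err_baseline = 1.0 - acc_baseline
    err_model = 1.0 - acc_model
    if err_baseline <= 0.0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (err_baseline - err_model) / err_baseline


if __name__ == "__main__":
    # Hypothetical accuracies, for illustration only.
    print(relative_error_reduction(acc_baseline=0.60, acc_model=0.70))
```

Under this definition the baseline itself scores 0, which matches the bottom left cell of the top row in Figure 3, and a model that performs worse than the baseline gets a negative value.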
