Copyright © 2006 by the Association for Computing Machinery, Inc.

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from Permissions Dept, ACM Inc., fax +1 (212) 869-0481 or e-mail permissions@acm.org.

© 2006 ACM 0730-0301/06/0700- $5.00 0541

Appearance-Space Texture Synthesis

Sylvain Lefebvre Hugues Hoppe

Microsoft Research

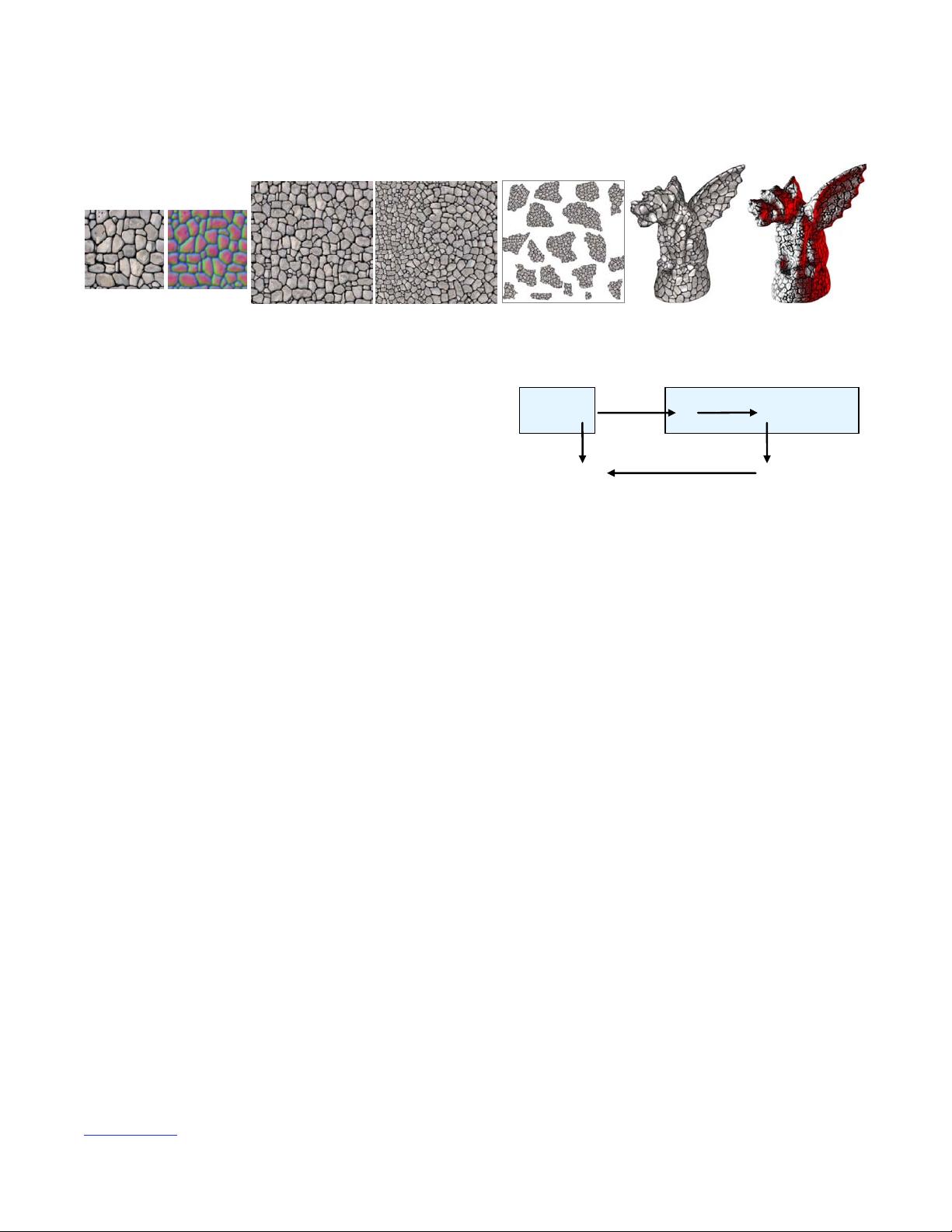

Figure 1: Transforming an exemplar into an 8D appearance space improves synthesis quality and enables new real-time functionalities. Panels, left to right: exemplar E; transformed exemplar Ẽ′; isometric synthesis; anisometric synthesis; synthesis in atlas domain; textured surface; radiance-transfer synthesis.

Abstract

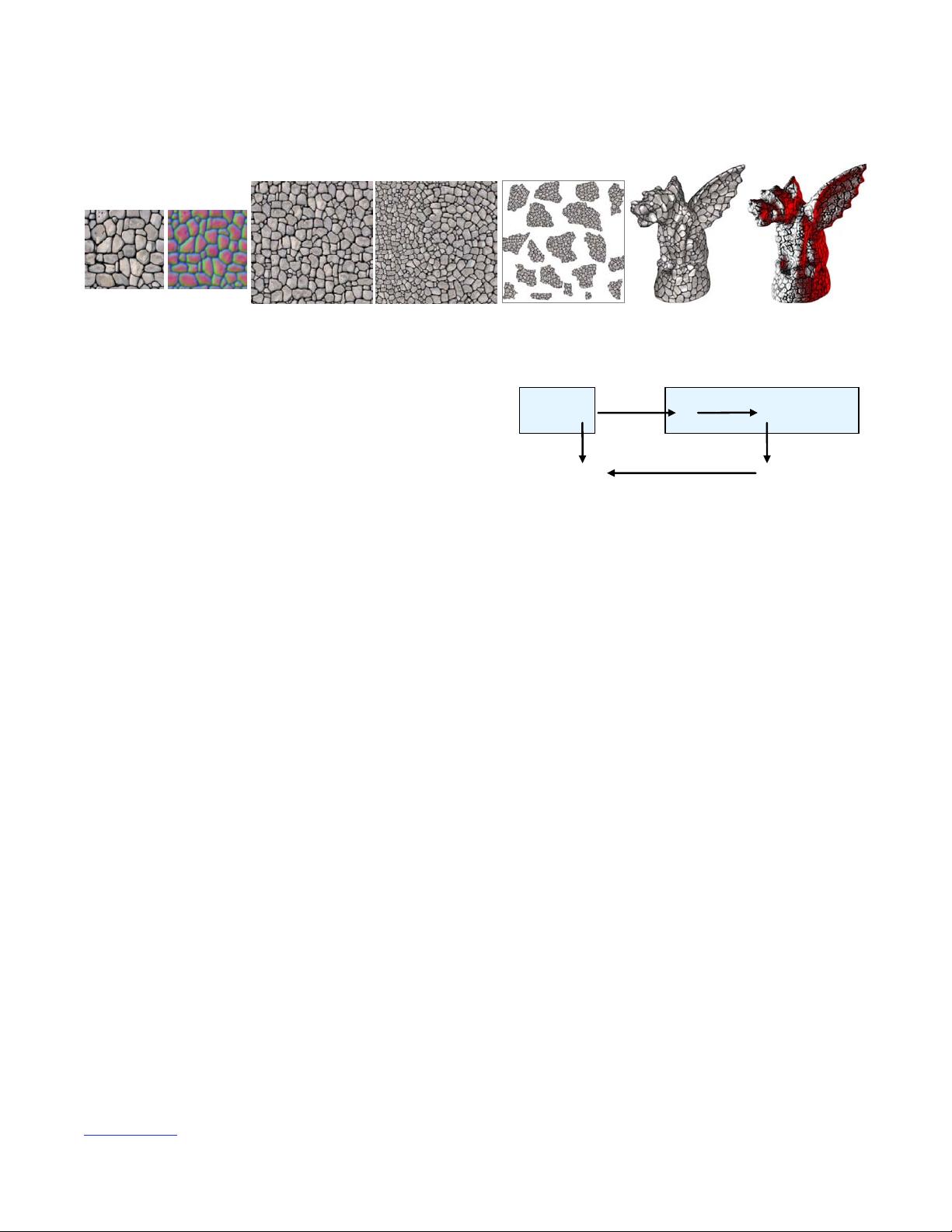

Figure 2: Overview of synthesis using exemplar transformation. Diagram labels: exemplar E (color space) → E′ (appearance space) → dimensionality reduction → transformed exemplar Ẽ′ → texture synthesis → synthesized coordinates S, from which E[S] gives the synthesized texture and Ẽ′[S] the synthesized appearance vectors.

The traditional approach in texture synthesis is to compare color neighborhoods with those of an exemplar. We show that quality is greatly improved if pointwise colors are replaced by appearance vectors that incorporate nonlocal information such as feature and radiance-transfer data. We perform dimensionality reduction on these vectors prior to synthesis, to create a new appearance-space exemplar. Unlike a texton space, our appearance space is low-dimensional and Euclidean. Synthesis in this information-rich space lets us reduce runtime neighborhood vectors from 5×5 grids to just 4 locations. Building on this unifying framework, we introduce novel techniques for coherent anisometric synthesis, surface texture synthesis directly in an ordinary atlas, and texture advection. Remarkably, we achieve all these functionalities in real-time, or 3 to 4 orders of magnitude faster than prior work.

Keywords: exemplar-based synthesis, surface textures, feature-based synthesis, anisometric synthesis, dimensionality reduction, RTT synthesis.

1. Introduction

We describe a new framework for exemplar-based texture synthesis (Figure 1). Our main idea is to transform an exemplar image E from the traditional space of pixel colors to a space of appearance vectors, and then perform synthesis in this transformed space (Figure 2). Specifically, we compute a high-dimensional appearance vector at each pixel to form an appearance-space image E′, and map E′ onto a low-dimensional transformed exemplar Ẽ′ using principal component analysis (PCA) or nonlinear dimensionality reduction. Using Ẽ′ as the exemplar, we synthesize an image S of exemplar coordinates. Finally, we return E[S], which accesses the original exemplar, rather than Ẽ′[S].
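
As a rough illustration of the pipeline just described, the following sketch builds appearance vectors, reduces them with PCA, and performs the final E[S] lookup. It rests on several assumptions that are not taken from the text: the appearance vector is simply the 5×5 color neighborhood of each pixel, borders wrap around, PCA is computed with a plain SVD, and the function names `appearance_vectors`, `transform_exemplar`, and `lookup` are hypothetical. The synthesis step that produces S is not shown.

```python
import numpy as np

def appearance_vectors(E, radius=2):
    """Stack the (2r+1)x(2r+1) color neighborhood of each pixel into one vector.

    E is an H x W x C exemplar. Wrap-around (toroidal) borders are an
    assumption of this sketch.
    """
    # np.roll(E, -dy, axis=0)[y] == E[(y + dy) % H], i.e. the neighbor at offset dy.
    shifts = [np.roll(E, (-dy, -dx), axis=(0, 1))
              for dy in range(-radius, radius + 1)
              for dx in range(-radius, radius + 1)]
    return np.concatenate(shifts, axis=2)  # H x W x (C * (2r+1)^2)

def transform_exemplar(E, dims=8, radius=2):
    """Project appearance vectors onto their top `dims` principal components."""
    Ep = appearance_vectors(E, radius)
    H, W, D = Ep.shape
    X = Ep.reshape(-1, D).astype(np.float64)  # astype makes a copy
    X -= X.mean(axis=0)                       # center the data before PCA
    # Rows of Vt are principal directions, ordered by decreasing variance.
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    return (X @ Vt[:dims].T).reshape(H, W, dims)

def lookup(E, S):
    """Return E[S], where S[..., 0] and S[..., 1] hold integer (row, col) coordinates."""
    return E[S[..., 0], S[..., 1]]
```

For a 16×16 RGB exemplar, `transform_exemplar(E)` would return a 16×16×8 array; a synthesizer would compare neighborhoods in that array, while the final image is read back from the original colors via `lookup(E, S)`.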

The idea of exemplar transformation is simple, but has broad implications. As we shall see, it improves synthesis quality and enables new functionalities while maintaining fast performance.

Several prior synthesis schemes use appearance vectors. Heeger and Bergen [1995], De Bonet [1997], and Portilla and Simoncelli [2000] evaluate steerable filters on image pyramids. Malik et al. [1999] use multiscale Gaussian derivative filters, and apply clustering to form discrete textons. Tong et al. [2002] and Magda and Kriegman [2003] synthesize texture by examining inter-texton distances. However, textons have two drawbacks: the clustering introduces discretization errors, and the distance metric requires costly access to a large inner-product matrix. In contrast, our approach defines an appearance space that is continuous, low-dimensional, and has a trivial Euclidean metric.
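
To make the contrast between the two metrics concrete, the two distance computations can be written side by side. The notation is introduced here for illustration only: $t_i$ denotes the appearance vector of texton $i$, $G$ a precomputed inner-product matrix over textons, $\tilde{E}'$ the reduced appearance-space exemplar, and $d$ its number of dimensions.

```latex
% Texton space: each distance needs look-ups into the matrix G, with G_{ij} = <t_i, t_j>
\| t_i - t_j \|^2 = G_{ii} - 2\,G_{ij} + G_{jj}

% Appearance space: the distance follows directly from the d stored coordinates
\| \tilde{E}'[p] - \tilde{E}'[q] \|^2
  = \sum_{k=1}^{d} \bigl( \tilde{E}'_k[p] - \tilde{E}'_k[q] \bigr)^2
```

The first identity is the standard expansion of a squared distance in terms of inner products; the second needs no auxiliary table at all.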

The appearance vector at an image pixel should capture the local structure of the texture, so that each pixel of the transformed exemplar Ẽ′ provides an information-rich encoding for effective synthesis (Section 3). We form the appearance vector using:

• Neighborhood information, to encode not just pointwise attributes but local spatial patterns including gradients.
• Feature information, to faithfully recover structural texture elements not captured by local L² error.
• Radiance transfer, to synthesize material with consistent mesoscale self-shadowing properties.

Because exemplar transformation is a preprocess, incorporating the neighborhood, feature, and radiance-transfer information has little cost. Moreover, the dimensionality reduction encodes all the information concisely using exemplar-adapted basis functions, rather than generic steerable filters.

In addition, we present the following contributions:

• We show that exemplar transformation permits parallel pixel-based synthesis using a runtime neighborhood vector of just 4 spatial points (Section 4), whereas prior schemes require at least 5×5 neighborhoods (and often larger for complex textures).
• We design a scheme for high-quality anisometric synthesis. The key idea is to maintain texture coherence by only accessing immediate pixel neighbors, and to transform their synthesized coordinates according to a desired Jacobian field (Section 5).
• We create surface texture by performing anisometric synthesis directly in the parametric domain of an ordinary texture atlas. Because our synthesis algorithm accesses only immediate pixel neighbors, we can jump across atlas charts using an indirection map to form seamless texture. Prior state-of-the-art schemes
