GIF2Video: Color Dequantization and Temporal Interpolation of GIF images

Yang Wang¹, Haibin Huang², Chuan Wang², Tong He³, Jue Wang², Minh Hoai¹

¹Stony Brook University, ²Megvii Research USA, ³UCLA

Abstract

Graphics Interchange Format (GIF) is a highly portable graphics format that is ubiquitous on the Internet. Despite their small sizes, GIF images often contain undesirable visual artifacts such as flat color regions, false contours, color shift, and dotted patterns. In this paper, we propose GIF2Video, the first learning-based method for enhancing the visual quality of GIFs in the wild. We focus on the challenging task of GIF restoration by recovering information lost in the three steps of GIF creation: frame sampling, color quantization, and color dithering. We first propose a novel CNN architecture for color dequantization. It is built upon a compositional architecture for multi-step color correction, with a comprehensive loss function designed to handle large quantization errors. We then adapt the SuperSlomo network for temporal interpolation of GIF frames. We introduce two large datasets, namely GIF-Faces and GIF-Moments, for both training and evaluation. Experimental results show that our method can significantly improve the visual quality of GIFs and that it outperforms a direct baseline and state-of-the-art approaches.

1. Introduction

GIFs [1] are everywhere, being created and consumed

by millions of Internet users everyday on the Internet. The

widespread of GIFs can be attributed to its high portability

and small file sizes. However, due to heavy quantization

in the creation process, GIFs often have much worse visual

quality than their original source videos. Creating an ani-

mated GIF from a video involves three major steps: frame

sampling, color quantization, and optional color dithering.

Frame sampling introduces jerky motion, while color quan-

tization and color dithering create flat color regions, false

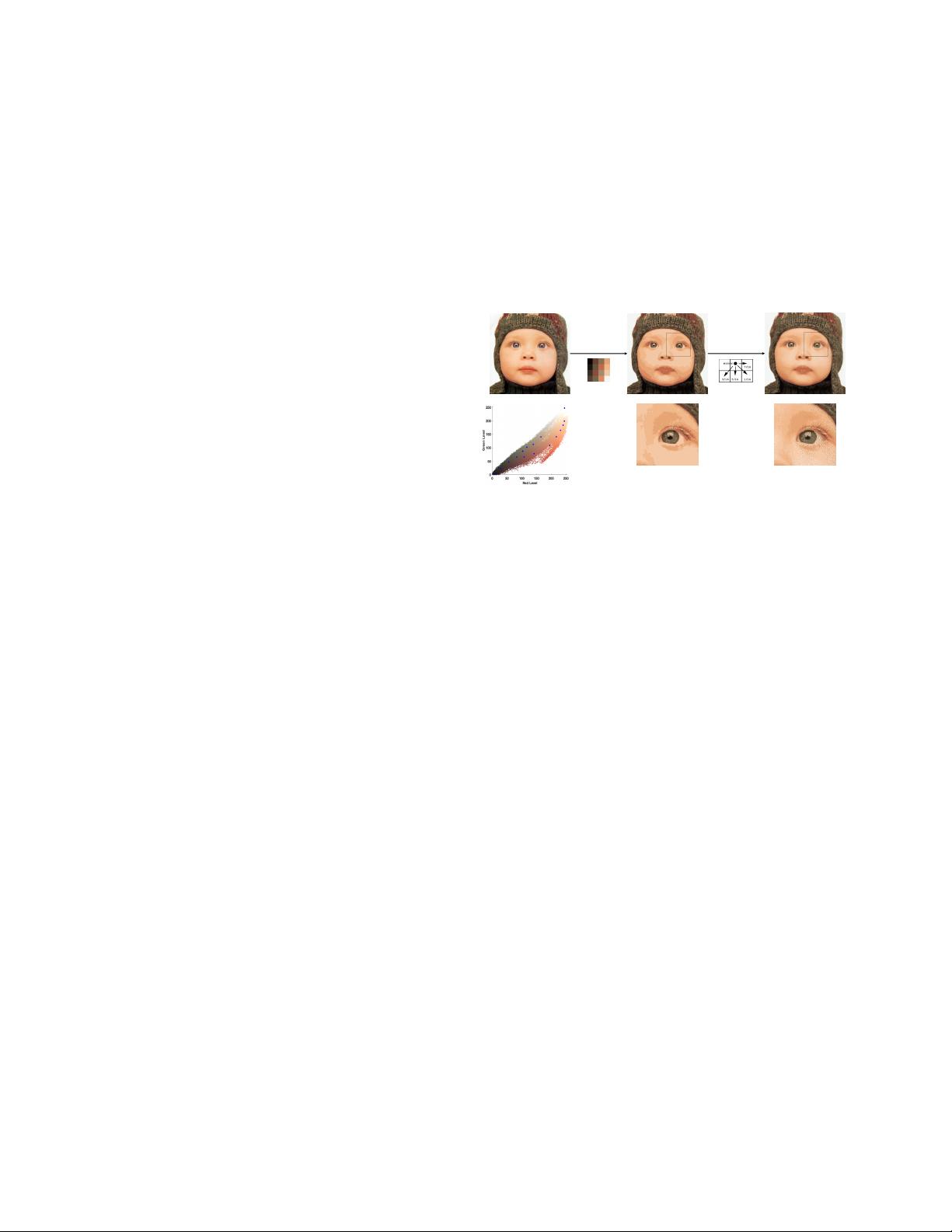

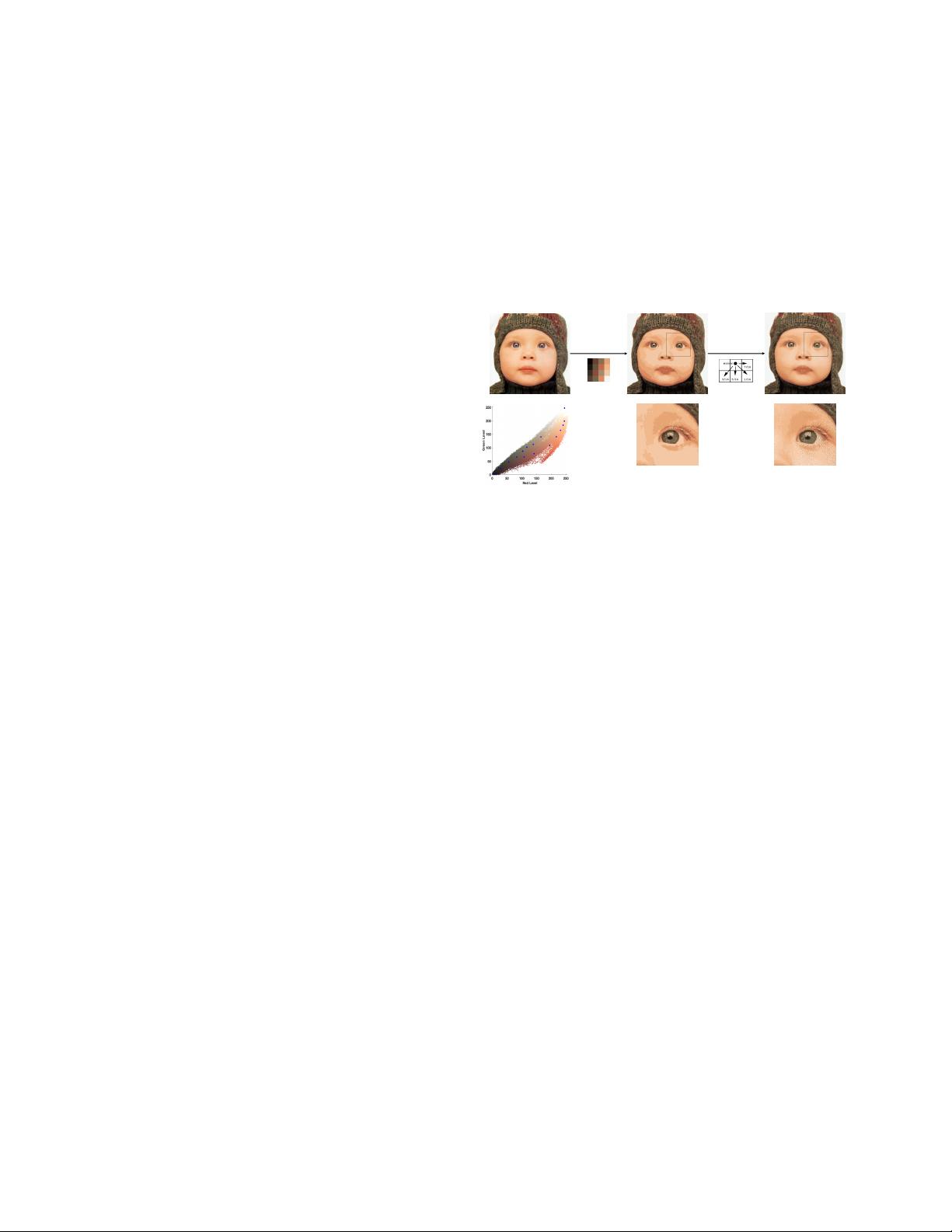

contours, color shift, and dotted pattern, as shown in Fig. 1.

In this paper, we propose GIF2Video, the first learning-

based method for enhancing the visual quality of GIFs. Our

algorithm consists of two components. First, it performs

color dequantization for each frame of the animated gif

sequence, removing the artifacts introduced by both color

quantization and color dithering. Second, it increases the

Color

Quantization

Color

Dithering

Artifacts:

1. False Contour

2. Flat Region

3. Color Shift

Artifacts:

4. Dotted Pattern

!"#"$ %&#'(('

)$$"$ *+,,-.+"/

Figure 1. Color quantization and color dithering. Two major

steps in the creation of a GIF image. These are lossy compression

processes that result in undesirable visual artifacts. Our approach

is able to remove these artifacts and produce a much more natural

image.

temporal resolution of the image sequence by using a mod-

ified SuperSlomo [19] network for temporal interpolation.
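Frame sampling (during GIF creation) and temporal interpolation (during restoration) are roughly inverse operations. The toy sketch below is a minimal illustration under stated assumptions, not the GIF2Video network: frames are flat lists of grayscale intensities, frames are dropped by keeping every k-th one, and the gaps are refilled with the visibility-weighted fusion step described in the Super SloMo paper [19], $\hat{I}_t = \big((1-t)V_{t\leftarrow 0}\, g_0 + t\,V_{t\leftarrow 1}\, g_1\big) / \big((1-t)V_{t\leftarrow 0} + t\,V_{t\leftarrow 1}\big)$, where $g_0, g_1$ are the two input frames warped to time $t$. Optical-flow warping is replaced by an identity warp and visibility maps are fixed to 1, so fusion reduces to linear blending. The function names `sample_frames`, `fuse`, and `interpolate` are hypothetical.

```python
from typing import List

Frame = List[float]  # toy frame: flat list of grayscale intensities


def sample_frames(frames: List[Frame], k: int) -> List[Frame]:
    """Frame sampling: keep every k-th frame, as when a video is turned into a GIF."""
    return frames[::k]


def fuse(warped0: Frame, warped1: Frame, vis0: Frame, vis1: Frame, t: float) -> Frame:
    """Visibility-weighted blend of two frames already warped to time t.

    I_t = ((1 - t) * V0 * g0 + t * V1 * g1) / ((1 - t) * V0 + t * V1)
    """
    out = []
    for g0, g1, v0, v1 in zip(warped0, warped1, vis0, vis1):
        z = (1 - t) * v0 + t * v1
        if z > 0:
            out.append(((1 - t) * v0 * g0 + t * v1 * g1) / z)
        else:
            # Hypothetical fallback when a pixel is invisible in both frames.
            out.append(0.5 * (g0 + g1))
    return out


def interpolate(frames: List[Frame], k: int) -> List[Frame]:
    """Insert k - 1 frames between each consecutive pair of frames.

    Assumption: identity warping (zero optical flow) and all-ones visibility,
    so fuse() reduces to linear blending. Super SloMo instead predicts the
    flows and visibility maps with CNNs.
    """
    result: List[Frame] = []
    for f0, f1 in zip(frames, frames[1:]):
        ones = [1.0] * len(f0)
        result.append(f0)
        for i in range(1, k):
            result.append(fuse(f0, f1, ones, ones, i / k))
    result.append(frames[-1])
    return result


# Usage: a one-pixel "video" whose brightness increases linearly over 9 frames.
video = [[float(i)] for i in range(9)]
gif_frames = sample_frames(video, 4)      # keeps frames 0, 4, 8
restored = interpolate(gif_frames, 4)     # linear motion is recovered exactly here
```

Setting a visibility value to 0 makes the fused pixel copy the other frame, which is how this kind of fusion avoids blending in occluded content. For real, nonlinear motion, linear blending would produce ghosting, which is why a learned flow-based interpolator is used instead.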

The main effort of this work is to develop a method for color dequantization, i.e., removing the visual artifacts introduced by heavy color quantization. Color quantization is a lossy compression process that remaps original pixel colors to a limited set of entries in a small color palette. This process introduces quantization artifacts similar to those observed when the bit depth of an image is reduced. For example, when the image bit depth is reduced from 48-bit to 24-bit, the size of the color palette shrinks from $2^{48} \approx 2.8 \times 10^{14}$ colors to $2^{24} \approx 1.7 \times 10^{7}$ colors, leading to only a small amount of artifacts. The color quantization process for GIF, however, is far more aggressive, with a typical palette of 256 distinct colors or fewer. Our task is to perform dequantization from a tiny color palette (e.g., 256 or 32 colors), which is much more challenging than traditional bit depth enhancement [15, 24, 36].
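To make the quantization step concrete, the sketch below maps each pixel of a toy grayscale image to its nearest palette entry and can optionally diffuse the quantization error with Floyd–Steinberg dithering. This is a minimal sketch under assumptions: the text does not say which dithering algorithm a given GIF encoder uses (Floyd–Steinberg is assumed only because it is a common error-diffusion scheme), real encoders work on RGB colors and usually choose the palette adaptively per image, whereas here the palette is passed in by the caller. The helper `palette_size` reproduces the bit-depth arithmetic in the paragraph above. All function names are hypothetical.

```python
from typing import List

Image = List[List[float]]  # toy grayscale image, values in [0, 255]


def palette_size(bits_per_pixel: int) -> int:
    """Number of distinct colors representable at a given total bit depth."""
    return 2 ** bits_per_pixel


def nearest(value: float, palette: List[float]) -> float:
    """Color quantization: remap a value to the closest palette entry."""
    return min(palette, key=lambda p: abs(p - value))


def quantize(img: Image, palette: List[float], dither: bool = False) -> Image:
    """Map every pixel to the palette, optionally with Floyd-Steinberg error diffusion."""
    h, w = len(img), len(img[0])
    buf = [row[:] for row in img]  # working copy that accumulates diffused error
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            old = buf[y][x]
            new = nearest(old, palette)
            out[y][x] = new
            if not dither:
                continue
            err = old - new
            # Floyd-Steinberg weights: 7/16 right, 3/16 down-left, 5/16 down, 1/16 down-right.
            for dy, dx, wgt in ((0, 1, 7 / 16), (1, -1, 3 / 16), (1, 0, 5 / 16), (1, 1, 1 / 16)):
                yy, xx = y + dy, x + dx
                if 0 <= yy < h and 0 <= xx < w:
                    buf[yy][xx] += err * wgt
    return out


# Usage: a flat mid-gray patch quantized to a 2-color palette.
patch = [[100.0] * 4 for _ in range(4)]
flat = quantize(patch, [0.0, 255.0])                 # every pixel becomes 0: a flat region
dotted = quantize(patch, [0.0, 255.0], dither=True)  # mix of 0 and 255: a dotted pattern
```

The two outputs mirror the artifacts in Fig. 1: plain nearest-color mapping collapses the patch into a flat region with a large color shift, while dithering preserves the average intensity better at the cost of a dotted pattern, which a dequantization model must then remove.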

Of course, recovering all original pixel colors from the quantized image is nearly impossible; thus, our goal is to render a plausible version of what the original image might look like. The idea is to collect training data and train a convolutional neural network [22, 32] to map a quantized image to its original version. It is, however, difficult to ob-


arXiv:1901.02840v1 [cs.CV] 9 Jan 2019