
Vision meets Robotics: The KITTI Dataset

Andreas Geiger, Philip Lenz, Christoph Stiller and Raquel Urtasun

Abstract—We present a novel dataset captured from a VW station wagon for use in mobile robotics and autonomous driving research. In total, we recorded 6 hours of traffic scenarios at 10-100 Hz using a variety of sensor modalities such as high-resolution color and grayscale stereo cameras, a Velodyne 3D laser scanner and a high-precision GPS/IMU inertial navigation system. The scenarios are diverse, capture real-world traffic situations, and range from freeways over rural areas to inner-city scenes with many static and dynamic objects. Our data is calibrated, synchronized and timestamped, and we provide the rectified and raw image sequences. Our dataset also contains object labels in the form of 3D tracklets, and we provide online benchmarks for stereo, optical flow, object detection and other tasks. This paper describes our recording platform, the data format and the utilities that we provide.

Index Terms—dataset, autonomous driving, mobile robotics, field robotics, computer vision, cameras, laser, GPS, benchmarks, stereo, optical flow, SLAM, object detection, tracking, KITTI

I. INTRODUCTION

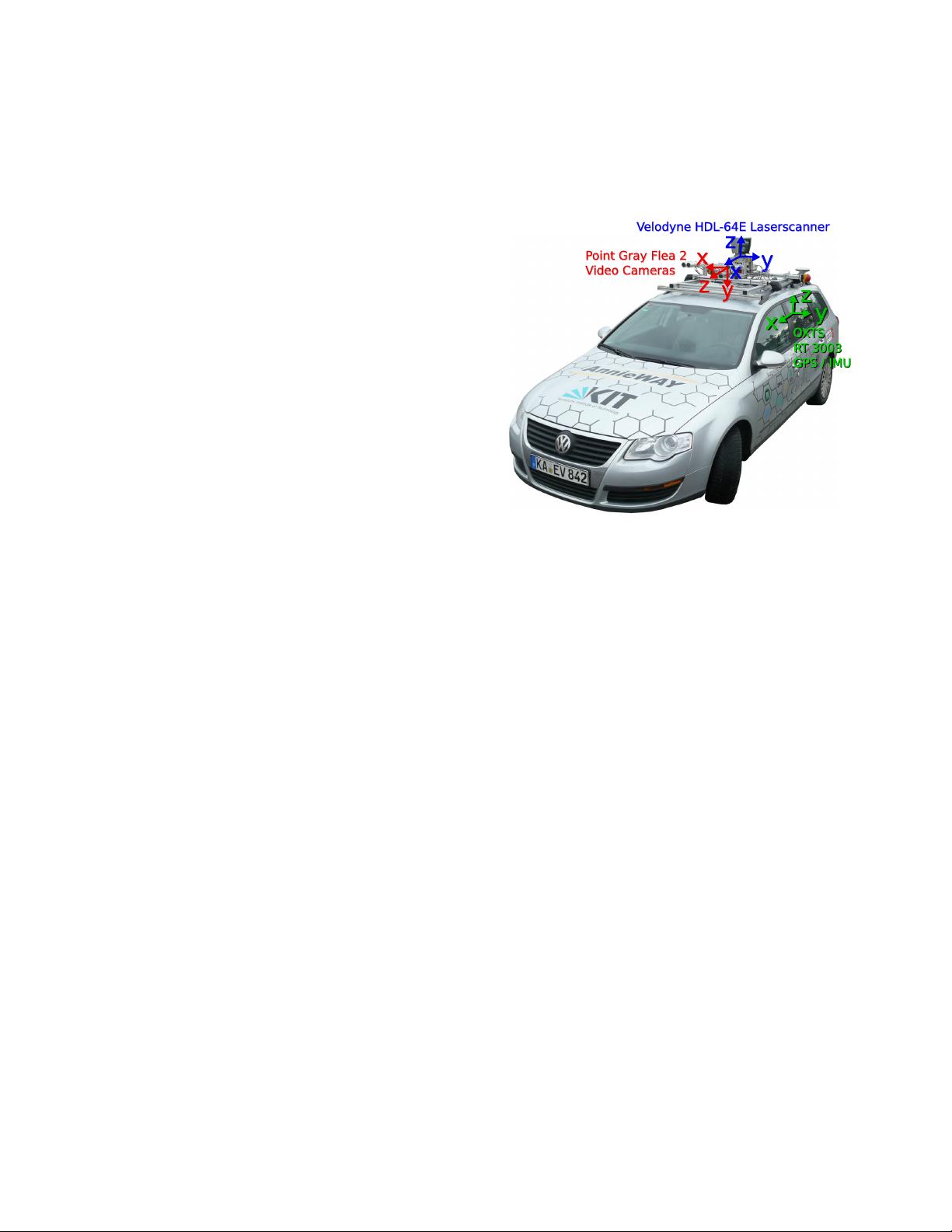

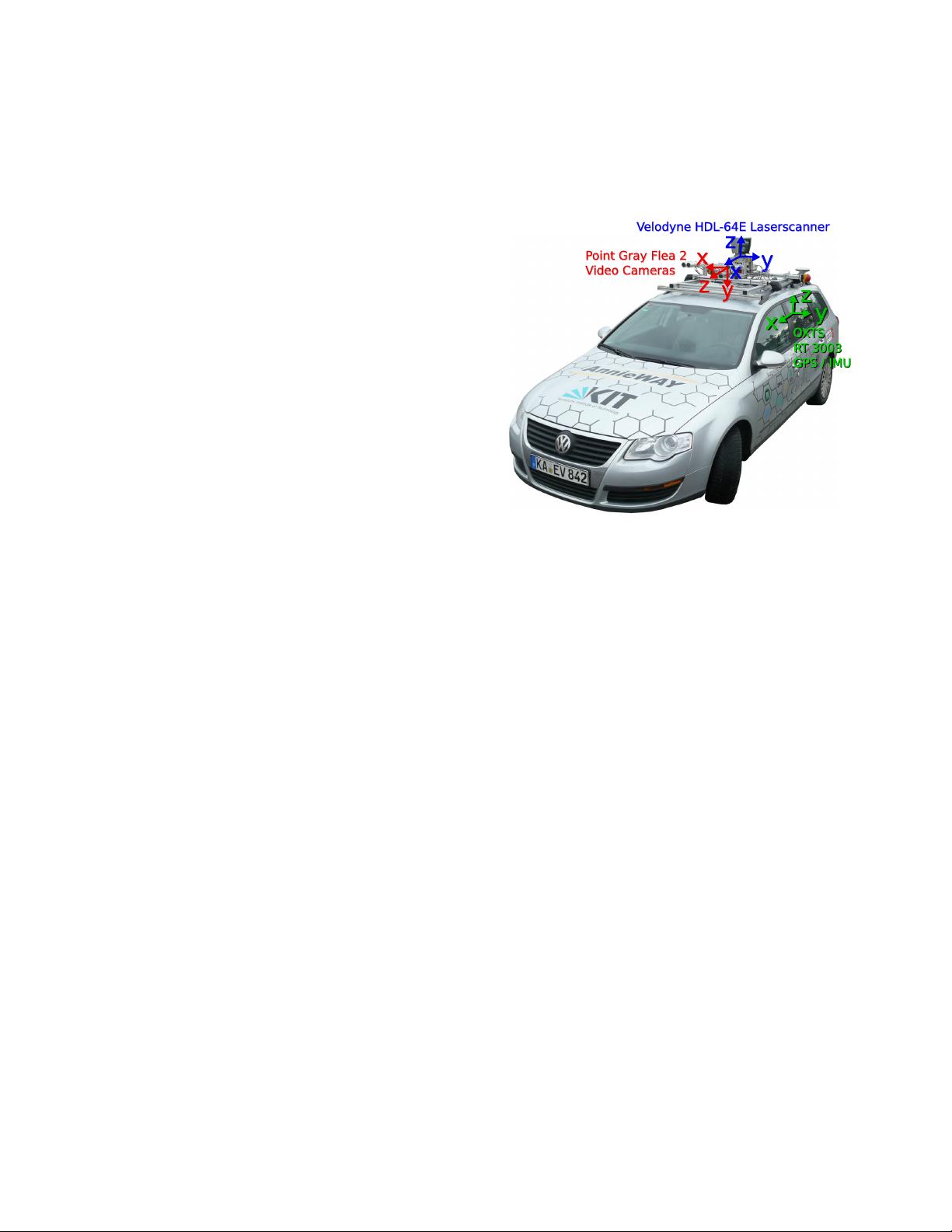

The KITTI dataset has been recorded from a moving platform (Fig. 1) while driving in and around Karlsruhe, Germany (Fig. 2). It includes camera images, laser scans, high-precision GPS measurements and IMU accelerations from a combined GPS/IMU system. The main purpose of this dataset is to push forward the development of computer vision and robotic algorithms targeted at autonomous driving [1]–[7]. While our introductory paper [8] mainly focuses on the benchmarks, their creation and their use for evaluating state-of-the-art computer vision methods, here we complement this information by providing technical details on the raw data itself. We give precise instructions on how to access the data and comment on sensor limitations and common pitfalls. The dataset can be downloaded from http://www.cvlibs.net/datasets/kitti. For a review of related work, we refer the reader to [8].

II. SENSOR SETUP

Our sensor setup is illustrated in Fig. 3:

• 2 × PointGray Flea2 grayscale cameras (FL2-14S3M-C), 1.4 Megapixels, 1/2” Sony ICX267 CCD, global shutter
• 2 × PointGray Flea2 color cameras (FL2-14S3C-C), 1.4 Megapixels, 1/2” Sony ICX267 CCD, global shutter
• 4 × Edmund Optics lenses, 4 mm, opening angle ∼ 90°, vertical opening angle of region of interest (ROI) ∼ 35°
• 1 × Velodyne HDL-64E rotating 3D laser scanner, 10 Hz, 64 beams, 0.09 degree angular resolution, 2 cm distance accuracy, collecting ∼ 1.3 million points/second, field of view: 360° horizontal, 26.8° vertical, range: 120 m

A. Geiger, P. Lenz and C. Stiller are with the Department of Measurement and Control Systems, Karlsruhe Institute of Technology, Germany. Email: {geiger,lenz,stiller}@kit.edu

R. Urtasun is with the Toyota Technological Institute at Chicago, USA. Email: rurtasun@ttic.edu

[Fig. 1 labels: Velodyne HDL-64E Laserscanner; Point Gray Flea 2 Video Cameras; OXTS RT 3003 GPS / IMU; x, y, z coordinate axes shown for each sensor]

Fig. 1. Recording Platform. Our VW Passat station wagon is equipped with four video cameras (two color and two grayscale cameras), a rotating 3D laser scanner and a combined GPS/IMU inertial navigation system.

• 1 × OXTS RT3003 inertial and GPS navigation system, 6 axis, 100 Hz, L1/L2 RTK, resolution: 0.02 m / 0.1°
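Because the laser scanner runs at 10 Hz while the GPS/IMU system reports at 100 Hz, a common first step when combining the two streams is to pair each scan with the navigation sample closest in time. The following is a minimal sketch of such a nearest-timestamp lookup. It is not the synchronization procedure used for the dataset: the function name `nearest_index` is hypothetical, and the timestamps are synthetic values chosen only to mimic the two sensor rates.

```python
from bisect import bisect_left


def nearest_index(sorted_times, t):
    """Return the index of the entry in sorted_times that is closest to t.

    sorted_times must be sorted in ascending order and must not be empty.
    """
    if not sorted_times:
        raise ValueError("sorted_times must not be empty")
    i = bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    # Pick whichever neighbour is closer; ties go to the earlier sample.
    before, after = sorted_times[i - 1], sorted_times[i]
    return i - 1 if (t - before) <= (after - t) else i


# Synthetic timestamps in seconds (illustrative only, not dataset values).
imu_times = [k / 100.0 for k in range(100)]          # 100 Hz GPS/IMU stream
scan_times = [k / 10.0 + 0.004 for k in range(10)]   # 10 Hz scans, 4 ms offset

# For every scan, the index of the closest GPS/IMU sample.
matches = [nearest_index(imu_times, t) for t in scan_times]
```

With a 100 Hz navigation stream, the closest sample is never more than 5 ms away from a scan timestamp, which is why a simple nearest-neighbour lookup is often sufficient before any interpolation is considered.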

Note that the color cameras have a lower effective resolution due to the Bayer pattern interpolation process and are less sensitive to light. This is the reason why we use two stereo camera rigs, one for grayscale and one for color. The baseline of both stereo camera rigs is approximately 54 cm. The trunk of our vehicle houses a PC with two six-core Intel XEON X5650 processors and a shock-absorbed RAID 5 hard disk storage with a capacity of 4 Terabytes. Our computer runs Ubuntu Linux (64 bit) and a real-time database [9] to store the incoming data streams.
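To illustrate what the roughly 54 cm stereo baseline means in practice, the sketch below applies the standard rectified-stereo relation Z = f · B / d (depth Z, focal length f in pixels, baseline B, disparity d). Only the baseline comes from the text above. The focal length of 720 pixels is an assumed, illustrative value, not a calibration result, and the function names are hypothetical.

```python
BASELINE_M = 0.54  # approximate stereo baseline stated in the text (54 cm)


def depth_from_disparity(disparity_px, focal_px, baseline_m=BASELINE_M):
    """Depth in metres of a point seen with the given disparity (rectified stereo)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, focal_px, disparity_error_px=1.0, baseline_m=BASELINE_M):
    """First-order depth uncertainty caused by a given disparity error."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


# ASSUMPTION: illustrative focal length in pixels, not taken from the paper.
FOCAL_PX = 720.0

z = depth_from_disparity(10.0, FOCAL_PX)   # depth for a 10-pixel disparity
dz = depth_error(z, FOCAL_PX)              # effect of a 1-pixel disparity error
```

Under these assumed values, a 10-pixel disparity corresponds to a depth of about 38.9 m, and a one-pixel disparity error at that range changes the depth estimate by roughly 3.9 m. The quadratic growth of the error with depth is the reason a wide baseline is useful for driving scenes.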

III. DATASET

The raw data described in this paper can be accessed from http://www.cvlibs.net/datasets/kitti and contains ∼ 25% of our overall recordings. The reason for this is that primarily data with 3D tracklet annotations has been put online, though we will make more data available upon request. Furthermore, we have removed all sequences which are part of our benchmark test sets. The raw data set is divided into the categories 'Road', 'City', 'Residential', 'Campus' and 'Person'. Example frames are illustrated in Fig. 5. For each sequence, we provide the raw data, object annotations in the form of 3D bounding box tracklets and a calibration file, as illustrated in Fig. 4. Our recordings have taken place on the 26th, 28th, 29th and 30th of September and on the 3rd of October 2011 during daytime. The total size of the provided data is 180 GB.
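The organization described above (five categories, five recording days, and three items per sequence) can be captured in a small record type when indexing downloaded sequences. The following is a hedged sketch only. The class `SequenceRecord`, its field names, and the placeholder paths are hypothetical and do not reflect the dataset's actual file layout; only the category names and recording dates are taken from the text.

```python
from dataclasses import dataclass
from datetime import date

# Categories and recording days as listed in the text.
CATEGORIES = ("Road", "City", "Residential", "Campus", "Person")
RECORDING_DATES = (
    date(2011, 9, 26),
    date(2011, 9, 28),
    date(2011, 9, 29),
    date(2011, 9, 30),
    date(2011, 10, 3),
)


@dataclass(frozen=True)
class SequenceRecord:
    """Hypothetical index entry for one raw sequence."""

    category: str
    recorded_on: date
    raw_data_dir: str       # placeholder location of the raw sensor data
    tracklets_file: str     # placeholder location of the 3D tracklet labels
    calibration_file: str   # placeholder location of the calibration file

    def __post_init__(self):
        # Reject entries that do not match the categories or dates in the text.
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category!r}")
        if self.recorded_on not in RECORDING_DATES:
            raise ValueError(f"no recordings on {self.recorded_on}")


# Placeholder paths, for illustration only.
example = SequenceRecord(
    category="City",
    recorded_on=date(2011, 9, 26),
    raw_data_dir="example/raw",
    tracklets_file="example/tracklets",
    calibration_file="example/calibration",
)
```

Validating the category and date at construction time catches mislabeled entries early, before they propagate into experiments that split the data by scene type or recording day.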