ClickHouse Documentation, Release

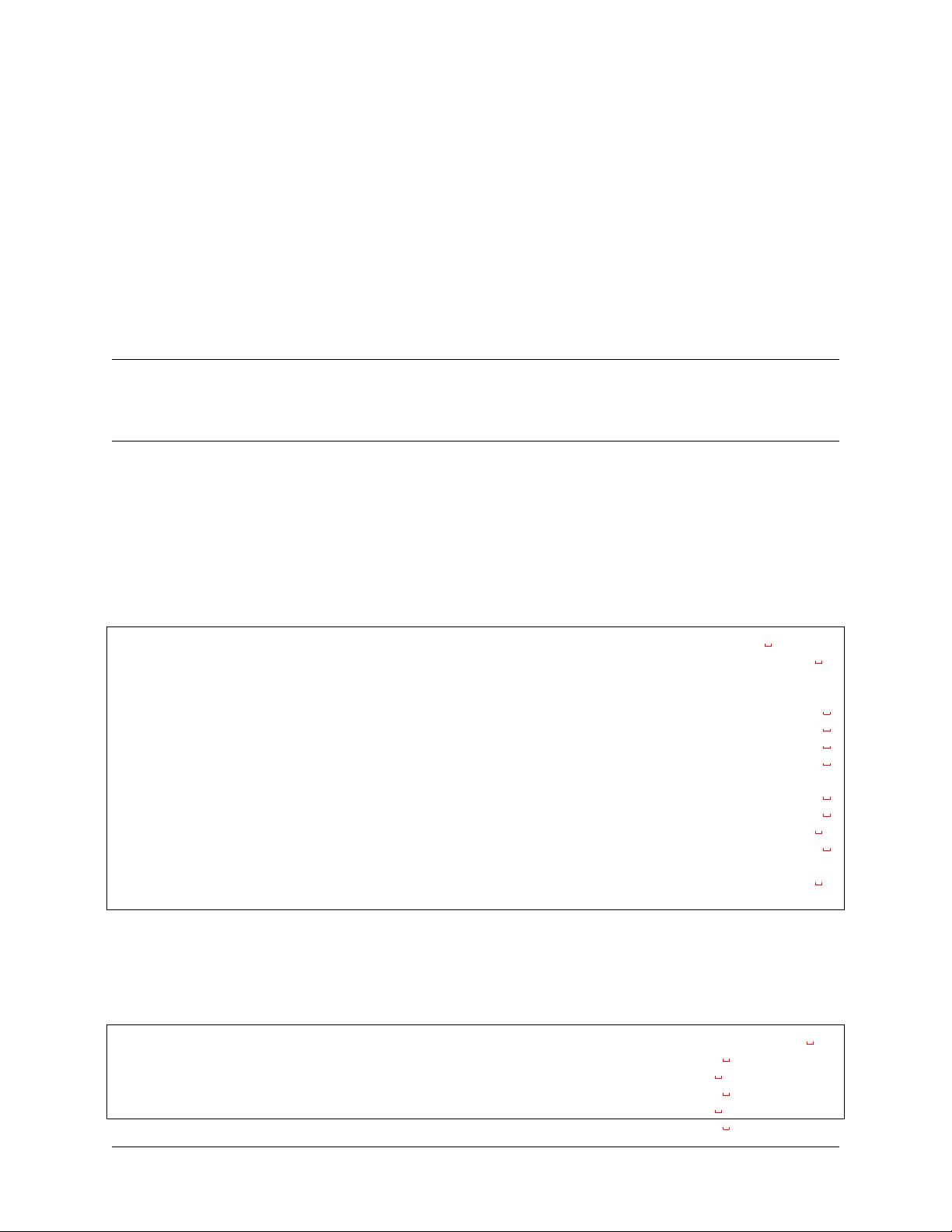

JavaEnable: 1 0 1 0 0 0 1 0 1 1 1 1 1 1 0 1 0 0 1 1

Title: Yandex Announcements - Investor Relations - Yandex Yandex -- Contact us -- Moscow Yandex -- Mission Ru Yandex -- History -- History of Yandex Yandex Financial Releases - Investor Relations - Yandex Yandex -- Locations Yandex Board of Directors - Corporate Governance - Yandex Yandex -- Technologies

GoodEvent: 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

EventTime: 2016-05-18 05:19:20 2016-05-18 08:10:20 2016-05-18 07:38:00 2016-05-18 01:13:08 2016-05-18 00:04:06 2016-05-18 04:21:30 2016-05-18 00:34:16 2016-05-18 07:35:49 2016-05-18 11:41:59 2016-05-18 01:13:32

These examples only show the order in which data is arranged. The values from different columns are stored separately, and data from the same column is stored together. Examples of column-oriented DBMSs: Vertica, Paraccel (Actian Matrix, Amazon Redshift), Sybase IQ, Exasol, Infobright, InfiniDB, MonetDB (VectorWise, Actian Vector), LucidDB, SAP HANA, Google Dremel, Google PowerDrill, Druid, and kdb+.
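The difference between the two layouts can be sketched in a few lines of Python. This is only an illustration, not how any DBMS stores data on disk: plain lists stand in for storage, the helper name `to_columns` is hypothetical, and the sample values are the first three entries of the JavaEnable, GoodEvent, and EventTime columns shown above.

```python
# Illustrative sketch only: Python lists stand in for on-disk storage.

# Row-oriented layout: all values of one row are kept together.
rows = [
    # (JavaEnable, GoodEvent, EventTime)
    (1, 1, "2016-05-18 05:19:20"),
    (0, 1, "2016-05-18 08:10:20"),
    (1, 1, "2016-05-18 07:38:00"),
]
column_names = ["JavaEnable", "GoodEvent", "EventTime"]


def to_columns(rows, names):
    """Transpose row tuples into one list per column (hypothetical helper)."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


# Column-oriented layout: all values of one column are kept together.
columns = to_columns(rows, column_names)

# Row layout: every row is visited in full, although one field is needed.
java_enabled_row_layout = sum(row[0] for row in rows)

# Column layout: only the JavaEnable list is touched.
java_enabled_column_layout = sum(columns["JavaEnable"])

print(columns["JavaEnable"])
print(java_enabled_row_layout, java_enabled_column_layout)
```

Both sums give the same answer; the point of the sketch is that the column layout never touches GoodEvent or EventTime to compute it.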

Different orders for storing data are better suited to different scenarios. The data access scenario refers to what queries are made, how often, and in what proportion; how much data is read for each type of query (rows, columns, and bytes); the relationship between reading and updating data; the working size of the data and how locally it is used; whether transactions are used, and how isolated they are; requirements for data replication and logical integrity; requirements for latency and throughput for each type of query; and so on.

The higher the load on the system, the more important it is to customize the system to the scenario, and the more specific this customization becomes. There is no system that is equally well-suited to significantly different scenarios. If a system is adaptable to a wide set of scenarios, then under high load it will either handle all of them equally poorly or work well for just one of them.

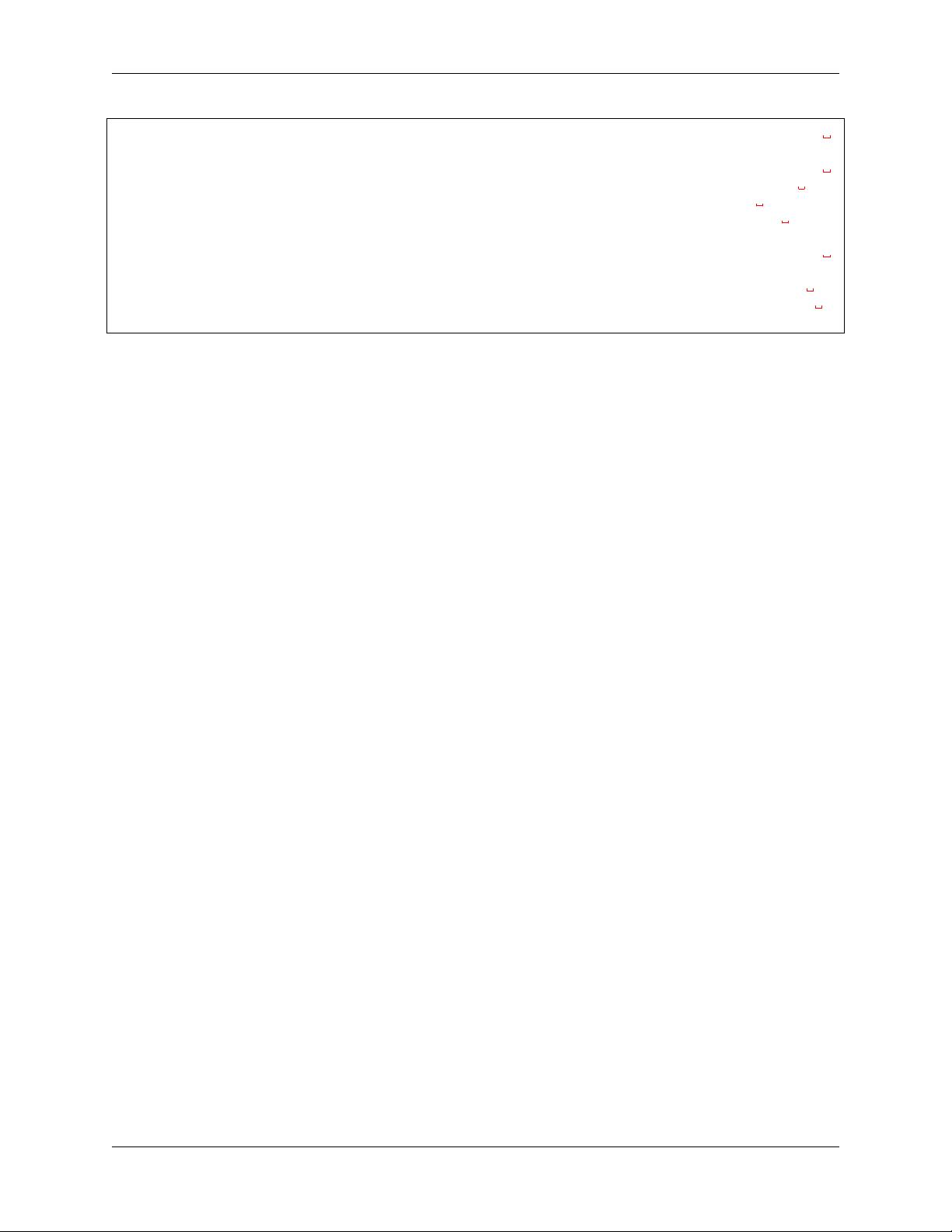

We’ll say that the following is true for the OLAP (online analytical processing) scenario:

• The vast majority of requests are for read access.

• Data is updated in fairly large batches (> 1000 rows), not by single rows; or it is not updated at all.

• Data is added to the DB but is not modified.

• For reads, quite a large number of rows are extracted from the DB, but only a small subset of columns.

• Tables are “wide,” meaning they contain a large number of columns.

• Queries are relatively rare (usually hundreds of queries per second per server, or fewer).

• For simple queries, latencies around 50 ms are allowed.

• Column values are fairly small: numbers and short strings (for example, 60 bytes per URL).

• Processing a single query requires high throughput (up to billions of rows per second per server).

• There are no transactions.

• Low requirements for data consistency.

• There is one large table per query. All tables are small, except for one.

• A query result is significantly smaller than the source data. That is, data is filtered or aggregated. The result fits in a single server’s RAM.
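To see why reading "a large number of rows, but only a small subset of columns" from a wide table favors column-oriented storage, a back-of-the-envelope estimate helps. The sketch below is an assumption-laden illustration: the function `bytes_read` and all workload numbers (1 billion rows, 100 columns, 8-byte values, 5 columns used by the query) are hypothetical, and it ignores compression, indexes, and block overhead.

```python
def bytes_read(num_rows, bytes_per_value, total_columns, columns_needed,
               column_oriented):
    """Rough estimate of bytes scanned by a full-table query.

    A row-oriented store is assumed to read whole rows, while a
    column-oriented store is assumed to read only the needed columns.
    """
    columns_scanned = columns_needed if column_oriented else total_columns
    return num_rows * columns_scanned * bytes_per_value


# Hypothetical workload, chosen only for illustration.
NUM_ROWS = 1_000_000_000
BYTES_PER_VALUE = 8
TOTAL_COLUMNS = 100
COLUMNS_NEEDED = 5

row_store = bytes_read(NUM_ROWS, BYTES_PER_VALUE, TOTAL_COLUMNS,
                       COLUMNS_NEEDED, column_oriented=False)
column_store = bytes_read(NUM_ROWS, BYTES_PER_VALUE, TOTAL_COLUMNS,
                          COLUMNS_NEEDED, column_oriented=True)
ratio = row_store / column_store

print(f"row-oriented:    {row_store / 1e9:.0f} GB scanned")
print(f"column-oriented: {column_store / 1e9:.0f} GB scanned")
print(f"ratio:           {ratio:.0f}x")
```

Under these assumptions the ratio is simply the total number of columns divided by the number of columns the query uses, so the advantage grows as tables get wider.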

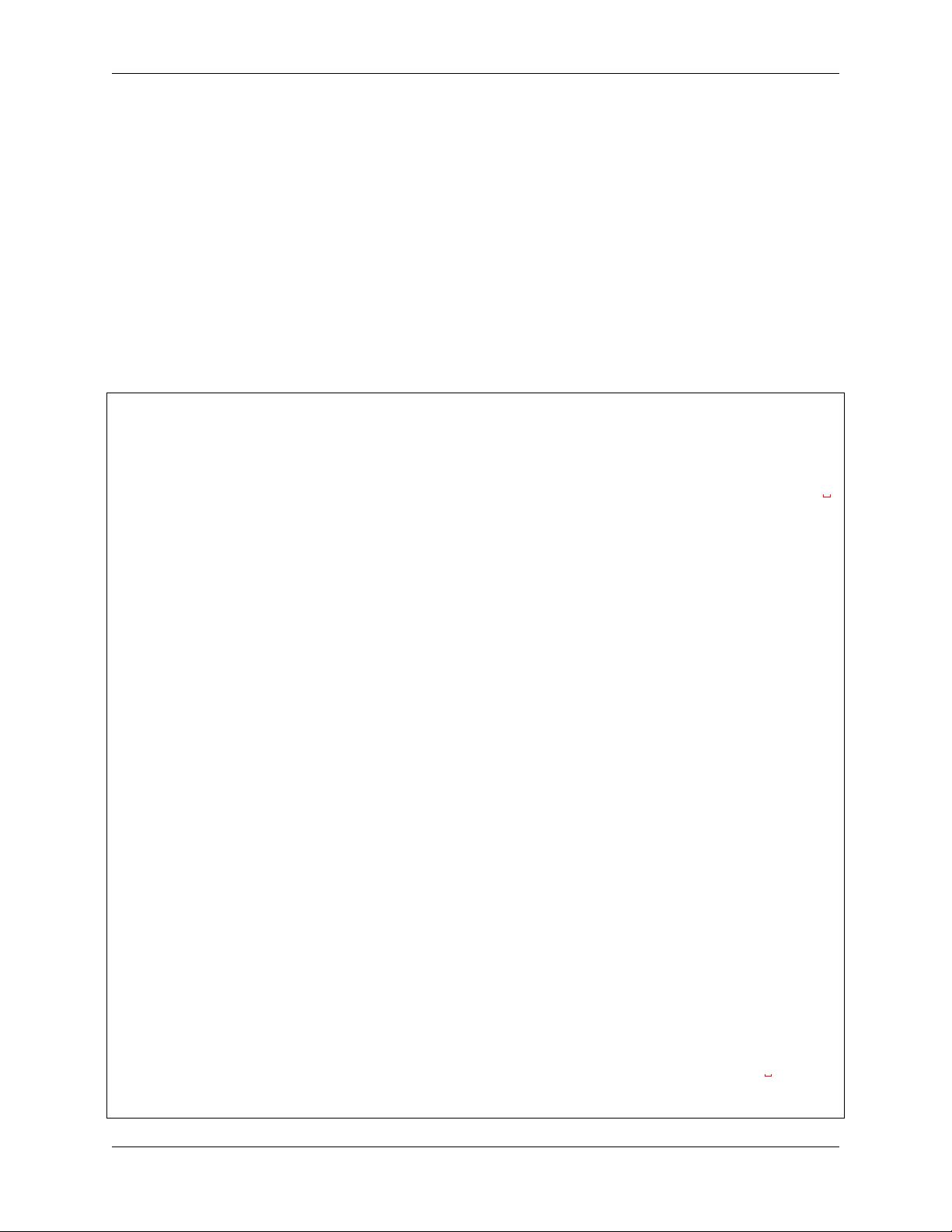

It is easy to see that the OLAP scenario is very different from other popular scenarios (such as OLTP or Key-Value access). So it doesn’t make sense to try to use an OLTP or Key-Value DB for processing analytical queries if you want to get decent performance. For example, if you try to use MongoDB or Elliptics for analytics, you will get very poor performance compared to OLAP databases.
