defines the DB organization and the other module defines the format of DB records plus the functions that access their contents.

Listing B-2 is the Erlang implementation of the dbtree module. It defines the in-memory DB organization as a general balanced tree of records, each identified by a unique sequence number.

Listing B-4 is the xact module, which defines the format of DB records, each representing an account transaction.
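The listings are not reproduced in this section. As a rough idea of what a dbtree-style module might look like, the sketch below builds on the standard `gb_trees` module (Erlang's general balanced trees). The module name `dbtree_sketch`, its function names and the `{NextSeq, Tree}` representation are assumptions for illustration, not the contents of Listing B-2; records are plain tuples standing in for the xact format.

```erlang
%% dbtree_sketch.erl: hypothetical sketch of a dbtree-style module,
%% not the code of Listing B-2.
-module(dbtree_sketch).
-export([new/0, add/2, update/3, delete/2, get/2, count/1, filter/2, to_list/1]).

%% The DB is {NextSeq, Tree}: NextSeq is the next unused sequence
%% number and Tree is a gb_trees tree mapping sequence numbers to records.
new() -> {1, gb_trees:empty()}.

%% Store Rec under a fresh sequence number; returns {Seq, NewDb}.
add(Rec, {Seq, Tree}) ->
    {Seq, {Seq + 1, gb_trees:insert(Seq, Rec, Tree)}}.

%% Replace the record stored under an existing sequence number
%% (gb_trees:update/3 raises an error if Seq is absent).
update(Seq, Rec, {Next, Tree}) ->
    {Next, gb_trees:update(Seq, Rec, Tree)}.

%% Remove a record; deleting an unknown sequence number is a no-op.
delete(Seq, {Next, Tree}) ->
    {Next, gb_trees:delete_any(Seq, Tree)}.

get(Seq, {_Next, Tree}) ->
    case gb_trees:lookup(Seq, Tree) of
        {value, Rec} -> {ok, Rec};
        none -> not_found
    end.

count({_Next, Tree}) -> gb_trees:size(Tree).

%% Build a new DB holding only the records for which Pred is true.
%% to_list/1 yields keys in ascending order, so the filtered list is
%% a valid orddict and the records keep their sequence numbers.
filter(Pred, {Next, Tree}) ->
    Kept = [{Seq, Rec} || {Seq, Rec} <- gb_trees:to_list(Tree), Pred(Rec)],
    {Next, gb_trees:from_orddict(Kept)}.

to_list({_Next, Tree}) -> gb_trees:to_list(Tree).
```

Because the filtered subset preserves the original sequence numbers, a later update or delete addressed by number applies equally to the main DB and to any subset that holds the record.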

Each of these modules is a compilation unit that exposes only its exported functions; together they form a dynamic process unit that exhibits the behaviour of the message protocol interface. The three modules are joined dynamically by a call to the start_link function, which is passed the module name of the DB organization and the module name of the DB record format. Either implementation may be replaced at runtime by naming a different module when start_link is called. Likewise, the framework can be modified without regard to the implementation or organization of the records. Mechanism is thus completely separate from data structure and data organization.
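A small illustration of mechanism kept separate from data organization, assuming the DB-organization module is passed by name as an atom: the standard `dict` and `orddict` modules both provide `new/0`, `store/3`, `find/2` and `size/1` with the same meaning, so identical calling code works with either. The module `swap_sketch` and its functions are hypothetical.

```erlang
%% swap_sketch.erl: hypothetical illustration. DbMod is any module
%% offering new/0, store/3, find/2 and size/1 with dict-style semantics.
-module(swap_sketch).
-export([load/2, lookup/3, count/2]).

%% Fold {Key, Rec} pairs into an empty DB of the named organization.
load(DbMod, Recs) ->
    lists:foldl(fun({Key, Rec}, Db) -> DbMod:store(Key, Rec, Db) end,
                DbMod:new(), Recs).

%% Returns {ok, Rec} or error, as dict:find/2 and orddict:find/2 do.
lookup(DbMod, Key, Db) -> DbMod:find(Key, Db).

count(DbMod, Db) -> DbMod:size(Db).
```

Switching from `dict` to `orddict` changes only the atom passed in; nothing in `swap_sketch` refers to either module by name.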

The process defined above is an example of strong encapsulation. The boundaries of the process space cannot be violated, even accidentally, by any other code, because the Erlang runtime system prevents it. To interact with this component of the design, an external process must send a message requesting one of the following: add, update or delete a record; get a single record; get the number of records; filter the records; export them; or stop the server process. All other messages are ignored.
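The following is a minimal sketch of such a server process, not the framework from the listings. It assumes a `start_link/2` that takes a DB-organization module and a record-format module, a `{From, Ref, Request}` message shape, and the request names shown; all of these are hypothetical. Any module with the `dict`/`orddict` interface can serve as the DB organization, and for self-containment the sketch module also exports two toy record-format callbacks, `key/1` and `export/1`, standing in for a module such as xact.

```erlang
%% db_sketch.erl: hypothetical DB manager joining two modules at runtime.
-module(db_sketch).
-export([start_link/2, call/2]).
%% Toy record-format callbacks, standing in for a module such as xact.
-export([key/1, export/1]).

%% DbMod must provide new/0, store/3, find/2, erase/2, is_key/2,
%% size/1, filter/2 and to_list/1 (dict and orddict both do).
start_link(DbMod, RecMod) ->
    {ok, spawn_link(fun() -> loop(DbMod, RecMod, DbMod:new()) end)}.

%% Synchronous request: messages are the only way to reach the data.
call(Pid, Request) ->
    Ref = make_ref(),
    Pid ! {self(), Ref, Request},
    receive {Ref, Reply} -> Reply after 5000 -> {error, timeout} end.

loop(DbMod, RecMod, Db) ->
    receive
        {From, Ref, {add, Rec}} ->
            From ! {Ref, ok},
            loop(DbMod, RecMod, DbMod:store(RecMod:key(Rec), Rec, Db));
        {From, Ref, {update, Rec}} ->
            Key = RecMod:key(Rec),
            case DbMod:is_key(Key, Db) of
                true ->
                    From ! {Ref, ok},
                    loop(DbMod, RecMod, DbMod:store(Key, Rec, Db));
                false ->
                    From ! {Ref, {error, not_found}},
                    loop(DbMod, RecMod, Db)
            end;
        {From, Ref, {delete, Key}} ->
            From ! {Ref, ok},
            loop(DbMod, RecMod, DbMod:erase(Key, Db));
        {From, Ref, {get, Key}} ->
            From ! {Ref, DbMod:find(Key, Db)},
            loop(DbMod, RecMod, Db);
        {From, Ref, count} ->
            From ! {Ref, DbMod:size(Db)},
            loop(DbMod, RecMod, Db);
        {From, Ref, {filter, Pred}} ->
            %% The subset clone runs this same loop on its own data.
            Subset = DbMod:filter(fun(_K, R) -> Pred(R) end, Db),
            Clone = spawn_link(fun() -> loop(DbMod, RecMod, Subset) end),
            From ! {Ref, {ok, Clone}},
            loop(DbMod, RecMod, Db);
        {From, Ref, export} ->
            From ! {Ref, [RecMod:export(R) || {_K, R} <- DbMod:to_list(Db)]},
            loop(DbMod, RecMod, Db);
        {From, Ref, stop} ->
            From ! {Ref, ok};
        _Other ->
            %% Any other message is discarded.
            loop(DbMod, RecMod, Db)
    end.

%% Toy record format: {Seq, Category, Amount}.
key({Seq, _Category, _Amount}) -> Seq.
export(Rec) -> iolist_to_binary(io_lib:format("~w", [Rec])).
```

The loop never inspects a record's layout itself and delegates every storage operation to `DbMod`, so either module can be replaced by naming a different one in `start_link/2`.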

The filter message is designed to create a clone of the core in-memory DB that contains a subset of the records. This is the technique for obtaining only the entertainment expense records or, given only the entertainment expenses, all entertainment transactions for a week-long vacation. The main DB has an application controller as its parent process, while a subset database has the DB process it was filtered from (the main DB or another subset) as its parent. A DB manager process accepts modification messages only from its parent process. This ensures that any message affecting the state of the DB can be propagated to the receiver's children, and from them to their children in turn, so that all dependent record subsets stay synchronized. For example, one person could be editing the DB while a second person requests a report. The report will be a live clone of a subset of the main DB and will reflect any modifications made to the DB after the report was started.
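The propagation rule can be sketched with processes that each hold a map of records. In this hypothetical `subset_sketch` module, a process accepts `modify` requests only from its parent's pid, applies them through its own predicate, and forwards the resulting change (a put, or a delete when the record no longer qualifies) to its children. Waiting for each child's acknowledgement before replying is a simplification chosen here so the whole chain is updated before the caller continues; the source does not say whether propagation is synchronous.

```erlang
%% subset_sketch.erl: hypothetical sketch of parent-to-child propagation.
-module(subset_sketch).
-export([start/1, modify/2, filter/2, records/1]).

%% Start a root DB holding Recs, a list of {Key, Rec}. The caller
%% becomes its parent, so only the caller may modify it.
start(Recs) ->
    Parent = self(),
    AcceptAll = fun(_Rec) -> true end,
    spawn_link(fun() -> loop(Parent, AcceptAll, maps:from_list(Recs), []) end).

%% Op is {put, Key, Rec} or {delete, Key}.
modify(Pid, Op) -> rpc(Pid, {modify, Op}).

%% Ask Pid for a live subset clone; returns the clone's pid.
filter(Pid, Pred) -> rpc(Pid, {filter, Pred}).

%% Records held by Pid, sorted by key.
records(Pid) -> rpc(Pid, records).

rpc(Pid, Request) ->
    Ref = make_ref(),
    Pid ! {self(), Ref, Request},
    receive {Ref, Reply} -> Reply after 5000 -> erlang:error(timeout) end.

loop(Parent, Pred, Recs, Children) ->
    receive
        %% This clause matches only modifications sent by Parent.
        {Parent, Ref, {modify, Op}} ->
            {LocalOp, Recs1} = apply_op(Op, Pred, Recs),
            %% Forward this process's view of the change down the tree
            %% and wait until each child has applied it.
            lists:foreach(fun(Child) -> ok = rpc(Child, {modify, LocalOp}) end,
                          Children),
            Parent ! {Ref, ok},
            loop(Parent, Pred, Recs1, Children);
        {From, Ref, {filter, SubPred}} ->
            Self = self(),
            Initial = maps:filter(fun(_Key, Rec) -> SubPred(Rec) end, Recs),
            Child = spawn_link(fun() -> loop(Self, SubPred, Initial, []) end),
            From ! {Ref, Child},
            loop(Parent, Pred, Recs, [Child | Children]);
        {From, Ref, records} ->
            From ! {Ref, lists:sort(maps:to_list(Recs))},
            loop(Parent, Pred, Recs, Children);
        _Ignored ->
            %% Includes modify requests from anyone but the parent.
            loop(Parent, Pred, Recs, Children)
    end.

%% A put whose record fails this process's predicate becomes a delete,
%% so each process holds (and forwards) only its own subset.
apply_op({put, Key, Rec}, Pred, Recs) ->
    case Pred(Rec) of
        true -> {{put, Key, Rec}, maps:put(Key, Rec, Recs)};
        false -> {{delete, Key}, maps:remove(Key, Recs)}
    end;
apply_op({delete, Key}, _Pred, Recs) ->
    {{delete, Key}, maps:remove(Key, Recs)}.
```

Because each process forwards its own filtered view rather than the raw request, a grandchild such as a vacation-week report never sees records that its entertainment-only parent has excluded.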

The design technique illustrated here is termed inductive decomposition. It applies when the feature set of a system design can be reduced to a series of identical processes operating on different subsets of the data. Because the report processes reuse code already needed by the core DB manager, the design can reduce both the amount of code and the number of modules implemented, thus increasing the probability of producing a reliable system.

The code subsumption illustrated here may yield further benefits: behavior abstraction, automated testing, dynamic data versioning and dynamic code revision, all while allowing parallelism across multiple CPUs. Because all the processes share the same interface, their behavior can be abstracted and adapted by an external management process. The user can control a single management process, which can direct messages to any of the dynamically created reports and alter their behavior using the same code and mechanism. If the management process were to read a set of test cases, apply them and verify the results, the abstracted interface could be used for test automation. The strong encapsulation of the process also allows two core DB managers to be co-resident, each with a different data version module, for example two versions of the xact record format.
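Because every DB process answers the same requests, a management process can replay one set of test cases against any number of them. The sketch below assumes the same hypothetical `{From, Ref, Request}` message shape; `start_db/0` is a deliberately tiny stand-in that supports only `add` and `count`, and `run_cases/2` is an illustrative name.

```erlang
%% mgmt_sketch.erl: hypothetical test driver over a shared interface.
-module(mgmt_sketch).
-export([start_db/0, run_cases/2]).

%% Minimal stand-in for a DB manager; it understands only the add
%% and count requests and ignores everything else.
start_db() -> spawn_link(fun() -> db_loop([]) end).

db_loop(Recs) ->
    receive
        {From, Ref, {add, Rec}} -> From ! {Ref, ok}, db_loop([Rec | Recs]);
        {From, Ref, count} -> From ! {Ref, length(Recs)}, db_loop(Recs);
        _Ignored -> db_loop(Recs)
    end.

call(Pid, Request) ->
    Ref = make_ref(),
    Pid ! {self(), Ref, Request},
    receive {Ref, Reply} -> Reply after 1000 -> timeout end.

%% For each process in turn, apply every {Request, Expected} case in
%% order and record pass or {fail, ActualReply}. The driver does not
%% care whether a pid is a core DB, a subset or another data version.
run_cases(Pids, Cases) ->
    [{Pid, Request, verdict(call(Pid, Request), Expected)}
     || Pid <- Pids, {Request, Expected} <- Cases].

verdict(Same, Same) -> pass;
verdict(Got, _Expected) -> {fail, Got}.
```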

Each report or record subset created retains the characteristics and data version of its parent process, while the behavior abstraction remains consistent, so any automated testing tools or user control process can interact with either version of the data simultaneously. In the same manner, the software version running in a single process can load a replacement version and continue without disrupting the system, provided the message interface does not change. In Ericsson's full implementation for a non-stop environment [1], this restriction is lifted by introducing system messages that notify processes prior to a code change.
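In plain Erlang, the mechanism behind replacing a running version is the fully qualified call: a call of the form `Module:Function(Args)` always goes to the newest loaded version of the module, whereas a local call stays in the version currently executing. The hypothetical counter process below applies this to a receive loop; the system-message protocol of Ericsson's full implementation is not shown.

```erlang
%% hot_sketch.erl: hypothetical counter illustrating hot code loading.
-module(hot_sketch).
-export([start/0, bump/1, loop/1]).

start() -> spawn(?MODULE, loop, [0]).

bump(Pid) ->
    Ref = make_ref(),
    Pid ! {self(), Ref, bump},
    receive {Ref, N} -> N after 1000 -> timeout end.

%% loop/1 must be exported. The fully qualified call ?MODULE:loop/1
%% jumps to the newest loaded version of this module, so a replacement
%% loaded with code:load_file/1 takes over at the next message while
%% State carries over unchanged. A local call loop(...) would keep
%% running the old version instead.
loop(State) ->
    receive
        {From, Ref, bump} ->
            New = State + 1,
            From ! {Ref, New},
            ?MODULE:loop(New);
        _Ignored ->
            ?MODULE:loop(State)
    end.
```

The runtime keeps at most two versions of a module, and a process still executing the old version is killed when that version is purged, which is why the loop re-enters itself through the qualified call on every message.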

3.2. Abstracted and Adapted Interfaces; Transformers

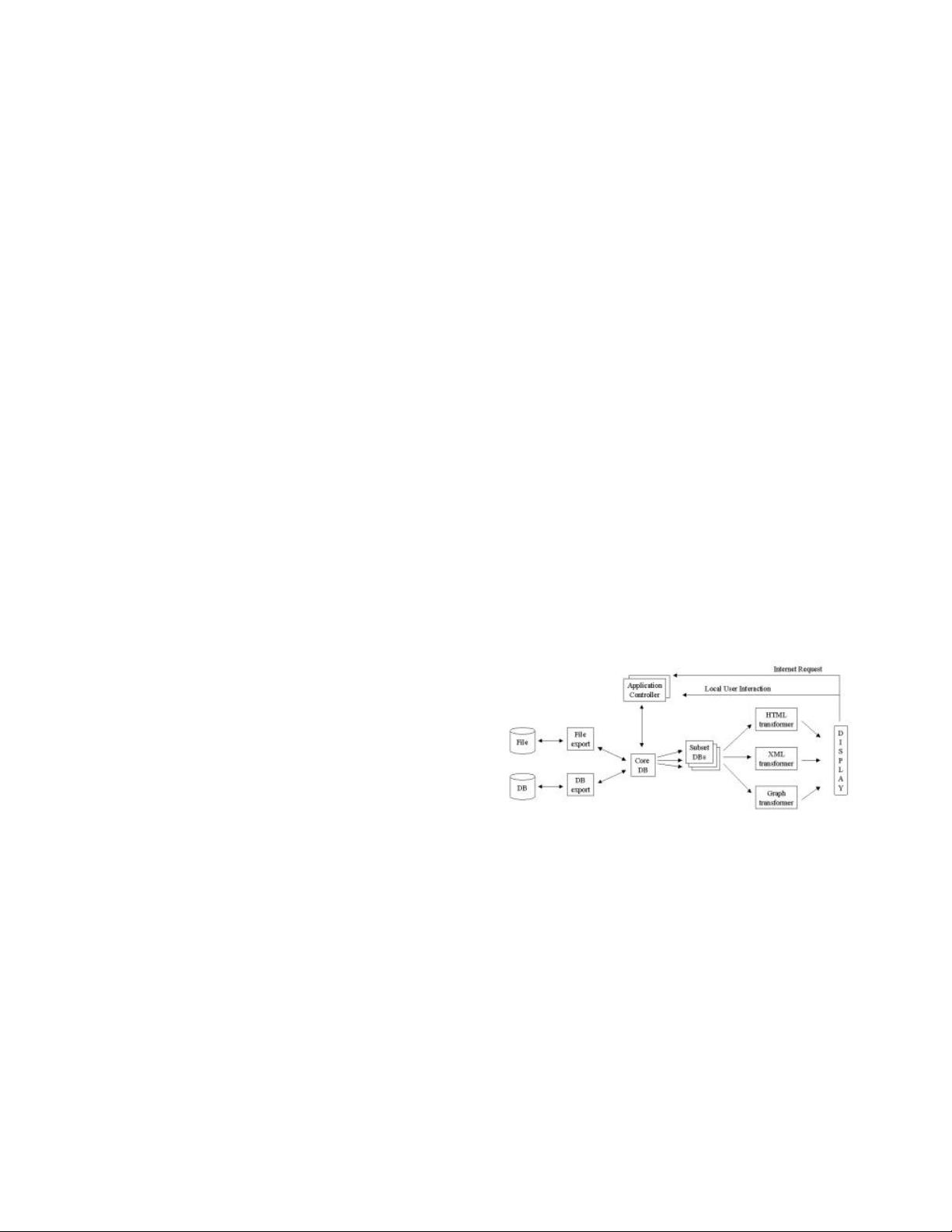

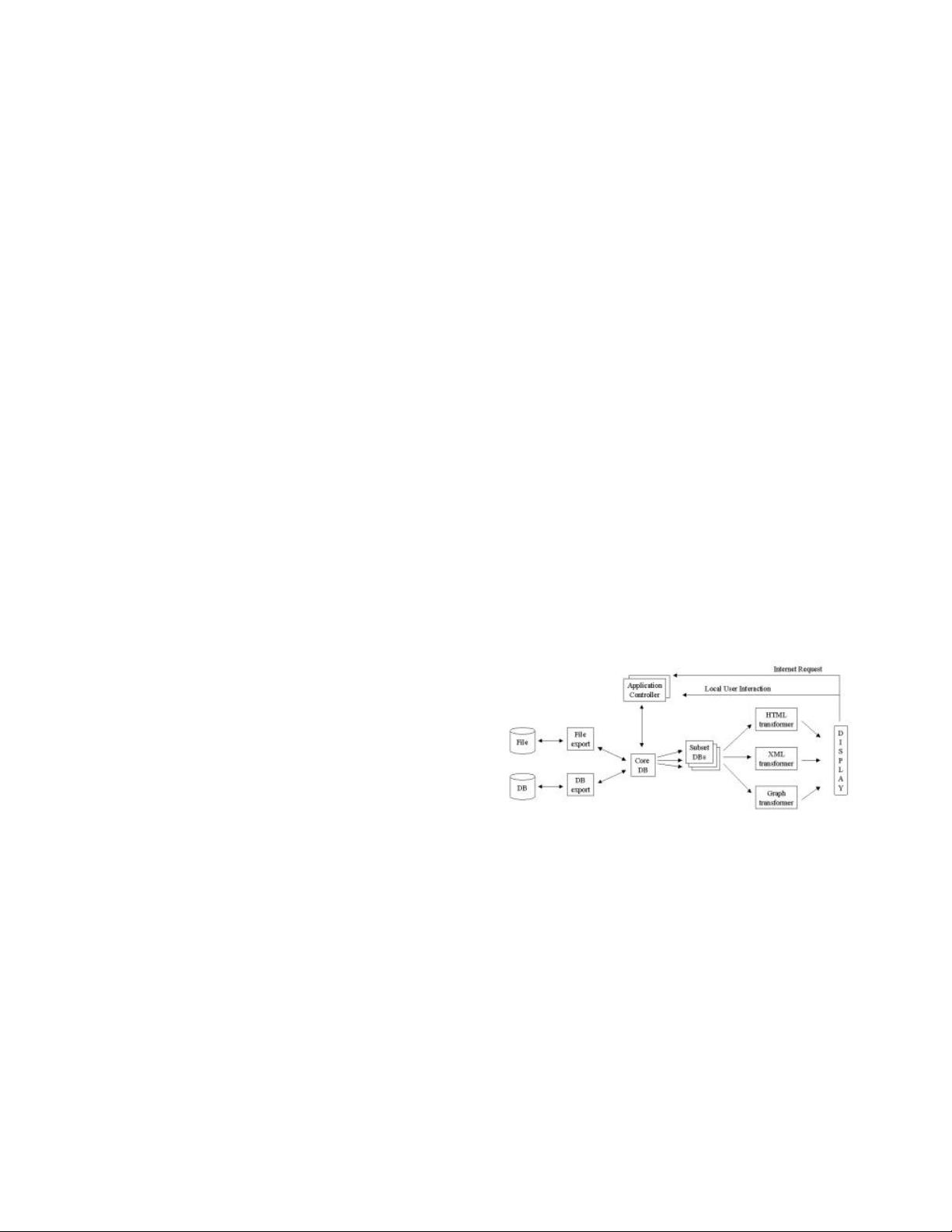

Figure 1 diagrams the full design with the inductively derived

core DB and subset DB processes shown in the middle. Consider

next the export function provided by the xact module. Instead of

generating a report, it formats a record as a binary. The DB

servers export records as a list of binaries, sending the list to the

calling process. Because strong encapsulation requires the

transmission of data from one process to another, each is free to

store it in the format most advantageous for the process’ own

purposes. The OOPL approach is to add interface methods to the

xact object to export in the proper format, or adaptors to transform

the internal data structure to the desired output format. The COPL

approach introduces a transformer process which can be used as

an abstracted interface to avoid unnecessary code growth in the

xact module. It also adds runtime flexibility in the conversion of

export data to target formats.

Figure 1. Personal Accounting Application design diagram
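A transformer can be sketched as a process that receives the list of binaries produced by an export and renders it in a target format. The `text` and `html` formats, the message shapes and the module name `transformer_sketch` are assumptions; switching formats by message illustrates the runtime flexibility described above without touching the record-format module.

```erlang
%% transformer_sketch.erl: hypothetical export transformer process.
-module(transformer_sketch).
-export([start/1, transform/2]).

%% Start a transformer that renders exported records in Format.
start(Format) -> spawn_link(fun() -> loop(Format) end).

%% Send a list of exported binaries; receive the rendered output.
transform(Pid, Bins) ->
    Ref = make_ref(),
    Pid ! {self(), Ref, {export, Bins}},
    receive {Ref, Out} -> Out after 1000 -> timeout end.

loop(Format) ->
    receive
        {From, Ref, {export, Bins}} ->
            From ! {Ref, render(Format, Bins)},
            loop(Format);
        {set_format, NewFormat} ->
            %% The target format can be changed while the process runs.
            loop(NewFormat);
        _Ignored ->
            loop(Format)
    end.

%% One record per line.
render(text, Bins) ->
    iolist_to_binary([[B, $\n] || B <- Bins]);
%% An HTML list; this sketch does no HTML escaping.
render(html, Bins) ->
    iolist_to_binary(["<ul>", [["<li>", B, "</li>"] || B <- Bins], "</ul>"]).
```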

One export transformer per DB process can maintain a current reflection of that core DB or subset DB process; alternatively, several can exist per subset DB if snapshot views from before recent DB modifications must be preserved. In either case, the exported data can be adapted to produce an HTML report, raw ASCII text or even a graph by introducing transformer processes that generate the desired output and pass it to the requesting process. A single export process can capture the exported data records and deliver them to any number of requesting processes, replacing or extracting bytes much as UNIX character-stream tools such as awk and sed do [7].
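The fan-out export process can be sketched in the same style. Each subscriber registers its own edit function, and the process applies it to every exported binary before delivery; the test uses `binary:replace/4` as a sed-like substitution and `binary:split/2` as an awk-like field extraction. The module and message names are hypothetical.

```erlang
%% fanout_sketch.erl: hypothetical export process delivering edited
%% copies of each export to every subscriber.
-module(fanout_sketch).
-export([start/0, subscribe/2, publish/2]).

start() -> spawn_link(fun() -> loop([]) end).

%% Register the calling process with its own edit function, which
%% maps one exported binary to the binary that subscriber wants.
subscribe(Pid, Edit) ->
    Ref = make_ref(),
    Pid ! {subscribe, self(), Ref, Edit},
    receive {Ref, ok} -> ok after 1000 -> timeout end.

%% Hand a list of exported binaries to the fan-out process.
publish(Pid, Bins) ->
    Pid ! {records, Bins},
    ok.

loop(Subs) ->
    receive
        {subscribe, From, Ref, Edit} ->
            From ! {Ref, ok},
            loop([{From, Edit} | Subs]);
        {records, Bins} ->
            %% Each subscriber gets its own edited copy of the export.
            lists:foreach(fun({To, Edit}) ->
                                  To ! {export, [Edit(B) || B <- Bins]}
                          end, Subs),
            loop(Subs);
        _Ignored ->
            loop(Subs)
    end.
```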

The problem description calls for both a file for storage of