Fast and Accurate Recurrent Neural Network Acoustic Models for Speech Recognition

Haşim Sak, Andrew Senior, Kanishka Rao, Françoise Beaufays

Google

{hasim,andrewsenior,kanishkarao,fsb}@google.com

Abstract

We have recently shown that deep Long Short-Term Memory (LSTM) recurrent neural networks (RNNs) outperform feed-forward deep neural networks (DNNs) as acoustic models for speech recognition. More recently, we have shown that the performance of sequence-trained context dependent (CD) hidden Markov model (HMM) acoustic models using such LSTM RNNs can be equaled by sequence-trained phone models initialized with connectionist temporal classification (CTC). In this paper, we present techniques that further improve the performance of LSTM RNN acoustic models for large vocabulary speech recognition. We show that frame stacking and a reduced frame rate lead to more accurate models and faster decoding. CD phone modeling leads to further improvements. We also present initial results for LSTM RNN models that output words directly.

Index Terms: speech recognition, acoustic modeling, connectionist temporal classification, CTC, long short-term memory recurrent neural networks, LSTM RNN.

1. Introduction

While speech recognition systems using recurrent and feed-forward neural networks have been around for more than two decades [1, 2], it is only recently that they have displaced Gaussian mixture models (GMMs) as the state-of-the-art acoustic model. More recently, it has been shown that recurrent neural networks can outperform feed-forward networks on large-scale speech recognition tasks [3, 4].

Conventional speech systems use cross-entropy training with HMM CD state targets, followed by sequence training. CTC models instead use a “blank” symbol between phonetic labels and offer an alternative to conventional cross-entropy training. We recently showed that RNNs for large vocabulary continuous speech recognition (LVCSR) trained with CTC can be improved with the state-level minimum Bayes risk (sMBR) sequence training criterion, approaching the state of the art [5]. In this paper we further investigate the use of sMBR-trained CTC models as acoustic models for speech recognition and show that, with appropriate features and the introduction of context dependent phone models, they outperform the conventional LSTM RNN models by 8% relative in recognition accuracy.

The next section describes LSTM RNNs and summarizes the CTC method and sequence training. We then describe acoustic frame stacking as well as context dependent phone and whole-word modeling. The following section describes our experiments and presents results, which are summarized in the conclusions.
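To make the role of the blank concrete: CTC relates a frame-level output path to a label sequence by first merging consecutive repeated symbols and then deleting blanks, so most frames can emit the blank while each label appears only where the network outputs it. The sketch below is a minimal Python illustration of that collapsing rule only, not of CTC training; the blank token name `"<b>"` and the function name `ctc_collapse` are hypothetical.

```python
BLANK = "<b>"  # hypothetical name for the CTC blank symbol


def ctc_collapse(path, blank=BLANK):
    """Map a frame-level CTC path to its label sequence.

    Consecutive repeats are merged first, then blanks are removed, so a
    blank between two identical labels keeps them as separate labels.
    """
    labels = []
    prev = None
    for sym in path:
        # Emit a symbol only when it differs from the previous frame and is not blank.
        if sym != prev and sym != blank:
            labels.append(sym)
        prev = sym
    return labels
```

For example, the path `<b> k k <b> ae <b> t t` collapses to `k ae t`, while `a <b> a` yields two separate `a` labels and `a a` yields only one.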

2. RNN Acoustic Modeling Techniques

In this work we focus on the LSTM RNN architecture, which has shown good performance in our previous research, outperforming deep neural networks.

input

Forward Forward Forward Forward Forward

output

640 500 500 500 500 500 42 or 9248

input

Forward

Backward

Forward

Backward

Forward

Backward

Forward

Backward

Forward

Backward

output

240

300

300

300

300

300

300

300

300

300

300

42 or 9248

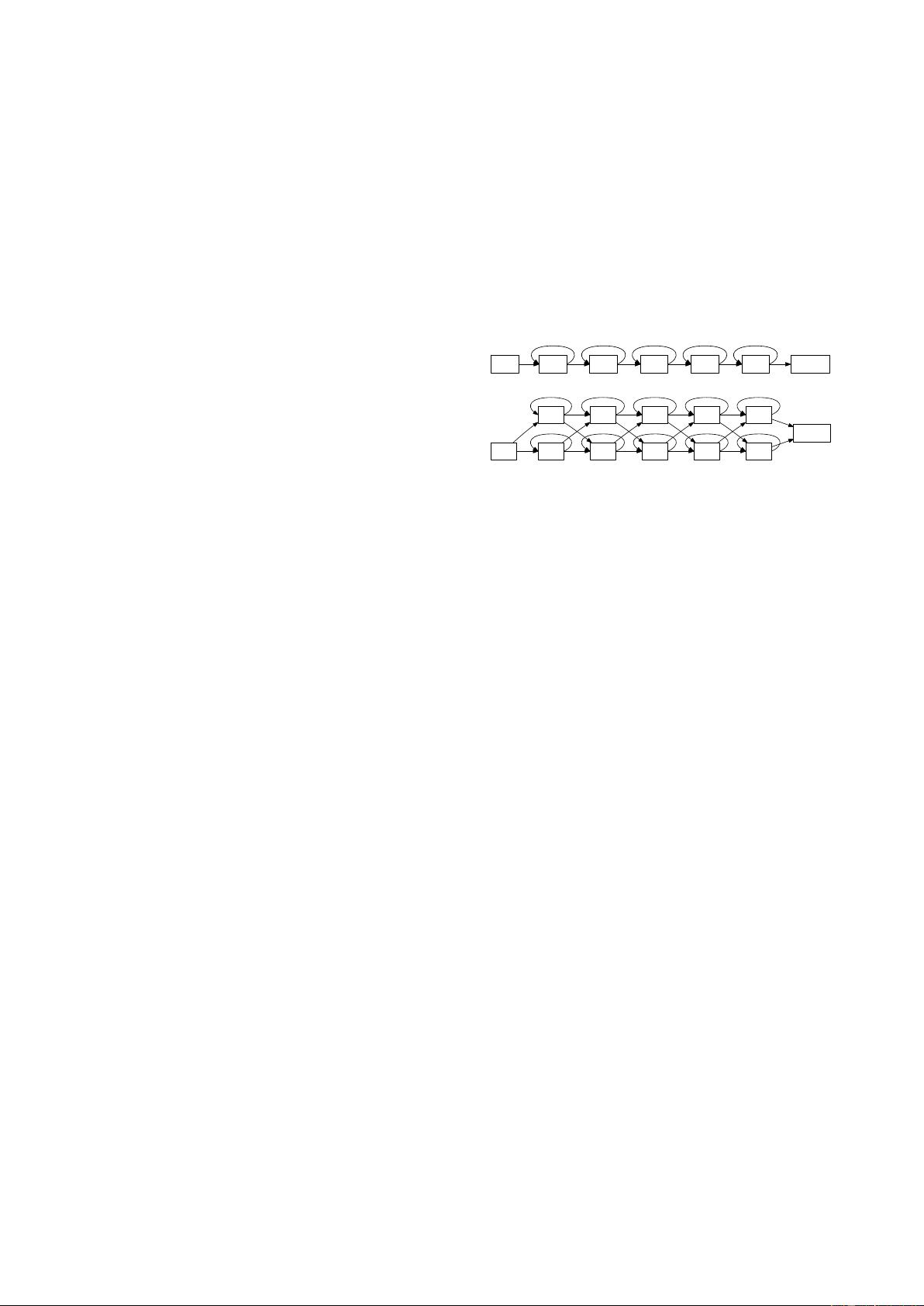

Figure 1: Layer connections in unidirectional (top) and bidirec-

tional (bottom) 5-layer LSTM RNNs.
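The layer widths in Figure 1 also show how the bidirectional wiring changes layer input sizes: because each forward and backward layer is fed by both layers at the previous depth, every bidirectional layer above the first sees 2 × 300 = 600 inputs. The sketch below counts parameters for both configurations under an assumption the text does not confirm: each layer is a plain LSTM (four gates, one bias per gate, no peephole or projection connections), followed by a softmax output layer with `n_output` units (42 or 9248 in the figure). The function names are hypothetical.

```python
def lstm_params(n_in, n_cell):
    """Weights + biases of a plain LSTM layer (assumed: 4 gates, no peepholes/projection)."""
    # Each gate has an (n_in + n_cell) x n_cell weight matrix plus n_cell biases.
    return 4 * n_cell * (n_in + n_cell + 1)


def unidirectional_params(n_input, widths, n_output):
    total, n_in = 0, n_input
    for n_cell in widths:
        total += lstm_params(n_in, n_cell)
        n_in = n_cell  # the next layer sees this layer's outputs
    return total + n_in * n_output + n_output  # softmax weights + biases


def bidirectional_params(n_input, widths, n_output):
    total, n_in = 0, n_input
    for n_cell in widths:
        # One forward and one backward layer, both fed by the previous depth.
        total += 2 * lstm_params(n_in, n_cell)
        n_in = 2 * n_cell  # next depth sees forward and backward outputs
    return total + n_in * n_output + n_output  # output layer sees both final layers
```

Under these assumptions, with the 42-unit output both networks come to roughly 10 million parameters (about 10.31M unidirectional and 9.97M bidirectional); the counts would change if the actual layers use peepholes or projections.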

RNNs model the input sequence either unidirectionally or bidirectionally [6]. Unidirectional RNNs (Figure 1, top) estimate the label posteriors $y_t^l = p(l_t \mid x_t, \overrightarrow{h}_t)$ using only the left context of the current input $x_t$, by processing the input from left to right and having a hidden state $\overrightarrow{h}_t$ in the forward direction. This is desirable for applications requiring low latency between inputs and corresponding outputs. Usually, output targets are delayed with respect to the features, giving access to a small amount of right (future) context and improving classification accuracy without incurring much latency.
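A minimal sketch of such a target delay, assuming frame-level labels: shifting the label sequence right by `delay` frames means that when the network emits the label for frame t it has already consumed frames up to t + delay. The handling of the sequence ends is an assumption (the input is extended by repeating its last frame, and the first `delay` output steps get a hypothetical filler label), and the delay value in the example is illustrative only.

```python
def delay_targets(frames, labels, delay, filler_label):
    """Return (frames, labels) with the labels delayed by `delay` frames.

    After the shift, output step t is trained on the label of input frame
    t - delay, so `delay` frames of future context are visible to it.
    """
    if delay < 0:
        raise ValueError("delay must be non-negative")
    if len(frames) != len(labels):
        raise ValueError("expected one label per frame")
    if delay == 0 or not frames:
        return list(frames), list(labels)
    # Assumption: extend the input by repeating its last frame so that the
    # last original label still has an input step at which it is emitted.
    padded_frames = list(frames) + [frames[-1]] * delay
    # The first `delay` output steps have no target yet; mark them with a filler.
    delayed_labels = [filler_label] * delay + list(labels)
    return padded_frames, delayed_labels
```

With `delay=2`, frames `f0 f1 f2` and labels `a b c`, output step 2 (reading `f2`) is trained on `a`, the label of `f0`, having seen two frames beyond it.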

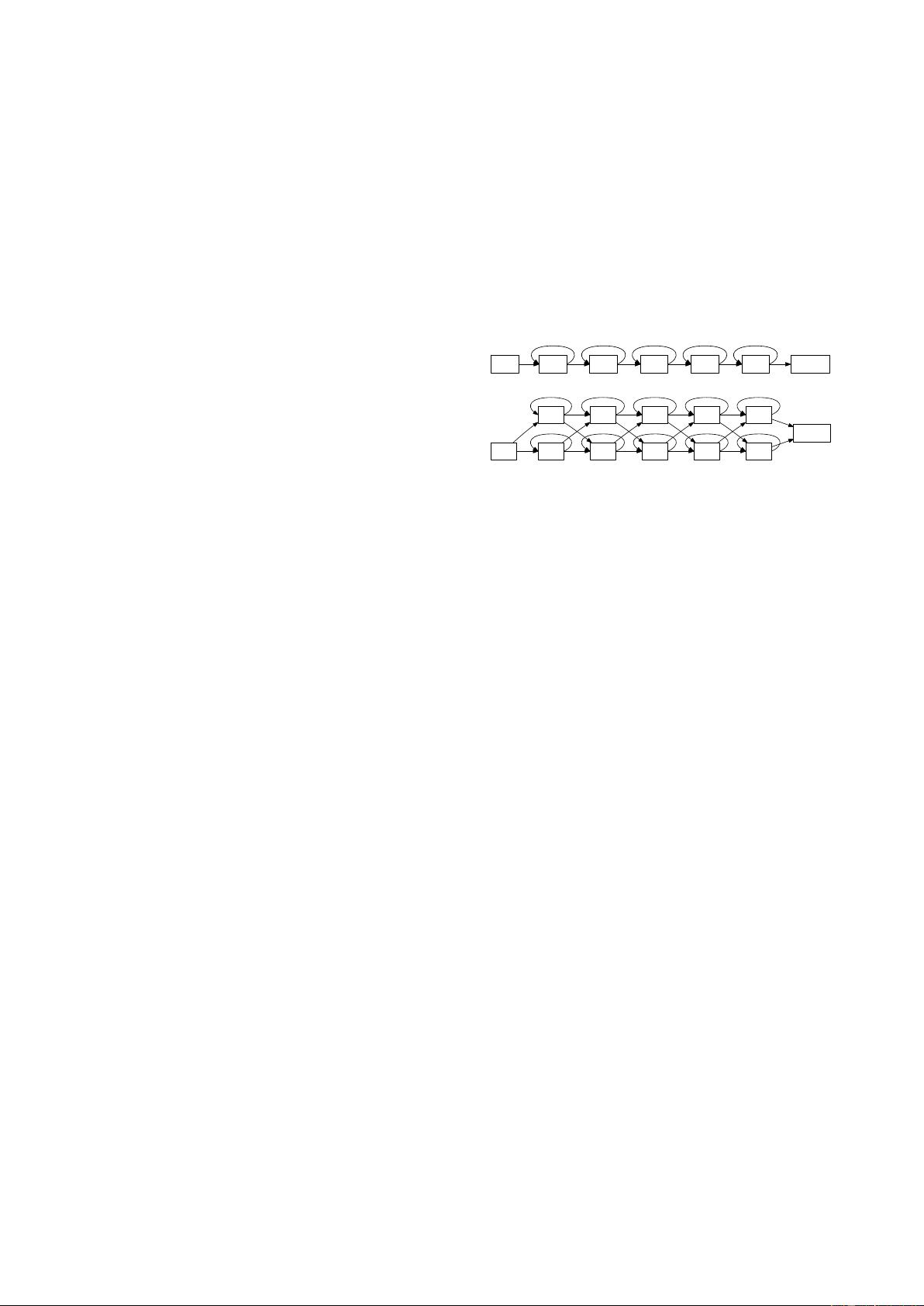

If one can afford the latency of seeing the entire sequence, bidirectional RNNs (Figure 1, bottom) estimate the label posteriors $p(l_t \mid x_t, \overrightarrow{h}_t, \overleftarrow{h}_t)$ using separate layers for processing the input in the forward and backward directions. We use deep LSTM RNN architectures built by stacking multiple LSTM layers; these have been shown to perform better than shallow models for speech recognition [7, 8, 9, 3]. For bidirectional models, we use two LSTM layers at each depth, one operating in the forward and the other in the backward direction over the input sequence. Both of these layers are connected to both the forward and backward layers at the previous depth, and the output layer is likewise connected to both the final forward and backward layers. We experiment with different acoustic units for the output layer, including context dependent HMM states and phones, both context independent and context dependent (Section 2.4).

We train the models in a distributed manner using asynchronous stochastic gradient descent (ASGD), which allows training to be parallelized over a large number of machines on a cluster and enables large-scale training of neural networks [10, 11, 12, 13, 3]. The weights in all the networks are randomly initialized from a uniform (-0.04, 0.04) distribution. We clip the activations of the memory cells to [-50, 50] and their gradients to [-1, 1], which makes CTC training stable.
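The initialization and clipping settings above can be sketched for a single LSTM layer as follows. This is a simplified illustration under stated assumptions, not the network used here: a plain LSTM cell with input, forget and output gates and no peephole or projection connections, pure-Python lists instead of a tensor library, and hypothetical names (`LstmLayer`, `clip_cell_grads`). Only the forward step is shown; `clip_cell_grads` stands in for the point in backpropagation through time where gradients with respect to the memory cells would be clipped.

```python
import math
import random

INIT_SCALE = 0.04  # weights drawn from uniform(-0.04, 0.04)
CELL_CLIP = 50.0   # memory-cell activations clipped to [-50, 50]
GRAD_CLIP = 1.0    # memory-cell gradients clipped to [-1, 1]


def clip(x, bound):
    return max(-bound, min(bound, x))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_matrix(rows, cols, rng):
    return [[rng.uniform(-INIT_SCALE, INIT_SCALE) for _ in range(cols)] for _ in range(rows)]


def matvec(w, v):
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


class LstmLayer:
    """Plain LSTM layer (assumed: no peepholes, no projection)."""

    def __init__(self, n_in, n_cell, seed=0):
        rng = random.Random(seed)
        self.n_cell = n_cell
        # One matrix per gate (i, f, o) and cell input (g) over [x_t, h_{t-1}, 1];
        # the last column acts as the bias.
        self.w = {gate: init_matrix(n_cell, n_in + n_cell + 1, rng) for gate in "ifog"}

    def step(self, x, h_prev, c_prev):
        z = list(x) + list(h_prev) + [1.0]  # trailing 1.0 multiplies the bias column
        i = [sigmoid(a) for a in matvec(self.w["i"], z)]
        f = [sigmoid(a) for a in matvec(self.w["f"], z)]
        o = [sigmoid(a) for a in matvec(self.w["o"], z)]
        g = [math.tanh(a) for a in matvec(self.w["g"], z)]
        # New cell state, clipped elementwise to [-CELL_CLIP, CELL_CLIP].
        c = [clip(fj * cj + ij * gj, CELL_CLIP) for fj, cj, ij, gj in zip(f, c_prev, i, g)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h, c


def clip_cell_grads(grads):
    """Clip gradients with respect to the memory cells elementwise."""
    return [clip(gv, GRAD_CLIP) for gv in grads]
```

Clipping the cell state bounds how far a single cell can drift over long utterances, while clipping the cell gradients bounds the size of any single backpropagated update.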

2.1. CTC Training

The CTC approach [14] is a technique for sequence labeling using RNNs where the alignment between the inputs and tar-

arXiv:1507.06947v1 [cs.CL] 24 Jul 2015