Vote3Deep: Fast Object Detection in 3D Point Clouds Using Efficient Convolutional Neural Networks

Martin Engelcke, Dushyant Rao, Dominic Zeng Wang, Chi Hay Tong, Ingmar Posner

Abstract— This paper proposes a computationally efficient

approach to detecting objects natively in 3D point clouds

using convolutional neural networks (CNNs). In particular, this

is achieved by leveraging a feature-centric voting scheme to

implement novel convolutional layers which explicitly exploit

the sparsity encountered in the input. To this end, we

examine the trade-off between accuracy and speed for different

architectures and additionally propose to use an $L_1$ penalty

on the filter activations to further encourage sparsity in the

intermediate representations. To the best of our knowledge, this

is the first work to propose sparse convolutional layers and $L_1$

regularisation for efficient large-scale processing of 3D data.

We demonstrate the efficacy of our approach on the KITTI

object detection benchmark and show that Vote3Deep models

with as few as three layers outperform the previous state of the

art in both laser and laser-vision based approaches by margins

of up to 40% while remaining highly competitive in terms of

processing time.

I. INTRODUCTION

3D point cloud data is ubiquitous in mobile robotics

applications such as autonomous driving, where efficient and

robust object detection is pivotal for planning and decision

making. Recently, computer vision has been undergoing

a transformation through the use of convolutional neural

networks (CNNs) (e.g. [1], [2], [3], [4]). Methods which

process 3D point clouds, however, have not yet experienced a

comparable breakthrough. We attribute this lack of progress

to the computational burden introduced by the third spatial

dimension. The resulting increase in the size of the input

and intermediate representations renders a naive transfer of

CNNs from 2D vision applications to native 3D perception

in point clouds infeasible for large-scale applications. As a

result, previous approaches tend to convert the data into a 2D

representation first, where nearby features are not necessarily

adjacent in the physical 3D space – requiring models to

recover these geometric relationships.

In contrast to image data, however, typical point clouds

encountered in mobile robotics are spatially sparse, as most

regions are unoccupied. This fact was exploited in [5],

where the authors propose Vote3D, a feature-centric voting

algorithm leveraging the sparsity inherent in these point

clouds. The computational cost is proportional only to the

number of occupied cells rather than the total number of

cells in a 3D grid. [5] proves the equivalence of the voting

scheme to a dense convolution operation and demonstrates

its effectiveness by discretising point clouds into 3D grids

and performing exhaustive 3D sliding window detection with

Authors are from the Oxford Robotics Institute, University of Oxford.

{firstname}@robots.ox.ac.uk

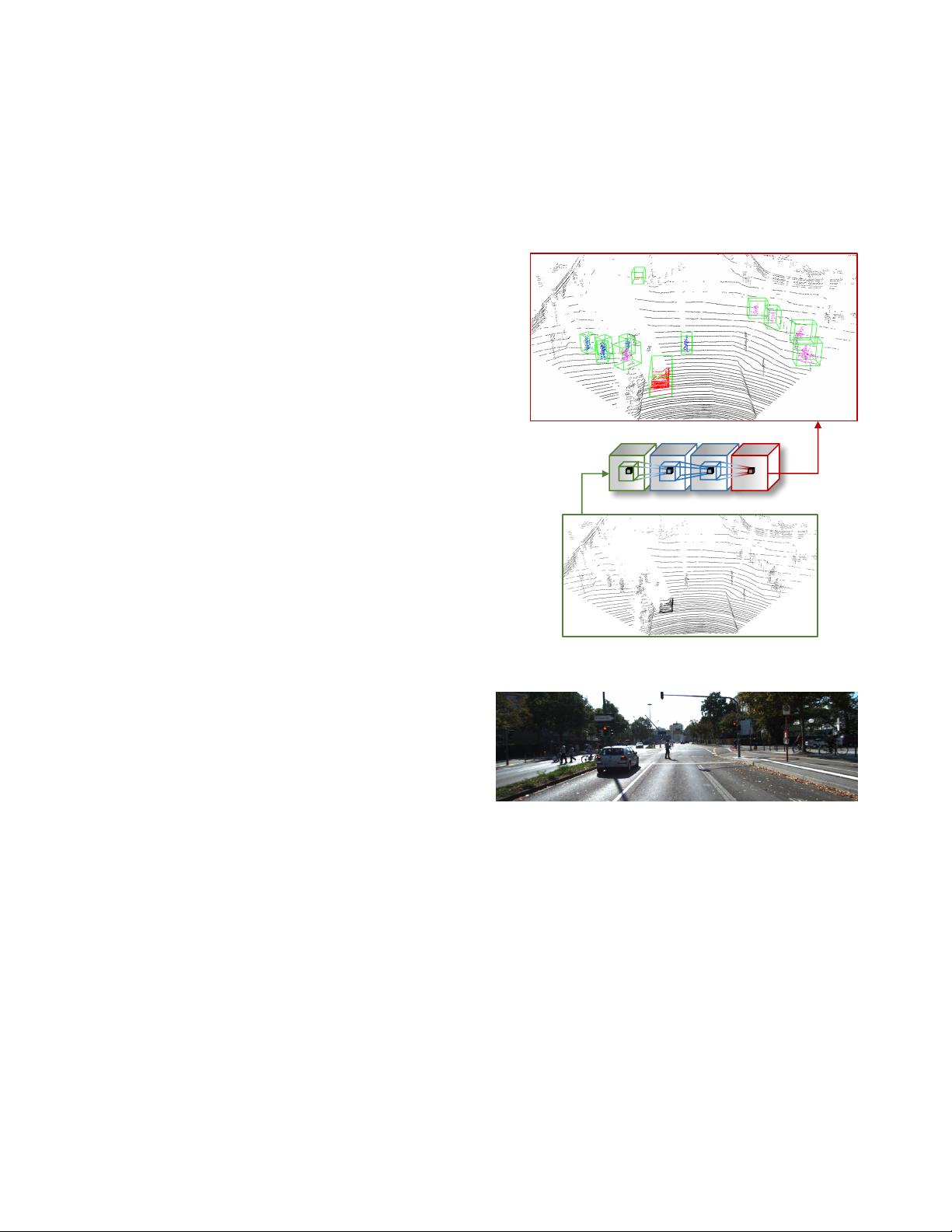

Inpu t&Point&Cloud

Object&Detections

CNNs

(a) 3D point cloud detection with CNNs

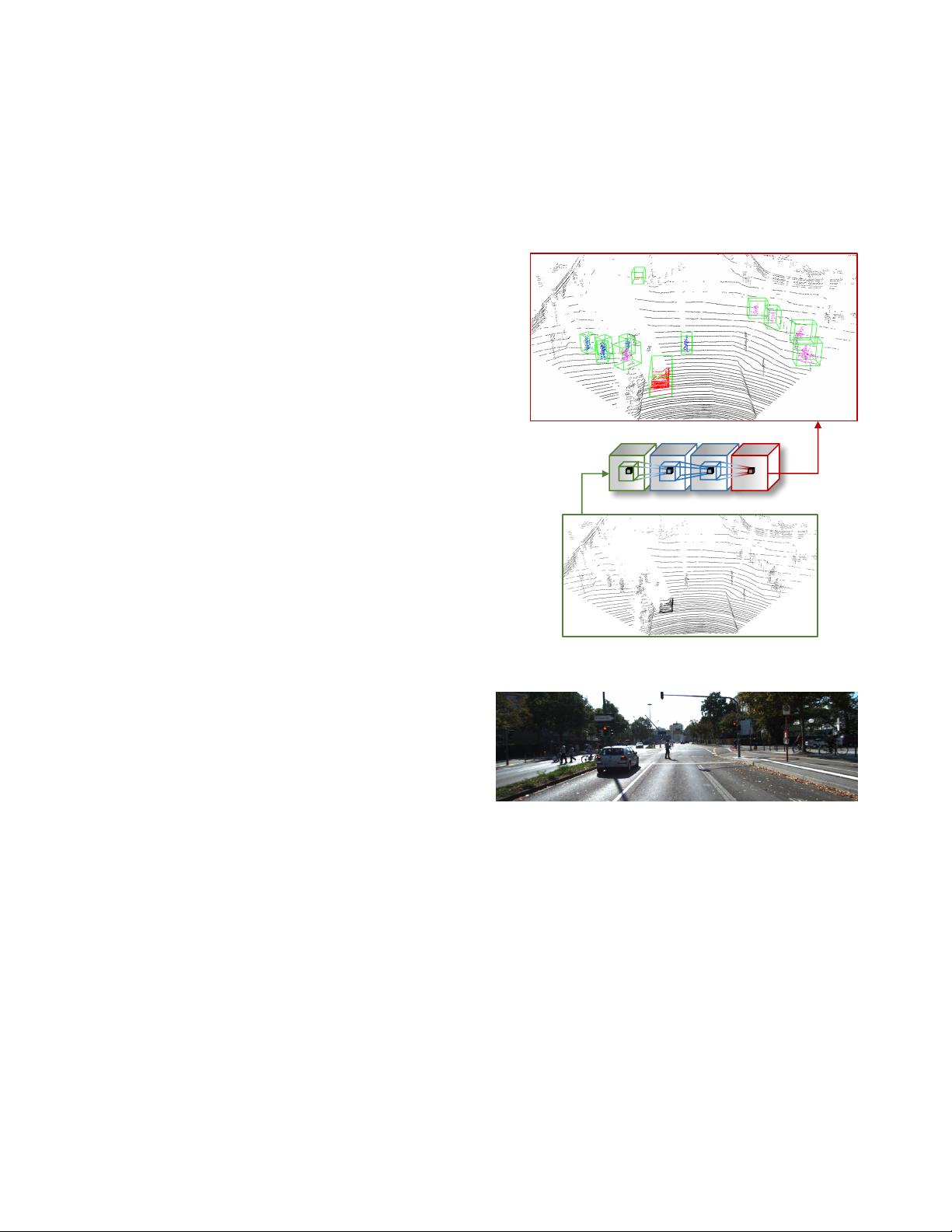

(b) Reference image

Fig. 1. The result of applying Vote3Deep to an unseen point cloud from

the KITTI dataset, with the corresponding image for reference. The CNNs

apply sparse convolutions natively in 3D via voting. The model detects cars

(red), pedestrians (blue), and cyclists (magenta), even at long range, and

assigns bounding boxes (green) sized by class. Best viewed in colour.

a linear Support Vector Machine (SVM). Consequently, [5]

achieves the previous state of the art in both performance and

processing speed for detecting cars, pedestrians and cyclists

in point clouds on the object detection task from the popular

KITTI Vision Benchmark Suite [6].
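To make the equivalence between feature-centric voting and dense convolution concrete, the following is a minimal NumPy sketch rather than the implementation used in [5] or in this paper. It assumes a single feature channel, a cubic kernel of odd size, zero padding, and the cross-correlation form of convolution common in CNNs. The function names `dense_conv3d` and `voting_conv3d` are hypothetical. Each occupied cell casts votes into its neighbourhood, weighted by the kernel flipped along every spatial axis, so the work scales with the number of occupied cells.

```python
import numpy as np


def dense_conv3d(grid, kernel):
    """Reference: zero-padded, CNN-style (cross-correlation) 3D convolution.

    Visits every cell of the grid, occupied or not.
    """
    k = kernel.shape[0]          # assumption: cubic kernel with odd side length
    r = k // 2
    padded = np.pad(grid, r)     # zero padding on all sides
    out = np.zeros_like(grid, dtype=float)
    for x, y, z in np.ndindex(grid.shape):
        out[x, y, z] = np.sum(padded[x:x + k, y:y + k, z:z + k] * kernel)
    return out


def voting_conv3d(grid, kernel):
    """Same result via voting: only non-zero cells do any work."""
    k = kernel.shape[0]
    r = k // 2
    flipped = kernel[::-1, ::-1, ::-1]   # voting weights: kernel flipped per axis
    out = np.zeros(grid.shape, dtype=float)
    for x, y, z in np.argwhere(grid != 0):
        # Clip the voting neighbourhood to the grid boundaries.
        x0, x1 = max(x - r, 0), min(x + r + 1, grid.shape[0])
        y0, y1 = max(y - r, 0), min(y + r + 1, grid.shape[1])
        z0, z1 = max(z - r, 0), min(z + r + 1, grid.shape[2])
        votes = flipped[x0 - (x - r):x1 - (x - r),
                        y0 - (y - r):y1 - (y - r),
                        z0 - (z - r):z1 - (z - r)]
        out[x0:x1, y0:y1, z0:z1] += grid[x, y, z] * votes
    return out


# Illustrative data: a 16^3 grid with roughly 2% of cells occupied.
rng = np.random.default_rng(0)
grid = np.zeros((16, 16, 16))
occupied = rng.random(grid.shape) < 0.02
grid[occupied] = rng.normal(size=occupied.sum())
kernel = rng.normal(size=(3, 3, 3))

assert np.allclose(dense_conv3d(grid, kernel), voting_conv3d(grid, kernel))
print(f"{int(occupied.sum())} of {grid.size} cells cast votes")
```

The dense loop touches every cell of the grid, whereas the voting loop iterates only over the occupied cells, which is the source of the cost saving described above.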

Inspired by [5], we propose to exploit feature-centric

voting to build efficient CNNs to detect objects in point

clouds natively in 3D – that is to say without projecting the

input into a lower-dimensional space first or constraining the

search space of the detector (Fig. 1). This enables our ap-

arXiv:1609.06666v2 [cs.RO] 5 Mar 2017