
Pattern Recognition Classic: Richard Duda's *Pattern Classification*, Second Edition


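Updated 2024-07-24 | 11.28 MB PDF

"Richard O. Duda's *Pattern Classification* is a classic textbook in the field of pattern recognition. This is the original English second edition, which is widely used in pattern recognition courses at universities abroad. The book covers many subproblems of pattern classification, including machine perception, feature extraction, noise, overfitting, and model selection. It also examines in depth the different kinds of learning and adaptation, such as supervised, unsupervised, and reinforcement learning."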

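Duda's book introduces pattern classification in a way that is both accessible and thorough. It begins by presenting machine perception as the foundation of pattern recognition: the question of how to get computers to understand and interpret information arriving through different sensory channels. Through a worked example, the author then introduces the related fields that pattern recognition draws on, including signal processing, statistics, and artificial intelligence.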

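Next, Duda sets out the subproblems of pattern classification in detail. Feature extraction converts raw data into a meaningful representation and is a key stage that follows preprocessing. Noise can interfere with this process, so effective noise-handling strategies are needed. Overfitting is a common problem when training models and can cause poor performance on new data. Model selection concerns finding the best balance among candidate models for a particular task. The book's discussion of prior knowledge stresses the importance of using prior information to guide classification decisions. Its treatment of missing features covers methods for classifying when the data are incomplete.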

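Mereology (the relationship between parts and wholes) and segmentation are common concepts in image analysis; they help us understand the structure and boundaries of objects. Context is crucial for interpreting the meaning of a pattern, especially in natural language processing and image recognition. Invariance means that a model should recognize the same pattern under different transformations, such as rotation or scaling. Evidence pooling concerns how to combine several sources of evidence to reach more accurate decisions. Considering costs and risks lets us weigh the consequences of misclassification. Finally, computational complexity concerns the efficiency of algorithms, which is essential for scalability and resource management in practical applications.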

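In the section on learning and adaptation, Duda distinguishes three main forms of learning:

- supervised learning, in which a model is trained on known input-output pairs;
- unsupervised learning, in which the model tries to discover structure in unlabeled data;
- reinforcement learning, which improves a decision policy through interaction with the environment.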

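Each chapter's summary helps readers review the key concepts, while the bibliographical and historical remarks point the way to further study. The book is comprehensive and is an indispensable reference for readers who want a thorough understanding of pattern recognition theory and techniques.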

4 CHAPTER 1. INTRODUCTION

images: variations in lighting, position of the fish on the conveyor, even “static” due to the electronics of the camera itself.

Given that there truly are differences between the population of sea bass and that of salmon, we view them as having different models, that is, different descriptions, which are typically mathematical in form. The overarching goal and approach in pattern classification is to hypothesize the class of these models, process the sensed data to eliminate noise (not due to the models), and for any sensed pattern choose the model that corresponds best. Any techniques that further this aim should be in the conceptual toolbox of the designer of pattern recognition systems.
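Here is a toy illustration of "choose the model that corresponds best." The sketch assumes each class is described by a one-dimensional Gaussian model of lightness. It averages repeated readings as a crude stand-in for noise removal, then picks the class whose model gives the sensed value the highest likelihood. The parameters in `MODELS`, the averaging step, and all function names are hypothetical; the text has not yet committed to any particular model family.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical class models: (mean, standard deviation) of lightness.
MODELS: Dict[str, Tuple[float, float]] = {
    "salmon": (4.0, 1.0),
    "sea bass": (7.0, 1.5),
}


def reduce_noise(readings: List[float]) -> float:
    """Crude noise reduction: average several repeated measurements."""
    return sum(readings) / len(readings)


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density at x of a normal distribution with the given mean and std."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def best_model(x: float, models: Dict[str, Tuple[float, float]] = MODELS) -> str:
    """Return the name of the model under which x is most likely."""
    return max(models, key=lambda name: gaussian_pdf(x, *models[name]))


print(best_model(reduce_noise([3.2, 3.9, 3.4])))  # salmon
```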

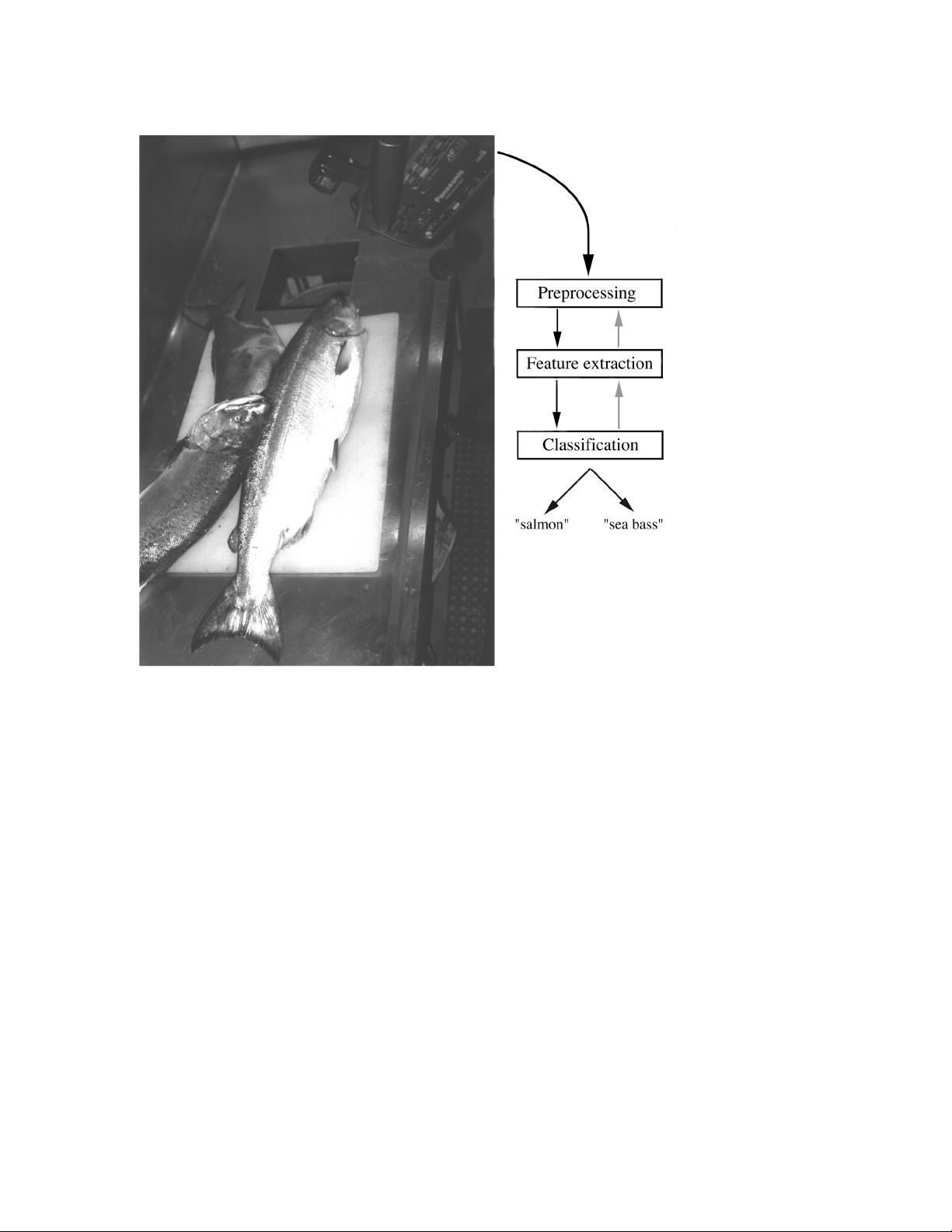

Our prototype system to perform this very specific task might well have the form shown in Fig. 1.1. First the camera captures an image of the fish. Next, the camera's signals are preprocessed to simplify subsequent operations without losing relevant information. In particular, we might use a segmentation operation in which the images of different fish are somehow isolated from one another and from the background. The information from a single fish is then sent to a feature extractor, whose purpose is to reduce the data by measuring certain “features” or “properties.” These features (or, more precisely, the values of these features) are then passed to a classifier that evaluates the evidence presented and makes a final decision as to the species.
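The four stages just described can be sketched as plain functions. This is a minimal sketch under strong simplifying assumptions that are not in the text:

- the image is a small grid of grayscale intensities in [0, 1];
- the conveyor belt is darker than any fish;
- each image contains exactly one fish;
- the classifier uses lightness alone.

The parameter names `belt_threshold` and `x_star` are hypothetical.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]  # grayscale pixel intensities in [0, 1]


def preprocess(image: Image, belt_threshold: float) -> Image:
    """Threshold away the (assumed darker) conveyor belt: pixels at or
    below belt_threshold become 0.0, all others are kept unchanged."""
    return [[p if p > belt_threshold else 0.0 for p in row] for row in image]


def segment(image: Image) -> List[Tuple[int, int]]:
    """Return (row, col) coordinates of foreground pixels.
    Simplification: assumes exactly one fish per image."""
    return [(r, c) for r, row in enumerate(image)
            for c, p in enumerate(row) if p > 0.0]


def extract_features(image: Image, fish: List[Tuple[int, int]]) -> Dict[str, float]:
    """Reduce the fish's pixels to two numbers: length (horizontal
    extent in pixels) and average lightness."""
    cols = [c for _, c in fish]
    length = max(cols) - min(cols) + 1
    lightness = sum(image[r][c] for r, c in fish) / len(fish)
    return {"length": float(length), "lightness": lightness}


def classify(features: Dict[str, float], x_star: float) -> str:
    """Single-feature rule: lighter than x_star means sea bass (cf. Fig. 1.3)."""
    return "sea bass" if features["lightness"] > x_star else "salmon"


def pipeline(image: Image, belt_threshold: float, x_star: float) -> str:
    clean = preprocess(image, belt_threshold)
    fish = segment(clean)
    if not fish:
        raise ValueError("no foreground pixels found")
    return classify(extract_features(clean, fish), x_star)


belt, bright = 0.1, 0.8
image = [[belt] * 6, [belt, bright, bright, bright, bright, belt], [belt] * 6]
print(pipeline(image, belt_threshold=0.2, x_star=0.5))  # sea bass
```

Segmentation is trivial here only because of the one-fish assumption; with touching or overlapping fish on a real conveyor it would be far harder.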

The preprocessor might automatically adjust for average light level, or threshold the image to remove the background of the conveyor belt, and so forth. For the moment let us pass over how the images of the fish might be segmented and consider how the feature extractor and classifier might be designed. Suppose somebody at the fish plant tells us that a sea bass is generally longer than a salmon. This, then, gives us our tentative models for the fish: sea bass have some typical length, and this is greater than that for salmon. Then length becomes an obvious feature, and we might attempt to classify the fish merely by seeing whether or not the length $l$ of a fish exceeds some critical value $l^*$. To choose $l^*$ we could obtain some design or training samples of the different types of fish, (somehow) make length measurements, and inspect the results.
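Choosing $l^*$ by inspecting training samples can be automated: try every candidate threshold and count the training errors. The sketch below assumes the rule "sea bass if $l > l^*$". The sample lengths are made-up numbers for illustration, not the data behind Fig. 1.2.

```python
from typing import List, Optional, Tuple


def candidate_thresholds(values: List[float]) -> List[float]:
    """Midpoints between consecutive distinct values, plus one point below
    and one above the data, cover every distinct way of splitting it."""
    v = sorted(set(values))
    return [v[0] - 1.0] + [(a + b) / 2 for a, b in zip(v, v[1:])] + [v[-1] + 1.0]


def best_threshold(salmon: List[float], sea_bass: List[float]) -> Tuple[float, int]:
    """Return (l*, training errors) for the rule 'sea bass if length > l*'.
    Ties are broken in favor of the smallest threshold."""
    best_t: Optional[float] = None
    best_err: Optional[int] = None
    for t in candidate_thresholds(salmon + sea_bass):
        errors = (sum(length > t for length in salmon)
                  + sum(length <= t for length in sea_bass))
        if best_err is None or errors < best_err:
            best_t, best_err = t, errors
    return best_t, best_err


# Made-up lengths; the two classes overlap, as in Fig. 1.2.
salmon = [8.0, 10.0, 11.0, 13.0, 15.0]
sea_bass = [12.0, 14.0, 16.0, 18.0, 20.0]
print(best_threshold(salmon, sea_bass))  # (11.5, 2)
```

Even the best threshold leaves two of the ten samples misclassified. That is the situation the histograms of Fig. 1.2 describe: overlapping classes cannot be separated by a single length cut.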

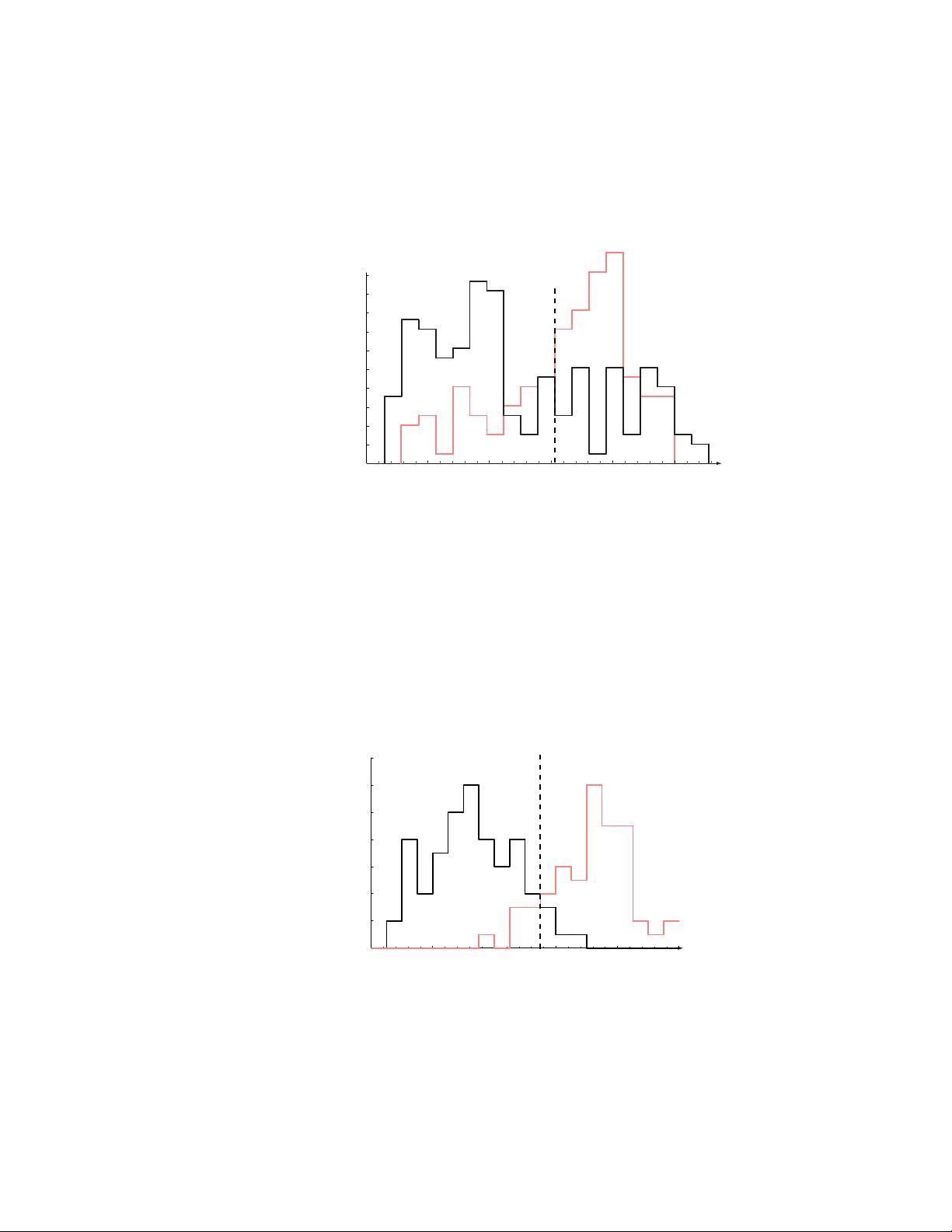

Suppose that we do this, and obtain the histograms shown in Fig. 1.2. These disappointing histograms bear out the statement that sea bass are somewhat longer than salmon, on average, but it is clear that this single criterion is quite poor; no matter how we choose $l^*$, we cannot reliably separate sea bass from salmon by length alone.

Discouraged, but undeterred by these unpromising results, we try another feature: the average lightness of the fish scales. Now we are very careful to eliminate variations in illumination, since they can only obscure the models and corrupt our new classifier. The resulting histograms, shown in Fig. 1.3, are much more satisfactory: the classes are much better separated.

So far we have tacitly assumed that the consequences of our actions are equally costly: deciding the fish was a sea bass when in fact it was a salmon was just as undesirable as the converse. Such a symmetry in the cost is often, but not invariably, the case. For instance, as a fish packing company we may know that our customers easily accept occasional pieces of tasty salmon in their cans labeled “sea bass,” but they object vigorously if a piece of sea bass appears in their cans labeled “salmon.” If we want to stay in business, we should adjust our decision boundary to avoid antagonizing our customers, even if it means that more salmon makes its way into the cans of sea bass. In this case, then, we should move our decision boundary $x^*$ to smaller values of lightness, thereby reducing the number of sea bass that are classified as salmon (Fig. 1.3). The more our customers object to getting sea bass with their salmon (i.e., the more costly this type of error), the lower we should set the decision threshold $x^*$ in Fig. 1.3.

1.2. AN EXAMPLE 5

Figure 1.1: The objects to be classified are first sensed by a transducer (camera), whose signals are preprocessed, then the features extracted and finally the classification emitted (here either “salmon” or “sea bass”). Although the information flow is often chosen to be from the source to the classifier (“bottom-up”), some systems employ “top-down” flow as well, in which earlier levels of processing can be altered based on the tentative or preliminary response in later levels (gray arrows). Yet others combine two or more stages into a unified step, such as simultaneous segmentation and feature extraction.

Such considerations suggest that there is an overall single cost associated with our decision, and our true task is to make a decision rule (i.e., set a decision boundary) so as to minimize such a cost. This is the central task of decision theory, of which pattern classification is perhaps the most important subfield.
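Minimizing an overall cost instead of a raw error count changes only the quantity being minimized. The sketch below makes three assumptions:

- each kind of mistake carries a fixed cost per fish;
- the rule is "sea bass if lightness $> x^*$";
- the lightness values and cost figures are hypothetical.

Raising the cost of a sea bass ending up in a salmon can moves the chosen $x^*$ toward smaller lightness values.

```python
from typing import List


def candidate_thresholds(values: List[float]) -> List[float]:
    """Midpoints between consecutive distinct values, plus one point
    below and one above the data."""
    v = sorted(set(values))
    return [v[0] - 1.0] + [(a + b) / 2 for a, b in zip(v, v[1:])] + [v[-1] + 1.0]


def min_cost_threshold(salmon: List[float], sea_bass: List[float],
                       cost_salmon_as_bass: float = 1.0,
                       cost_bass_as_salmon: float = 1.0) -> float:
    """Return the x* that minimizes the total cost of the rule
    'sea bass if lightness > x*' on the given samples."""
    def total_cost(t: float) -> float:
        salmon_in_bass_cans = sum(x > t for x in salmon)
        bass_in_salmon_cans = sum(x <= t for x in sea_bass)
        return (cost_salmon_as_bass * salmon_in_bass_cans
                + cost_bass_as_salmon * bass_in_salmon_cans)
    # Candidates are ascending, so min() keeps the smallest t among ties.
    return min(candidate_thresholds(salmon + sea_bass), key=total_cost)


salmon = [2.0, 3.0, 3.5, 4.0, 5.0, 6.0]   # hypothetical lightness values
sea_bass = [4.5, 6.5, 7.0, 8.0, 9.0]
print(min_cost_threshold(salmon, sea_bass))                           # 6.25
print(min_cost_threshold(salmon, sea_bass, cost_bass_as_salmon=3.0))  # 4.25
```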

Even if we know the costs associated with our decisions and choose the optimal

decision boundary x

∗

, we may be dissatisfied with the resulting performance. Our

first impulse might be to seek yet a different feature on which to separate the fish.

Let us assume, though, that no other single visual feature yields better performance

than that based on lightness. To improve recognition, then, we must resort to the use

6 CHAPTER 1. INTRODUCTION

salmon sea bass

Length

Count

l*

0

2

4

6

8

10

12

16

18

20

22

510 2015 25

Figure 1.2: Histograms for the length feature for the two categories. No single thresh-

old value l

∗

(decision boundary) will serve to unambiguously discriminate between

the two categories; using length alone, we will have some errors. The value l

∗

marked

will lead to the smallest number of errors, on average.

2 4 6 8 10

0

2

4

6

8

10

12

14

Lightness

Count

x*

salmon sea bass

Figure 1.3: Histograms for the lightness feature for the two categories. No single

threshold value x

∗

(decision boundary) will serve to unambiguously discriminate be-

tween the two categories; using lightness alone, we will have some errors. The value

x

∗

marked will lead to the smallest number of errors, on average.

1.2. AN EXAMPLE 7

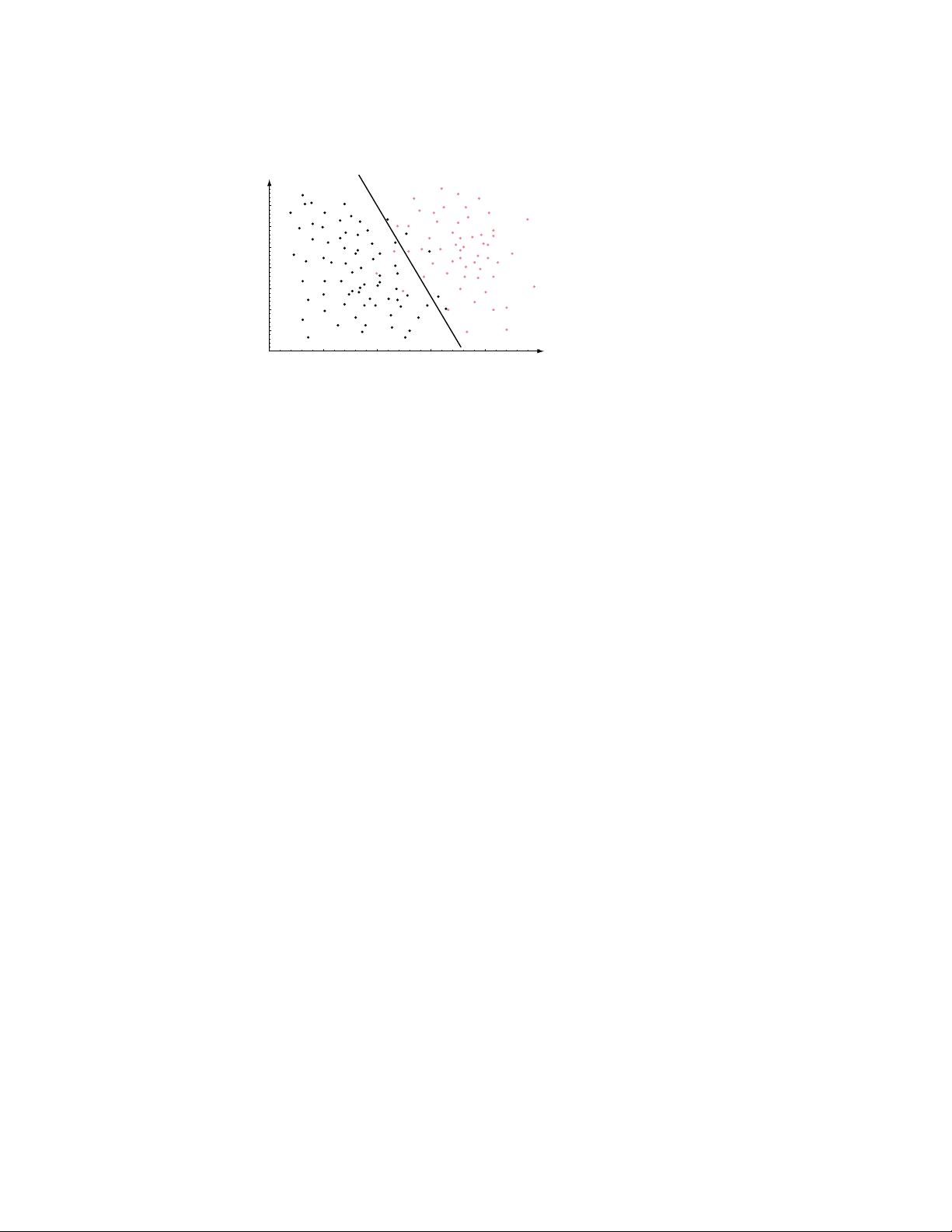

2 4 6 8 10

14

15

16

17

18

19

20

21

22

Width

Lightness

salmon sea bass

Figure 1.4: The two features of lightness and width for sea bass and salmon. The

dark line might serve as a decision boundary of our classifier. Overall classification

error on the data shown is lower than if we use only one feature as in Fig. 1.3, but

there will still be some errors.

of more than one feature at a time.

In our search for other features, we might try to capitalize on the observation that sea bass are typically wider than salmon. Now we have two features for classifying fish: the lightness $x_1$ and the width $x_2$. If we ignore how these features might be measured in practice, we realize that the feature extractor has thus reduced the image of each fish to a point or feature vector $\mathbf{x}$ in a two-dimensional feature space, where

$$\mathbf{x} = \begin{pmatrix} x_1 \\ x_2 \end{pmatrix}.$$

Our problem now is to partition the feature space into two regions, where for all patterns in one region we will call the fish a sea bass, and for all points in the other we call it a salmon. Suppose that we measure the feature vectors for our samples and obtain the scattering of points shown in Fig. 1.4. This plot suggests the following rule for separating the fish: classify the fish as sea bass if its feature vector falls above the decision boundary shown, and as salmon otherwise.
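The rule "sea bass if the feature vector falls above the line" is a linear discriminant. Write the boundary as $x_2 = m x_1 + c$. A point then lies above it exactly when $g(\mathbf{x}) = x_2 - (m x_1 + c) > 0$. The sketch assumes lightness is $x_1$ and width is $x_2$. The slope, intercept, and sample points are hypothetical, since the text does not give the line in Fig. 1.4 numerically.

```python
from typing import List, Tuple

FeatureVector = Tuple[float, float]  # (x1, x2) = (lightness, width)


def discriminant(x: FeatureVector, slope: float, intercept: float) -> float:
    """g(x) = x2 - (slope * x1 + intercept); positive above the line."""
    x1, x2 = x
    return x2 - (slope * x1 + intercept)


def classify(x: FeatureVector, slope: float, intercept: float) -> str:
    """Sea bass above the boundary, salmon on or below it."""
    return "sea bass" if discriminant(x, slope, intercept) > 0 else "salmon"


def training_errors(samples: List[Tuple[FeatureVector, str]],
                    slope: float, intercept: float) -> int:
    """Count samples whose label disagrees with the linear rule."""
    return sum(classify(x, slope, intercept) != label for x, label in samples)


# Hypothetical boundary and samples.
slope, intercept = -0.5, 21.0
samples = [((7.0, 20.0), "sea bass"), ((3.0, 16.0), "salmon"),
           ((4.0, 20.0), "salmon"), ((8.0, 18.0), "sea bass")]
print(training_errors(samples, slope, intercept))  # 1
```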

This rule appears to do a good job of separating our samples and suggests that perhaps incorporating yet more features would be desirable. Besides the lightness and width of the fish, we might include some shape parameter, such as the vertex angle of the dorsal fin, or the placement of the eyes (expressed as a proportion of the mouth-to-tail distance), and so on. How do we know beforehand which of these features will work best? Some features might be redundant: for instance, if the eye color of all fish correlated perfectly with width, then classification performance need not be improved if we also include eye color as a feature. Even if the difficulty or computational cost in attaining more features is of no concern, might we ever have too many features?
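A quick, admittedly partial, screen for this kind of redundancy is the sample correlation between two features. A value near ±1 means one feature is linearly predictable from the other. The sketch assumes eye color has been coded as a number; both the coding and the measurements are hypothetical, as is the 0.99 cutoff. Correlation only detects linear dependence, so a low value does not by itself prove that a feature adds useful information.

```python
import math
from typing import List


def pearson(a: List[float], b: List[float]) -> float:
    """Sample Pearson correlation coefficient of two equal-length lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def looks_redundant(a: List[float], b: List[float], tol: float = 0.99) -> bool:
    """Flag a feature pair whose |correlation| exceeds tol (hypothetical cutoff)."""
    return abs(pearson(a, b)) > tol


width = [15.0, 16.0, 18.0, 20.0, 21.0]
eye_color = [2.0 * w + 1.0 for w in width]   # perfectly tied to width
lightness = [3.0, 7.0, 4.0, 8.0, 5.0]
print(looks_redundant(width, eye_color))  # True
print(looks_redundant(width, lightness))  # False
```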

Suppose that other features are too expensive or expensive to measure, or provide

little improvement (or possibly even degrade the performance) in the approach de-

scribed above, and that we are forced to make our decision based on the two features

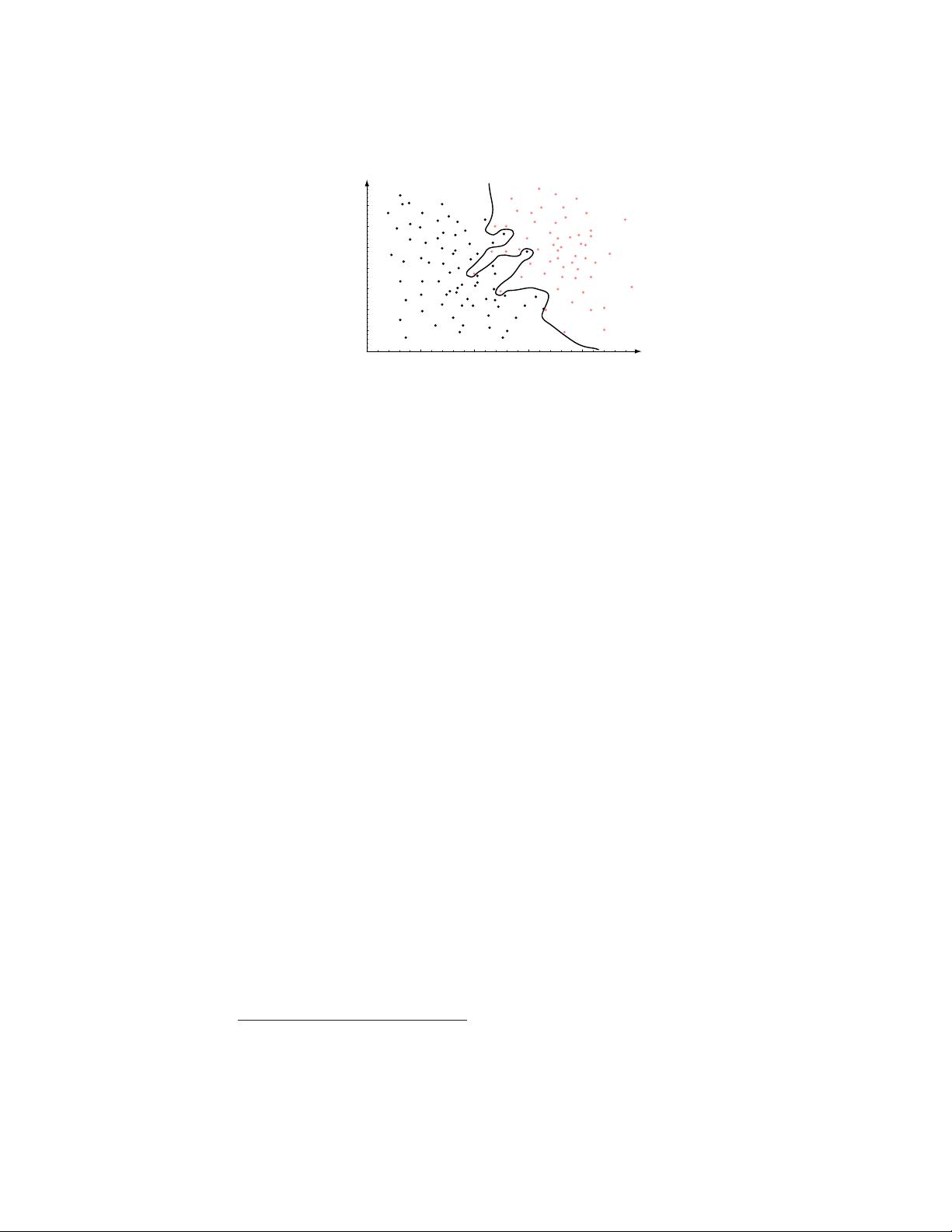

in Fig. 1.4. If our models were extremely complicated, our classifier would have a

decision boundary more complex than the simple straight line. In that case all the

8 CHAPTER 1. INTRODUCTION

2 4 6 8 10

14

15

16

17

18

19

20

21

22

Width

Lightness

?

salmon sea bass

Figure 1.5: Overly complex models for the fish will lead to decision boundaries that are

complicated. While such a decision may lead to perfect classification of our training

samples, it would lead to poor performance on future patterns. The novel test point

marked ? is evidently most likely a salmon, whereas the complex decision boundary

shown leads it to be misclassified as a sea bass.

training patterns would be separated perfectly, as shown in Fig. 1.5. With such a

“solution,” though, our satisfaction would be premature because the central aim of

designing a classifier is to suggest actions when presented with novel patterns, i.e.,

fish not yet seen. This is the issue of generalization. It is unlikely that the complexgeneral-

ization decision boundary in Fig. 1.5 would provide good generalization, since it seems to be

“tuned” to the particular training samples, rather than some underlying characteris-

tics or true model of all the sea bass and salmon that will have to be separated.

Naturally, one approach would be to get more training samples for obtaining a better estimate of the true underlying characteristics, for instance the probability distributions of the categories. In most pattern recognition problems, however, the amount of such data we can obtain easily is quite limited. Even with a vast amount of training data in a continuous feature space, though, if we followed the approach in Fig. 1.5 our classifier would give a horrendously complicated decision boundary, one that would be unlikely to do well on novel patterns.
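A small simulation shows the danger of a boundary "tuned" to the training samples. The sketch is illustrative only. It draws two overlapping, hypothetical Gaussian classes in (lightness, width) space and compares two rules:

- a 1-nearest-neighbor rule, which reproduces every training label and can therefore carve an arbitrarily intricate boundary like the one in Fig. 1.5;
- a nearest-class-mean rule, whose two-class boundary is a straight line.

Neither classifier is prescribed by the text at this point; they simply stand in for "complex" and "simple."

```python
import random
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]          # (lightness, width)
Sample = Tuple[Point, str]
Classifier = Callable[[Point], str]


def make_samples(n: int, rng: random.Random) -> List[Sample]:
    """n fish per class from two overlapping (hypothetical) Gaussian clouds."""
    data: List[Sample] = []
    for _ in range(n):
        data.append(((rng.gauss(4.0, 1.2), rng.gauss(17.0, 1.2)), "salmon"))
        data.append(((rng.gauss(6.5, 1.2), rng.gauss(19.0, 1.2)), "sea bass"))
    return data


def sq_dist(p: Point, q: Point) -> float:
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def fit_one_nn(train: List[Sample]) -> Classifier:
    """Memorize the training set; predict the label of the closest sample."""
    return lambda x: min(train, key=lambda s: sq_dist(s[0], x))[1]


def fit_nearest_mean(train: List[Sample]) -> Classifier:
    """Predict the class whose mean is closest (a straight-line boundary)."""
    means: Dict[str, Point] = {}
    for label in {lab for _, lab in train}:
        pts = [p for p, lab in train if lab == label]
        means[label] = (sum(p[0] for p in pts) / len(pts),
                        sum(p[1] for p in pts) / len(pts))
    return lambda x: min(means, key=lambda lab: sq_dist(means[lab], x))


def error_rate(predict: Classifier, data: List[Sample]) -> float:
    return sum(predict(x) != label for x, label in data) / len(data)


rng = random.Random(0)
train, test = make_samples(20, rng), make_samples(500, rng)
for name, fit in [("1-NN", fit_one_nn), ("nearest mean", fit_nearest_mean)]:
    predict = fit(train)
    print(f"{name}: train error {error_rate(predict, train):.3f}, "
          f"test error {error_rate(predict, test):.3f}")
```

The 1-NN rule scores zero training error here because every training point is its own nearest neighbor. Compare the two test-error figures, which depend on the random seed. With overlapping classes, a perfect training score says little about performance on fish not yet seen.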

Rather, then, we might seek to “simplify” the recognizer, motivated by a belief that the underlying models will not require a decision boundary that is as complex as that in Fig. 1.5. Indeed, we might be satisfied with the slightly poorer performance on the training samples if it means that our classifier will have better performance on novel patterns.∗ But if designing a very complex recognizer is unlikely to give good generalization, precisely how should we quantify and favor simpler classifiers? How would our system automatically determine that the simple curve in Fig. 1.6 is preferable to the manifestly simpler straight line in Fig. 1.4 or the complicated boundary in Fig. 1.5? Assuming that we somehow manage to optimize this tradeoff, can we then predict how well our system will generalize to new patterns? These are some of the central problems in statistical pattern recognition.
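The text leaves open how to quantify this tradeoff. One widely used practical answer is cross-validation, sketched here; it is not necessarily the approach the book develops. Hold out part of the training data, train on the rest, and measure errors on the held-out part. Below, cross-validation picks the neighborhood size k of a k-nearest-neighbor classifier, where larger k gives smoother decision boundaries. The data generator, the candidate values of k, and the five-fold split are all hypothetical choices.

```python
import random
from collections import Counter
from typing import List, Tuple

Point = Tuple[float, float]   # (lightness, width)
Sample = Tuple[Point, str]


def make_samples(n: int, rng: random.Random) -> List[Sample]:
    """n fish per class from two overlapping (hypothetical) Gaussian clouds."""
    data: List[Sample] = []
    for _ in range(n):
        data.append(((rng.gauss(4.0, 1.2), rng.gauss(17.0, 1.2)), "salmon"))
        data.append(((rng.gauss(6.5, 1.2), rng.gauss(19.0, 1.2)), "sea bass"))
    return data


def knn_predict(train: List[Sample], x: Point, k: int) -> str:
    """Majority label among the k training samples closest to x."""
    nearest = sorted(
        train, key=lambda s: (s[0][0] - x[0]) ** 2 + (s[0][1] - x[1]) ** 2
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def cross_val_error(data: List[Sample], k: int, folds: int = 5) -> float:
    """Fraction of samples misclassified when each fold is held out in turn."""
    errors = 0
    for f in range(folds):
        held_out = data[f::folds]   # indices i with i % folds == f
        train = [s for i, s in enumerate(data) if i % folds != f]
        errors += sum(knn_predict(train, x, k) != label for x, label in held_out)
    return errors / len(data)


rng = random.Random(1)
data = make_samples(30, rng)
rng.shuffle(data)
ks = [1, 3, 5, 9, 15]   # odd values avoid ties between two classes
scores = {k: cross_val_error(data, k) for k in ks}
best_k = min(ks, key=scores.get)
print(scores, "-> chosen k =", best_k)
```

The cross-validated error also gives a rough answer to the paragraph's last question, how well the chosen system might generalize. Once that same number has been used to pick k, though, it tends to be somewhat optimistic.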

For the same incoming patterns, we might need to use a drastically different cost

∗ The philosophical underpinnings of this approach derive from William of Occam (1284-1347?), who advocated favoring simpler explanations over those that are needlessly complicated: *Entia non sunt multiplicanda praeter necessitatem* (“Entities are not to be multiplied without necessity”). Decisions based on overly complex models often lead to lower accuracy of the classifier.

