Monocular 3D Human Pose Estimation by Predicting Depth on Joints

Bruce Xiaohan Nie¹∗, Ping Wei²,¹∗, and Song-Chun Zhu¹

¹Center for Vision, Cognition, Learning, and Autonomy, UCLA, USA

²Xi’an Jiaotong University, China

xiaohan.nie@gmail.com, pingwei@xjtu.edu.cn, sczhu@stat.ucla.edu

Abstract

This paper aims at estimating full-body 3D human poses from monocular images, where the biggest challenge is the inherent ambiguity introduced by lifting the 2D pose into 3D space. We propose a novel framework that reduces this ambiguity by predicting the depth of human joints from 2D human joint locations and body part images. Our approach is built on a two-level hierarchy of Long Short-Term Memory (LSTM) networks which can be trained end-to-end. The first level consists of two components: 1) a skeleton-LSTM, which learns depth information from global human skeleton features; 2) a patch-LSTM, which utilizes the local image evidence around joint locations. Both networks have a tree structure defined on the kinematic relations of the human skeleton, so the information at different joints is broadcast through the whole skeleton in a top-down fashion. The two networks are first pre-trained separately on different data sources and then aggregated in the second level for final depth prediction. The empirical evaluation on the Human3.6M and HHOI datasets demonstrates the advantage of combining the global 2D skeleton and local image patches for depth prediction, and our superior quantitative and qualitative performance relative to state-of-the-art methods.
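As a rough illustration of what "broadcast through the whole skeleton in a top-down fashion" can mean, the sketch below walks a small kinematic tree from the root to the leaves, so that each joint's state depends on its parent's state. This is not the paper's LSTM: the joint names, the `PARENT` table, and the simple blending rule in `propagate` are all hypothetical stand-ins chosen only to show the order of computation.

```python
# Hypothetical kinematic tree: child joint -> parent joint (the root has parent None).
PARENT = {
    "pelvis": None,
    "spine": "pelvis",
    "head": "spine",
    "l_shoulder": "spine",
    "l_elbow": "l_shoulder",
    "l_hand": "l_elbow",
}


def top_down_order(parent):
    """Return the joints ordered so that every parent precedes its children."""
    children = {joint: [] for joint in parent}
    roots = []
    for joint, par in parent.items():
        if par is None:
            roots.append(joint)
        else:
            children[par].append(joint)
    order, stack = [], list(reversed(roots))
    while stack:
        joint = stack.pop()
        order.append(joint)
        # Push children in reverse so they are visited in their listed order.
        stack.extend(reversed(children[joint]))
    return order


def propagate(parent, features, mix=0.5):
    """Toy stand-in for a tree-structured recurrent unit (assumed update rule).

    Each joint's state blends its own feature with its parent's state,
    so information flows from the root towards the leaves.
    """
    state = {}
    for joint in top_down_order(parent):
        par = parent[joint]
        inherited = state[par] if par is not None else 0.0
        state[joint] = (1.0 - mix) * features[joint] + mix * inherited
    return state


features = {joint: 0.0 for joint in PARENT}
features["pelvis"] = 1.0  # a signal present only at the root
states = propagate(PARENT, features)
```

In this toy setting a signal injected at the root reaches every descendant, attenuated at each step down the tree; a learned recurrent unit would replace the fixed blend with gated updates.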

1. Introduction

1.1. Motivation and Objective

This paper aims at reconstructing full-body 3D human

poses from a single RGB image. Specifically we want to lo-

calize the human joints in 3D space, as shown in Fig. 1. Es-

timating 3D human pose is a classic task in computer vision

and serves as a key component in many human related prac-

tical applications such as intelligent surveillance, human-

robot interaction, human activity analysis, human attention

recognition,etc. There are some existing works which esti-

mate 3D poses in constrained environment from depth im-

∗

Bruce Xiaohan Nie and Ping Wei are co-first authors.

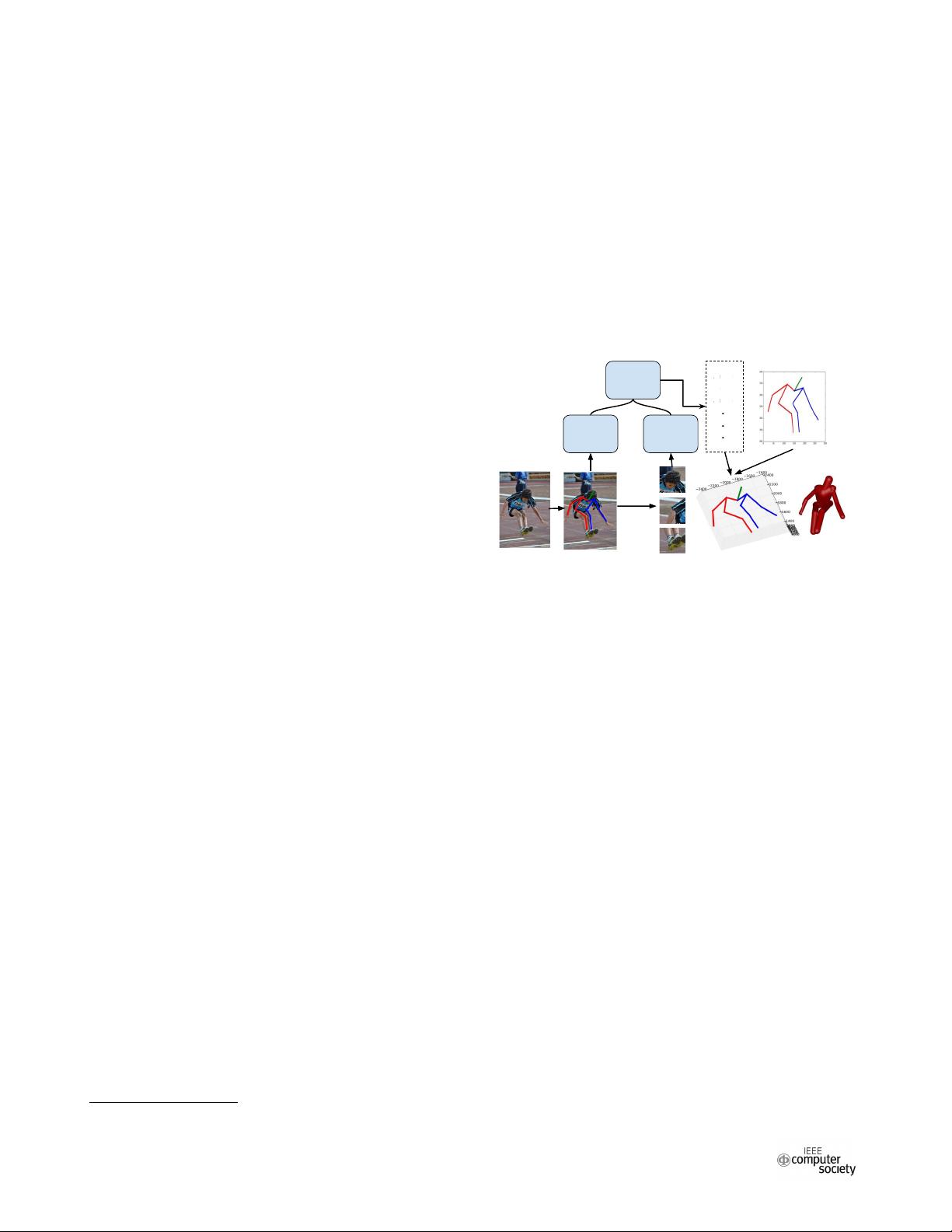

6NHOHWRQ

/670

3DWFK

/670

)LUVWOHYHO

6HFRQG

OHYHO

/670

=KHDG

=HOERZ

=KDQG

=K

Z

Q

Figure 1. Our two-level hierarchy of LSTM for 3D pose estima-

tion. The skeleton-LSTM and patch-LSTM captures information

from global 2D skeleton and local image patches respectively at

the first level. The global and local features are integrated in the

second level to predict the depth on joints. The 3D pose is recov-

ered by attaching depth values onto the 2D pose. We render the

3D pose for better visualization.

ages [40, 26] or RGB images captured simultaneously at

multiple viewpoints [2, 10]. Different from them, we focus

on recognizing 3D pose directly from a single RGB image

which is easier to be captured from general environment.

Estimating 3D human poses from a single RGB image is a challenging problem for two main reasons: 1) the target person in the image typically exhibits large appearance and geometric variation because of different clothes, postures, illumination, camera viewpoints, and so on. The highly articulated human pose also brings about heavy self-occlusions. 2) even if the ground-truth 2D pose is given, recovering the 3D pose is inherently ambiguous, since infinitely many 3D poses project onto the same 2D pose when the depth information is unknown.
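The ambiguity in point 2) can be made concrete with a small numerical sketch. It assumes an ideal pinhole camera with focal length `f` and the principal point at the image origin, which the text above does not specify; the joint names, 2D coordinates, and depth values are invented for illustration. Under that assumption, any positive depth assigned to a 2D joint yields a valid 3D point that projects back to the same 2D location.

```python
def project(point, f=1.0):
    """Pinhole projection of a 3D point (X, Y, Z), Z > 0, to 2D (x, y)."""
    X, Y, Z = point
    return (f * X / Z, f * Y / Z)


def lift(pixel, depth, f=1.0):
    """Back-project a 2D point to 3D once a depth value is attached to it."""
    x, y = pixel
    return (x * depth / f, y * depth / f, depth)


# Made-up 2D pose of one arm in normalized image coordinates.
pose_2d = {
    "shoulder": (0.10, -0.20),
    "elbow": (0.25, -0.05),
    "hand": (0.30, 0.15),
}

# Two different (made-up) depth assignments for the same 2D pose.
depths_a = {"shoulder": 4.0, "elbow": 4.0, "hand": 4.0}  # arm at constant depth
depths_b = {"shoulder": 4.0, "elbow": 3.5, "hand": 3.0}  # arm reaching towards the camera

pose_a = {j: lift(pose_2d[j], depths_a[j]) for j in pose_2d}
pose_b = {j: lift(pose_2d[j], depths_b[j]) for j in pose_2d}

# Both 3D poses reproduce the same 2D pose up to floating-point error.
for joint, (x, y) in pose_2d.items():
    for pose in (pose_a, pose_b):
        px, py = project(pose[joint])
        assert abs(px - x) < 1e-9 and abs(py - y) < 1e-9
```

The two 3D poses differ, yet a single image cannot tell them apart from the 2D joint locations alone; once a depth value per joint is known, `lift` gives a unique 3D position for each joint under the assumed camera model.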

One inspiration of our work is the huge progress in 2D human pose estimation made by recent works based on deep architectures [33, 32, 17, 37, 3]. In those works, the appearance and geometric variation are implicitly modeled by feed-forward computation in networks with a hierarchical deep structure. Self-occlusion is also addressed well by filters from different layers capturing features at different s-

2017 IEEE International Conference on Computer Vision

2380-7504/17 $31.00 © 2017 IEEE

DOI 10.1109/ICCV.2017.373
