[Figure 3 diagram. Labels recovered from the figure: conv5 (512 x H x W); 1x1 conv (shared input-to-hidden transition); 4x copy; recurrent transitions (hidden-to-hidden, equation 1); concat (2048 channels); 1x1 conv + ReLU (512 channels); 1x1 conv (hidden-to-output transition); context features (512 x H x W); deconv to semantic segmentation (optional regularizer, 21 x 16H x 16W).]
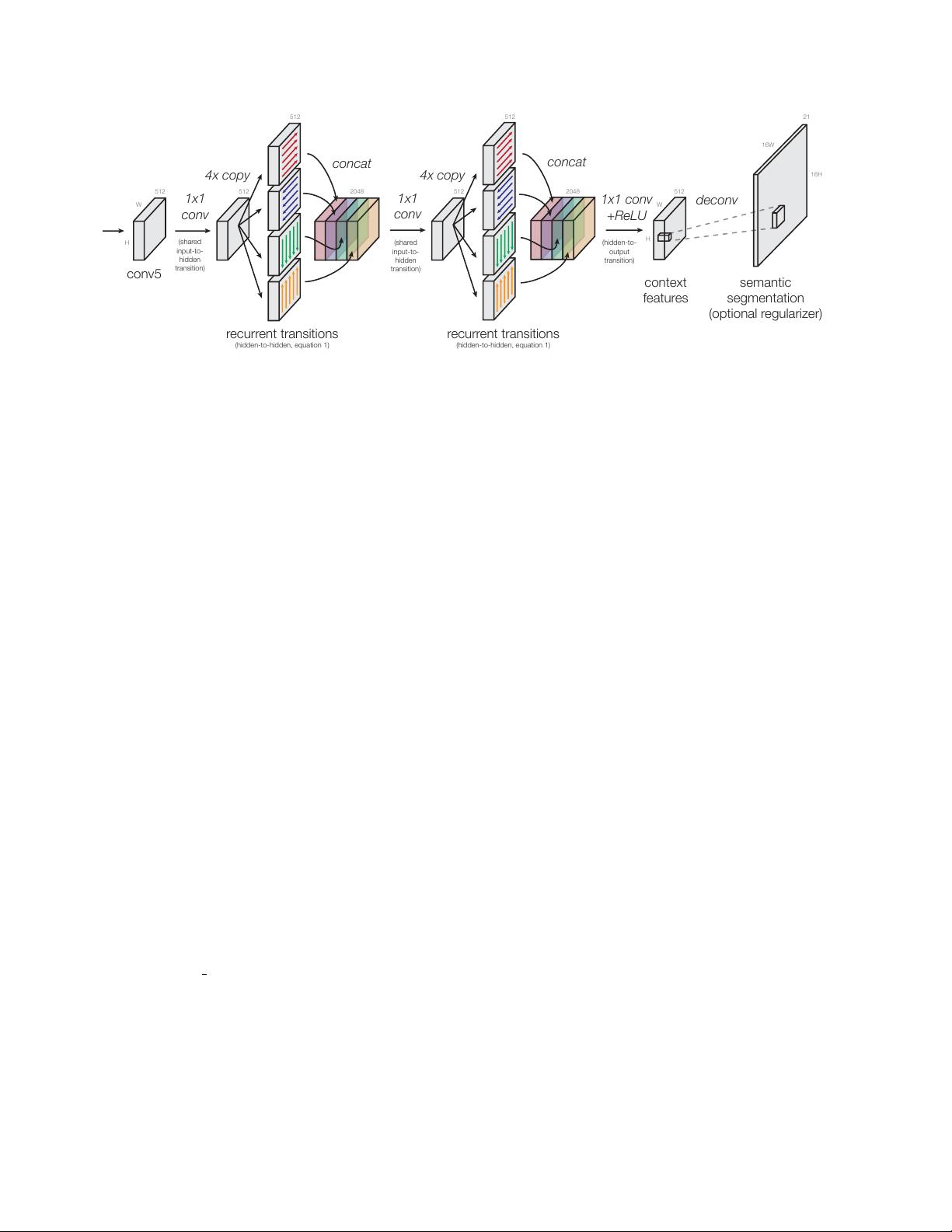

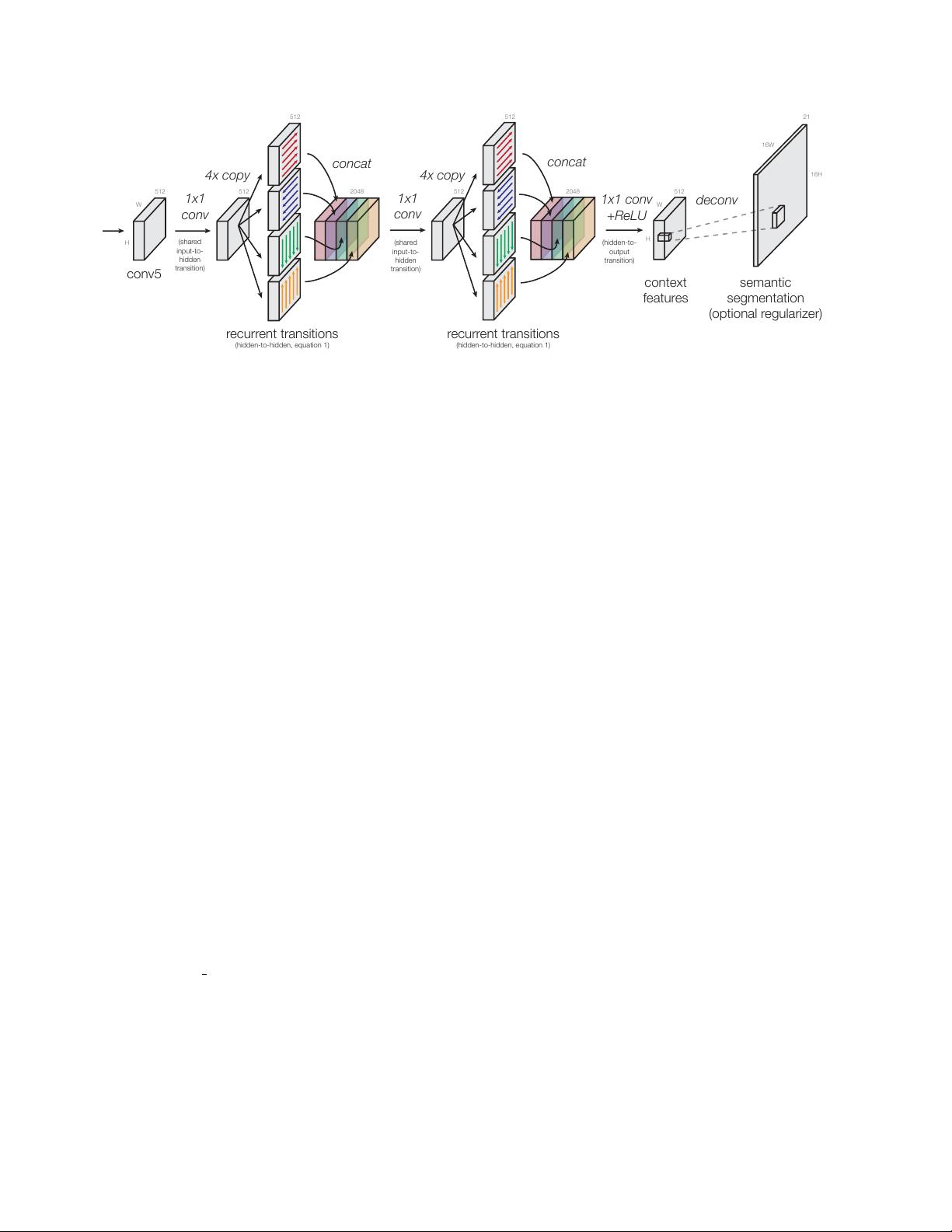

Figure 3. Four-directional IRNN architecture. We use “IRNN” units [21], which are RNNs with ReLU recurrent transitions, initialized to the identity. All transitions to/from the hidden state are computed with 1x1 convolutions, which allows us to compute the recurrence more efficiently (Eq. 1). When computing the context features, the spatial resolution remains the same throughout (same as conv5). The semantic segmentation regularizer has a 16x higher resolution; it is optional and gives a small improvement of around +1 mAP point.

3. Architecture: Inside-Outside Net (ION)

In this section we describe ION, a detector with an improved descriptor both inside and outside the ROI. An image is processed by a single deep ConvNet, and the convolutional feature maps at each stage of the ConvNet are stored in memory. At the top of the network, a 2x stacked 4-directional IRNN (explained later) computes context features that describe the image both globally and locally. The context features have the same dimensions as “conv5.” This is done once per image. In addition, we have thousands of proposal regions (ROIs) that might contain objects. For each ROI, we extract a fixed-length feature descriptor from several layers (“conv3”, “conv4”, “conv5”, and “context features”). The descriptors are L2-normalized, concatenated, re-scaled, and dimension-reduced (1x1 convolution) to produce a fixed-length feature descriptor for each proposal of size 512x7x7. Two fully-connected (FC) layers process each descriptor and produce two outputs: a one-of-K object class prediction (“softmax”), and an adjustment to the proposal region’s bounding box (“bbox”).

The rest of this section explains the details of ION and motivates why we chose this particular architecture.

3.1. Pooling from multiple layers

Recent successful detectors such as Fast R-CNN, Faster R-CNN [30], and SPPnet all pool from the last convolutional layer (“conv5_3”) in VGG16 [35]. In order to extend this to multiple layers, we must consider issues of dimensionality and amplitude.

Since we know that pre-training on ImageNet is important to achieve state-of-the-art performance [1], and we would like to use the previously trained VGG16 network [35], it is important to preserve the existing layer shapes. Therefore, if we want to pool out of more layers, the final feature must also be shape 512x7x7 so that it is the correct shape to feed into the first fully-connected layer (fc6). In addition to matching the original shape, we must also match the original activation amplitudes, so that we can feed our feature into fc6.

To match the required 512x7x7 shape, we concatenate each pooled feature along the channel axis and reduce the dimension with a 1x1 convolution. To match the original amplitudes, we L2-normalize each pooled ROI and re-scale back up by an empirically determined scale. Our experiments use a “scale layer” with a learnable per-channel scale initialized to 1000 (measured on the training set). We later show in Section 5.2 that a fixed scale works just as well.
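The normalize, concatenate, re-scale, and reduce steps can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the L2 norm is assumed to be taken over all entries of each pooled feature, the channel counts (256, 512, 512, 512) assume VGG16 conv3/conv4/conv5 plus 512-channel context features, and the 1x1 convolution weights are random placeholders rather than trained values.

```python
import numpy as np

def l2_normalize(feature, eps=1e-12):
    """Scale a pooled ROI feature of shape (C, 7, 7) to unit L2 norm.

    Assumption: the norm is taken over all entries of the pooled feature.
    """
    return feature / (np.linalg.norm(feature) + eps)

def combine_pooled_features(pooled, scale, weight, bias):
    """Normalize, concatenate, re-scale, and reduce pooled ROI features.

    pooled: list of arrays, each of shape (C_i, 7, 7)
    scale:  per-channel scale, shape (sum of C_i,)
    weight: 1x1 convolution weights, shape (512, sum of C_i)
    bias:   1x1 convolution bias, shape (512,)
    """
    normalized = [l2_normalize(p) for p in pooled]
    stacked = np.concatenate(normalized, axis=0)   # concatenate along channels
    stacked = stacked * scale[:, None, None]       # per-channel re-scaling
    # A 1x1 convolution is a matrix multiply over channels at every location.
    reduced = np.einsum("oc,chw->ohw", weight, stacked)
    return reduced + bias[:, None, None]

rng = np.random.default_rng(0)
channels = {"conv3": 256, "conv4": 512, "conv5": 512, "context": 512}
pooled = [rng.standard_normal((c, 7, 7)) for c in channels.values()]
total = sum(channels.values())

scale = np.full(total, 1000.0)                      # initial scale from the text
weight = rng.standard_normal((512, total)) * 0.01   # placeholder weights
bias = np.zeros(512)

descriptor = combine_pooled_features(pooled, scale, weight, bias)
print(descriptor.shape)  # (512, 7, 7)
```

Whatever the number of layers pooled, the output keeps the 512x7x7 shape that fc6 expects; only the input width of the 1x1 convolution changes.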

As a final note, as more features are concatenated together, we need to correspondingly decrease the initial weight magnitudes of the 1x1 convolution, so we use “Xavier” initialization [36].
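To see why a fan-dependent initialization scales the weights down as layers are added, the sketch below evaluates the Glorot and Bengio uniform bound, sqrt(6 / (fan_in + fan_out)), for a 1x1 convolution with 512 outputs. The helper name is hypothetical, the channel counts assume VGG16, and frameworks differ in the exact variant they call "Xavier" (some use fan-in only), so the numbers are illustrative rather than the values used in the experiments.

```python
import math

def xavier_uniform_limit(fan_in, fan_out):
    """Bound a of the uniform range U(-a, a) in Glorot and Bengio's scheme."""
    return math.sqrt(6.0 / (fan_in + fan_out))

# For a 1x1 convolution, fan_in equals the number of concatenated input channels.
configurations = {
    "conv5": 512,
    "conv4 + conv5": 1024,
    "conv3 + conv4 + conv5": 1280,
    "conv3 + conv4 + conv5 + context": 1792,
}
limits = {name: xavier_uniform_limit(fan_in, 512)
          for name, fan_in in configurations.items()}

for name, limit in limits.items():
    print(f"{name}: weights drawn from U(-{limit:.4f}, {limit:.4f})")
```

The bound shrinks monotonically as the concatenated input grows, which keeps the variance of the 1x1 convolution's output roughly stable at initialization.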

3.2. Context features with IRNNs

Our architecture for computing context features in ION is shown in more detail in Figure 3. On top of the last convolutional layer (conv5), we place RNNs that move laterally across the image. Traditionally, an RNN moves left-to-right along a sequence, consuming an input at every step, updating its hidden state, and producing an output. We extend this to two dimensions by placing an RNN along each row and along each column of the image. We have four RNNs in total that move in the cardinal directions: right, left, down, up. The RNNs sit on top of conv5 and produce an output with the same shape as conv5.
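The four directional sweeps can be sketched in NumPy as below. This is a simplified illustration, not the paper's implementation: it assumes a plain ReLU recurrence of the form h_t = max(W_hh h_{t-1} + x_t, 0) with W_hh initialized to the identity (as the Figure 3 caption describes for IRNN units), it takes the input-to-hidden projection as already applied to `x`, and it returns the four direction maps concatenated along channels. The function names and the small tensor sizes are hypothetical; a further 1x1 convolution would be needed to bring the result back to the channel count of conv5.

```python
import numpy as np

def sweep_right(x, w_hh):
    """Run a ReLU RNN left-to-right along every row of x at once.

    x:    input-to-hidden projections, shape (C, H, W)
    w_hh: hidden-to-hidden matrix, shape (C, C)
    """
    c, h, w = x.shape
    out = np.empty((c, h, w), dtype=x.dtype)
    prev = np.zeros((c, h), dtype=x.dtype)      # one hidden state per row
    for j in range(w):
        prev = np.maximum(w_hh @ prev + x[:, :, j], 0.0)
        out[:, :, j] = prev
    return out

def four_direction_irnn(x, weights):
    """Sweep right, left, down, and up; concatenate the results on channels."""
    right = sweep_right(x, weights["right"])
    left = sweep_right(x[:, :, ::-1], weights["left"])[:, :, ::-1]
    xt = x.transpose(0, 2, 1)                   # columns become rows
    down = sweep_right(xt, weights["down"]).transpose(0, 2, 1)
    up = sweep_right(xt[:, :, ::-1], weights["up"])[:, :, ::-1].transpose(0, 2, 1)
    return np.concatenate([right, left, down, up], axis=0)

C, H, W = 8, 5, 6                               # small sizes for illustration
rng = np.random.default_rng(0)
x = np.abs(rng.standard_normal((C, H, W)))      # non-negative, like ReLU outputs
weights = {d: np.eye(C) for d in ("right", "left", "down", "up")}

context = four_direction_irnn(x, weights)
print(context.shape)  # (32, 5, 6)
```

With identity recurrent weights and non-negative inputs, each sweep reduces to a running sum along its direction, so every output cell aggregates everything the RNN has passed over on its way across the image.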

There are many possible forms of recurrent neural networks that we could use: gated recurrent units (GRU) [4], long short-term memory (LSTM) [14], and plain tanh recurrent neural networks. In this paper, we explore RNNs composed of rectified linear units (ReLU). Le et al. [21]
