Binarized Mode Seeking for Scalable Visual Pattern Discovery

Wei Zhang

1

, Xiaochun Cao

1,2∗

, Rui Wang

1

, Yuanfang Guo

1

, Zhineng Chen

3

1: SKLOIS, Institute of Information Engineering, Chinese Academy of Sciences

2: School of Cyber Security, University of Chinese Academy of Sciences

3: Institute of Automation, Chinese Academy of Sciences

{wzhang, caoxiaochun, wangrui, guoyuanfang}@iie.ac.cn, zhineng.chen@ia.ac.cn

Abstract

This paper studies visual pattern discovery in large-scale

image collections via binarized mode seeking, where im-

ages can only be represented as binary codes for efficien-

t storage and computation. We address this problem from

the perspective of binary space mode seeking. First, a bi-

nary mean shift (bMS) is proposed to discover frequen-

t patterns via mode seeking directly in binary space. The

binomial-based kernel and binary constraint are introduced

for binarized analysis. Second, we further extend bMS to

a more general form, namely contrastive binary mean shift

(cbMS), which maximizes the contrastive density in bina-

ry space, for finding informative patterns that are both fre-

quent and discriminative for the dataset. With the binarized

algorithm and optimization, our methods demonstrate sig-

nificant computation (50×) and storage (32×) improvement

compared to standard techniques operating in Euclidean s-

pace, while the performance does not largely degenerate.

Furthermore, cbMS discovers more informative patterns

by suppressing low discriminative modes. We evaluate our

methods on both annotated ILSVRC (1M images) and un-

annotated blind Flickr (10M images) datasets with million

scale images, which demonstrates both the scalability and

effectiveness of our algorithms for discovering frequent and

informative patterns in large scale collection.

1. Introduction

Pattern discovery is one of the most fundamental prob-

lems in computer vision and pattern analysis. Given a large-

scale un-ordered image collection (e.g., images crawled

from an anonymous website), the first question is to ask

“What kind of images are in the dataset? What’s the differ-

ence with other ‘common’ datasets?” Visual pattern discov-

ery aims to automatically find dominant items in an unsu-

pervised setting. A lot of researchers have studied this prob-

∗

Corresponding author.

10110100001011

10100011101010

00101100110111

00110110101010

00111111010101

10111000110010

00111011101000

......

10101101001010

10101101001010

00101010101000

......

(a) large image sets

(b) binary codes (c) modes

(d) patterns

bMS

cbMS

10110100001011

10100011101010

00101100110111

00110110101010

00111111010101

00111011101000

......

10101101011010

00101010101000

00101011101000

......

targetset

contrastiveset

frequentpatterns

informativepatterns

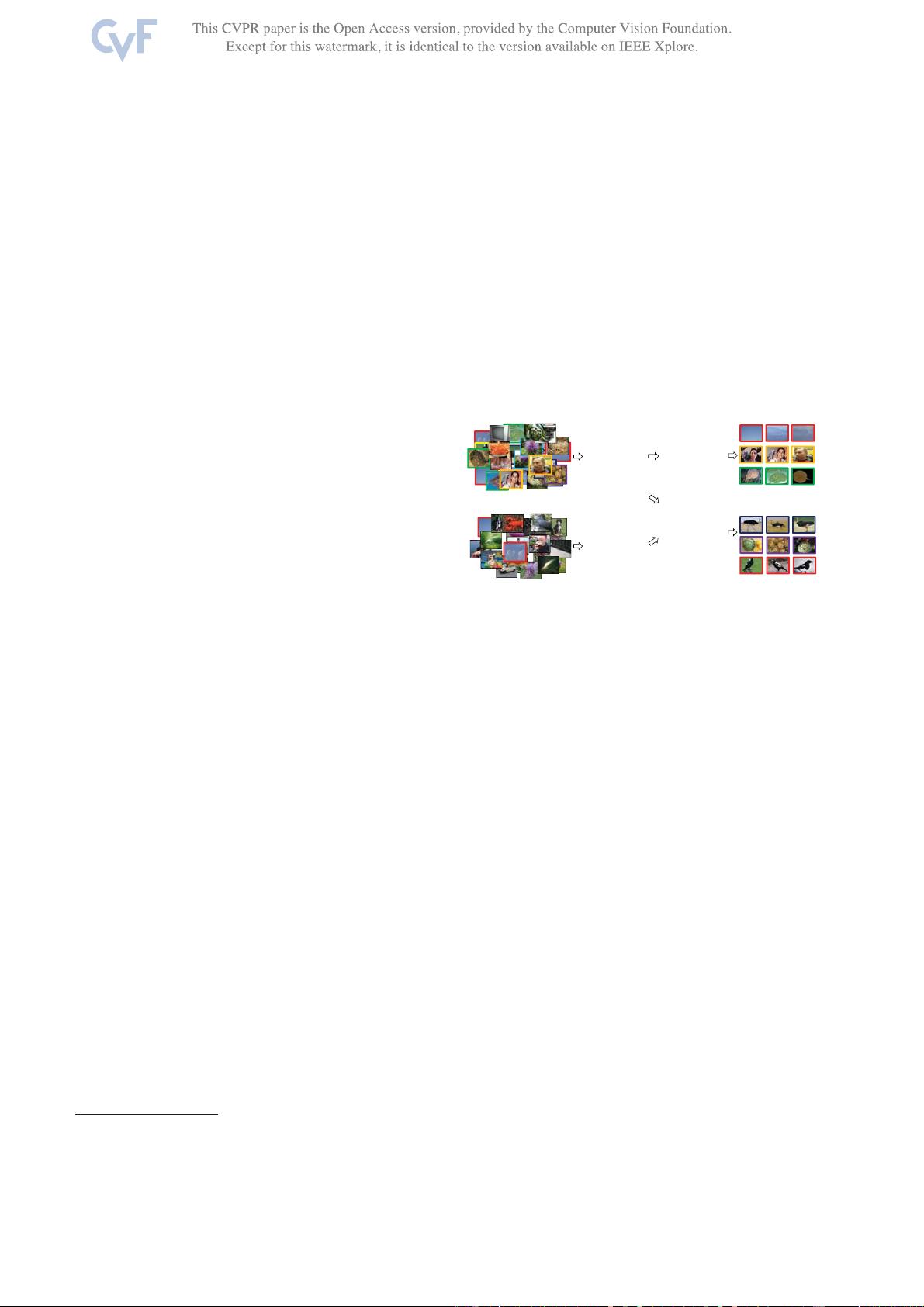

Figure 1. Given a large collection of images (a), our goal is to

discover patterns (d) by seeking modes (c) in the binary space (b).

Our bMS seeks frequent patterns from the target set, while cbMS

discovers more informative patterns by referencing an additional

contrastive set.

lem due to its wide applications in various vision tasks such

as classification [

7], retrieval [27], summarization [32]. In

the context of big data, visual pattern discovery is becoming

even more important, as it provides an efficient way charac-

terizing a large image collection. Particularly, this problem

is even important as the data explodes in photo sharing web-

sites, such as Instagram, Flickr, Imgur.

Among the techniques for pattern discovery, cluster anal-

ysis serves as the core part. By projecting data into the fea-

ture space, patterns are closely related to the high density

regions. Previous techniques are mostly based on cluster-

ing directly in Euclidean space. However, there are still two

fundamental problems seldomly addressed.

One problem is the scalability issue. It is quite inefficien-

t to cluster large datasets in Euclidean space. First, large

amount of real-value arithmetic operations is involved dur-

ing distance evaluation, which makes it impossible for an-

alyzing large dataset. Second, it is also difficult to keep all

the data in memory for large datasets. For example, keeping

one million real value vectors, say R

4,096

- FC7 activation-

s of AlexNet[

11], takes 30GB memory, not to mention the

1

3864