SCHUHMACHER et al.: A CONSISTENT METRIC FOR PERFORMANCE EVALUATION OF MULTI-OBJECT FILTERS 3449

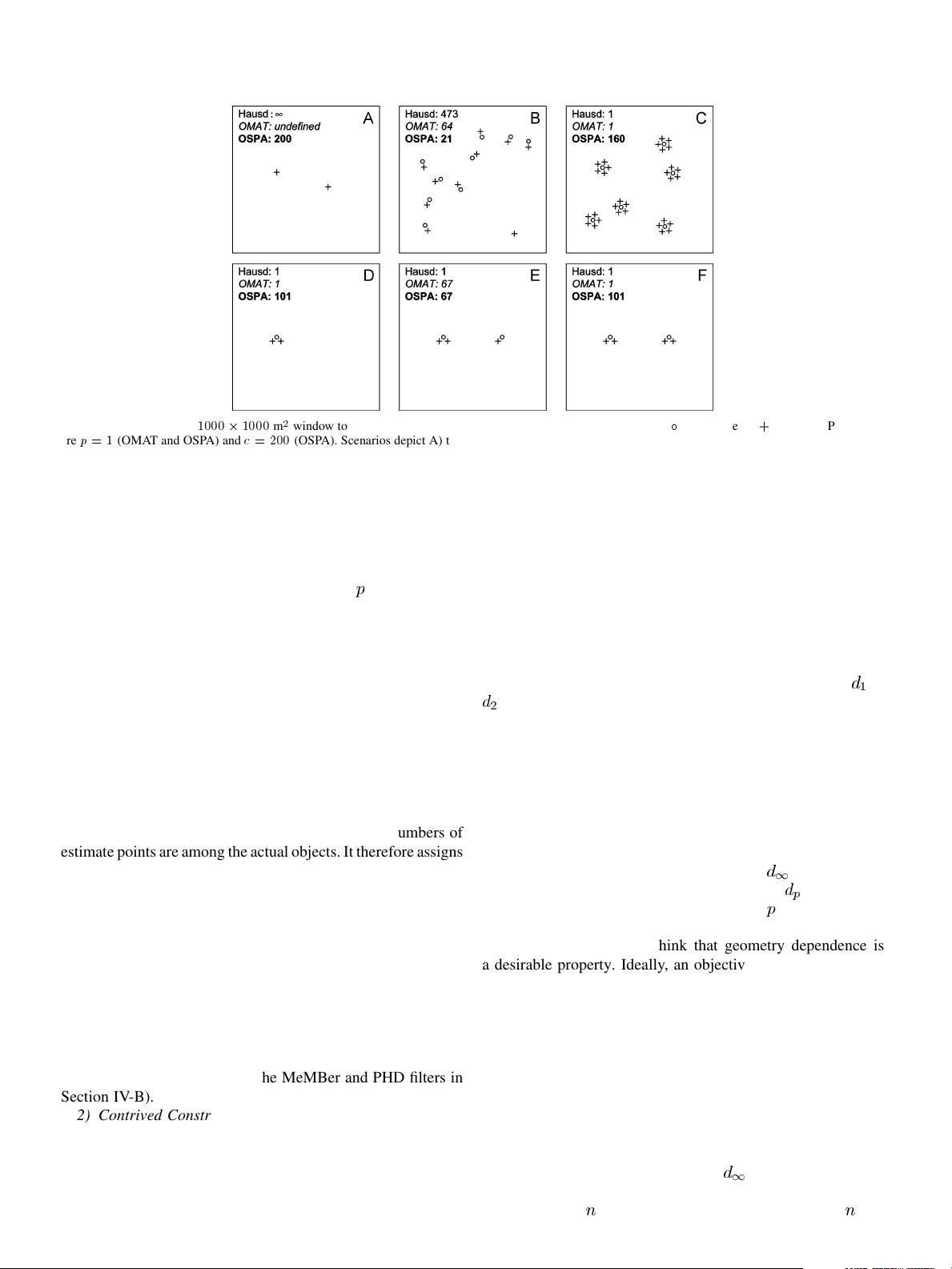

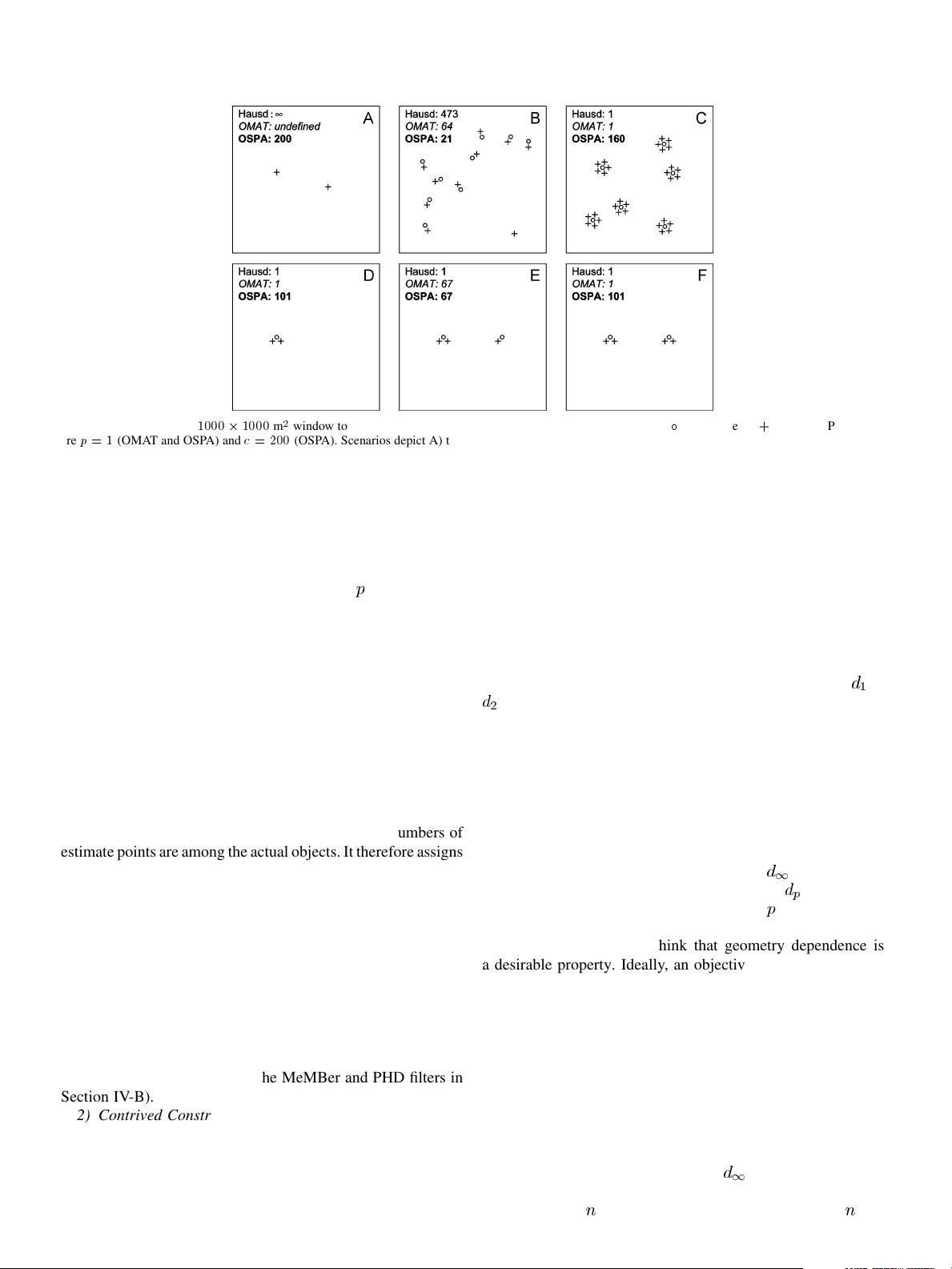

Fig. 2. Six scenarios in a 1000 m × 1000 m window to illustrate the strengths and weaknesses of the various metrics. Actual objects and estimates (+) are shown with distinct markers. Parameters are $p = 1$ (OMAT and OSPA) and $c = 200$ (OSPA). Scenarios depict A) two false estimates; B) an outlier false estimate among several accurate estimates; C) multiple estimates per object; D–F) a comparison of balanced and unbalanced allocations of estimates to objects. Note that Scenarios B–F are an artistic impression rather than an exact rendering of the situation in the sense that the smallest distances have been considerably inflated for better viewing (from 1 meter, which was assumed in the computations, to 40 meters in the scale of the pictures).

The merits of the OMAT metric are that it partly fixes the

undesirable cardinality behaviour of the Hausdorff metric (see

Scenario E of Fig. 2) and that it can cope with the outlier

problem by the introduction of the parameter $p$ (Scenario B of

Fig. 2). Regarding this second point, it should be mentioned

that there are also generalizations of the Hausdorff metric that

avoid this problem [16].

On the downside, the OMAT metric entails a host of problems; the most important of these are outlined as follows:

1) Inconsistency of the Metric: Consider Scenarios D–F in

Fig. 2. Each of these examples involves a cardinality error, and

from an intuitive point of view we would say that the estimation

errors are roughly equal, but that E is probably a bit better than

F and (arguably) somewhat better than D. While the Hausdorff

distance is the same in all three examples (undesirably small due

to the cardinality problem, but at least consistent), the OMAT

metric actually depends on how well balanced the numbers of

estimate points are among the actual objects. It therefore assigns

a much larger distance in Scenario E than in the other two cases,

and thus ranks the scenario that we intuitively prefer (E) as by

far the worst among the three.

This series of examples further reveals that the OMAT metric

is not always better than the Hausdorff metric at detecting

different cardinalities. This becomes even more obvious in

Scenario C of Fig. 2, where the cardinality of the estimated

point pattern is quite far from the truth. However, since the

estimates are perfectly balanced among the ground truth objects, the OMAT metric does not detect this. While the scenario depicted is certainly an extreme one, it is in essence not unrealistic (see our comparison of the MeMBer and PHD filters in

Section IV-B).
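The dependence on balance can be reproduced numerically. The following is a minimal sketch, not the computation behind Fig. 2: it assumes the usual definition of the OMAT distance as the $p$-th order Wasserstein distance between the uniform distributions on the two point sets, evaluates it by brute force (feasible only for very small sets), and uses hypothetical coordinates that merely imitate a balanced and an unbalanced allocation. The function names `hausdorff` and `omat` are chosen for this illustration.

```python
from itertools import permutations
from math import dist, gcd


def hausdorff(xs, ys):
    """Hausdorff distance between two non-empty finite point sets."""
    forward = max(min(dist(x, y) for y in ys) for x in xs)
    backward = max(min(dist(x, y) for x in xs) for y in ys)
    return max(forward, backward)


def omat(xs, ys, p=1):
    """Brute-force OMAT distance (assumed: p-th order Wasserstein distance
    between the uniform distributions on xs and ys)."""
    m, n = len(xs), len(ys)
    units = m * n // gcd(m, n)  # least common multiple of m and n
    # Split every point into equal sub-masses so that both sides consist of
    # `units` equal masses; optimal transport then becomes an assignment.
    a = [x for x in xs for _ in range(units // m)]
    b = [y for y in ys for _ in range(units // n)]
    best = min(
        sum(dist(u, v) ** p for u, v in zip(a, perm))
        for perm in permutations(b)
    )
    return (best / units) ** (1 / p)


# Hypothetical configurations (not the coordinates used in Fig. 2).
truth = [(0, 0), (100, 0)]
balanced = [(0, 1), (0, -1), (100, 1), (100, -1)]  # two estimates per object
unbalanced = [(0, 1), (0, -1), (100, 1)]           # two estimates vs. one

for name, est in [("balanced", balanced), ("unbalanced", unbalanced)]:
    print(name, hausdorff(truth, est), round(omat(truth, est, p=1), 2))
```

In this sketch both configurations have Hausdorff distance 1, whereas the OMAT distance for $p = 1$ is 1 in the balanced case (the cardinality error goes unnoticed) and roughly 17.5 in the unbalanced case, because one sixth of the total mass has to travel across the 100 m gap.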

2) Contrived Construction for Differing Cardinalities: Decomposing individual objects into small parts does not seem

very attractive from an intuitive point of view and often makes

the resulting distances hard to interpret. While visual perception

sometimes tells us that a reasonable matching of estimated objects to ground truths would involve splitting up of unit masses

(e.g., Scenario E in Fig. 2, where we would naturally match the

two estimates on the left with the one ground truth object next to

them), the OMAT metric does not cater for such natural structure

(and it is in fact hard for any metric to do so). Instead, it tends to

assign partial masses between the two sets of objects in a complicated way, which may be difficult to comprehend for a human observer. For example, the Hausdorff distance in Scenario B is

clearly the distance from the isolated filter estimate to its closest

ground truth object, whereas the optimal mass transfer for $p = 1$ or $p = 2$ is not so obvious.

3) Geometry Dependent Behaviour: In [14], Hoffman and

Mahler describe what they call the geometry dependence of the

OMAT metric, stating that a multi-object filter should be more

heavily penalized for misestimating cardinality when the objects

are far apart than when they are closely spaced. The rationale behind this statement is that it is harder to estimate the number of

objects when they are closely spaced. Under the assumption that

a multi-object filter consistently misestimates the number of objects, the authors argue that the magnitude of the OMAT distance for $p = \infty$ approximately equals the diameter of the ground truth (and that the OMAT distance shows a similar dependence on the diameter for smaller $p$, albeit to a lesser degree).
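A small worked example may make this diameter dependence concrete. It is an illustration only, assuming the usual definition of the OMAT distance as the $p$-th order Wasserstein distance between the uniform distributions on the two point sets; the symbols $d_p$, $x_1$, $x_2$ and $D$ are notation chosen for this sketch. Take a ground truth $X = \{x_1, x_2\}$ with $\|x_1 - x_2\| = D$ and a single estimate $Y = \{x_1\}$. Each object carries mass $1/2$ and the estimate carries mass $1$, so the only feasible transfer moves mass $1/2$ over distance $0$ and mass $1/2$ over distance $D$:

$$
d_p(X, Y) = \left( \tfrac{1}{2} \cdot 0^p + \tfrac{1}{2} \cdot D^p \right)^{1/p} = 2^{-1/p} D,
\qquad
d_\infty(X, Y) = \lim_{p \to \infty} 2^{-1/p} D = D .
$$

Under these assumptions the distance equals the diameter $D$ of the ground truth for $p = \infty$ and a fixed fraction of it for finite $p$ (for instance $D/2$ for $p = 1$), whatever the actual localization accuracy.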

In contrast, we do not think that geometry dependence is

a desirable property. Ideally, an objective performance metric

should depend on as few specific features of the considered

test case as possible in order to allow direct comparisons between different scenarios (or different stages within the same

scenario). The need for reinterpretation of the OMAT distances

according to the geometry of the ground truth as described in

[14] means that we have to transform these distances in order to

arrive at error quantities that are comparable with one another.

Such quantities, however, do not in general satisfy the metric

axioms any more and other desirable properties of the OMAT

metric might be lost as well.

Moreover, the stated dependence of the OMAT distance on the diameter of the ground truth is a strong simplification. Consider for example a ground truth of $n$ objects that are arranged as a regular $n$-gon