TANG AND WU: SCENE TEXT DETECTION AND SEGMENTATION BASED ON CASCADED CNN 1511

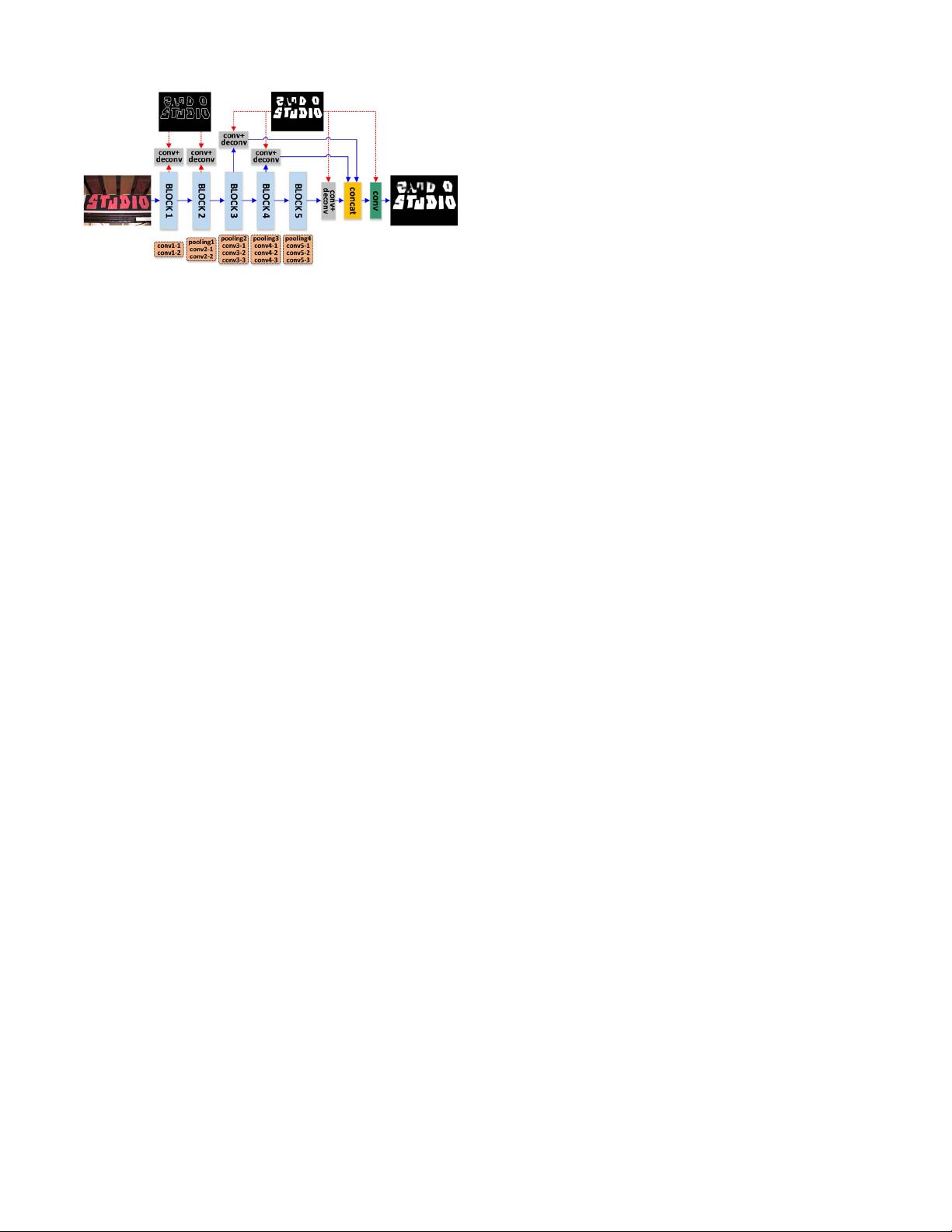

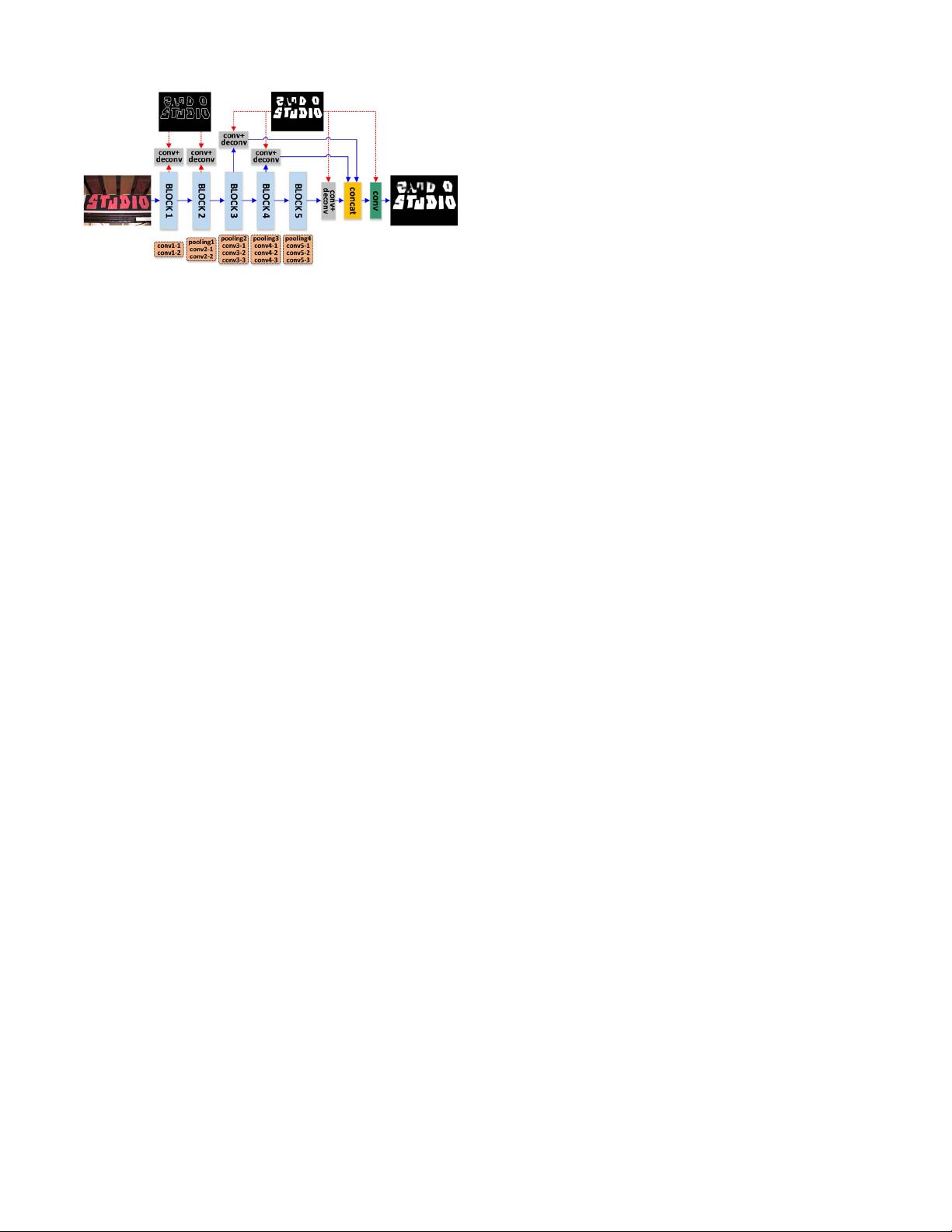

Fig. 3. The architecture of DNet.

To make DNet focus on text regions, information that reflects the properties of text is used as supervisory information to train the CNN model. The shape of text regions is one of the most important cues for distinguishing text from background, and it can be represented both by the edges of the text and by its whole regions. A CNN learns local features in its shallow layers and increasingly global features in its deep layers. For text, the edges can be considered local information and the regions global information. Therefore, in this work, the edges and regions of text are used as the supervisory information for the shallow and the deep layers of the CNN, respectively.

To obtain an accurate saliency prediction, the CNN should be deep and have multi-scale stages with different strides, so that it can learn discriminative, multi-scale features for each pixel. Training such a deep network from scratch is difficult when the number of available training samples is insufficient. This work therefore follows [53] and fine-tunes VGGNet-16 [41] pre-trained on the ImageNet dataset. VGGNet-16 has six blocks: the first five contain convolutional and pooling layers, and the last contains a pooling layer and fully connected layers. We remove the last block for two reasons: its pooling operation shrinks the feature maps too much (to about 1/32 of the input image size) to obtain a fine, full-size prediction, and its fully connected layers are time- and memory-consuming. DNet is built on the first five blocks of VGGNet-16, as shown in Fig. 3. These five blocks contain thirteen convolutional layers and four max-pooling layers, and the numbers of convolution kernels in the five blocks are 64, 128, 256, 512 and 512, respectively. All convolutional layers use 3 × 3 kernels with a stride and padding of 1 pixel, and all max-pooling layers use a 2 × 2 window with a stride of 2 pixels.
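The layer configuration above can be checked with a short sketch that traces the spatial size and stride at the last convolutional layer of each block. It assumes the standard VGGNet-16 split of 2, 2, 3, 3 and 3 convolutional layers per block (not stated in this section) and a max-pooling layer after each of the first four blocks; `BLOCKS`, `trace_backbone` and the 512 × 512 input are hypothetical and purely illustrative.

```python
# Hypothetical description of the DNet backbone: the first five VGGNet-16
# blocks, with the final pooling layer and the fully connected layers removed.
# Per-block conv counts follow standard VGGNet-16 (an assumption of this sketch).
BLOCKS = [
    # (3x3 conv layers, output channels, followed by 2x2 max-pooling?)
    (2, 64, True),
    (2, 128, True),
    (3, 256, True),
    (3, 512, True),
    (3, 512, False),  # no pooling: the last VGGNet-16 pooling layer is removed
]


def conv_out(size, kernel=3, stride=1, pad=1):
    """Output size of a convolution; 3x3 with stride 1 and padding 1 keeps the size."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output size of a 2x2 max-pooling with stride 2 (halves the size)."""
    return (size - kernel) // stride + 1


def trace_backbone(height, width):
    """Return (channels, height, width, stride) at the last conv layer of each block."""
    h, w, stride = height, width, 1
    outputs = []
    for n_conv, channels, pooled in BLOCKS:
        for _ in range(n_conv):
            h, w = conv_out(h), conv_out(w)
        outputs.append((channels, h, w, stride))
        if pooled:
            h, w = pool_out(h), pool_out(w)
            stride *= 2
    return outputs


for block, shape in enumerate(trace_backbone(512, 512), start=1):
    print(block, shape)
```

For a 512 × 512 input, the five blocks end at strides 1, 2, 4, 8 and 16. Keeping the removed pooling layer would have pushed the deepest map to stride 32, i.e. about 1/32 of the input size, which is the resolution loss the text avoids.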

Shallow CNN layers usually learn local features, especially edges, and natural scene images contain many kinds of edges belonging to both text and background. Therefore, only the edges of text are used as supervisory information for the shallow layers, so that the model pays more attention to text edges during early feature learning. Deep layers usually learn global object features, so the text regions are used as supervisory information for the deep layers in order to learn more discriminative global features that represent the properties of text. In this way, from shallow to deep layers, the whole of DNet focuses on learning text features. We investigated which layers should be supervised by text edges and which by text regions, and found that the best performance is obtained when the first two blocks are supervised by text edges and the last three blocks by text regions. He et al. [35] also used text regions as supervisory information for model training, but only in a single convolutional layer. In the proposed DNet model (Fig. 3), text regions are used as supervisory information in four convolutional layers, and another important cue, the edges of text, is also used as supervisory information in the shallow layers, which makes the model pay more attention to text edges during early feature learning.
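The block-to-target assignment described above can be sketched as follows. This section does not say how the edge labels are produced, so the sketch assumes, hypothetically, that the edge ground truth consists of the boundary pixels of the binary text-region mask (text pixels with a 4-neighbour that is background or lies outside the image). The names `region_boundary` and `side_output_targets` are illustrative only.

```python
def region_boundary(mask):
    """Hypothetical edge ground truth: text pixels (value 1) that have at least
    one 4-neighbour which is background or lies outside the image."""
    h, w = len(mask), len(mask[0])
    edges = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if not mask[i][j]:
                continue
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ni, nj = i + di, j + dj
                if not (0 <= ni < h and 0 <= nj < w) or not mask[ni][nj]:
                    edges[i][j] = 1
                    break
    return edges


# Blocks 1-2 (shallow) are supervised by text edges, blocks 3-5 (deep) by text regions.
EDGE_BLOCKS = (1, 2)
REGION_BLOCKS = (3, 4, 5)


def side_output_targets(region_mask):
    """Map each block's side-output index to its supervisory ground truth."""
    edge_mask = region_boundary(region_mask)
    targets = {m: edge_mask for m in EDGE_BLOCKS}
    targets.update({m: region_mask for m in REGION_BLOCKS})
    return targets
```

For a solid 3 × 3 text block, the edge target keeps the eight outer pixels and drops the centre, while the deep blocks see the full region.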

To introduce the supervisory information into the CNN, a side-output is generated from the last convolutional layer of each block, as done in [53], using an additional convolutional layer and an additional deconvolutional layer. The additional convolutional layer, with a 1 × 1 kernel, converts the feature maps into a probability map, and the additional deconvolutional layer upsamples this probability map to the size of the input image. To make the final probability map robust to variations in text size, the side-outputs of the last three blocks are fused by concatenating them along the channel dimension and applying a 1 × 1 convolution that converts the concatenated maps into the final probability map. In fact, convolution with a 1 × 1 kernel is a weighted fusion: each output pixel is a learned weighted sum of the values at the same location across the input channels. The side-outputs of the first two blocks are excluded from the fusion, because we aim to capture global information about text regions for text-aware saliency prediction, and our experiments showed that adding them to the fusion does not improve performance. This completes the architecture of DNet, as shown in Fig. 3. Each additional deconvolutional layer and the final fusion convolutional layer are followed by a sigmoid activation function.
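The side-output and fusion steps can be sketched in plain Python on small nested lists, with feature maps stored channel-major as `[C][H][W]`. This is an illustration under stated assumptions, not the authors' implementation: the learned deconvolution is replaced by nearest-neighbour upsampling with a fixed integer factor, the fusion is applied to the three side-output probability maps, and all weights are hypothetical values rather than trained parameters.

```python
import math


def conv1x1(feature_maps, weights, bias=0.0):
    """1x1 convolution to one output channel: at every pixel, a weighted sum
    of the values across the input channels (maps stored as [C][H][W])."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    return [[bias + sum(wc * fm[i][j] for wc, fm in zip(weights, feature_maps))
             for j in range(w)] for i in range(h)]


def upsample_nearest(x, factor):
    """Stand-in for the learned deconvolution (assumption): repeat each pixel
    factor x factor times so the map reaches the input image size."""
    return [[v for v in row for _c in range(factor)]
            for row in x for _r in range(factor)]


def sigmoid_map(x):
    """Element-wise sigmoid, mapping scores to probabilities in (0, 1)."""
    return [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in x]


def side_output(feature_maps, weights, stride):
    """Side-output: 1x1 conv -> upsample by the block's stride -> sigmoid."""
    return sigmoid_map(upsample_nearest(conv1x1(feature_maps, weights), stride))


def fuse(side_outputs, fusion_weights, bias=0.0):
    """Concatenate side-outputs along the channel axis (a list of maps),
    then apply a 1x1 conv (weighted fusion) and a sigmoid."""
    return sigmoid_map(conv1x1(side_outputs, fusion_weights, bias))
```

Because the fusion weights are learned, the network can decide how much each scale contributes to the final map; with side-outputs taken at strides 4, 8 and 16 from a 16 × 16 input, every side-output and the fused map are all 16 × 16.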

For DNet training, the errors between every side-output and its supervisory ground truth are computed and backpropagated, so a loss function must be defined to compute these errors. In most scene images, the numbers of text and background pixels are heavily imbalanced. Given an image $X$ and its ground truth $Y$, we therefore use the cross-entropy loss defined in [53], which balances the loss between the text and non-text classes:

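$$
l_{\mathrm{side}}^{m}\left(W, w^{m}\right) = -\alpha \sum_{i=1}^{|Y_{+}|} \log P\left(y_i = 1 \mid X; W, w^{m}\right) - (1 - \alpha) \sum_{i=1}^{|Y_{-}|} \log P\left(y_i = 0 \mid X; W, w^{m}\right) \tag{1}
$$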

where $\alpha = |Y_{-}| / \left(|Y_{+}| + |Y_{-}|\right)$, $|Y_{+}|$ and $|Y_{-}|$ denote the numbers of text and non-text pixels in the ground truth, $W$ denotes the parameters of all network layers in the five blocks, $w^{m}$ denotes the weights of the $m$th side-output layer, which comprises a convolutional layer and a deconvolutional layer, and $P\left(y_i = 1 \mid X; W, w^{m}\right) \in [0, 1]$ is computed using