This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination.

HE et al.: CBN FOR CLASSIFICATION OF EEG-BASED MULTICLASS MOTOR IMAGERY BCI 3

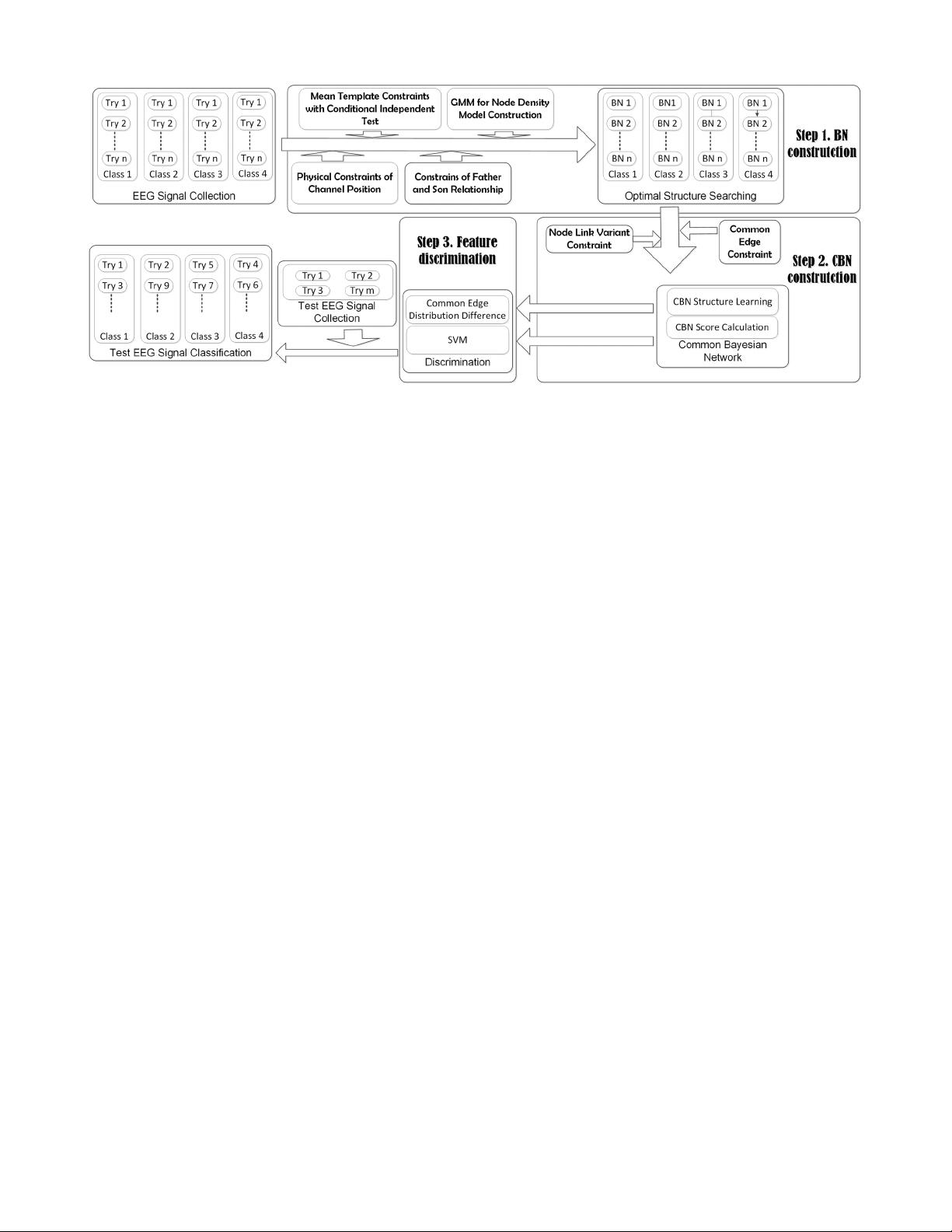

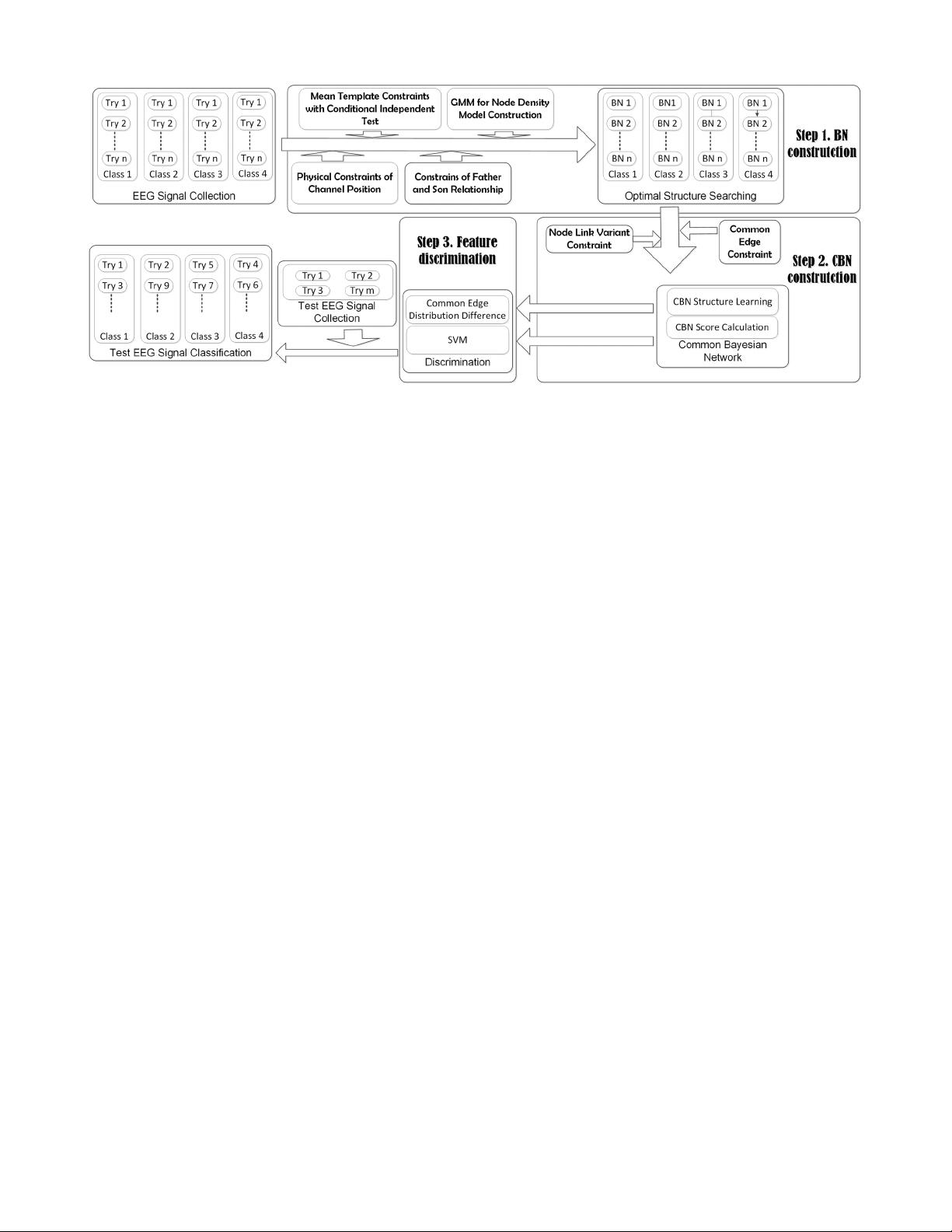

Fig. 2. Framework of the proposed CBN method.

space-time variants are extracted and organized at the same

time. The spatial information is shown in the structure and

time variant is represented in the edge probability. In the last

step, the features are selected based on common edges on the

position with structure variation in different MI tasks. These

features can have more discrimination information than the

edges which have no change among different MI tasks.

The primary novelty of the proposed CBN is a spatio-

temporal statistical information extraction method. First, we

use GMM to calculate temporal statistical information of MI

intrachannel EEG data. Second, the spatial statistical infor-

mation of MI interchannel EEG data is extracted through the

inference probability of constructed common edges in BN.

Therefore, according to statistic theory, if we have enough

data, we can analyze MI with high performance that was not

possible before.

The organization of this paper is as follows. Section II

introduces some basic BN theory and then describes the pro-

posed CBN; experimental results are reported in Section III

and conclusions are drawn in Section IV.

II. C

OMMON BAYESIAN NETWORK

A. Preliminary of Bayesian Network

A BN is represented by a directed acyclic graph. Let

X = (x

1

, x

2

,...,x

n

) be a collection of random variables.

A BN over X is specified by a pair (G;P), where G = (V;E)

is graph structure with node set V = (v

1

, v

2

,...) and edge

set E = (e

1

, e

2

,...). If there is an arc from v

i

to v

j

, i.e.,

(v

i

, v

j

) = e

k

∈ E, we say that v

i

is a parent of v

j

and v

j

is a

child of v

i

. P is the joint distribution. G satisfies the follow-

ing two conditions: 1) node v

i

corresponds to variable x

i

and

2) every variable is conditionally independent of its nonde-

scendants given its parents, i.e., p(x

i

|x

j

, x

k

) = p(x

i

|x

j

) if node

x

k

is node x

j

’s parent.

The learning task in BN can be separated into two subtasks:

structure learning and parameter estimation. The former aims

to identify the most suitable topology and implies a set of con-

ditional independence relations among the variables involved

as long as they are valid. In particular, the graph structure con-

strains the conditional independencies among those variables.

Given a certain causal structure, only some patterns of condi-

tional independence are expected to occur generically among

the variables. Besides independencies, the BN structure can

also be used in certain domains to represent cause and effect

relationships through the edges and their directions. In these

cases, the parent of a node is taken to be the directed cause of

the quantity represented by that node. The parameters define

the conditional probability distributions for a given network

topology. They need to be estimated as accurately as possible.

Existing structure learning approaches follow two basic

strategies: they either look over structures that maximize the

likelihood of the observed data (score-based methods), or they

test for conditional independencies and use these to constrain

the space of possible structures. The former starts by defining a

statistically motivated score that describes the fitness of each

possible structure to the observed data. Such scores include

Bayesian scores [32], [33] and minimum description length

scores [34]. The latter tries to estimate properties of condi-

tional independence among the attributes in the data. This is

usually done via a statistical hypothesis test, e.g., χ

2

test.

In general, finding a structure that maximizes the score

is known as an NP-hard problem [35]–[37]. Although the

constraint satisfaction approach is efficient, it is sensitive to

independence tests. It is commonly believed that an opti-

mization approach is a better tool for structure learning from

data. Most existing learning tools apply such standard heuris-

tic search techniques as greedy hill-climbing and simulated

annealing to find high-scoring structures [33], [38], [39].

Recently, new methods are proposed by formulating a struc-

ture learning problem as an integer linear program and solving

it via a branch-and-cut method [40], [41].

As EEG signals are collected from several channels in dif-

ferent brain areas under a given cognition task, studies on

brain cognition have shown that every cognition task is com-

pleted via the collaboration of many if not all brain areas under

some definite cognition law [42]. It is also shown that different

cognition tasks have different cognition functions which lead