[Figure 2 panel content. Toy corpus: "... This is a supernice superminecraft game. I love the nicesque affirmation of minecraftesque. ..." Stem nodes: minecraft, affirm, nice. Affix nodes: $ation, $esque, super$. TRAINING: super$nice ✓, affirm$esque ✗, nice$esque ✓, minecraft$ation ✗, super$affirm ✗, minecraft$esque ✓. TEST: super$minecraft 0.9, affirm$ation 0.6, nice$ation 0.1.]

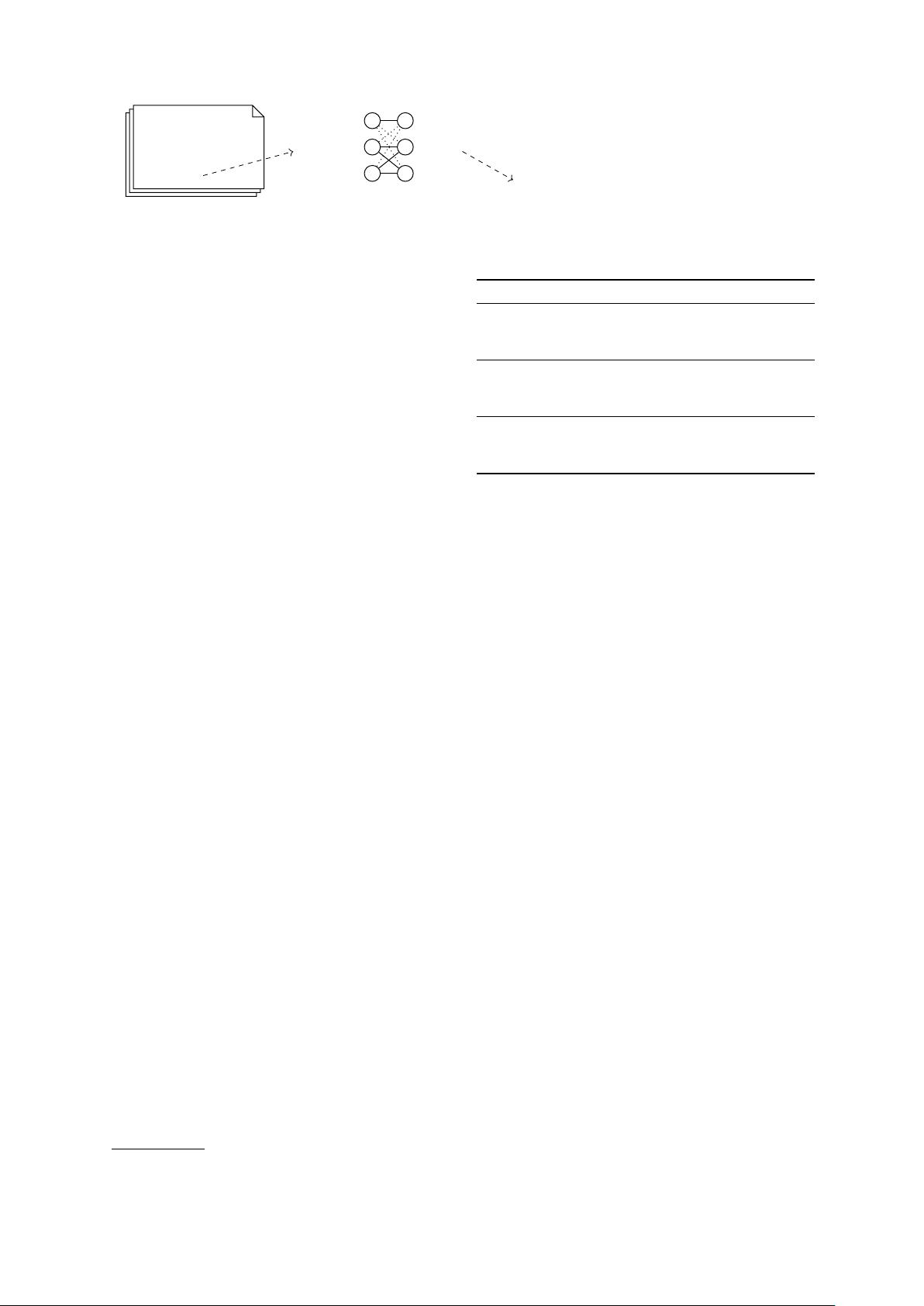

Figure 2: Experimental setup. We extract DGs from Reddit and train link prediction models on them. In the shown toy example, the derivatives super$minecraft and affirm$ation are held out for the test set.
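The toy example in Figure 2 can be written out in a few lines. The following is a minimal sketch, assuming that derivatives are stored as (stem, affix) pairs and that every stem-affix pair absent from the toy text is a candidate negative example. All variable names are illustrative and not taken from the paper.

```python
from itertools import product

# Derivatives attested in the toy text of Figure 2, as (stem, affix) pairs.
attested = {
    ("nice", "super$"),       # supernice
    ("minecraft", "super$"),  # superminecraft
    ("nice", "$esque"),       # nicesque
    ("affirm", "$ation"),     # affirmation
    ("minecraft", "$esque"),  # minecraftesque
}

# Edges held out for the test set, as stated in the Figure 2 caption.
held_out = {("minecraft", "super$"), ("affirm", "$ation")}

stems = sorted({stem for stem, _ in attested})
affixes = sorted({affix for _, affix in attested})

# Positive training edges: attested derivatives that are not held out.
train_pos = attested - held_out

# Candidate negatives (assumption): stem-affix pairs with no attested edge.
unattested = set(product(stems, affixes)) - attested

print(sorted(train_pos))
print(sorted(unattested))
```

A link prediction model trained on `train_pos` and a sample of `unattested` would then be asked to score the held-out pairs.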

ation of a derivative corresponds to a new link between a stem and a derivational pattern in the DG, which in turn reflects the inclusion of a new word into a lexical cluster with a shared derivational pattern in the mental lexicon.

3 Experimental Data

3.1 Corpus

We base our study on data from the social media platform Reddit.² Reddit is divided into so-called subreddits (SRs), smaller communities centered around shared interests. SRs have been shown to exhibit community-specific linguistic properties (del Tredici and Fernández, 2018).

We draw upon the Baumgartner Reddit Corpus, a collection of publicly available comments posted on Reddit since 2005.³ The preprocessing of the data is described in Appendix A.1.

We examine data in the SRs r/cfb (cfb – college football), r/gaming (gam), r/leagueoflegends (lol), r/movies (mov), r/nba (nba), r/nfl (nfl), r/politics (pol), r/science (sci), and r/technology (tec) between 2007 and 2018. These SRs were chosen because they are of comparable size and are among the largest SRs (see Table 1). They reflect three distinct areas of interest, i.e., sports (cfb, nba, nfl), entertainment (gam, lol, mov), and knowledge (pol, sci, tec), thus allowing for a multifaceted view of how topical factors impact MWF: seeing MWF as an emergent property of the mental lexicon entails that communities with different lexica should differ in which derivatives are most likely to be created.

² reddit.com

³ files.pushshift.io/reddit/comments

| SR | \(n_w\) | \(n_t\) | \(\lvert S \rvert\) | \(\lvert A \rvert\) | \(\lvert E \rvert\) |
|---|---:|---:|---:|---:|---:|
| cfb | 475,870,562 | 522,675 | 10,934 | 2,261 | 46,110 |
| nba | 898,483,442 | 801,260 | 13,576 | 3,023 | 64,274 |
| nfl | 911,001,246 | 791,352 | 13,982 | 3,016 | 64,821 |
| gam | 1,119,096,999 | 1,428,149 | 19,306 | 4,519 | 107,126 |
| lol | 1,538,655,464 | 1,444,976 | 18,375 | 4,515 | 104,731 |
| mov | 738,365,964 | 860,263 | 15,740 | 3,614 | 77,925 |
| pol | 2,970,509,554 | 1,576,998 | 24,175 | 6,188 | 143,880 |
| sci | 277,568,720 | 528,223 | 11,267 | 3,323 | 58,290 |
| tec | 505,966,695 | 632,940 | 11,986 | 3,280 | 63,839 |

Table 1: SR statistics. \(n_w\): number of tokens; \(n_t\): number of types; \(|S|\): number of stem nodes; \(|A|\): number of affix group nodes; \(|E|\): number of edges.

3.2 Morphological Segmentation

Many morphologically complex words are not decomposed into their morphemes during cognitive processing (Sonnenstuhl and Huth, 2002). Based on experimental findings in Hay (2001), we segment a morphologically complex word only if the stem has a higher token frequency than the derivative (in a given SR). Segmentation is performed by means of an iterative affix-stripping algorithm introduced in Hofmann et al. (2020) that is based on a representative list of productive prefixes and suffixes in English (Crystal, 1997). The algorithm is sensitive to most morpho-orthographic rules of English (Plag, 2003): when $ness is removed from happi$ness, e.g., the result is happy, not happi. See Appendix A.2 for details.
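The frequency criterion and the stripping loop can be illustrated with a small sketch. This is not the algorithm of Hofmann et al. (2020): the affix lists are tiny placeholders, only one spelling rule (stem-final i back to y) is handled, and applying the frequency check at every stripping step is an assumption made here for illustration. The function names and the token counts are hypothetical.

```python
from collections import Counter

# Tiny illustrative affix lists (assumption); a real inventory is far larger.
PREFIXES = ["super", "un"]
SUFFIXES = ["ness", "esque", "ation"]


def restore_stem(stem: str) -> str:
    """Undo one example spelling change: stem-final 'i' back to 'y'."""
    return stem[:-1] + "y" if stem.endswith("i") else stem


def strip_once(word: str, freq: Counter):
    """Strip one affix if the stem is more frequent than the word, else None."""
    for p in PREFIXES:
        stem = word[len(p):]
        if word.startswith(p) and freq[stem] > freq[word]:
            return p + "$", stem
    for s in SUFFIXES:
        if word.endswith(s):
            stem = restore_stem(word[: -len(s)])
            if freq[stem] > freq[word]:
                return "$" + s, stem
    return None


def segment(word: str, freq: Counter):
    """Iteratively strip affixes; return (stem, affixes in stripping order)."""
    affixes = []
    while (step := strip_once(word, freq)) is not None:
        affix, word = step
        affixes.append(affix)
    return word, affixes


# Hypothetical token counts for one SR.
freq = Counter({"happy": 50, "happiness": 10, "unhappiness": 2,
                "busy": 20, "business": 100})
print(segment("happiness", freq))    # ('happy', ['$ness'])
print(segment("unhappiness", freq))  # ('happy', ['un$', '$ness'])
print(segment("business", freq))     # ('business', [])
```

In the last call, business stays unsegmented because its candidate stem busy is less frequent than the word itself, which is the behavior the frequency criterion is meant to produce.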

The segmented texts are then used to create DGs as described in Section 2.3. All processing is done separately for each SR, i.e., we create a total of nine different DGs. Figure 2 illustrates the general experimental setup of our study.
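As a rough illustration of the per-SR processing, the sketch below groups segmented derivatives by SR and computes the three graph statistics reported in Table 1. It assumes that each DG is an unweighted bipartite graph stored as a set of (stem, affix group) edges. The record format, the function names, and the sample records are hypothetical.

```python
from collections import defaultdict


def build_dgs(records):
    """Group (sr, stem, affix_group) records into one edge set per SR."""
    edges_by_sr = defaultdict(set)
    for sr, stem, affix_group in records:
        edges_by_sr[sr].add((stem, affix_group))
    return dict(edges_by_sr)


def dg_stats(edges):
    """Return (|S|, |A|, |E|) for one DG given its set of edges."""
    stems = {stem for stem, _ in edges}
    affix_groups = {group for _, group in edges}
    return len(stems), len(affix_groups), len(edges)


# Hypothetical segmented derivatives, tagged with the SR they occur in.
records = [
    ("gam", "minecraft", "$esque"),
    ("gam", "nice", "$esque"),
    ("gam", "nice", "super$"),
    ("gam", "nice", "super$"),  # repeated derivative, still a single edge
    ("pol", "affirm", "$ation"),
]

dgs = build_dgs(records)
for sr, edges in sorted(dgs.items()):
    print(sr, dg_stats(edges))
```

Keeping one edge set per SR mirrors the statement that nothing is shared across communities, so each graph can be trained on and evaluated independently.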

4 Models

Let \(W\) be a Bernoulli random variable denoting the property of being morphologically well-formed. We want to model \(P(W \mid d, C_r) = P(W \mid s, a, C_r)\), i.e., the probability that a derivative \(d\) consisting of stem \(s\) and affix group \(a\) is morphologically well-formed according to SR corpus \(C_r\).

Given the established properties of derivational morphology (see Section 2), a good model of \(P(W \mid d, C_r)\) should include both semantics and formal structure,

\[ P(W \mid d, C_r) = P(W \mid m_s, f_s, m_a, f_a, C_r), \tag{1} \]

where \(m_s\), \(f_s\), \(m_a\), \(f_a\) are meaning and form (here