Real-time Depth-Image-Based Rendering on GPU

Zengzeng Sun

SRC-Xian DMC Lab/SSG, Samsung Electronics

Xi’an, China

zengzeng.sun@samsung.com

Cheolkon Jung

School of Electronic Engineering, Xidian University

Xi’an 710071, China

zhengzk@xidian.edu.cn

Abstract—In this paper, we propose real-time depth-image-based rendering (DIBR) on a GPU at 1280×720 resolution. We use depth-adaptive preprocessing and super-resolution to achieve high-quality DIBR, and GPU-based parallel computing to achieve real-time performance. Experimental results demonstrate that the proposed method outperforms existing methods in both rendering quality and computing time.


Keywords- Depth-image-based rendering (DIBR), GPU, hole

filling, parallel computing, real-time.

I. INTRODUCTION

Stereoscopic 3D (S3D) video is regarded as a new medium that provides viewers with a realistic and immersive experience [1]. S3D videos are captured by stereo imaging systems that imitate the human visual system (HVS) and are played on S3D displays such as 3DTV, which present 3D effects to viewers [2]. Because they are recorded by stereoscopic 3D cameras in the same way as 2D videos are recorded, several critical issues arise, such as synchronizing the stereoscopic cameras [3] and storing and transmitting a huge amount of data [4]. In practice, future S3D displays will let viewers navigate through a scene and choose an appropriate viewpoint depending on their position, for a more realistic and immersive experience, which is known as free-viewpoint

television (FTV) [5]. It is of more practical use to use S3D

videos using stereoscopic cameras. The existing 2D videos

should be converted into 3D ones to play on S3D displays.

Thus, recent studies have focused on virtual view synthesis, which generates various intermediate views from a limited number of camera images. Depth-image-based rendering (DIBR) [6] is a well-known virtual view synthesis technique that generates novel views from a 2D reference image and its corresponding depth image. MPEG has issued a coding standard for the video-plus-depth representation, known as MPEG-C Part 3 [7], owing to its compression efficiency for transmission and its compatibility with the existing 2D broadcasting system. DIBR is also a core technology in the advanced three-dimensional television system (ATTEST 3DTV system) [8], whose goals include a 2D-compatible coding and transmission scheme for S3D videos using MPEG-2/4/7 and a flexible and commercially feasible

3DTV system for broadcast environments. For virtual view synthesis, 3D warping is performed to map the pixels of the 2D reference image into the desired virtual view using explicit geometric information, depth maps, and camera viewpoint parameters [9]. However, 3D warping produces obvious holes in the synthesized virtual view, mostly because areas that are occluded in the reference image become visible in the virtual view. The hole problem is inherent in DIBR, and it has a great influence on the perceived visual quality of the generated S3D videos.

This work was supported by the National Natural Science Foundation of China (No. 61271298) and the International S&T Cooperation Program of China (No. 2014DFG12780).
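As a rough illustration of how 3D warping creates holes, the following Python sketch warps a single image row. It rests on simplifying assumptions that are not taken from the paper: the cameras are rectified, so warping reduces to a horizontal shift; depth is 8-bit with 255 as the nearest value; and `max_disparity` is a hypothetical parameter that sets the shift of the nearest pixels. It is a CPU reference sketch, not the GPU implementation proposed here.

```python
HOLE = None  # marker for target pixels that receive no source pixel


def warp_row(colors, depths, max_disparity):
    """Forward-warp one image row to a horizontally shifted virtual view.

    Assumptions (illustrative only): depths are 8-bit values with
    255 = nearest, and disparity grows linearly with the depth value.
    """
    width = len(colors)
    out = [HOLE] * width
    zbuf = [-1] * width  # depth value of the pixel stored at each target
    for x in range(width):
        disparity = round(max_disparity * depths[x] / 255)
        xt = x - disparity  # nearer pixels shift farther to the left
        # Z-buffer test: when two pixels land on one target, the nearer wins.
        if 0 <= xt < width and depths[x] > zbuf[xt]:
            out[xt] = colors[x]
            zbuf[xt] = depths[x]
    return out


colors = [10, 11, 12, 13, 90, 91, 92, 14, 15, 16]
depths = [0, 0, 0, 0, 255, 255, 255, 0, 0, 0]
warped = warp_row(colors, depths, max_disparity=2)
print(warped)  # [10, 11, 90, 91, 92, None, None, 14, 15, 16]
```

In this toy row, the foreground pixels (90 to 92) move two pixels to the left and leave two positions on their right with no source pixel. These are the disocclusion holes that a hole-filling step must handle.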

Various techniques have been proposed for hole-filling [10]-[18]. They fall into two main groups: interpolation-based hole-filling [10]-[14] and inpainting-based hole-filling [15]-[17]. Recently, Solh et al. proposed a hole-filling approach for DIBR called hierarchical hole-filling (HHF), which fills holes using a pyramid-like structure [18]. HHF yields better rendering quality than the other approaches, but it blurs the hole areas around objects because upsampling from lower resolutions introduces blur.
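The pyramid idea behind this kind of hole-filling can be sketched on a single row as follows. This is a deliberately simplified illustration, not the method of [18]: pairwise averaging of non-hole pixels and pixel repetition stand in for the actual reduce and expand kernels, and the names `reduce_row` and `fill_row` are hypothetical.

```python
HOLE = None  # marker for pixels left empty by 3D warping


def reduce_row(row):
    """Halve the resolution, averaging only the non-hole pixels of each pair."""
    out = []
    for i in range(0, len(row), 2):
        valid = [v for v in row[i:i + 2] if v is not HOLE]
        out.append(sum(valid) / len(valid) if valid else HOLE)
    return out


def fill_row(row):
    """Fill holes coarse-to-fine using a pyramid of reduced rows."""
    if HOLE not in row or len(row) == 1:
        return list(row)
    coarse = fill_row(reduce_row(row))  # recurse until the holes vanish
    # Expand by pixel repetition and copy values into hole positions only.
    return [coarse[i // 2] if v is HOLE else v for i, v in enumerate(row)]


row = [10, 12, HOLE, HOLE, HOLE, HOLE, 20, 22]
filled = fill_row(row)
print(filled)  # [10, 12, 11.0, 11.0, 21.0, 21.0, 20, 22]
```

The filled values are averages taken at a coarser level, so the original detail (10, 12 and 20, 22) is replaced by flat values inside the hole. This loss of detail is the blurring effect noted above.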

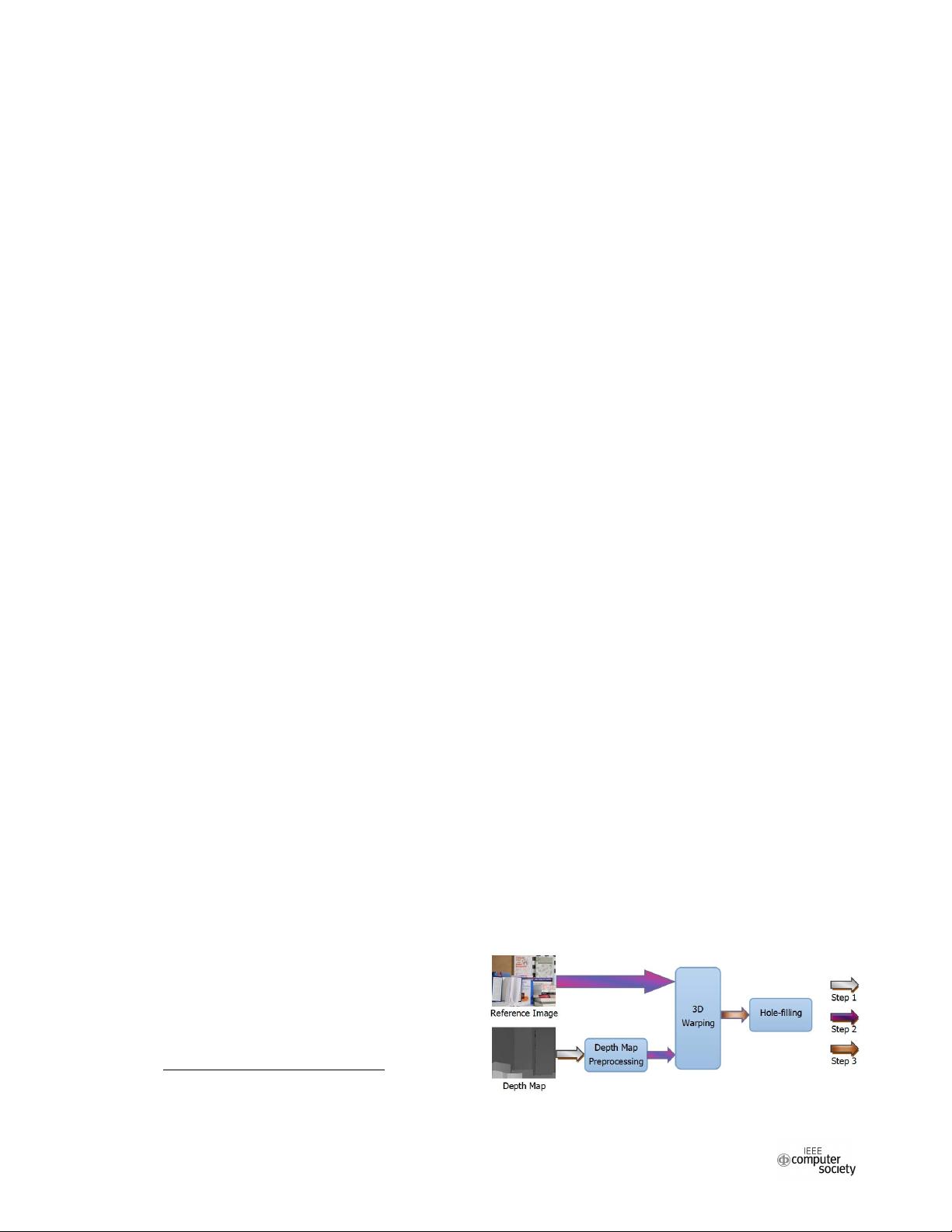

It is noteworthy that Zhang et al. [11] first introduced depth map preprocessing into the DIBR framework, which then comprises three major components (see Fig. 1), because a depth map with sharp horizontal edges results in large holes after 3D warping. They reduce the size of large holes before 3D warping by smoothing the sharp depth transitions, which makes the subsequent hole-filling much easier. However, depth map preprocessing may cause geometric distortions and losses in depth cues [19]. Several approaches have therefore been proposed to reduce the artifacts of depth map preprocessing, but they still suffer from these artifacts and remarkably increase the computational complexity. To run image processing algorithms at high speed or in real time, parallelization on graphics processing units (GPUs) has recently become an active topic in the community [20], [21]. Practical applications require real-time processing, and the resulting quality must also be ensured under the real-time constraint.
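The effect of depth map preprocessing on hole size, described above, can be illustrated with a small Python sketch. The assumptions are illustrative and not taken from [11] or from this paper: a horizontal box filter stands in for the smoothing filter, depth is 8-bit with 255 as the nearest value, warping is a horizontal shift, and `max_disparity` is a hypothetical parameter.

```python
def smooth_row(depths, radius):
    """Box-filter a depth row horizontally, replicating the border pixels."""
    n = len(depths)
    out = []
    for x in range(n):
        window = [depths[min(max(x + k, 0), n - 1)]
                  for k in range(-radius, radius + 1)]
        out.append(sum(window) / len(window))
    return out


def hole_runs(depths, max_disparity):
    """Return the lengths of consecutive uncovered target pixels after warping."""
    n = len(depths)
    covered = [False] * n
    for x in range(n):
        xt = x - round(max_disparity * depths[x] / 255)
        if 0 <= xt < n:
            covered[xt] = True
    runs, current = [], 0
    for is_covered in covered:
        if is_covered:
            if current:
                runs.append(current)
            current = 0
        else:
            current += 1
    if current:
        runs.append(current)
    return runs


depths = [0] * 4 + [255] * 4 + [0] * 6  # foreground object on a flat background
before = hole_runs(depths, max_disparity=4)
after = hole_runs(smooth_row(depths, radius=2), max_disparity=4)
print(before, after)  # [4] [1, 1, 1]
```

In this example, one four-pixel hole becomes three isolated one-pixel holes, which are much easier to fill by interpolation. The cost is that the smoothed depth no longer matches the true geometry near the edge, which is the source of the geometric distortions mentioned above.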

Fig. 1 DIBR with depth map preprocessing, redrawn from [11].

2015 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery

978-1-4673-9200-6/15 $31.00 © 2015 IEEE

DOI 10.1109/CyberC.2015.97
