A Quantitative Analysis on Microarchitectures of Modern CPU-FPGA Platforms

Young-kyu Choi, Jason Cong, Zhenman Fang, Yuchen Hao, Glenn Reinman, Peng Wei

Center for Domain-Specific Computing, University of California, Los Angeles

{ykchoi, cong, zhenman, haoyc, reinman, peng.wei.prc}@cs.ucla.edu

ABSTRACT

CPU-FPGA heterogeneous acceleration platforms have shown great potential for continued performance and energy efficiency improvement for modern data centers, and have captured great attention from both academia and industry. However, it is nontrivial for users to choose the right platform among the various PCIe- and QPI-based CPU-FPGA platforms from different vendors. This paper aims to find out which microarchitectural characteristics affect performance, and how. We conduct a quantitative comparison and in-depth analysis on two representative platforms: the QPI-based Intel-Altera HARP with coherent shared memory, and the PCIe-based Alpha Data board with private device memory. We provide multiple insights for both application developers and platform designers.

1. INTRODUCTION

In today’s data center design, power and energy efficiency have become two of the primary constraints. The increasing demand for energy-efficient high-performance computing has stimulated a growing number of heterogeneous architectures that feature hardware accelerators or coprocessors, such as GPUs (Graphics Processing Units), FPGAs (Field Programmable Gate Arrays), and ASICs (Application Specific Integrated Circuits). Among various heterogeneous acceleration platforms, the FPGA-based approach is considered to be one of the most promising directions, since FPGAs provide low power and high energy efficiency, and can be reprogrammed to accelerate different applications. For example, Microsoft has designed a customized FPGA board called Catapult and deployed it in its data center [16], which improved the ranking throughput of the Bing search engine by 2x. In other words, the number of servers can be reduced by 2x with each new CPU-FPGA server consuming only 10% more power.
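The server-count and per-server power figures quoted above imply a reduction in total fleet power, which the short sketch below works out. It assumes a fixed aggregate throughput target and that the 2x gain and 10% overhead apply uniformly to every server; the function name and this uniformity assumption are illustrative and do not come from the Catapult study.

```python
def fleet_power_ratio(throughput_gain: float, power_overhead: float) -> float:
    """Total fleet power relative to a CPU-only baseline at equal throughput.

    throughput_gain: per-server speedup from adding the FPGA (2.0 means 2x).
    power_overhead:  extra power per server as a fraction (0.10 means +10%).
    Assumption: both figures hold uniformly across the whole fleet.
    """
    servers_ratio = 1.0 / throughput_gain          # fewer servers for the same work
    return servers_ratio * (1.0 + power_overhead)  # each server draws a bit more


ratio = fleet_power_ratio(throughput_gain=2.0, power_overhead=0.10)
print(f"Relative fleet power: {ratio:.2f}")  # prints "Relative fleet power: 0.55"
```

Under these assumptions, half as many servers at 1.1x power each draw roughly 55% of the baseline power for the same ranking throughput.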

Motivated by FPGAs’ high energy efficiency and reprogramma-

bility, industry vendors, such as Microsoft, Intel/Altera, Xilinx,

IBM and Convey, are providing various ways to integrate high-

performance FPGAs into data center servers. We have classified

modern CPU-FPGA acceleration platforms in Table 1 according

to their physical integration and memory models. Traditionally,

the most widely used integration is to connect an FPGA to a CPU

via PCIe, with both components equipped with private memory.

Many FPGA boards built on top of Xilinx or Altera FPGAs use

this way of integration because of its extensibility. The customized

Microsoft Catapult board integration is such an example. Another

example is the Alpha Data FPGA board [1] with Xilinx FPGA

fabric that can leverage the Xilinx SDAccel development environ-

ment [2] to support efficient accelerator design using high-level

programming languages, including C/C++ and OpenCL. More-

over, vendors like IBM tend to support a PCIe connection with

Permission to make digital or hard copies of all or part of this work for personal or

classroom use is granted without fee provided that copies are not made or distributed

for profit or commercial advantage and that copies bear this notice and the full cita-

tion on the first page. Copyrights for components of this work owned by others than

ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or re-

publish, to post on servers or to redistribute to lists, requires prior specific permission

and/or a fee. Request permissions from permissions@acm.org.

DAC ’16, June 05-09, 2016, Austin, TX, USA

c

2016 ACM. ISBN 978-1-4503-4236-0/16/06. . . $15.00

DOI: http://dx.doi.org/10.1145/2897937.2897972

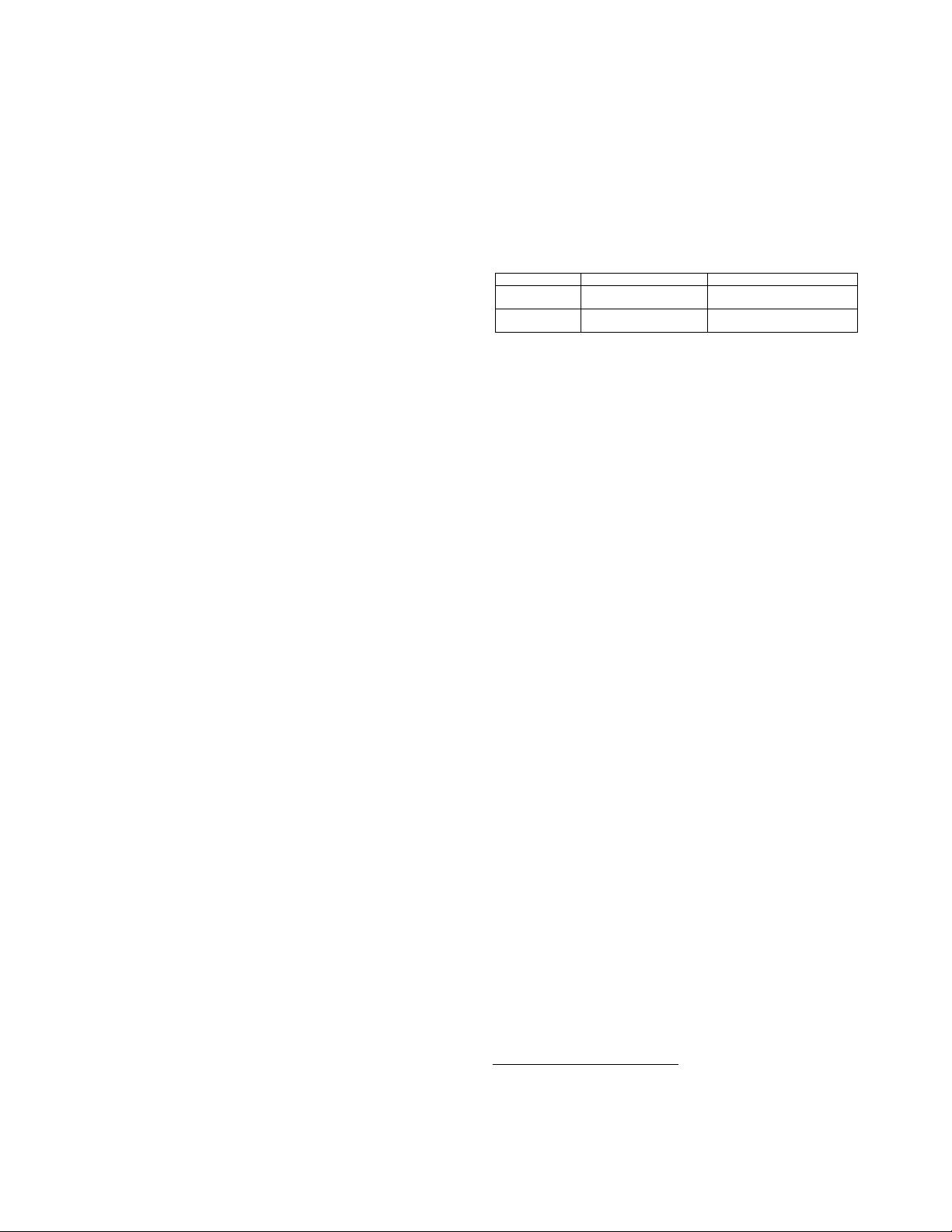

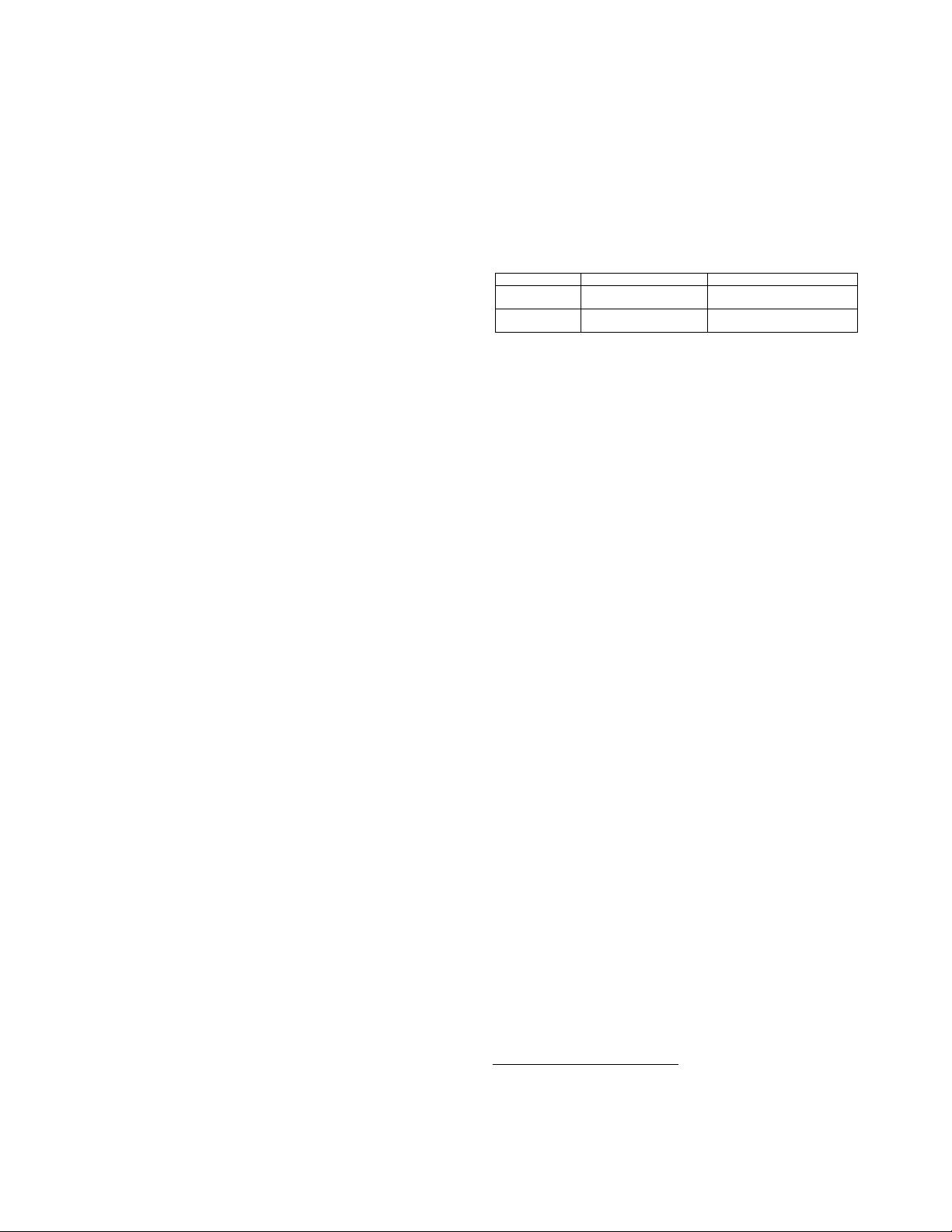

Table 1: Classification of modern CPU-FPGA platforms

Separate Private Memory Coherent Shared Memory

PCIe Peripheral

Interconnect

Alpha Data board [2],

Microsoft Catapult [16]

IBM CAPI [19]

Processor

Interconnect

N/A

Intel-Altera HARP (QPI) [13],

Convey HC-1 (FSB) [4]

a coherent shared memory for easier programming. For exam-

ple, IBM has been developing the Coherent Accelerator Processor

Interface (CAPI) on POWER8 [19] for such an integration, and

has used this platform in the IBM data engine for NoSQL [3].

More recently, closer integration becomes available using a new

class of processor interconnects such as Front-Side Bus (FSB) and

the newer QuickPath Interconnect (QPI), and provides a coherent

shared memory, such as the FSB-based Convey machine [4] and,

the latest Intel-Altera Heterogeneous Architecture Research Plat-

form (HARP) [13] that targets data centers.

The evolution of various CPU-FPGA platforms raises two challenging questions: 1) which platform should we choose to gain better performance and energy efficiency? and 2) how can we design an optimal accelerator on a given platform? Numerous factors can affect the choice and the optimization, such as platform cost, programming models and effort, the logic resources and frequency of the FPGA fabric, and CPU-FPGA communication latency and bandwidth, to name just a few. While some of them are easy to determine, others are nontrivial, especially the communication latency and bandwidth between CPU and FPGA under different integration schemes. One reason is that there are few publicly available documents for newly announced platforms like HARP¹. More importantly, the architectural parameters in datasheets are often advertised values, which are usually difficult to achieve in practice. Sometimes there is a huge gap between the advertised and the practical numbers. For example, the advertised bandwidth of the PCIe Gen3 x8 interface is 8GB/s; however, our experimental results show that the PCIe-equipped Alpha Data platform can only provide 1.6GB/s PCIe-DMA bandwidth using the OpenCL APIs implemented by Xilinx (see Section 3.2.1). Quantitative evaluation and in-depth analysis of such microarchitectural characteristics could help CPU-FPGA platform users accurately predict (and optimize) the performance of a candidate accelerator design on a platform, and make the right choice. Furthermore, it could also help CPU-FPGA platform designers identify performance bottlenecks and provide better hardware and software support.
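To make the gap between advertised and achieved bandwidth concrete, the sketch below compares the 1.6GB/s measurement quoted above with the PCIe Gen3 x8 link rate. The 8 GT/s per-lane signalling rate and the 128b/130b line encoding are taken from the PCIe Gen3 specification rather than from this paper, and the constant and function names are illustrative only.

```python
GEN3_GT_PER_S = 8.0        # PCIe Gen3 raw signalling rate per lane (from the PCIe spec)
GEN3_ENCODING = 128 / 130  # 128b/130b line encoding efficiency (from the PCIe spec)

ADVERTISED_GBYTES = 8.0    # advertised PCIe Gen3 x8 bandwidth quoted in the text
MEASURED_GBYTES = 1.6      # PCIe-DMA bandwidth measured on the Alpha Data platform


def pcie_gen3_peak_gbytes(lanes: int) -> float:
    """One-direction link rate in GB/s after line encoding.

    Packet headers, flow control, and DMA setup are not modelled here,
    so real payload bandwidth is lower than this figure.
    """
    return GEN3_GT_PER_S * GEN3_ENCODING * lanes / 8  # bits to bytes


peak = pcie_gen3_peak_gbytes(lanes=8)
fraction_of_advertised = MEASURED_GBYTES / ADVERTISED_GBYTES
fraction_of_peak = MEASURED_GBYTES / peak

print(f"encoded link rate: {peak:.2f} GB/s")
print(f"measured / advertised: {fraction_of_advertised:.0%}")
print(f"measured / encoded link rate: {fraction_of_peak:.0%}")
```

Even before protocol overhead is considered, the measured bandwidth is about one fifth of the advertised value, which is why a datasheet number alone is a poor basis for predicting accelerator performance.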

Motivated by these potential benefits to both platform users and designers, this paper aims to discover which microarchitectural characteristics affect the performance of modern CPU-FPGA platforms, and to evaluate how they affect that performance. We conduct our quantitative comparison on two representative modern CPU-FPGA platforms to cover both integration dimensions, i.e., PCIe-based vs. QPI-based, and private vs. shared memory model. One is the recently announced QPI-based HARP with coherent shared memory, and the other is the commonly used PCIe-based Alpha Data with separate private memory. We quantify each platform’s CPU-FPGA communication latency and bandwidth with in-depth analysis, and

¹ For simplicity, in the rest of this paper, we will use HARP for the Intel-Altera HARP platform, and Alpha Data for the Alpha Data board integrated with a Xeon CPU.