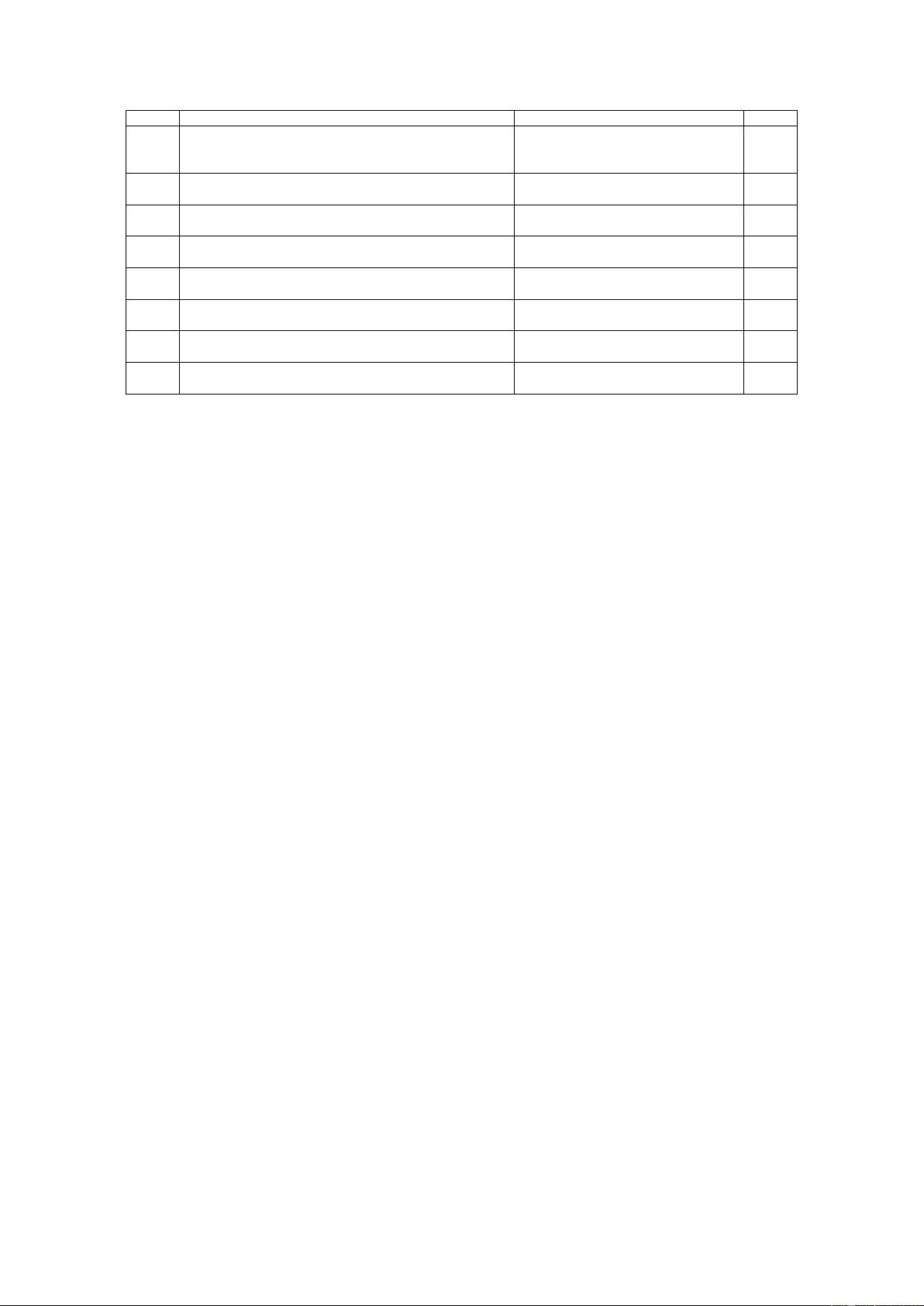

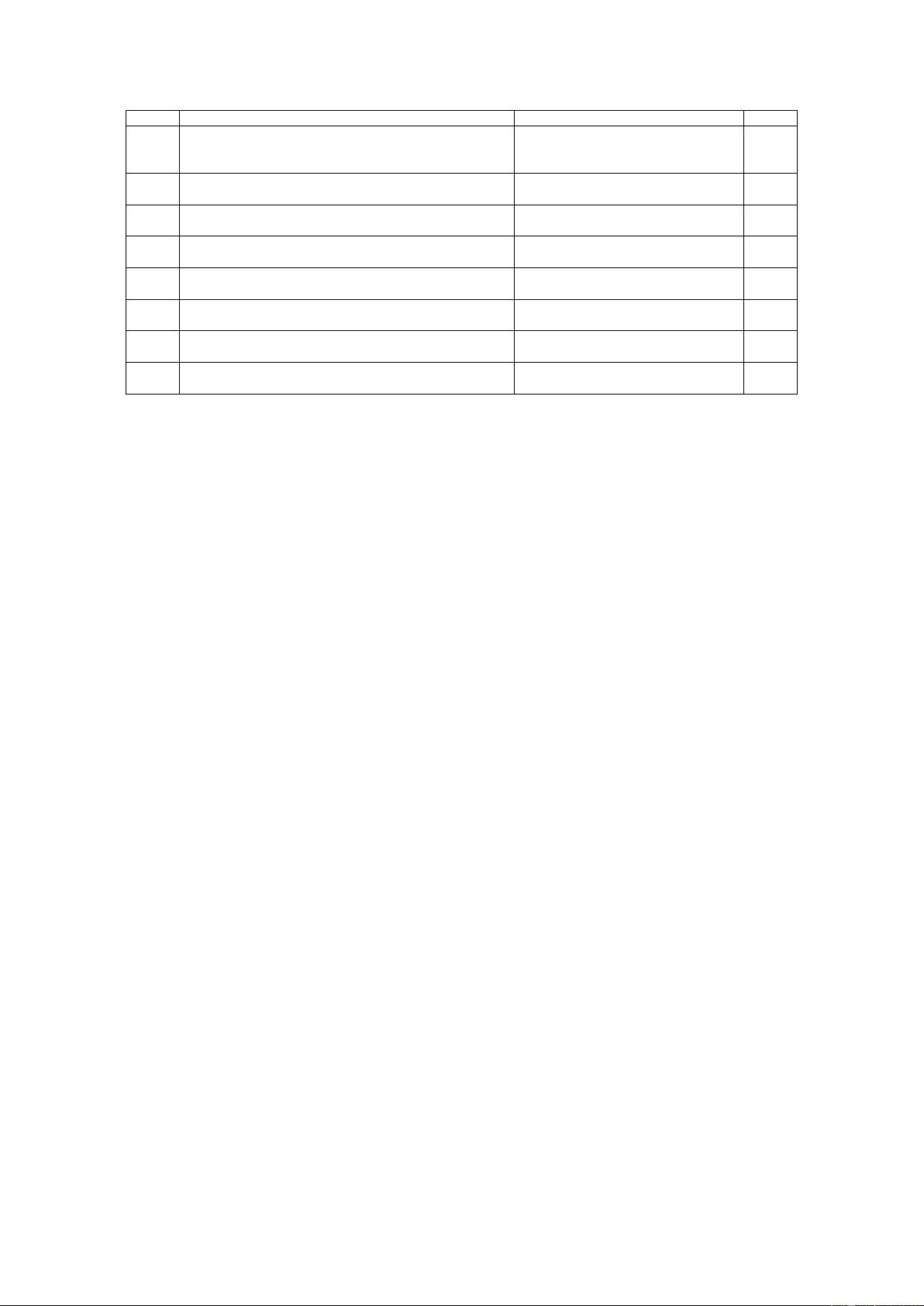

| | Adversarial Text | Explanation | $\alpha_{35}$ |
|---|---|---|---|
| (o) | album as "full of exhilarating, ecstatic, thrilling, fun and sometimes downright silly songs" | The original top-activated words and their contexts for transformer factor $\Phi_{:,35}$. | 9.5 |
| (a) | album as "full of delightful, lively, exciting, interesting and sometimes downright silly songs" | Use different adjectives. | 9.2 |
| (b) | album as "full of unfortunate, heartbroken, annoying, boring and sometimes downright silly songs" | Change all adjectives to negative adjectives. | 8.2 |
| (c) | album as "full of [UNK], [UNK], thrilling, [UNK] and sometimes downright silly songs" | Mask all adjectives with the unknown token. | 5.3 |
| (d) | album as "full of thrilling and sometimes downright silly songs" | Remove the first three adjectives. | 7.8 |
| (e) | album as "full of natural, smooth, rock, electronic and sometimes downright silly songs" | Change the adjectives to neutral adjectives. | 6.2 |
| (f) | each participant starts the battle with one balloon. these can be re@-@ inflated up to four | Use a random sentence that has a quotation mark. | 0.0 |
| (g) | The book is described as "innovative, beautiful and brilliant". It receive the highest opinion from James Wood | A sentence we created that contains the pattern of consecutive adjectives. | 7.9 |

Table 4: We construct adversarial texts that are similar to, but different from, the pattern “Consecutive adjective”. The last column shows the activation of $\Phi_{:,35}$, or $\alpha^{(8)}_{35}$, w.r.t. the blue-marked word in layer 8.

correspond to a specific pattern, we can use constructed example words and contexts to probe their activation. In Table 4, we construct several text sequences that are similar to the pattern corresponding to a certain transformer factor but with subtle differences. The result confirms that a context that strictly follows the pattern represented by that transformer factor triggers a high activation. Moreover, the closer an adversarial example is to this pattern, the higher the activation it receives at this transformer factor.

High-Level: Long-Range Dependency. High-level transformer factors correspond to linguistic patterns that span a long range in the text. Since the IS curves of mid-level and high-level transformer factors are similar, it is difficult to distinguish them based on their IS curves alone. Thus, we have to manually examine the top-activated words and contexts of each transformer factor to determine whether it is mid-level or high-level. To ease this process, we can use the black-box interpretation algorithm LIME (Ribeiro et al., 2016) to identify the contribution of each token in a sequence.

Given a sequence $s \in \mathcal{S}$, we can treat $\alpha^{(l)}_{c,i}$, the activation of $\Phi_{:,c}$ in layer $l$ at location $i$, as a scalar function of $s$, $f^{(l)}_{c,i}(s)$. Assume a sequence $s$ triggers a high activation $\alpha^{(l)}_{c,i}$, i.e. $f^{(l)}_{c,i}(s)$ is large. We want to know how much each token (or equivalently each position) in $s$ contributes to $f^{(l)}_{c,i}(s)$. To do so, we generate a sequence set $\mathcal{S}(s)$, where each $s' \in \mathcal{S}(s)$ is the same as $s$ except that several random positions in $s'$ are masked by [UNK] (the unknown token). Then we learn a linear model $g_w(s')$ with weights $w \in \mathbb{R}^T$ to approximate $f(s')$, where $T$ is the length of sentence $s$. This can be solved as a ridge regression:

$$\min_{w \in \mathbb{R}^T} \mathcal{L}(f, w, \mathcal{S}(s)) + \sigma \|w\|_2^2 .$$
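The masking-and-regression procedure above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the exact implementation: the loss $\mathcal{L}$ is assumed to be an unweighted squared error, $g_w(s')$ is assumed to be $w^\top z(s')$ with $z(s') \in \{0,1\}^T$ marking which positions are kept, and the function name `lime_saliency`, its parameters, and the toy activation `toy_f` are hypothetical stand-ins for $f^{(l)}_{c,i}$.

```python
import numpy as np

def lime_saliency(tokens, f, n_samples=500, mask_prob=0.3, sigma=1.0, seed=0):
    """Estimate per-token weights w by ridge regression on masked copies of `tokens`.

    `f` is any callable mapping a list of tokens to a scalar activation
    (a hypothetical stand-in for the transformer-factor activation).
    """
    rng = np.random.default_rng(seed)
    T = len(tokens)
    # Z[k, t] = 1 if token t is kept in perturbed sample k, 0 if it is masked.
    Z = (rng.random((n_samples, T)) > mask_prob).astype(float)
    # Evaluate f on every perturbed sequence s' (masked positions become [UNK]).
    y = np.array([
        f([tok if keep else "[UNK]" for tok, keep in zip(tokens, row)])
        for row in Z
    ])
    # Closed-form ridge solution (assumed squared-error loss, no intercept):
    #   w = (Z^T Z + sigma * I)^{-1} Z^T y
    return np.linalg.solve(Z.T @ Z + sigma * np.eye(T), Z.T @ y)

# Toy usage: an artificial activation that depends on only two tokens.
toy_tokens = "full of thrilling and sometimes downright silly songs".split()
toy_f = lambda toks: 3.0 * ("thrilling" in toks) + 1.0 * ("silly" in toks)
w = lime_saliency(toy_tokens, toy_f)
for tok, weight in zip(toy_tokens, w):
    print(f"{tok:>10s}  {weight:+.2f}")
```

In this toy setting the largest weight falls on the token the artificial activation depends on most. With a real model, `f` would run the perturbed sequence through the transformer and return the factor's activation at position $i$.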

The learned weights $w$ can serve as a saliency map that reflects the “contribution” of each token in the sequence $s$. As in Figure 7, the color reflects the weight $w$ at each position: red means the given position has a positive weight, and green means a negative weight. The magnitude of the weight is represented by the intensity. The redder a token is, the more it contributes to the activation of the transformer factor. We leave further implementation and mathematical details of the LIME algorithm to the appendix.
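As a rough illustration of the coloring convention just described (red for positive weights, green for negative weights, intensity proportional to magnitude), the sketch below maps a weight vector to RGB colors. The function name `weights_to_rgb`, the white background, and the normalization by the largest absolute weight are assumptions made for this example; the actual rendering used for the figures may differ.

```python
import numpy as np

def weights_to_rgb(w):
    """Map saliency weights to RGB triples in [0, 1] on a white background."""
    w = np.asarray(w, dtype=float)
    scale = np.max(np.abs(w))
    # Intensity in [0, 1]; all zeros if every weight is zero.
    intensity = np.abs(w) / scale if scale > 0 else np.zeros_like(w)
    rgb = np.ones((len(w), 3))  # start from white
    pos, neg = w > 0, w < 0
    # Positive weight: fade green and blue so the token turns red.
    rgb[pos, 1] -= intensity[pos]
    rgb[pos, 2] -= intensity[pos]
    # Negative weight: fade red and blue so the token turns green.
    rgb[neg, 0] -= intensity[neg]
    rgb[neg, 2] -= intensity[neg]
    return rgb

colors = weights_to_rgb([2.0, 0.0, -1.0])
```

Here the first token would be rendered in full red, the second left white, and the third in a half-intensity green.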

We provide detailed visualizations for 2 different transformer factors that show long-range dependency in Figures 7 and 8. Since visualization of high-level information requires longer context, we only show the top 2 activated words and their contexts for each such transformer factor. Many more are provided in Appendix G.

We name the pattern for transformer factor $\Phi_{:,297}$ in Figure 7 the “repetitive pattern detector”. All top-activated contexts for $\Phi_{:,297}$ contain an obvious repetitive structure. Specifically, the text snippet “can’t get you out of my head” appears twice in the first example, and the text snippet “xxx class passenger, star alliance” appears 3 times in the second example. Compared to the patterns we found in the mid-level [6], the high-level patterns like