Deep Learning on Radar Centric 3D Object Detection

Seungjun Lee

Seoul National University, Korea

AI COLLEGE, Korea

lsjj096@snu.ac.kr

Abstract: Although many existing 3D object detection algorithms rely mostly on camera and LiDAR, both sensors are easily degraded by harsh weather and lighting conditions. Radar, on the other hand, is resistant to such conditions. However, research on applying deep neural networks to radar data has begun only recently. In this paper, we introduce a deep learning approach to 3D object detection with radar only. To the best of our knowledge, we are the first to demonstrate a deep learning-based 3D object detection model with radar only that was trained on a public radar dataset. To overcome the lack of labeled radar data, we propose a novel way of making use of abundant LiDAR data by transforming it into radar-like point cloud data, together with aggressive radar augmentation techniques.

Keywords: object detection, deep learning, neural network, radar, autonomous driving

I. INTRODUCTION

Due to its broad real-world applications, such as autonomous driving and robotics, 3D object detection is one of the most important problems to solve. Object detection is the task of recognizing and localizing multiple objects in a scene. Objects are usually recognized by estimating a classification probability and localized with bounding boxes. In autonomous driving, the main concern is to perform 3D object detection accurately, robustly, and in real time. Therefore, almost all autonomous vehicles are equipped with multiple sensors of different modalities to ensure safety: camera, LiDAR (light detection and ranging), and radar (radio detection and ranging).
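As a minimal illustration of the task definition above, a single 3D detection can be represented as a class label, a classification probability, and an oriented bounding box. The sketch below is not the model proposed in this paper. The names `Box3D`, `Detection3D`, `filter_detections`, and `bev_corners`, the box parameterization (center, size, yaw), and the 0.5 score threshold are all illustrative assumptions.

```python
from dataclasses import dataclass
import math


@dataclass
class Box3D:
    # Box center in meters (the coordinate frame is an assumption).
    x: float
    y: float
    z: float
    # Box dimensions in meters.
    length: float
    width: float
    height: float
    # Heading angle around the vertical axis, in radians.
    yaw: float


@dataclass
class Detection3D:
    label: str    # predicted class, e.g. "Car"
    score: float  # classification probability in [0, 1]
    box: Box3D    # localization result


def filter_detections(detections, score_threshold=0.5):
    """Keep detections whose classification probability meets the threshold."""
    return [d for d in detections if d.score >= score_threshold]


def bev_corners(box):
    """Return the four bird's-eye-view corners of a box as (x, y) tuples."""
    c, s = math.cos(box.yaw), math.sin(box.yaw)
    half_l, half_w = box.length / 2.0, box.width / 2.0
    offsets = [(half_l, half_w), (half_l, -half_w),
               (-half_l, -half_w), (-half_l, half_w)]
    # Rotate each corner offset by yaw, then translate to the box center.
    return [(box.x + dx * c - dy * s, box.y + dx * s + dy * c)
            for dx, dy in offsets]
```

A detector would typically emit a list of such records per frame; thresholding on `score` is one common way to trade recall for precision.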

Currently, alongside cameras, the most widely adopted sensor for 3D object detection is LiDAR, which outputs spatially accurate 3D point clouds of its surroundings. While recent 2D object detection algorithms are capable of handling large variations in RGB images, 3D point clouds are special in the sense that their unordered, sparse, and locality-sensitive characteristics still pose significant challenges for 3D object detection. Furthermore, cameras and LiDARs are susceptible to harsh weather conditions such as rain, snow, fog, or dust, and to changes in illumination.

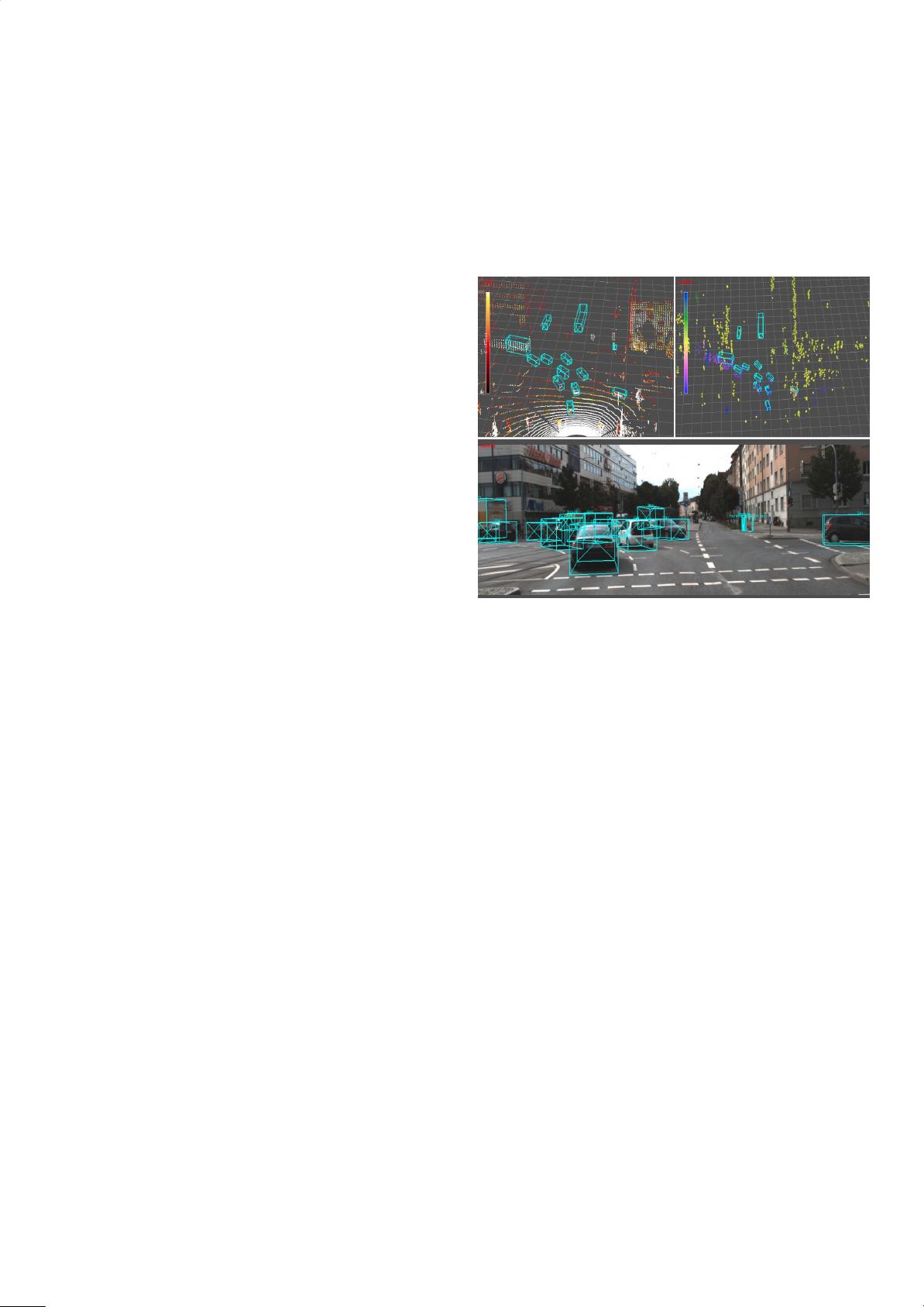

Fig. 1. An example of a radar image (top right) with the corresponding RGB camera image (bottom) and LiDAR image (top left) from [10].

In contrast, automotive radar is considerably cheaper than LiDAR, resistant to adverse weather, and insensitive to lighting variations. It provides long and accurate range measurements of the surroundings simultaneously with relative radial velocity measurements via the Doppler effect, and it is widely used in modern advanced driver assistance and vehicle safety systems. Moreover, the recent demand for radar in autonomous driving has introduced a new generation of high-resolution automotive “imaging” radars such as [10], which are expected to substitute for expensive LiDARs.
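The radial velocity measurement mentioned above follows from the standard monostatic radar Doppler relation f_d = 2 v_r / λ, where λ is the carrier wavelength. The sketch below applies that relation in both directions. The function names, the sign convention, and the 77 GHz default carrier (a common automotive radar band, not a value stated for the sensor in [10]) are assumptions made for illustration.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def doppler_shift(radial_velocity_mps, carrier_hz=77e9):
    """Doppler shift (Hz) produced by a target with the given radial velocity.

    Uses f_d = 2 * v_r / wavelength. The factor 2 accounts for the
    two-way (transmit and reflect) path of a monostatic radar.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return 2.0 * radial_velocity_mps / wavelength


def radial_velocity(doppler_shift_hz, carrier_hz=77e9):
    """Relative radial velocity (m/s) recovered from a measured Doppler shift.

    Sign convention (assumption): a positive shift means an approaching target.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return doppler_shift_hz * wavelength / 2.0
```

Under these assumptions, a target closing at 10 m/s produces a shift of roughly 5.1 kHz. Only the velocity component along the line of sight is observable this way, which is why the measurement is described as radial.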

However, developing radar-based detectors is more difficult than developing LiDAR-based ones. As deep learning is a heavily data-driven approach, the main bottleneck in radar-based applications is the availability of publicly usable data annotated with ground-truth information. The very recent nuScenes dataset [8] provides data from a 2D radar sensor of undisclosed type, with sparsely populated 2D points but without the sampled radar ADC data required for deep radar detection, whereas the Astyx HiRes2019 dataset [10] provides 3D imaging radar data but contains only 546 frames with ground-truth labels, which is very small compared with common image datasets in the computer vision community.

Even though recent publications have shown that radar-camera fusion object detectors that exploit both images and point cloud data can be reliable to some degree in the