to a term c (representing an entity or a concept). Specifically, if

most similar terms of c have h as the hypernym, c is likely to have

the hypernym h. We propose an iterative framework to realize

this idea. Algorithm 1 outlines the framework. In each itera-

tion, we use CF-based approach to find missing hypernyms

for each term c in the current taxonomy. Specifically, for each

c, we first find top-K terms that are similar to c (line 3). Each

hypernym (h)ofthesetop-K terms but c is a candidate hyper-

nym (line 4). We rank candidate hypernyms by aggregating

the votes from the top-K similar terms of c by a scoring func-

tion (line 5). We add the candidates with a score larger than

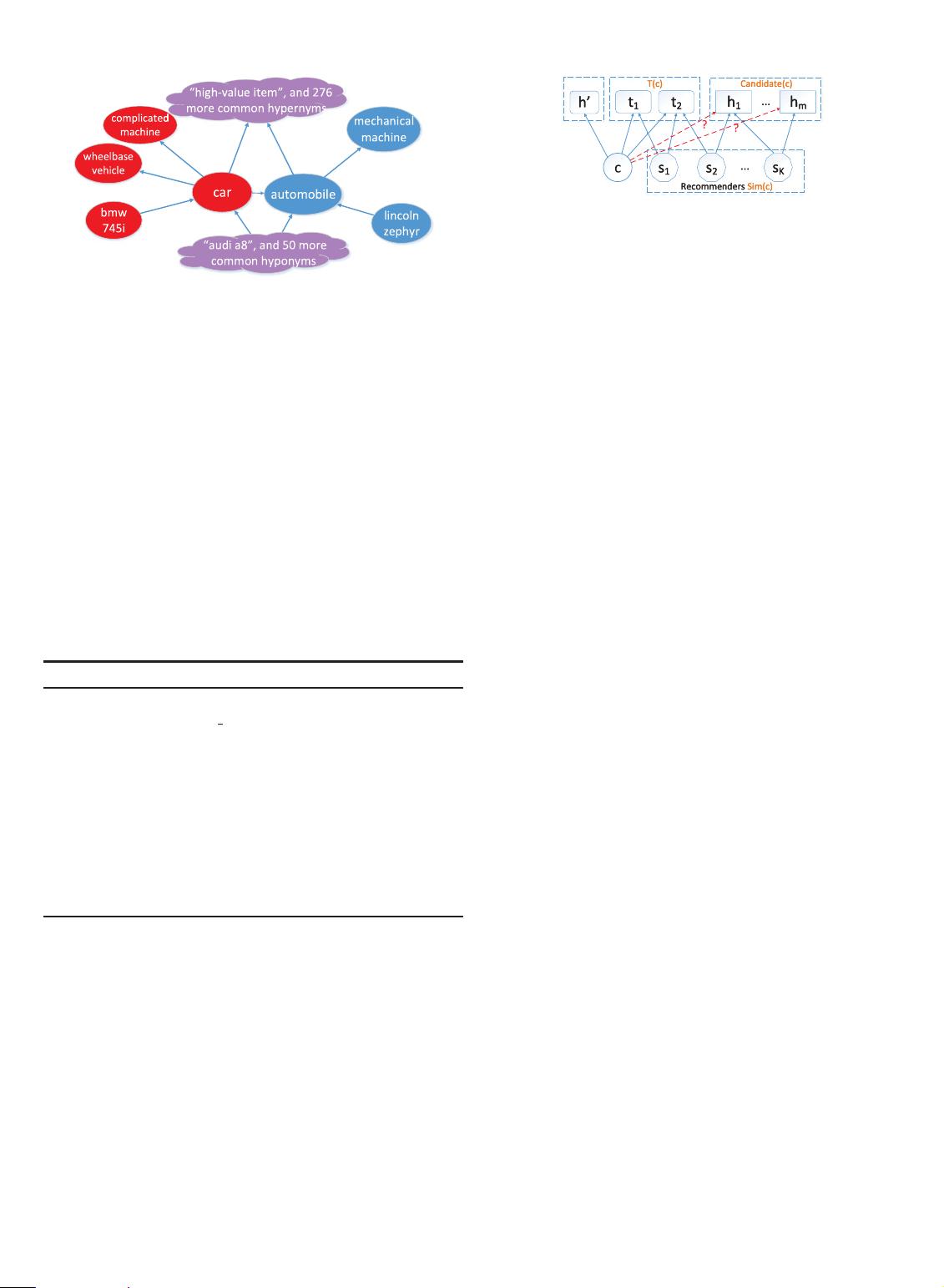

the threshold u as the missing hypernyms of c (line 6). Fig. 3

illustrates the key roles in the CF-based recommendation

model, where TðcÞ is the intersection of c’s existing hyper-

nyms and c’s similar terms’ hypernyms. TðcÞ will be used as

prior knowledge to estimate the frequency of newly discov-

ered isA relations (Section 3.3) and to tune a threshold for the

selection of the final hypernyms (Section 4.2).

Algorithm 1. CF-Based Missing isA Relationship Finding

Input: Taxonomy T , parameters K; u

1: while iteration < max

iteration do

2: for term c 2 T do

3: SimðcÞ top-K similar terms of c;

4: CandidateðcÞ ½

S

s2SimðcÞ

hypeðsÞ hype ðcÞ;

5: Rank candidates in CandidateðcÞ by a scoring

function f;

6: Update T by attaching c to any x 2 CandidateðcÞ s.t.

fðx Þu;

7: end for

8: iteration iteration þ 1;

9: end while

Relationship to User-Based KNN Recommendation. Our solu-

tion is essentially the user-based KNN approach, which is

widely used in recommender systems. There are two types of

recommendation algorithms: user-based [24], [25] and item-

based [26], [27]. In out settings, the user is the term for which

we need to find its missing hypernyms as well as its similar

terms, while item means the missing hypernyms. Item-based

algorithms in general are not applicable in our problem

because a similar hypernym is not necessarily an appropriate

hypernym for a specific instance, as illustrated in Example 2.

Although recommender systems have been extensively stud-

ied, we still need great efforts to tailor the generic user-based

KNN approach to our problems because of a completely dif-

ferent setting.

1.4 Challenges and Contributions

Using CF to solve our problem is not trivial. In general, we

still have the following obstacles to overcome.

C1: Similarity metric. We need an effective semantic simi-

larity metric to find similar terms. Since Probase has ambigu-

ous words or phrases, and noisy or missing isA relationships,

it is not easy to ensure the effectiveness of the metric. We

will address this issue in Section 2.

C2: Relationship frequency. In Probase, each isA relationship

is associated with a frequency that the relationship is observed

from corpora. The frequency is critical for the successful usage

of Probase in real applications [23], [28]. While the generic CF

framework can only give a likelihood that a concept is a right

hypernym of a term. We still need great efforts to estimate an

appropriate frequency for the predicted missing relationships.

In Section 3 we propose a regression model to estimate the

frequency of newly found missing relationships.

C3: Parameter tuning. There are two key parameters in our

solution (as shown in Algorithm 1): K (used for the selection

of the similar concepts of c)andu (used for the selection of

final missing hypernyms). In general, a fixed setting is not an

optimal choice due to the heterogeneity of data. We propose

heuristics to set a best K and u for different c in Section 4.

C4: Efficiency. Probase has millions of terms and tens of

millions of relationships. A straightforward solution is not

efficient. In Section 2.5, we identify the bottleneck (the com-

putation of similarity metrics). We further propose a sum-

mary based pruning strategy to speedup the computation

in Section 5.

In summary, our contributions are as follows:

We propose a collaborative filtering based inferenc-

ing framework to find missing isA relationships in a

data-driven taxonomy.

We propose effective similarity metrics (to measure a

pair of terms) and ranking mechanisms (of candidate

missing hypernyms) to enable the effective inference

of missing isA relationships under the CF framework.

We identify the performance bottleneck and propose a

corresponding speedup strategy. We also give param-

eter tuning solutions to improve the effectiveness.

We conduct extensive experiments to justify the pro-

posed models and solutions. We build a taxonomy

Probase+ containing 5.1 million (about 30 percent)

more isA relationships than its prototype Probase, and

its accuracy is above 90 percent. As far as we know, it

is the largest conceptual taxonomy ever known.

The rest of the paper is organized as follows. In Section 2,

we give the similarity metrics to find similar terms. In

Section 3, we elaborate the ranking scores of candidate

hypernyms. In Section 4, we describe our method of param-

eter tuning. In Section 5, we optimize the performance of

our solution. In Section 6, we report the effectiveness of our

approach based on comprehensive experiments. We discuss

related works in Section 7, and conclude in Section 8.

2SIMILARITY METRICS

To apply collaborative filtering, we need to find terms that

are similar to a given term. In general, our framework is

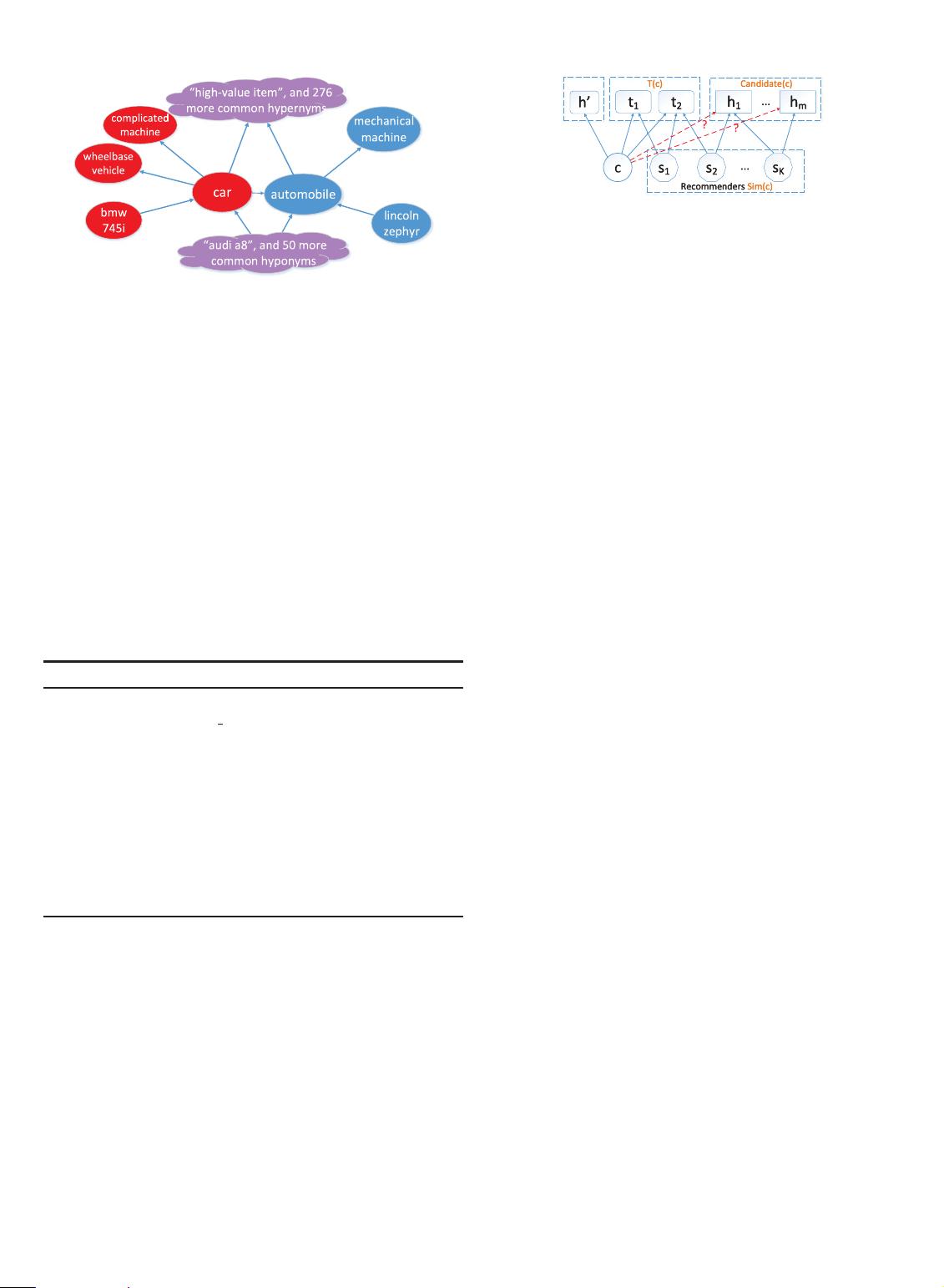

Fig. 2. Probase fragment: “car” and “automobile.”

Fig. 3. The CF model.

LIANG ET AL.: PROBASE+: INFERRING MISSING LINKS IN CONCEPTUAL TAXONOMIES 1283