1939-1374 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TSC.2016.2539945, IEEE

Transactions on Services Computing

JOURNAL OF IEEE TRANSACTIONS ON SERVICES COMPUTING, VOL. X, NO. X, X 2016 3

2.1 Scope of Studied Software Changes

In this paper, we focus on two types of software changes on servers

in large Web-based services, namely software upgrades and configuration

changes, for the following three reasons: (1) the operations

team typically cares about unexpected consequences that are

potentially due to these planned changes; (2) these changes are

controllable by the operations team via command line interfaces

and observable in logs; and (3) we have observed that these two types

constitute more than 90% of the tens of thousands of software

changes in our data.

Software Upgrades. With the current rapid evolution of

the Internet, new features are continuously being deployed with

software upgrades. The operations team also conducts software

upgrades to fix bugs or improve service performance. In a large

service, it is often the case that one software upgrade implements

multiple features or bug fixes, and FUNNEL considers such a

software upgrade as a whole. FUNNEL decides whether the

whole software upgrade introduces any KPI change but does

not attempt to distinguish which individual feature or bug fix

introduces KPI changes. In addition, the web services in our

scenario usually trigger a software upgrade when operators try

to patch the software, and thus we classify the patches as software

upgrades.

Configuration Changes. Using command line interfaces, the

operations team can change configurations by issuing specific

commands. A configuration change can be in the operating system

(OS) or infrastructure software (e.g., a configuration change

in Apache), service configuration (e.g., an increase in the number

of threads in a service process by command lines), deployment

scale (e.g., an increase in the number of servers where a service

is deployed), or data source (e.g., an update to the strategy that

calculates the valid page view counts).

Given this focus, the following aspects are out of

scope for this paper.

1) We do not consider software changes on network

devices such as routers and switches, which have

already been studied in depth in [6], [7], [14].

2) We do not consider software changes that are external

to the company, e.g., a change in a peer company, since

these changes might be invisible to the studied company’s

operations team.

3) We do not explicitly consider the interactions across

multiple concurrent or consecutive software changes on

the same server; as a straw-man approach, these can be

treated as one combined change.

More detailed studies along these three directions are left as

future work.
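The straw-man treatment of concurrent or consecutive changes on the same server can be illustrated with a minimal Python sketch. The `Change` record, the minute-based timestamps, and the `gap` parameter (how close two changes must be to count as consecutive) are assumptions made for illustration only; the paper does not specify them.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Change:
    """Hypothetical record of one software change (not from the paper)."""
    server: str
    kind: str   # e.g. "upgrade" or "config"
    start: int  # start time in minutes (assumed unit)
    end: int    # end time in minutes (assumed unit)


def combine_changes(changes, gap=0):
    """Merge changes on the same server whose time spans overlap or lie
    within `gap` minutes of each other into one combined change.

    Returns a list of (server, start, end, kinds) tuples.
    """
    by_server = defaultdict(list)
    for change in changes:
        by_server[change.server].append(change)

    combined = []
    for server, items in by_server.items():
        items.sort(key=lambda c: c.start)
        group = [items[0]]
        group_end = items[0].end
        for change in items[1:]:
            if change.start <= group_end + gap:
                # Concurrent or consecutive: fold into the current group.
                group.append(change)
                group_end = max(group_end, change.end)
            else:
                combined.append(
                    (server, group[0].start, group_end, [c.kind for c in group])
                )
                group = [change]
                group_end = change.end
        combined.append(
            (server, group[0].start, group_end, [c.kind for c in group])
        )
    return combined
```

With this sketch, an upgrade and a configuration change that overlap on one server are assessed as a single change, while a later, unrelated change on the same server remains separate.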

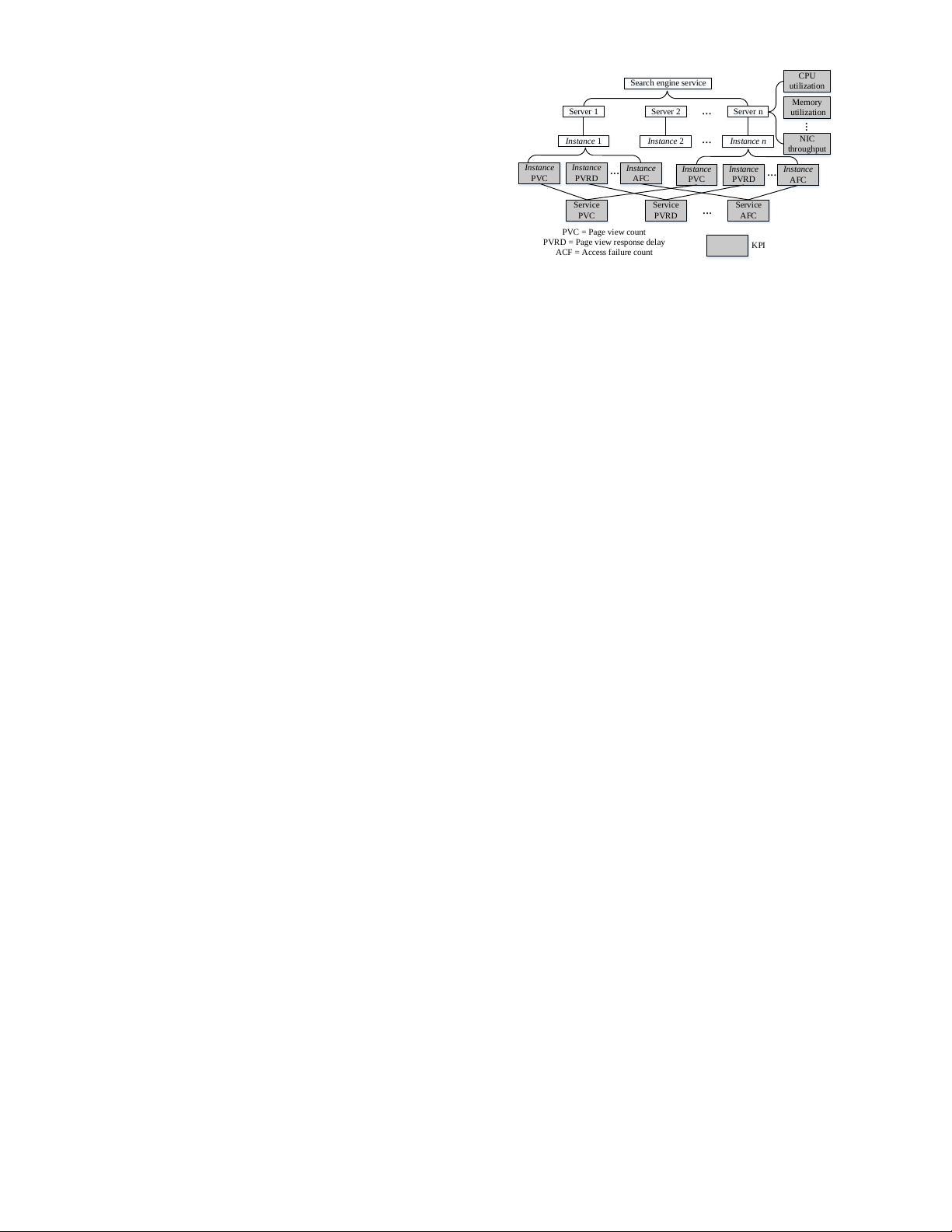

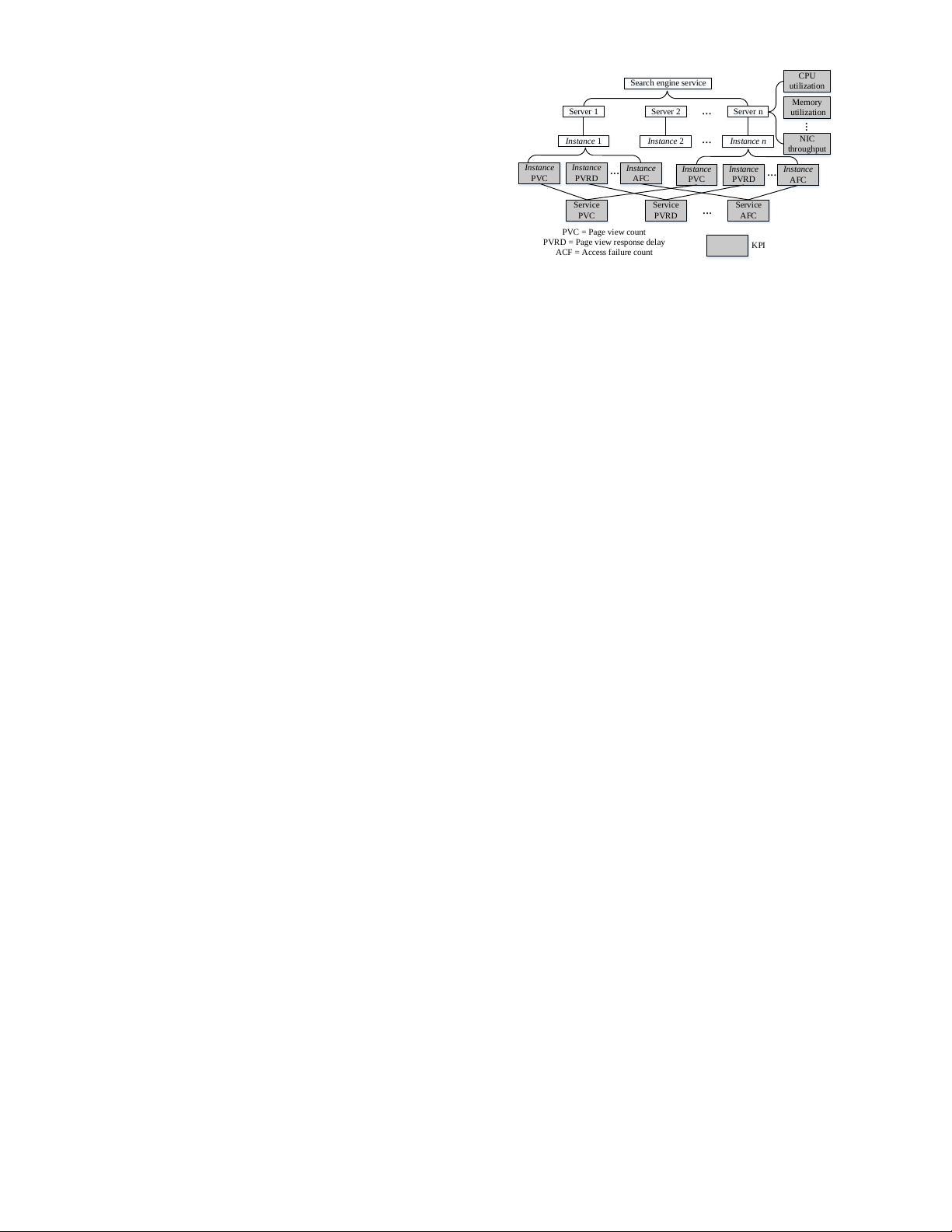

2.2 KPI

In the studied Web-based services, there are hundreds of thousands

of servers providing various types of services. Each service (e.g.,

search, web mail, social networking) runs on one or more servers

with a specific process on each server. An instance denotes a

process of a specific service on a specific server. A KPI is a

performance metric of a given server, service, or process. Three

types of KPIs need to be monitored for software change

assessment: server KPIs, instance KPIs, and service KPIs. The

operations team deploys an agent on each server to monitor the

status of each instance and collect the KPIs of all instances continuously.

[Figure 1: a search engine service deployed on Server 1, Server 2, ..., Server n, with Instance 1, Instance 2, ..., Instance n. The figure shows instance KPIs (PVC, PVRD, AFC, ...), server KPIs (CPU utilization, memory utilization, NIC throughput, ...), and service KPIs (PVC, PVRD, AFC, ...). PVC = page view count; PVRD = page view response delay; AFC = access failure count.]

Fig. 1. The relationship among service, server, instance and KPI

For example, immediately after the process serves

a customer with some Web page view, the page view count is

incremented and a new page view response delay is recorded.

In addition, by analyzing server log files that record the system

status, the agent is able to periodically collect server KPIs, such

as CPU utilization, memory utilization, and NIC throughput. A

service KPI is an aggregation of all instance KPIs in the service.

Fig. 1 shows an example of the relationship among service (search

engine service), server (server 1, server 2, ..., server n), instance

(instance 1, instance 2, ..., instance n) and KPI (page view count,

page view response delay, access failure count, CPU utilization,

memory utilization, and NIC throughput).
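As a rough illustration of how a service KPI can be derived from instance KPIs, the following Python sketch aggregates one interval of measurements. The paper does not state the aggregation function, so the choice here (summing counts such as PVC and AFC, and taking a plain mean for the delay PVRD) is an assumption, as are the dictionary layout and the names `MEAN_KPIS` and `aggregate_service_kpis`.

```python
from collections import defaultdict

# Assumption: delay-type KPIs are averaged, count-type KPIs are summed.
MEAN_KPIS = {"PVRD"}


def aggregate_service_kpis(instance_kpis):
    """Aggregate instance KPIs into service KPIs for one interval.

    `instance_kpis` maps (service, server, kpi_name) -> value, where each
    (service, server) pair identifies one instance.
    Returns a dict mapping (service, kpi_name) -> aggregated value.
    """
    grouped = defaultdict(list)
    for (service, _server, kpi), value in instance_kpis.items():
        grouped[(service, kpi)].append(value)

    service_kpis = {}
    for (service, kpi), values in grouped.items():
        total = sum(values)
        if kpi in MEAN_KPIS:
            service_kpis[(service, kpi)] = total / len(values)
        else:
            service_kpis[(service, kpi)] = total
    return service_kpis


# Illustrative (made-up) measurements for one 1-minute interval.
example = {
    ("search", "server1", "PVC"): 120,
    ("search", "server2", "PVC"): 80,
    ("search", "server1", "AFC"): 3,
    ("search", "server2", "AFC"): 1,
    ("search", "server1", "PVRD"): 0.20,
    ("search", "server2", "PVRD"): 0.30,
}
service_level = aggregate_service_kpis(example)
```

A production system would more likely weight the delay by page view count; the unweighted mean is used here only to keep the sketch short.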

After collecting the measurements of KPIs of servers and

instances, the agent on each server delivers the measurements

via datacenter networks to a centralized Hadoop-based database,

which also stores the service KPIs aggregated based on the KPIs

of the instances. The database also provides a subscription tool for

other systems, such as FUNNEL, to periodically receive the

subscribed measurements based on the server, instance, and service.

The data collection interval at the servers is typically 1 minute,

and thus the time granularity of the input KPI measurements

of FUNNEL is 1 minute. If we set a coarser time granularity for the

KPI measurements, the detection delay of FUNNEL would be

larger. Although the accuracy of FUNNEL may be affected

by the time granularity of the measurements [18], its accuracy

is relatively good at a granularity of 1 minute (see Section 4.2),

so we set the time granularity of the KPI measurements to

1 minute. Within one second, the measurements

subscribed by FUNNEL are pushed to FUNNEL via datacenter

networks.
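The effect of granularity on detection delay can be sketched as follows. This is an illustrative model, not FUNNEL's implementation: it assumes a measurement covering a bin becomes available only when that bin closes, so a change cannot be seen before the end of the bin in which it starts. The function names are hypothetical.

```python
def downsample(values, granularity):
    """Average consecutive 1-minute values into bins of `granularity` minutes.

    The last bin may be shorter if len(values) is not a multiple of
    `granularity`.
    """
    bins = []
    for i in range(0, len(values), granularity):
        chunk = values[i:i + granularity]
        bins.append(sum(chunk) / len(chunk))
    return bins


def earliest_report_minute(change_minute, granularity):
    """Minute at which the bin containing `change_minute` closes, i.e. the
    earliest time a measurement reflecting the change is available."""
    return (change_minute // granularity + 1) * granularity


# A KPI that shifts from 1 to 5 at minute 7 (made-up data).
series = [1, 1, 1, 1, 1, 1, 1, 5, 5, 5]
coarse = downsample(series, 5)
delay_1min = earliest_report_minute(7, 1) - 7
delay_5min = earliest_report_minute(7, 5) - 7
```

Under this model, the 5-minute bins also dilute the shift, since the bin containing minute 7 mixes values from before and after the change.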

In large services, there might exist some KPIs of dubious

quality. To the best of our knowledge, there is no previous work on

eliminating low-quality KPIs in Web-based services. In this paper,

we do not focus on eliminating low-quality KPIs either. FUNNEL

detects all KPI changes in the impact set regardless of the quality

of the KPI, and delivers the results to the operations team. The

operations team will then determine whether the performance

changes in the low-quality KPIs are induced by the software

change or not.

As described in [19], even the operations team does not know

exactly what a “good” threshold is for a specific KPI, e.g., due to

seasonal variation. Therefore, it is difficult to obtain the expected

values from the operations team for FUNNEL, and we do not give

a threshold for any KPI in this paper.