HIERARCHICAL MULTI-VLAD FOR IMAGE RETRIEVAL

Yitong Wang⋆†, Ling-Yu Duan⋆†∗, Jie Lin‡, Zhe Wang⋆†, Tiejun Huang⋆†

⋆ Institute of Digital Media, School of EE&CS, Peking University, Beijing, China
† Cooperative Medianet Innovation Center, Shanghai, China
‡ Institute for Infocomm Research, Singapore

e-mail: ⋆†{wangyitong, lingyu, zwang, tjhuang}@pku.edu.cn, ‡lin-j@i2r.a-star.edu.sg

ABSTRACT

Constructing discriminative feature descriptors is crucial for effective image retrieval. A state-of-the-art global descriptor for this purpose is the Vector of Locally Aggregated Descriptors (VLAD). Given a set of local features (say, SIFT) extracted from an image, the VLAD is generated by quantizing the local features with a small visual vocabulary (64 to 512 centroids), aggregating the residual statistics of the quantized features for each centroid, and concatenating the aggregated residual vectors from all centroids. One can increase the search accuracy by increasing the vocabulary size (from hundreds to hundreds of thousands of centroids), which, however, leads to heavy computation cost with flat quantization. In this paper, we propose a hierarchical multi-VLAD to seek a tradeoff between descriptor discriminability and computational complexity. We build a tree-structured hierarchical quantization (TSHQ) to accelerate VLAD computation with a large vocabulary. As quantization error may propagate from the root to the leaf nodes (centroids) with TSHQ, we introduce multi-VLAD, which constructs a VLAD descriptor for each level of the vocabulary tree so as to compensate for the quantization error at that level. Extensive evaluation over benchmark datasets shows that the proposed approach outperforms the state of the art in terms of retrieval accuracy, extraction speed, and memory cost.

Index Terms— Image Retrieval, Hierarchical Quantization,

Multi-VLAD

1. INTRODUCTION

Image retrieval regards the discovery of images contained within a

large database that depict the same objects/scenes as those depicted

by query images. In general, state-of-the-art image search systems

are built upon a visual vocabulary with an inverted indexing struc-

ture, which quantizes local features (e.g., SIFT [1] or SURF [2]) of

query and database images into centroids. Each database image is

then represented as a Bag-of-Words (BoW) histogram [3] and is in-

vert indexed by quantized centroids of local features in the image.

Recently, the Vector of Locally Aggregated Descriptors (VLAD)

[4][5] and Fisher vectors [6] have extended the BoW by aggregat-

ing higher-order statistics of the distribution of local features. The

VLAD is generated by quantizing the set of local features with a

small vocabulary (64 to 512 centroids), aggregating the residual s-

tatistics of features quantized to each centroid and concatenating the

residual vector from each centroid. Compared to the BoW with a

large vocabulary (e.g., 1 million centroids), the VLAD have achieved

∗

Corresponding author

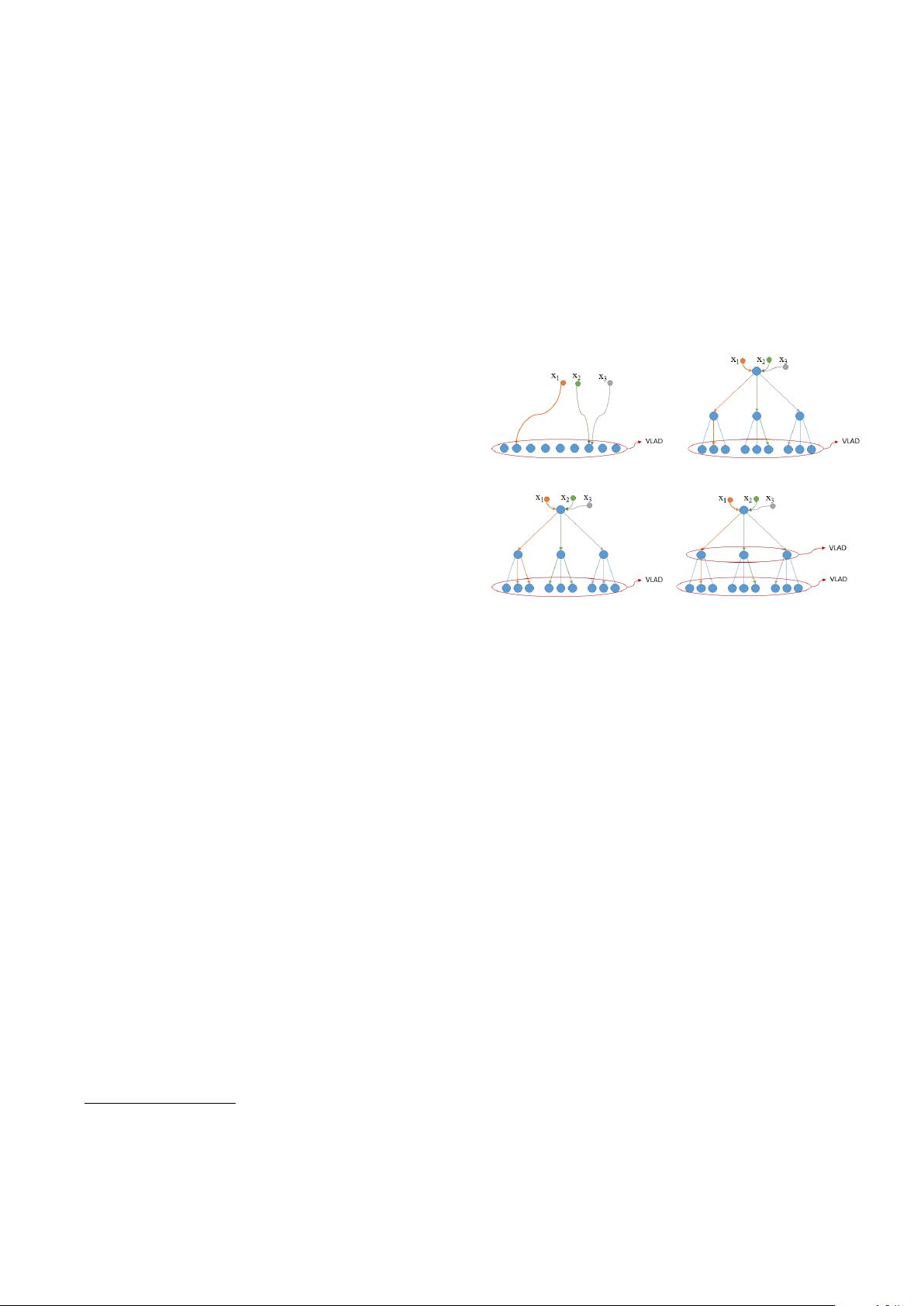

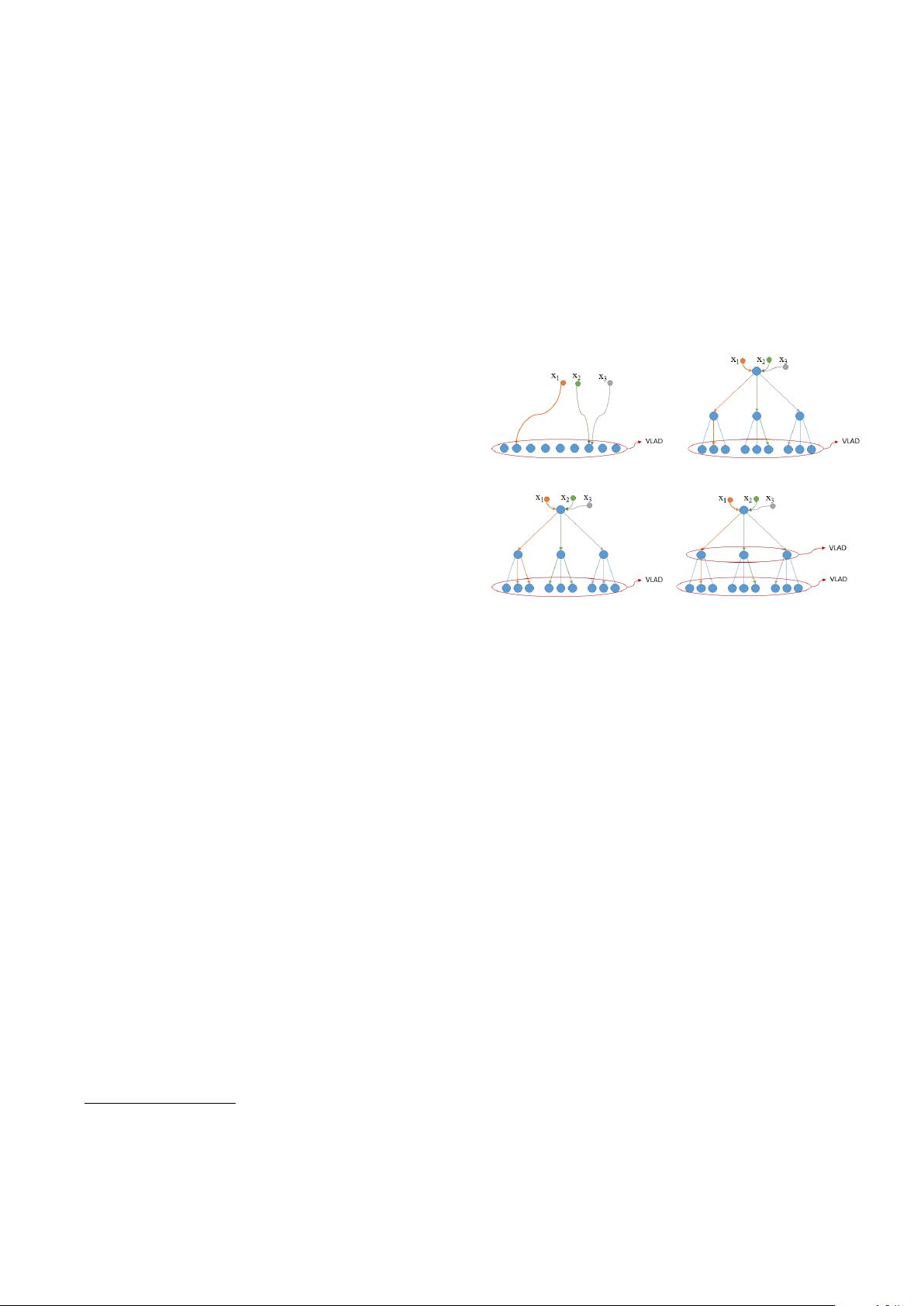

(a) Flat VLAD (b) Hierarchical VLAD

(c) Hierarchical VLAD + MA (d) Hierarchical Multi-VLAD

Fig. 1. Several VLAD schemes. (a) Flat VLAD. (b) Hierarchical

VLAD. (c) Hierarchical VLAD with Multi-assignment. (d) Hierar-

chical Multi-VLAD. x

1

, x

2

, x

3

refer to local features quantized to

centroids.

the state-of-the-art search performance at a much smaller vocabulary

[4][5][7].
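The three VLAD steps described in the introduction (quantize, aggregate residuals, concatenate) can be illustrated with a minimal NumPy sketch. The function name `vlad` and the array layout are illustrative assumptions rather than the authors' implementation, and any post-processing such as normalization is deliberately left out because the text above does not specify it.

```python
import numpy as np

def vlad(features, centroids):
    """Illustrative flat VLAD (hypothetical helper, not the paper's code).

    features:  (n, d) array of local descriptors, e.g. SIFT
    centroids: (k, d) array, the visual vocabulary
    returns:   (k * d,) vector of concatenated residual sums
    """
    # Step 1: quantize each feature to its nearest centroid.
    d2 = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    assign = d2.argmin(axis=1)

    # Step 2: sum the residuals (feature - centroid) per centroid.
    k, d = centroids.shape
    v = np.zeros((k, d))
    for j in range(k):
        members = features[assign == j]
        if len(members):
            v[j] = (members - centroids[j]).sum(axis=0)

    # Step 3: concatenate the per-centroid residual vectors.
    return v.reshape(-1)
```

With a vocabulary of k centroids and d-dimensional features, the descriptor has k·d dimensions, which is why small vocabularies (64 to 512 centroids) already yield long vectors.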

Recent works have proposed to improve the VLAD representation by enhancing the residual statistics in the aggregation stage, after the local features have been quantized. For example, Tolias et al. [8] introduced an aggregation approach that achieves orientation covariance of the residual statistics. Jégou et al. [9] presented democratic aggregation to limit the interaction of unrelated local features when generating the residual vectors. Arandjelović et al. [10] proposed intra-normalization of the residual vectors to suppress bursty visual elements. These improved aggregation strategies have shown promising results. However, the discriminability of the VLAD descriptor is still limited by the coarse quantization that results from a small vocabulary.
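As an illustration of one of the aggregation refinements mentioned above, intra-normalization can be sketched as follows. This is a hedged sketch under the common understanding that each centroid's residual block is L2-normalized independently and the full vector is L2-normalized afterwards; the function name `intra_normalize` and the `eps` guard are assumptions made for this example, not details taken from [10].

```python
import numpy as np

def intra_normalize(v, k, eps=1e-12):
    """Hypothetical helper: per-centroid L2 normalization of a VLAD vector.

    v: (k * d,) VLAD vector made of k residual blocks
    k: number of centroids (blocks)
    """
    blocks = np.asarray(v, dtype=float).reshape(k, -1)

    # Normalize every block on its own so that no single bursty
    # visual element dominates the descriptor.
    norms = np.linalg.norm(blocks, axis=1, keepdims=True)
    blocks = blocks / np.maximum(norms, eps)

    # Assumed final step: L2-normalize the whole vector.
    out = blocks.reshape(-1)
    total = np.linalg.norm(out)
    return out / total if total > 0 else out
```

Empty blocks (all zeros) stay zero, so centroids with no assigned features do not contribute to the similarity.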

An alternative solution is to directly increase the vocabulary size, as a large vocabulary usually provides a fine-grained partition of the feature space and improves the discriminability of the centroids. For instance, Tolias et al. [11] formulated image retrieval within a match kernel framework and used a large vocabulary trained with flat k-means, leading to state-of-the-art search accuracy. Unfortunately, the computation cost of flat quantization grows linearly with the vocabulary size, which usually leads to slower VLAD extraction.
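To make the cost argument concrete, the sketch below counts distance computations for flat quantization and for a generic tree-structured quantizer of the kind the abstract refers to as TSHQ. The tree layout (a list `levels` in which the children of node i are rows i·b to (i+1)·b−1 of the next level) and both function names are assumptions chosen for this example; the paper's actual data structure may differ.

```python
import numpy as np

def flat_quantize(x, centroids):
    """Nearest centroid by exhaustive search; cost is one distance per centroid."""
    d2 = ((centroids - x) ** 2).sum(axis=1)
    return int(d2.argmin()), len(centroids)

def tree_quantize(x, levels, branching):
    """Greedy descent through a vocabulary tree (hypothetical layout).

    levels[l] has shape (branching ** (l + 1), d); the children of node i
    at one level are rows i * branching .. (i + 1) * branching - 1 of the next.
    Returns the leaf index and the number of distances computed.
    """
    node, n_dist = 0, 0
    for level in levels:
        children = level[node * branching:(node + 1) * branching]
        d2 = ((children - x) ** 2).sum(axis=1)
        node = node * branching + int(d2.argmin())
        n_dist += len(children)
    return node, n_dist
```

For a tree with branching factor 10 and depth 4, a feature reaches one of 10,000 leaves after 40 distance computations, whereas flat search over the same 10,000 centroids needs 10,000. The greedy descent is not guaranteed to reach the leaf that flat search would select, which is the quantization error propagation mentioned in the abstract.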

In this paper, we propose a hierarchical multi-VLAD to address the problem of image retrieval using the state-of-the-art VLAD descriptor with a large vocabulary (see Fig. 1). Firstly, we adopt tree-