portion of the game, and upload data to the experience buffer, while optimizers continually sample from whatever data is present in the experience buffer to optimize (Figure 2).

Early in the project, rollout workers collected full episodes before sending them to the optimizers and downloading new parameters. This meant that by the time the data reached the optimizers, it could be several hours old, corresponding to thousands of gradient steps. Gradients computed from data generated by these old parameters were often useless or destructive. In the final system, rollout workers send data to optimizers after only 256 timesteps, but even so, this can be a problem.
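The move from full episodes to short uploads can be sketched as a generator that cuts an episode into fixed-length fragments, each tagged with the parameter version that generated it. This is a minimal sketch under stated assumptions, not the system's actual code: `Fragment`, `rollout_fragments`, and the `get_version` callable are hypothetical, and integers stand in for real per-step data. Only the 256-timestep fragment length comes from the text.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterator, List

FRAGMENT_LEN = 256  # timesteps per upload, as stated in the text


@dataclass
class Fragment:
    """Hypothetical upload unit: a short slice of one episode."""
    param_version: int  # version of the parameters that generated these steps
    steps: List[int] = field(default_factory=list)  # stand-in for per-step data


def rollout_fragments(
    episode_len: int,
    get_version: Callable[[], int],  # hypothetical: current parameter version
    fragment_len: int = FRAGMENT_LEN,
) -> Iterator[Fragment]:
    """Yield fixed-length fragments of an episode instead of the full episode."""
    fragment = Fragment(param_version=get_version())
    for t in range(episode_len):
        fragment.steps.append(t)  # placeholder for (obs, action, reward, ...) at step t
        if len(fragment.steps) == fragment_len:
            yield fragment  # upload now rather than waiting for the episode to end
            fragment = Fragment(param_version=get_version())  # re-read the version
    if fragment.steps:
        yield fragment  # flush the final partial fragment at episode end
```

Because every fragment records its parameter version, the optimizer can later compute how stale each sample is, which is the metric defined in the next paragraph.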

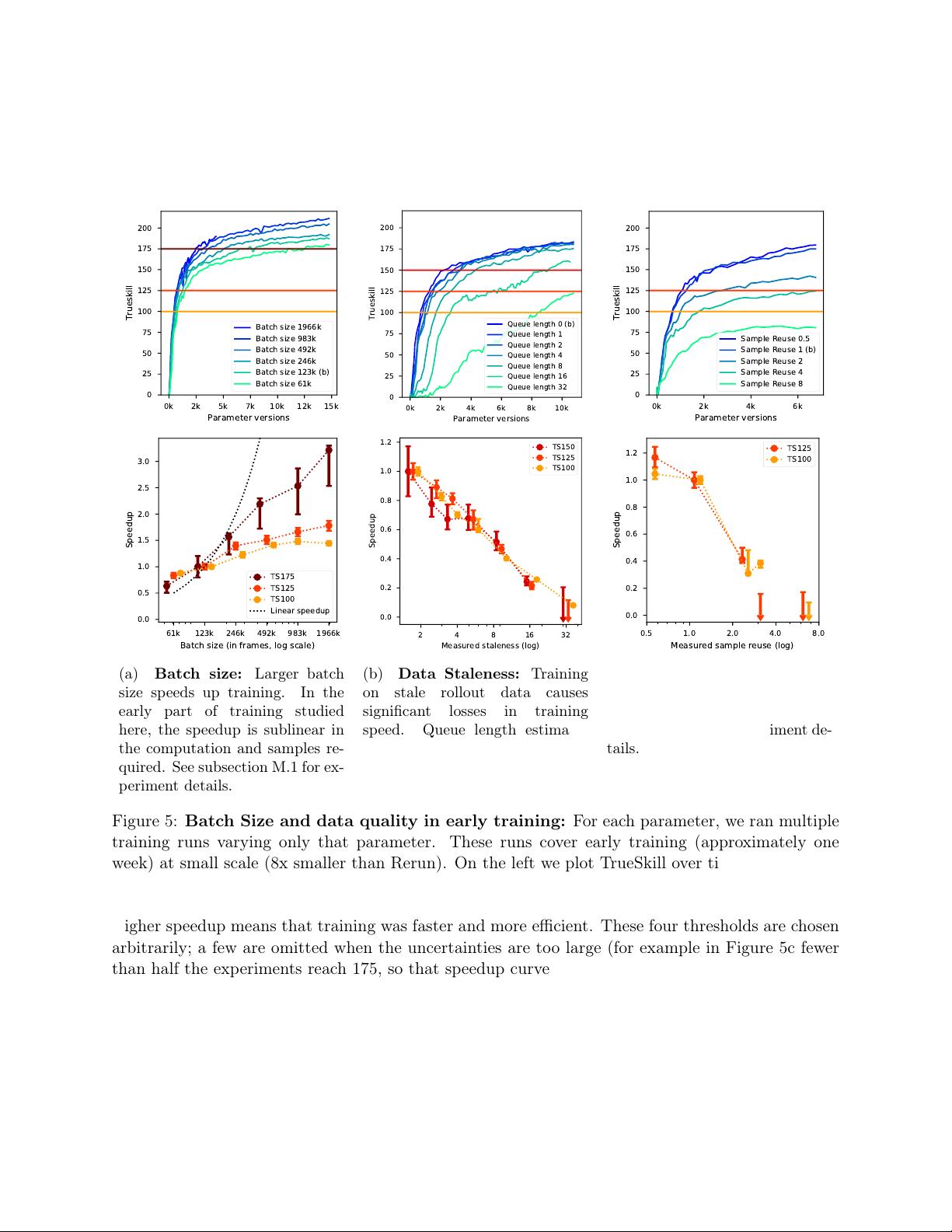

We found it useful to define a metric for this called staleness. If a sample was generated by parameter version N and we are now optimizing version M, then we define the staleness of that data to be M − N. In Figure 5b, we see that increasing staleness by ∼8 versions causes a significant slowdown in learning. Note that this level of staleness corresponds to only a few minutes in a multi-month experiment. Our final system design targeted a staleness between 0 and 1 by sending game data every 30 seconds of gameplay and updating to fresh parameters approximately once a minute, making this loop faster than the time it takes the optimizers to process a single batch (32 PPO gradient steps). Because staleness has such a large impact, future work could investigate whether optimization methods that are more robust to off-policy data would provide a significant improvement in our asynchronous data collection regime.
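Computing staleness only requires each sample to carry the version of the parameters that generated it. The following is a minimal sketch, assuming samples are tagged with integer versions; the function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple


def staleness(sample_version: int, current_version: int) -> int:
    """Staleness of a sample from version N while optimizing version M: M - N."""
    if sample_version > current_version:
        raise ValueError("a sample cannot come from a future parameter version")
    return current_version - sample_version


def batch_staleness(sample_versions: Iterable[int], current_version: int) -> Tuple[float, Counter]:
    """Mean staleness and a histogram of staleness values for one optimizer batch."""
    values = [staleness(v, current_version) for v in sample_versions]
    if not values:
        raise ValueError("empty batch")
    return sum(values) / len(values), Counter(values)
```

For instance, a batch whose samples came from versions 10, 10, 9, and 11 while version 11 is being optimized has staleness values 1, 1, 2, and 0, for a mean of 1.0, at the upper end of the 0 to 1 target described above.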

Because optimizers sample from an experience buffer, the same piece of data can be reused many times. If data is reused too often, the model can overfit to it [18]. To diagnose this, we defined a metric called the sample reuse of the experiment: the instantaneous ratio between the rate at which optimizers consume data and the rate at which rollouts produce it. If optimizers consume samples twice as fast as rollouts produce them, then on average each sample is used twice, and we say that the sample reuse is 2. In Figure 5c, we see that reusing the same data even 2–3 times can slow learning by a factor of two, and reusing it 8 times may prevent the learning of a competent policy altogether. Our final system targets a sample reuse of ∼1 in all our experiments.
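Sample reuse is a ratio of two rates, so it can be estimated from counters collected over a short window. This is a minimal sketch; the `ThroughputWindow` record and both function names are hypothetical bookkeeping, not taken from the system described here.

```python
from dataclasses import dataclass


@dataclass
class ThroughputWindow:
    """Hypothetical counters collected over one measurement window."""
    samples_consumed: int  # samples read by optimizers, counting repeated reads
    samples_produced: int  # new samples uploaded by rollout workers
    seconds: float         # window length


def sample_reuse(w: ThroughputWindow) -> float:
    """Ratio of the optimizer consumption rate to the rollout production rate."""
    if w.seconds <= 0 or w.samples_produced == 0:
        raise ValueError("need a positive window with some produced samples")
    consume_rate = w.samples_consumed / w.seconds
    produce_rate = w.samples_produced / w.seconds
    return consume_rate / produce_rate


def required_production_rate(consume_rate: float, target_reuse: float = 1.0) -> float:
    """Rollout samples per second needed for reuse to equal target_reuse."""
    if target_reuse <= 0:
        raise ValueError("target_reuse must be positive")
    return consume_rate / target_reuse
```

The second helper makes the design constraint explicit. Holding reuse near 1 means rollout throughput has to grow in step with optimizer throughput, instead of letting optimizers make up the difference by re-reading the buffer.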

These experiments on the early part of training indicate that high-quality data matters even more than the amount of compute consumed; small degradations in data quality have severe effects on learning. Full details of the experimental setup can be found in Appendix M.

4.5 Long-term credit assignment

Dota 2 has extremely long time dependencies. Whereas episodes in many reinforcement learning environments last hundreds of steps [4, 29–31], games of Dota 2 can last tens of thousands of timesteps. Agents must execute plans that play out over many minutes, corresponding to thousands of timesteps. This makes our experiment a unique platform for testing the ability of these algorithms to perform long-term credit assignment.
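To see why plans spanning thousands of timesteps are demanding, consider how strongly a discounted return weights a reward that arrives some minutes later: a reward k steps ahead is scaled by γ^k. The following minimal sketch uses the 0.133-second step length given later in this section. The γ values in the loop are illustrative assumptions, not the values used in training, and the function names are hypothetical.

```python
STEP_SECONDS = 0.133  # real game time per agent step, as stated in the text


def steps_for(minutes: float, step_seconds: float = STEP_SECONDS) -> int:
    """Number of agent steps spanning the given amount of game time."""
    return round(minutes * 60.0 / step_seconds)


def discount_weight(gamma: float, minutes_ahead: float) -> float:
    """Weight gamma**k on a reward arriving minutes_ahead of game time later."""
    return gamma ** steps_for(minutes_ahead)


# Illustrative discount factors (assumed, not taken from the paper).
for gamma in (0.99, 0.9997):
    print(gamma, steps_for(1.0), discount_weight(gamma, 1.0))
```

One minute of game time is about 451 steps. With γ = 0.99, a reward one minute away keeps roughly 1% of its weight, while γ = 0.9997 keeps roughly 87%. A short-horizon discount therefore effectively hides the consequences of multi-minute plans from the learner.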

In Figure 6, we study the time horizon over which our agent discounts rewards, defined as

$$H = \frac{T}{1 - \gamma} \tag{3}$$

Here γ is the discount factor [17] and T is the real game time corresponding to each step (0.133 seconds). H measures the game time over which future rewards are integrated, and we use it as a proxy for the span of long-term credit assignment that the agent can perform.
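Equation (3) and its inverse are short enough to write directly as code, which makes it easy to convert between a discount factor and a horizon in game time. This is a minimal sketch with hypothetical function names, using the 0.133-second step from the text.

```python
STEP_SECONDS = 0.133  # T: real game time per step, from the text


def horizon_seconds(gamma: float, step_seconds: float = STEP_SECONDS) -> float:
    """Equation (3): H = T / (1 - gamma), in seconds of game time."""
    if not 0.0 <= gamma < 1.0:
        raise ValueError("gamma must be in [0, 1)")
    return step_seconds / (1.0 - gamma)


def gamma_for_horizon(h_seconds: float, step_seconds: float = STEP_SECONDS) -> float:
    """Inverse of Equation (3): gamma = 1 - T / H."""
    if h_seconds <= step_seconds:
        raise ValueError("horizon must exceed one step")
    return 1.0 - step_seconds / h_seconds
```

For example, under this step length the 6–12 minute horizons mentioned below correspond to γ ≈ 0.99963 and γ ≈ 0.99982. Because 1 − γ appears in the denominator, doubling the horizon requires halving the gap between γ and 1.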

In Figure 6, we see that resuming the training of a skilled agent with a longer horizon makes it perform better, up to the longest horizons we explored (6–12 minutes). This implies that our optimization was capable of accurately assigning credit over long time scales, and capable of learning policies and
