Understanding Neural Networks: An Implementation from Scratch in Python

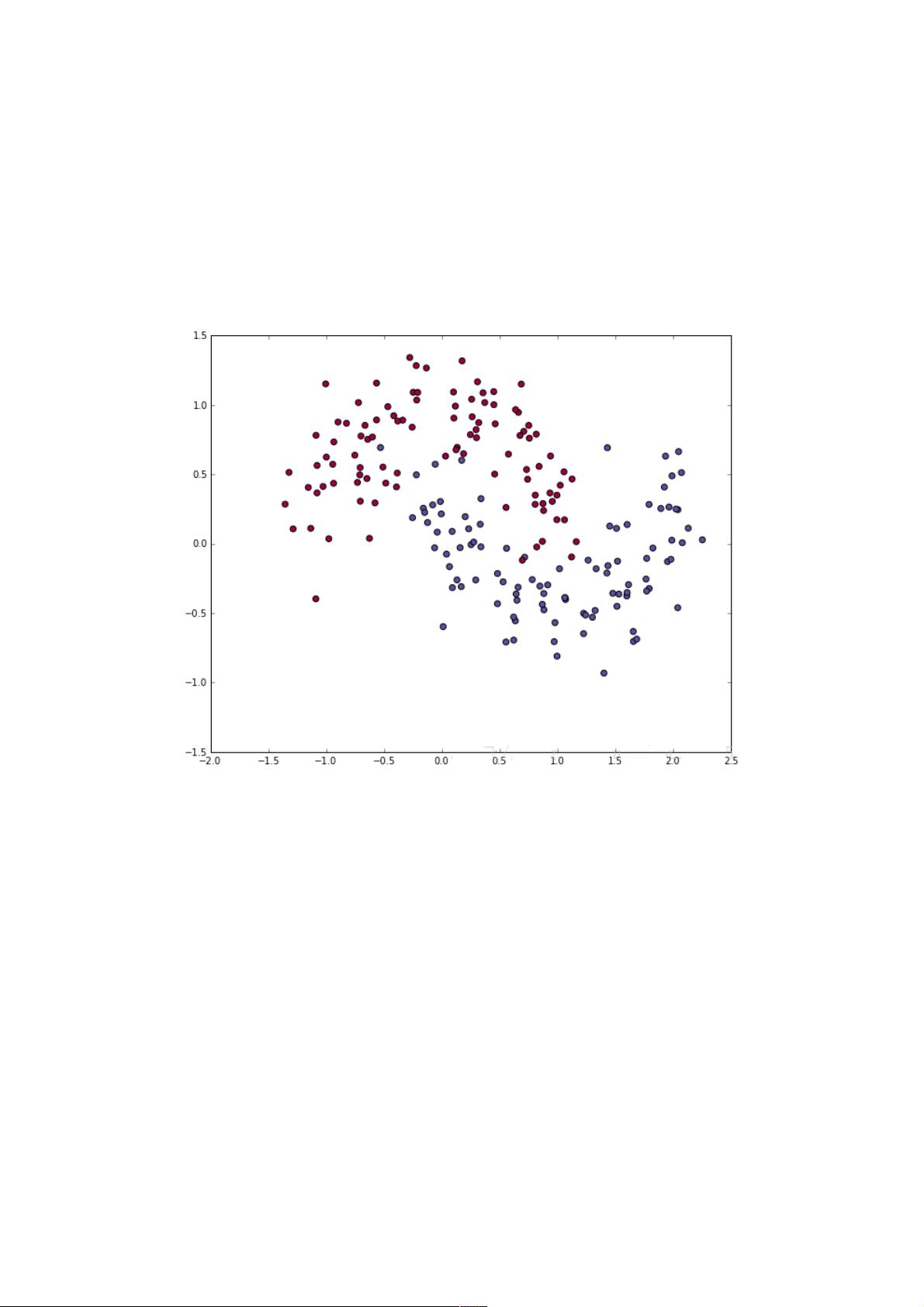

"本文将介绍如何使用Python从头构建一个简单的神经网络,通过实践加深对神经网络工作原理的理解。文章中使用scikit-learn的make_moons函数生成了一个非线性可分的二分类数据集,以此来演示为什么神经网络在处理此类问题时具有优势。在比较了线性分类器(如Logistic回归)的局限性后,文章构建了一个包含输入层、隐藏层和输出层的3层神经网络,并解释了网络结构和预测过程。"

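The author stresses the value of implementing a neural network by hand, even if you will eventually rely on an off-the-shelf library such as PyBrain. Doing so builds a deeper understanding of how neural networks operate and lays the groundwork for designing effective models. The article starts with data generation: scikit-learn's `make_moons` function creates a two-class dataset whose points form two interleaved crescents, so no straight line can separate the classes.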
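As a minimal sketch of the data-generation step, the snippet below calls `make_moons` and inspects the result. The sample count, noise level, and random seed are illustrative assumptions, not values confirmed by this summary.

```python
from sklearn.datasets import make_moons

# Assumed settings: 200 points, moderate noise, fixed seed for repeatability.
X, y = make_moons(n_samples=200, noise=0.20, random_state=0)

# X holds the (x, y) coordinates of each point; y holds the class label (0 or 1).
print(X.shape)
print(y.shape)
print(sorted(set(y.tolist())))
```

Each row of `X` is one point in the plane, and the two label values correspond to the two crescents.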

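Next, the article shows where Logistic regression falls short on this data. Its decision boundary is a straight line, so it cannot fully separate two crescent-shaped classes. This highlights a strength of neural networks: they can learn nonlinear features on their own, with no manual feature engineering.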
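The linear baseline can be sketched as follows. This uses scikit-learn's `LogisticRegression` with default settings, which is an assumption; the summary does not say which logistic regression implementation or parameters the article uses, and the dataset settings are again illustrative.

```python
from sklearn.datasets import make_moons
from sklearn.linear_model import LogisticRegression

# Same assumed dataset settings as before (not confirmed by the summary).
X, y = make_moons(n_samples=200, noise=0.20, random_state=0)

# Fit a linear classifier; its decision boundary is a straight line in the plane.
clf = LogisticRegression()
clf.fit(X, y)

# Training accuracy stays below 100% because the crescents interleave.
acc = clf.score(X, y)
print(f"training accuracy: {acc:.2f}")
```

The accuracy is reasonable but imperfect, since the points near the tips of each crescent fall on the wrong side of any straight line.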

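The article then turns to building the network and describes a simple 3-layer architecture: an input layer that receives the x and y coordinates, a hidden layer that learns features, and an output layer that produces the prediction, with one output node per class. Because there are only two classes here, the output layer has two nodes, each giving the probability that a point belongs to the corresponding class. This lets the network fit the crescent shape of the data and classify it more accurately than a linear model.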
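The prediction step for such a network might look like the sketch below. The hidden-layer size (3), the tanh activation, the softmax output, and all variable names are assumptions made for illustration; the summary only fixes the 2-input, 2-output shape.

```python
import numpy as np

def softmax(z):
    # Subtract the row-wise max for numerical stability before exponentiating.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def forward(X, W1, b1, W2, b2):
    # Hidden layer: affine transform followed by tanh (assumed activation).
    a1 = np.tanh(X @ W1 + b1)
    # Output layer: one probability per class for each input row.
    return softmax(a1 @ W2 + b2)

rng = np.random.default_rng(0)
n_in, n_hidden, n_out = 2, 3, 2  # hidden size is a hypothetical choice

W1 = rng.standard_normal((n_in, n_hidden)) / np.sqrt(n_in)
b1 = np.zeros((1, n_hidden))
W2 = rng.standard_normal((n_hidden, n_out)) / np.sqrt(n_hidden)
b2 = np.zeros((1, n_out))

# Two example points, each an (x, y) coordinate pair.
X = np.array([[0.5, -0.2], [1.5, 0.3]])
probs = forward(X, W1, b1, W2, b2)
pred = probs.argmax(axis=1)  # index of the more probable class per point
print(probs)
print(pred)
```

With untrained random weights the probabilities are arbitrary; the point is only that each row of `probs` sums to 1 and the predicted class is the node with the larger value.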

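In practice, training a neural network usually involves weight initialization, forward propagation, loss computation, backpropagation, and weight updates. The article may not cover these steps in detail, but they form the basic workflow for building a neural network. Weight initialization gives the network suitable starting parameters, forward propagation computes predictions from the current weights, the loss function measures the gap between predictions and true labels, and backpropagation computes the gradients used to update the weights so that the loss decreases.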
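The five steps above can be sketched in one short NumPy loop. Everything specific here is an assumption for illustration: the hand-built crescent data (a stand-in for `make_moons`), the hidden size, tanh activation, cross-entropy loss, learning rate, and epoch count are not taken from the article.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: two noisy half-circles built by hand (a stand-in for make_moons).
n = 100
t = rng.uniform(0.0, np.pi, n)
upper = np.c_[np.cos(t), np.sin(t)]
lower = np.c_[1.0 - np.cos(t), 0.5 - np.sin(t)]
X = np.vstack([upper, lower]) + rng.normal(scale=0.1, size=(2 * n, 2))
y = np.r_[np.zeros(n, dtype=int), np.ones(n, dtype=int)]
N = X.shape[0]

# 1. Weight initialization: small random weights, zero biases.
n_in, n_hidden, n_out = 2, 3, 2
W1 = rng.standard_normal((n_in, n_hidden)) / np.sqrt(n_in)
b1 = np.zeros((1, n_hidden))
W2 = rng.standard_normal((n_hidden, n_out)) / np.sqrt(n_hidden)
b2 = np.zeros((1, n_out))

def forward(X):
    # 2. Forward propagation: tanh hidden layer, softmax output.
    a1 = np.tanh(X @ W1 + b1)
    z2 = a1 @ W2 + b2
    z2 = z2 - z2.max(axis=1, keepdims=True)
    e = np.exp(z2)
    return a1, e / e.sum(axis=1, keepdims=True)

lr, epochs = 0.5, 2000  # hypothetical hyperparameters
losses = []
for epoch in range(epochs):
    a1, probs = forward(X)

    # 3. Loss: mean cross-entropy of the true class probabilities.
    losses.append(-np.log(probs[np.arange(N), y]).mean())

    # 4. Backpropagation: gradients of the mean loss for each parameter.
    delta2 = probs.copy()
    delta2[np.arange(N), y] -= 1.0
    delta2 /= N
    dW2 = a1.T @ delta2
    db2 = delta2.sum(axis=0, keepdims=True)
    delta1 = (delta2 @ W2.T) * (1.0 - a1 ** 2)  # derivative of tanh
    dW1 = X.T @ delta1
    db1 = delta1.sum(axis=0, keepdims=True)

    # 5. Weight update: plain full-batch gradient descent.
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

_, probs = forward(X)
accuracy = (probs.argmax(axis=1) == y).mean()
print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
print(f"training accuracy {accuracy:.2f}")
```

Full-batch gradient descent keeps the sketch short; a real implementation might add regularization or mini-batches, neither of which is shown here.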

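Through a worked example, the article explains how neural networks handle nonlinear problems and where they outperform linear models. It is a good starting point for beginners: it helps them understand the basic structure and operation of a neural network and prepares them for further study and application.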


weixin_38611459
